ResNet

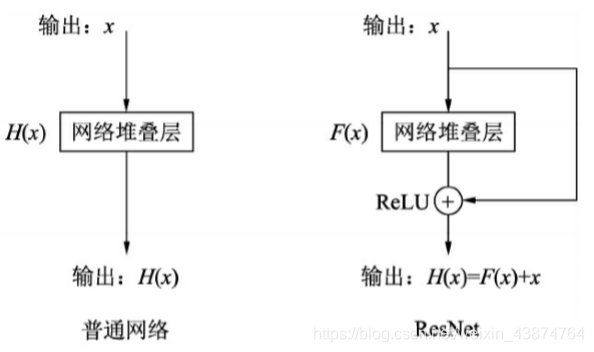

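ResNet (Residual Network) alleviates the difficulty of training very deep networks. Its key idea is to let the convolutional layers learn a residual mapping instead of learning the desired mapping directly.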

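The underlying assumption is that the residual mapping F(x) = H(x) - x is easier to optimize than the original mapping H(x), so a residual block outputs F(x) + x. As for the theoretical derivation… I couldn't follow it.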
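To make the assumption concrete, here is a minimal sketch. The `Residual` wrapper and the two-conv branch are illustrative choices, not ResNet's actual code. The block returns F(x) + x, so if the last layer of the branch outputs zero, the whole block is exactly the identity mapping. Reaching "do nothing" only requires driving some weights toward zero, which is one common intuition for why residual mappings are easier to optimize.

```python
import torch
from torch import nn

class Residual(nn.Module):
    """Wraps a branch F so that the block outputs F(x) + x (illustrative helper)."""
    def __init__(self, branch: nn.Module):
        super().__init__()
        self.branch = branch

    def forward(self, x):
        # The branch only has to learn the residual F(x) = H(x) - x
        return self.branch(x) + x

# Hypothetical residual branch: two shape-preserving 3x3 convs
branch = nn.Sequential(
    nn.Conv2d(8, 8, 3, padding=1),
    nn.ReLU(),
    nn.Conv2d(8, 8, 3, padding=1),
)
# Zero the last conv so that F(x) = 0: the block then reduces to the identity
nn.init.zeros_(branch[2].weight)
nn.init.zeros_(branch[2].bias)

block = Residual(branch)
x = torch.randn(2, 8, 16, 16)
y = block(x)
print(torch.allclose(y, x))  # True
```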

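In ResNet-50 and deeper ResNets, the residual module is called a Bottleneck: a 1x1 convolution, a 3x3 convolution and another 1x1 convolution, each followed by BN. Taking one convolution stage of ResNet-50 as an example, let's build a Bottleneck.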

```python
import torch
from torch import nn

class resnet_bottleneck(nn.Module):
    def __init__(self, in_dim, out_dim, stride=1):
        super(resnet_bottleneck, self).__init__()
        # 1x1, 3x3, 1x1 convolutions, each followed by a BN layer
        self.bottleneck = nn.Sequential(
            nn.Conv2d(in_dim, in_dim, 1, bias=False),
            nn.BatchNorm2d(in_dim),
            nn.ReLU(inplace=True),
            nn.Conv2d(in_dim, in_dim, 3, stride, 1, bias=False),
            nn.BatchNorm2d(in_dim),
            nn.ReLU(inplace=True),
            nn.Conv2d(in_dim, out_dim, 1, bias=False),
            nn.BatchNorm2d(out_dim))
        self.relu = nn.ReLU(inplace=True)
        # To add x to the residual branch, x must have the same shape:
        # the 1x1 conv matches the channel count, the same stride matches the spatial size
        self.Downsample = nn.Sequential(
            nn.Conv2d(in_dim, out_dim, 1, stride, bias=False),
            nn.BatchNorm2d(out_dim))

    def forward(self, x):
        identity = self.Downsample(x)
        out = self.bottleneck(x)
        # Add the (projected) input to the output of the residual branch
        out += identity
        out = self.relu(out)
        return out

# Instantiate a Bottleneck: 64 input channels -> 256 output channels
device = 'cuda' if torch.cuda.is_available() else 'cpu'
bottleneck_1_1 = resnet_bottleneck(64, 256).to(device)
print(bottleneck_1_1)
inputs = torch.randn(1, 64, 56, 56).to(device)
print(inputs.shape)
output = bottleneck_1_1(inputs)
print(output.shape)
```

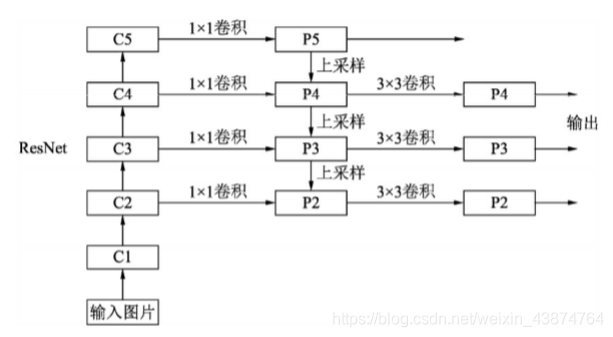
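`out += identity` only works if the shortcut and the residual branch produce tensors of the same shape. The sketch below writes the output-size formula from the PyTorch `nn.Conv2d` documentation as a small helper. The name `conv_out_size` is hypothetical. It shows that when the 3x3 convolution uses stride 2, the 1x1 shortcut convolution needs the same stride to reach the same spatial size.

```python
def conv_out_size(size: int, kernel: int, stride: int = 1, padding: int = 0, dilation: int = 1) -> int:
    """Output height/width of a Conv2d, per the formula in the PyTorch Conv2d docs."""
    return (size + 2 * padding - dilation * (kernel - 1) - 1) // stride + 1

# 3x3 conv, stride 1, padding 1: spatial size is preserved (56 -> 56)
print(conv_out_size(56, kernel=3, stride=1, padding=1))  # 56
# 3x3 conv, stride 2, padding 1: spatial size is halved (56 -> 28)
print(conv_out_size(56, kernel=3, stride=2, padding=1))  # 28
# A 1x1 shortcut conv with the same stride matches it (56 -> 28)
print(conv_out_size(56, kernel=1, stride=2, padding=0))  # 28
```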

FPN

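Traditional object detectors run their subsequent stages only on the last feature map of a deep convolutional network. That map has been downsampled by a large factor (32x for the last ResNet stage), so small objects are detected poorly. An image pyramid instead resizes the input image to several scales and extracts features of different scales from each resized image. This is computationally expensive, but it handles multi-scale detection noticeably better.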
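Building the pyramid itself is just repeated resizing. The cost comes from running the whole network once per level. Below is a minimal sketch of the resizing step with `torch.nn.functional.interpolate`. The helper name `image_pyramid` and the scale list are arbitrary assumptions.

```python
import torch
import torch.nn.functional as F

def image_pyramid(img: torch.Tensor, scales=(1.0, 0.5, 0.25)):
    """Resize an NCHW image batch to several scales (a plain image pyramid)."""
    return [
        img if s == 1.0 else F.interpolate(img, scale_factor=s, mode='bilinear', align_corners=False)
        for s in scales
    ]

img = torch.randn(1, 3, 224, 224)
for level in image_pyramid(img):
    # Each level would be fed through the full detector separately
    print(tuple(level.shape))
# (1, 3, 224, 224)
# (1, 3, 112, 112)
# (1, 3, 56, 56)
```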

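FPN consists of four parts:

- A bottom-up pathway (the ordinary convolutional network on the left, usually a ResNet), whose feature maps shrink stage by stage
- A top-down pathway (upsampling)
- Lateral connections
- Convolutional fusion: after each upsampled map is added to its lateral feature, a 3x3 convolution produces the final P2, P3 and P4, reducing the aliasing effect introduced by upsampling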
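One top-down merge step from the list above can be sketched in isolation. The tensor shapes are assumptions matching a 224x224 input and a ResNet-50 backbone (C4: 1024 channels at 14x14, P5: 256 channels at 7x7). This sketch uses nearest-neighbor upsampling as in the FPN paper. Bilinear interpolation, as used in the full implementation below, also works.

```python
import torch
from torch import nn
import torch.nn.functional as F

# Assumed shapes for a 224x224 input with a ResNet-50 backbone
c4 = torch.randn(1, 1024, 14, 14)
p5 = torch.randn(1, 256, 7, 7)

lateral = nn.Conv2d(1024, 256, kernel_size=1)           # lateral connection: 1x1 conv to 256 channels
smooth = nn.Conv2d(256, 256, kernel_size=3, padding=1)  # 3x3 conv applied after the merge

up = F.interpolate(p5, size=c4.shape[-2:], mode='nearest')  # top-down: upsample P5 to C4's size
p4 = smooth(up + lateral(c4))                                # element-wise add, then 3x3 fusion
print(tuple(p4.shape))  # (1, 256, 14, 14)
```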

PyTorch FPN

```python
import torch
from torch import nn
import torch.nn.functional as F

# Basic ResNet Bottleneck class
class Bottleneck(nn.Module):
    expansion = 4

    def __init__(self, in_planes, planes, stride=1, downsample=None):
        super(Bottleneck, self).__init__()
        self.bottleneck = nn.Sequential(
            nn.Conv2d(in_planes, planes, 1, bias=False),
            nn.BatchNorm2d(planes),
            nn.ReLU(inplace=True),
            nn.Conv2d(planes, planes, 3, stride, 1, bias=False),
            nn.BatchNorm2d(planes),
            nn.ReLU(inplace=True),
            nn.Conv2d(planes, self.expansion * planes, 1, bias=False),
            nn.BatchNorm2d(self.expansion * planes))
        self.relu = nn.ReLU(inplace=True)
        self.downsample = downsample

    def forward(self, x):
        identity = x
        out = self.bottleneck(x)
        if self.downsample is not None:
            identity = self.downsample(x)
        out += identity
        out = self.relu(out)
        return out

class FPN(nn.Module):
    # layers: a list with the number of Bottlenecks in each ResNet stage
    def __init__(self, layers):
        super(FPN, self).__init__()
        self.inplanes = 64
        # C1: stem that processes the input (bias is redundant before BN)
        self.conv1 = nn.Conv2d(3, 64, 7, 2, 3, bias=False)
        self.bn1 = nn.BatchNorm2d(64)
        self.relu = nn.ReLU(inplace=True)
        self.maxpool = nn.MaxPool2d(3, 2, 1)
        # Bottom-up pathway: C2-C5
        self.layer1 = self._make_layer(64, layers[0])
        self.layer2 = self._make_layer(128, layers[1], 2)
        self.layer3 = self._make_layer(256, layers[2], 2)
        self.layer4 = self._make_layer(512, layers[3], 2)
        # 1x1 conv on C5 to get P5
        self.toplayer = nn.Conv2d(2048, 256, 1, 1)
        # 3x3 fusion convs
        self.smooth1 = nn.Conv2d(256, 256, 3, 1, 1)
        self.smooth2 = nn.Conv2d(256, 256, 3, 1, 1)
        self.smooth3 = nn.Conv2d(256, 256, 3, 1, 1)
        # Lateral connections: 1x1 convs down to 256 channels
        self.latlayer1 = nn.Conv2d(1024, 256, 1, 1, 0)
        self.latlayer2 = nn.Conv2d(512, 256, 1, 1, 0)
        self.latlayer3 = nn.Conv2d(256, 256, 1, 1, 0)

    def _make_layer(self, planes, blocks, stride=1):
        downsample = None
        # The first block needs a projection shortcut when the stride or channel count changes
        if stride != 1 or self.inplanes != Bottleneck.expansion * planes:
            downsample = nn.Sequential(
                nn.Conv2d(self.inplanes, Bottleneck.expansion * planes, 1, stride, bias=False),
                nn.BatchNorm2d(Bottleneck.expansion * planes))
        layers = [Bottleneck(self.inplanes, planes, stride, downsample)]
        self.inplanes = planes * Bottleneck.expansion
        for _ in range(1, blocks):
            layers.append(Bottleneck(self.inplanes, planes))
        return nn.Sequential(*layers)

    def _upsample_add(self, x, y):
        # Upsample x to y's spatial size, then add element-wise
        _, _, H, W = y.shape
        return F.interpolate(x, size=(H, W), mode='bilinear', align_corners=True) + y

    def forward(self, x):
        # Bottom-up
        c1 = self.maxpool(self.relu(self.bn1(self.conv1(x))))
        c2 = self.layer1(c1)
        c3 = self.layer2(c2)
        c4 = self.layer3(c3)
        c5 = self.layer4(c4)
        # Top-down with lateral connections
        p5 = self.toplayer(c5)
        p4 = self._upsample_add(p5, self.latlayer1(c4))
        p3 = self._upsample_add(p4, self.latlayer2(c3))
        p2 = self._upsample_add(p3, self.latlayer3(c2))
        # 3x3 convolutional fusion
        p4 = self.smooth1(p4)
        p3 = self.smooth2(p3)
        p2 = self.smooth3(p2)
        return p2, p3, p4, p5

# Test with ResNet-50's stage configuration
device = 'cuda' if torch.cuda.is_available() else 'cpu'
net_fpn = FPN([3, 4, 6, 3]).to(device)
print(net_fpn)
inputs = torch.randn(1, 3, 224, 224).to(device)
out = net_fpn(inputs)
for p in out:
    print(p.shape)
```

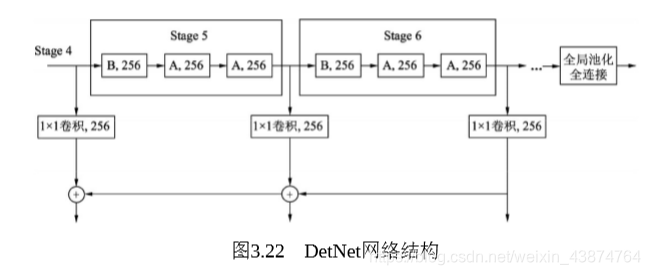

DetNet (designed for detection)

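The networks above were designed for image classification, which focuses on extracting features from the whole image. Object detection must also localize objects, so the feature map resolution cannot be too small. In addition, these networks struggle to predict object edges precisely, which makes bounding-box regression harder.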

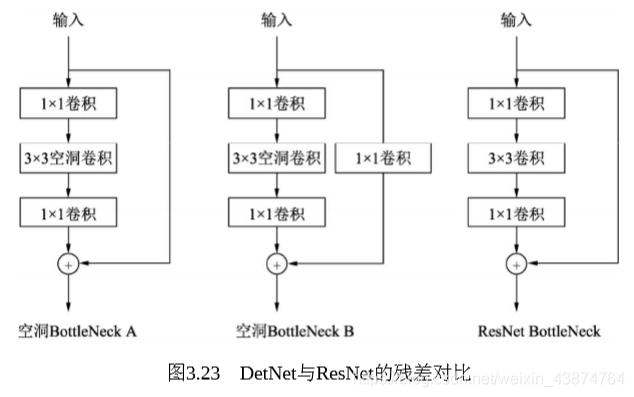

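DetNet uses dilated convolutions to avoid repeated upsampling. It still builds on ResNet: the first 4 stages are the same as in ResNet-50, while stage 5 and stage 6 replace the stride-2 3x3 convolution with a 3x3 convolution of dilation 2. These stages therefore keep the spatial resolution of stage 4.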
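The resolution difference is easy to check. The sketch below compares a stride-2 3x3 convolution with a stride-1 3x3 convolution of dilation 2 on an assumed 256-channel, 14x14 feature map. With `padding=2`, the dilated conv keeps the 14x14 size while each kernel spans a 5x5 window. Both convolutions have the same number of weights.

```python
import torch
from torch import nn

x = torch.randn(1, 256, 14, 14)  # assumed stage-4-sized feature map

# Stride-2 3x3 conv: resolution halves (14 -> 7)
strided = nn.Conv2d(256, 256, kernel_size=3, stride=2, padding=1, bias=False)
# Stride-1 3x3 conv with dilation 2: resolution is kept (14 -> 14)
dilated = nn.Conv2d(256, 256, kernel_size=3, stride=1, padding=2, dilation=2, bias=False)

print(tuple(strided(x).shape))  # (1, 256, 7, 7)
print(tuple(dilated(x).shape))  # (1, 256, 14, 14)

# A k x k kernel with dilation d spans k + (k - 1) * (d - 1) pixels per side
k, d = 3, 2
print(k + (k - 1) * (d - 1))  # 5
```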

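DetNet's dilated bottleneck comes in two types, A and B. Type A uses an identity shortcut. Type B adds a 1x1 convolution on the shortcut so that its channel count matches the residual branch.

PyTorch DetBottleneck A/B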

```python
import torch
from torch import nn

class DetBottleneck(nn.Module):
    # extra=False gives type A (identity shortcut), extra=True gives type B (1x1 conv shortcut)
    # stride is accepted but unused: the dilated 3x3 conv always runs with stride 1
    def __init__(self, inplanes, planes, stride, extra=False):
        super(DetBottleneck, self).__init__()
        self.bottleneck = nn.Sequential(
            nn.Conv2d(inplanes, planes, 1, bias=False),
            nn.BatchNorm2d(planes),
            nn.ReLU(inplace=True),
            # 3x3 dilated conv: dilation=2 with padding=2 keeps the spatial size
            nn.Conv2d(planes, planes, kernel_size=3, stride=1, padding=2, dilation=2, bias=False),
            nn.BatchNorm2d(planes),
            nn.ReLU(inplace=True),
            nn.Conv2d(planes, planes, 1, bias=False),
            nn.BatchNorm2d(planes))
        self.relu = nn.ReLU(inplace=True)
        self.extra = extra
        # Type B: 1x1 conv on the shortcut
        if self.extra:
            self.extra_conv = nn.Sequential(
                nn.Conv2d(inplanes, planes, 1, bias=False),
                nn.BatchNorm2d(planes))

    def forward(self, x):
        if self.extra:
            identity = self.extra_conv(x)
        else:
            identity = x
        out = self.bottleneck(x)
        out += identity
        # ReLU is applied to the sum of the residual branch and the shortcut
        out = self.relu(out)
        return out
```