Two files are provided:

information.txt (columns: hobby, major, id):

爱好 专业 id

游戏 大数据 1

Null Java 3

学习 Null 4

逛街 全栈 2

student.txt (columns: id, name, gender):

id 姓名 性别

1 张三 女

2 李四 男

3 王五 女

4 赵六 男

Requirements

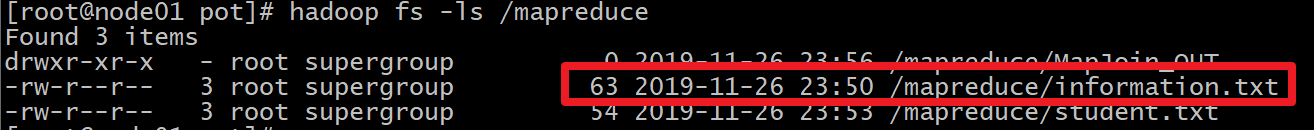

Upload information.txt to HDFS.

Keep student.txt on the local file system.

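Read the data on HDFS through the distributed cache (DistributedCache), and wrap the HDFS data and the local data together in a single JavaBean object.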

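The object must be assembled on the map side. The reduce side counts how many of the object's attributes are Null and emits that count as the output value; the output key is the bean's toString().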

Use two partitions, partitioned by gender.
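The join described in these requirements can be sketched without any Hadoop classes. The snippet below is only an illustration under assumptions: the class name `MapSideJoinSketch` and its methods are hypothetical, and the fields are assumed to be tab-separated, as the mapper code in this post expects. It indexes the small table (information.txt) by id and then looks up each student line, which is the idea behind loading the cached file in `setup()` and joining in `map()`.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

/** Plain-Java sketch of the map-side join; no Hadoop classes involved. */
final class MapSideJoinSketch {

    /** Index the small table by id, which is the third tab-separated column. */
    static Map<String, String> indexById(List<String> informationLines) {
        Map<String, String> byId = new HashMap<>();
        for (String line : informationLines) {
            byId.put(line.split("\t")[2], line);
        }
        return byId;
    }

    /** Join each student line (id, name, gender) with its hobby and major. */
    static List<String> join(List<String> studentLines, Map<String, String> byId) {
        List<String> joined = new ArrayList<>();
        for (String line : studentLines) {
            String[] student = line.split("\t");
            String info = byId.get(student[0]);
            if (info == null) {
                continue; // no matching record in the small table
            }
            String[] fields = info.split("\t");
            joined.add(String.join("\t", student[0], student[1], student[2], fields[0], fields[1]));
        }
        return joined;
    }

    public static void main(String[] args) {
        List<String> information = Arrays.asList(
                "游戏\t大数据\t1", "Null\tJava\t3", "学习\tNull\t4", "逛街\t全栈\t2");
        List<String> students = Arrays.asList(
                "1\t张三\t女", "2\t李四\t男", "3\t王五\t女", "4\t赵六\t男");
        // Print one joined record per student
        join(students, indexById(information)).forEach(System.out::println);
    }
}
```

Keeping the small table in a hash map is what allows the join to finish on the map side: every student record is completed with a single lookup, so no shuffle is needed for the join itself.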

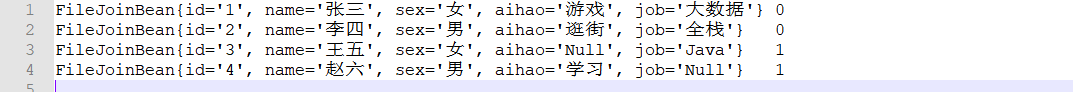

Result before partitioning (output written locally):

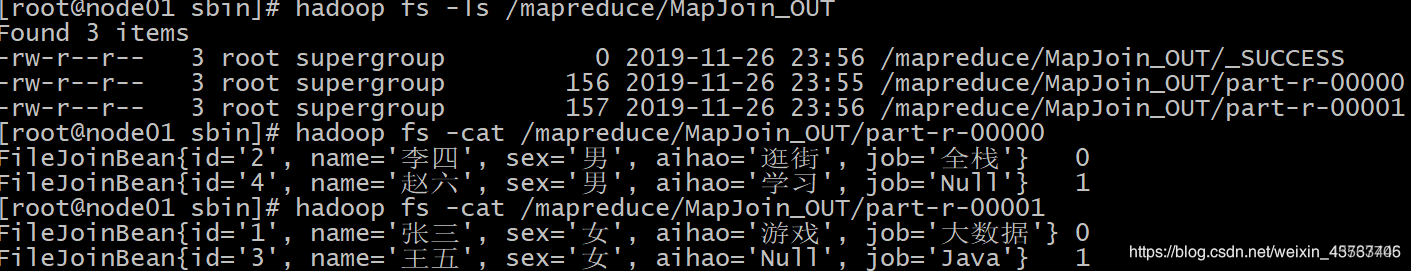

Result after partitioning (the partitioned job must run on the cluster)

Code

POM.xml

<?xml version="1.0" encoding="UTF-8"?>

<project xmlns="http://maven.apache.org/POM/4.0.0"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">

<modelVersion>4.0.0</modelVersion>

<groupId>cn.itcast</groupId>

<artifactId>mapreduce</artifactId>

<version>1.0-SNAPSHOT</version>

<repositories>

<repository>

<id>cloudera</id>

<url>https://repository.cloudera.com/artifactory/cloudera-repos/</url>

</repository>

</repositories>

<dependencies>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-client</artifactId>

<version>2.6.0-mr1-cdh5.14.0</version>

</dependency>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-common</artifactId>

<version>2.6.0-cdh5.14.0</version>

</dependency>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-hdfs</artifactId>

<version>2.6.0-cdh5.14.0</version>

</dependency>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-mapreduce-client-core</artifactId>

<version>2.6.0-cdh5.14.0</version>

</dependency>

<dependency>

<groupId>junit</groupId>

<artifactId>junit</artifactId>

<version>4.11</version>

<scope>test</scope>

</dependency>

<dependency>

<groupId>org.testng</groupId>

<artifactId>testng</artifactId>

<version>RELEASE</version>

</dependency>

</dependencies>

<build>

<plugins>

<plugin>

<groupId>org.apache.maven.plugins</groupId>

<artifactId>maven-compiler-plugin</artifactId>

<version>3.0</version>

<configuration>

<source>1.8</source>

<target>1.8</target>

<encoding>UTF-8</encoding>

</configuration>

</plugin>

<plugin>

<groupId>org.apache.maven.plugins</groupId>

<artifactId>maven-shade-plugin</artifactId>

<version>2.4.3</version>

<executions>

<execution>

<phase>package</phase>

<goals>

<goal>shade</goal>

</goals>

<configuration>

<minimizeJar>true</minimizeJar>

</configuration>

</execution>

</executions>

</plugin>

</plugins>

</build>

</project>

Before partitioning

Step 1: upload information.txt to the cluster

Step 2: run the code

FileJoinBean

package com.czxy.ittianmao.demo06;

import org.apache.hadoop.io.Writable;

import java.io.DataInput;

import java.io.DataOutput;

import java.io.IOException;

public class FileJoinBean implements Writable {

private String id;

private String name;

private String sex;

private String aihao;

private String job;

public FileJoinBean() {

}

public String getId() {

return id;

}

public void setId(String id) {

this.id = id;

}

public String getName() {

return name;

}

public void setName(String name) {

this.name = name;

}

public String getSex() {

return sex;

}

public void setSex(String sex) {

this.sex = sex;

}

public String getAihao() {

return aihao;

}

public void setAihao(String aihao) {

this.aihao = aihao;

}

public String getJob() {

return job;

}

public void setJob(String job) {

this.job = job;

}

@Override

public String toString() {

return "FileJoinBean{" +

"id='" + id + '\'' +

", name='" + name + '\'' +

", sex='" + sex + '\'' +

", aihao='" + aihao + '\'' +

", job='" + job + '\'' +

'}';

}

@Override

public void write(DataOutput out) throws IOException {

out.writeUTF(id+"");

out.writeUTF(name+"");

out.writeUTF(sex+"");

out.writeUTF(aihao+"");

out.writeUTF(job+"");

}

@Override

public void readFields(DataInput in) throws IOException {

this.id=in.readUTF();

this.name=in.readUTF();

this.sex=in.readUTF();

this.aihao=in.readUTF();

this.job=in.readUTF();

}

}
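One detail of `write` and `readFields` is easy to miss: appending `""` to a field turns a Java `null` into the four-character string "null", so a field that was never set comes back from deserialization as text rather than as `null`. The following JDK-only sketch illustrates this; `RoundTripSketch` is a hypothetical stand-in with two fields, not the Hadoop `Writable` itself.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.DataInput;
import java.io.DataInputStream;
import java.io.DataOutput;
import java.io.DataOutputStream;
import java.io.IOException;
import java.io.UncheckedIOException;

/** JDK-only sketch of the write/readFields round trip (hypothetical class). */
final class RoundTripSketch {
    String id;
    String name;

    /** Mirrors FileJoinBean.write: appending "" turns a null field into the text "null". */
    void write(DataOutput out) throws IOException {
        out.writeUTF(id + "");
        out.writeUTF(name + "");
    }

    /** Fields must be read back in exactly the order they were written. */
    void readFields(DataInput in) throws IOException {
        this.id = in.readUTF();
        this.name = in.readUTF();
    }

    /** Serialize to a byte array and deserialize into a fresh object. */
    static RoundTripSketch roundTrip(RoundTripSketch source) {
        try {
            ByteArrayOutputStream bytes = new ByteArrayOutputStream();
            try (DataOutputStream out = new DataOutputStream(bytes)) {
                source.write(out);
            }
            RoundTripSketch copy = new RoundTripSketch();
            try (DataInputStream in = new DataInputStream(new ByteArrayInputStream(bytes.toByteArray()))) {
                copy.readFields(in);
            }
            return copy;
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        RoundTripSketch bean = new RoundTripSketch();
        bean.id = "1"; // name is deliberately left null
        RoundTripSketch copy = roundTrip(bean);
        System.out.println(copy.id + " " + copy.name + " " + (copy.name == null));
    }
}
```

After the round trip, `copy.name` is the string "null" and `copy.name == null` is false, so a check on the reduce side that only tests for `null` or for the literal "Null" would not catch a field that was left unset on the map side.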

FileJoinMap

package com.czxy.ittianmao.demo06;

import org.apache.hadoop.filecache.DistributedCache;

import org.apache.hadoop.fs.FSDataInputStream;

import org.apache.hadoop.fs.FileSystem;

import org.apache.hadoop.fs.Path;

import org.apache.hadoop.io.LongWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Mapper;

import java.io.BufferedReader;

import java.io.IOException;

import java.io.InputStreamReader;

import java.net.URI;

import java.util.HashMap;

public class FileJoinMap extends Mapper<LongWritable, Text, Text,FileJoinBean> {

String line=null;

HashMap<String,String> map = new HashMap<>();

@Override

protected void setup(Context context) throws IOException, InterruptedException {

URI[] cacheFiles = DistributedCache.getCacheFiles(context.getConfiguration());

FileSystem fs = FileSystem.get(cacheFiles[0], context.getConfiguration());

FSDataInputStream open = fs.open(new Path(cacheFiles[0]));

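// try-with-resources closes the reader (and the underlying HDFS stream) when done

try (BufferedReader bufferedReader = new BufferedReader(new InputStreamReader(open))) {

while ((line=bufferedReader.readLine())!=null){

// Key each information.txt line by its id, the third tab-separated column

map.put(line.split("\t")[2],line);

}

}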

}

@Override

protected void map(LongWritable key, Text value, Context context) throws IOException, InterruptedException {

FileJoinBean bean = new FileJoinBean();

String[] student = value.toString().split("\t");

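String ThisValue = map.get(student[0]);

// Skip students that have no matching record in information.txt

if (ThisValue == null) {

return;

}

String[] orders = ThisValue.split("\t");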

bean.setId(student[0]);

bean.setName(student[1]);

bean.setSex(student[2]);

bean.setAihao(orders[0]);

bean.setJob(orders[1]);

context.write(new Text(student[0]),bean);

}

}

FileJoinReduce

package com.czxy.ittianmao.demo06;

import org.apache.hadoop.io.LongWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Reducer;

import java.io.IOException;

public class FileJoinReduce extends Reducer<Text, FileJoinBean, FileJoinBean, LongWritable> {

@Override

protected void reduce(Text key, Iterable<FileJoinBean> values, Context context) throws IOException, InterruptedException {

for (FileJoinBean value : values) {

// Reset the counter for each bean so counts do not carry over between values

int count=0;

if (value.getName()==null||value.getName().equals("Null")){

count++;

}

if (value.getJob()==null||value.getJob().equals("Null")){

count++;

}

if (value.getAihao()==null||value.getAihao().equals("Null")){

count++;

}

if (value.getSex()==null||value.getSex().equals("Null")){

count++;

}

context.write(value,new LongWritable(count));

}

}

}
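The counting rule in the reducer can be tried out in isolation. The sketch below is an assumption-laden illustration, not the reducer itself: `NullCountSketch` is a hypothetical name, and comparing with `equalsIgnoreCase` is my own choice so that both the "Null" marker from the input file and the string "null" produced by serialization are counted, whereas the reducer above only compares against "Null".

```java
import java.util.Arrays;
import java.util.List;

/** Plain-Java sketch of counting Null attributes for one joined record. */
final class NullCountSketch {

    /** Treat a missing value, the file's "Null" marker and the text "null" alike. */
    static boolean isNull(String value) {
        return value == null || value.equalsIgnoreCase("null");
    }

    /** Count the Null attributes of a single record; the counter starts at zero each call. */
    static int countNulls(List<String> attributes) {
        int count = 0;
        for (String attribute : attributes) {
            if (isNull(attribute)) {
                count++;
            }
        }
        return count;
    }

    public static void main(String[] args) {
        // name, gender, hobby, major for two of the joined records
        List<String> wangwu = Arrays.asList("王五", "女", "Null", "Java");
        List<String> zhangsan = Arrays.asList("张三", "女", "游戏", "大数据");
        System.out.println(countNulls(wangwu));
        System.out.println(countNulls(zhangsan));
    }
}
```

Because the counter is local to `countNulls`, each record gets its own count, which is the per-object result the requirements ask for.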

FileJoinDriver

package com.czxy.ittianmao.demo06;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.conf.Configured;

import org.apache.hadoop.filecache.DistributedCache;

import org.apache.hadoop.fs.Path;

import org.apache.hadoop.io.LongWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Job;

import org.apache.hadoop.mapreduce.lib.input.TextInputFormat;

import org.apache.hadoop.mapreduce.lib.output.TextOutputFormat;

import org.apache.hadoop.util.Tool;

import org.apache.hadoop.util.ToolRunner;

import java.net.URI;

public class FileJoinDriver extends Configured implements Tool {

@Override

public int run(String[] args) throws Exception {

// Use the Configuration injected by ToolRunner so generic command-line options are honored

Configuration configuration = getConf();

DistributedCache.addCacheFile(new URI("hdfs://192.168.100.100:8020/mapreduce/information.txt"),configuration);

Job job = Job.getInstance(configuration);

job.setJarByClass(FileJoinDriver.class);

job.setInputFormatClass(TextInputFormat.class);

TextInputFormat.addInputPath(job,new Path("E:\\传智专修学院大数据课程\\第一学期\\作业\\MapReduce阶段作业\\mapreduce综合题\\student.txt"));

job.setMapperClass(FileJoinMap.class);

job.setMapOutputKeyClass(Text.class);

job.setMapOutputValueClass(FileJoinBean.class);

job.setReducerClass(FileJoinReduce.class);

job.setOutputKeyClass(FileJoinBean.class);

job.setOutputValueClass(LongWritable.class);

job.setOutputFormatClass(TextOutputFormat.class);

TextOutputFormat.setOutputPath(job,new Path("E:\\传智专修学院大数据课程\\第一学期\\作业\\MapReduce阶段作业\\mapreduce综合题\\join"));

return job.waitForCompletion(true)?0:1;

}

public static void main(String[] args) throws Exception{

// Propagate the job's exit code to the calling shell

System.exit(ToolRunner.run(new FileJoinDriver(),args));

}

}

Code after partitioning

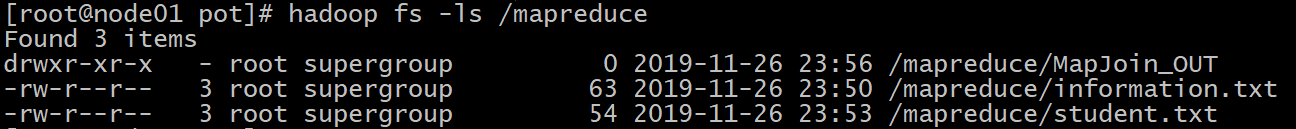

Step 1: upload student.txt to HDFS as well

Add this code:

FilePartition

package com.czxy.ittianmao.demo06;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Partitioner;

public class FilePartition extends Partitioner<Text,FileJoinBean> {

@Override

public int getPartition(Text text, FileJoinBean fileJoinBean, int i) {

// Constant-first comparison avoids a NullPointerException if the gender is missing

if ("男".equals(fileJoinBean.getSex())){

return 0;

}else{

return 1;

}

}

}
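The routing rule of the partitioner can be checked without a cluster. The sketch below is a hypothetical, Hadoop-free illustration: `GenderPartitionSketch` and its methods are names I made up, and taking the result modulo the partition count is an added safeguard that the partitioner above does not have. It sends "男" records to partition 0 and everything else to partition 1.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

/** Plain-Java sketch of gender-based partitioning into a fixed number of buckets. */
final class GenderPartitionSketch {

    /** Partition 0 for "男", partition 1 otherwise; modulo keeps the index in range. */
    static int partitionFor(String gender, int numPartitions) {
        int wanted = "男".equals(gender) ? 0 : 1;
        return wanted % numPartitions;
    }

    /** Distribute student lines (id, name, gender) over the partitions. */
    static List<List<String>> split(List<String> studentLines, int numPartitions) {
        List<List<String>> partitions = new ArrayList<>();
        for (int i = 0; i < numPartitions; i++) {
            partitions.add(new ArrayList<>());
        }
        for (String line : studentLines) {
            String gender = line.split("\t")[2];
            partitions.get(partitionFor(gender, numPartitions)).add(line);
        }
        return partitions;
    }

    public static void main(String[] args) {
        List<String> students = Arrays.asList(
                "1\t张三\t女", "2\t李四\t男", "3\t王五\t女", "4\t赵六\t男");
        List<List<String>> partitions = split(students, 2);
        for (int i = 0; i < partitions.size(); i++) {
            System.out.println("partition " + i + ": " + partitions.get(i));
        }
    }
}
```

In the real job each partition is handled by its own reduce task, so the number of reduce tasks has to match the number of partitions the rule can return, which is why the driver sets two reduce tasks.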

Modify this code:

FileJoinDriver

package com.czxy.ittianmao.demo06;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.conf.Configured;

import org.apache.hadoop.filecache.DistributedCache;

import org.apache.hadoop.fs.Path;

import org.apache.hadoop.io.LongWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Job;

import org.apache.hadoop.mapreduce.lib.input.TextInputFormat;

import org.apache.hadoop.mapreduce.lib.output.TextOutputFormat;

import org.apache.hadoop.util.Tool;

import org.apache.hadoop.util.ToolRunner;

import java.net.URI;

public class FileJoinDriver extends Configured implements Tool {

@Override

public int run(String[] args) throws Exception {

// Use the Configuration injected by ToolRunner so generic command-line options are honored

Configuration configuration = getConf();

// Add information.txt to the distributed cache

DistributedCache.addCacheFile(new URI("hdfs://192.168.100.100:8020/mapreduce/information.txt"),configuration);

Job job = Job.getInstance(configuration);

// This line is required when the job runs on the cluster

job.setJarByClass(FileJoinDriver.class);

job.setInputFormatClass(TextInputFormat.class);

TextInputFormat.addInputPath(job,new Path("hdfs://192.168.100.100:8020/mapreduce/student.txt"));

job.setMapperClass(FileJoinMap.class);

job.setMapOutputKeyClass(Text.class);

job.setMapOutputValueClass(FileJoinBean.class);

job.setReducerClass(FileJoinReduce.class);

job.setOutputKeyClass(FileJoinBean.class);

job.setOutputValueClass(LongWritable.class);

job.setOutputFormatClass(TextOutputFormat.class);

TextOutputFormat.setOutputPath(job,new Path("/mapreduce/MapJoin_OUT"));

// Added for partitioning: register the custom partitioner

job.setPartitionerClass(FilePartition.class);

// Set the number of reduce tasks, one per partition

job.setNumReduceTasks(2);

return job.waitForCompletion(true)?0:1;

}

public static void main(String[] args) throws Exception{

// Propagate the job's exit code to the calling shell

System.exit(ToolRunner.run(new FileJoinDriver(),args));

}

}