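NDK Examples Summary series

NDK Examples Summary: JNI examples

NDK Examples Summary: Image processing with OpenCV

NDK Examples Summary: Processing raw images from the Android Camera and a USB camera

NDK Examples Summary supplement: Capturing USB camera images with V4L2

NDK Examples Summary: Playing an RTSP stream with ffmpeg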

Preface

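This post summarizes an example of using ffmpeg from JNI to play an RTSP stream.

ffmpeg is called from native code through JNI. The Java/Kotlin layer only passes in the stream URL and a callback, and it receives the decoded frames.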

Building the dependencies

To build the libraries yourself, see this post: https://www.jianshu.com/p/f2fa2243ad17

The demo also ships prebuilt .so libraries.

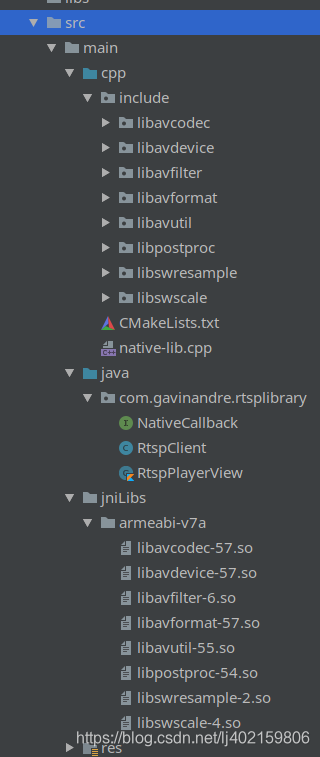

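Project structure

As the figure above shows, the cpp folder holds the ffmpeg headers, the JNI native code and the CMakeLists file.

The jniLibs folder holds the ffmpeg shared libraries built for armv7 (armeabi-v7a).

The shared libraries are added in the CMakeLists file: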

```cmake
cmake_minimum_required(VERSION 3.4.1)

# Header directory
include_directories(${CMAKE_SOURCE_DIR}/include)

# jniLibs directory
set(jniLibs "${CMAKE_SOURCE_DIR}/../jniLibs/${ANDROID_ABI}")

# ffmpeg
add_library(avcodec-lib SHARED IMPORTED)
set_target_properties(avcodec-lib PROPERTIES IMPORTED_LOCATION
        ${jniLibs}/libavcodec-57.so)

add_library(avformat-lib SHARED IMPORTED)
set_target_properties(avformat-lib PROPERTIES IMPORTED_LOCATION
        ${jniLibs}/libavformat-57.so)

add_library(avutil-lib SHARED IMPORTED)
set_target_properties(avutil-lib PROPERTIES IMPORTED_LOCATION
        ${jniLibs}/libavutil-55.so)

add_library(swresample-lib SHARED IMPORTED)
set_target_properties(swresample-lib PROPERTIES IMPORTED_LOCATION
        ${jniLibs}/libswresample-2.so)

add_library(swscale-lib SHARED IMPORTED)
set_target_properties(swscale-lib PROPERTIES IMPORTED_LOCATION
        ${jniLibs}/libswscale-4.so)

add_library(rtsp_lib
        SHARED
        native-lib.cpp)

find_library(log-lib
        log)

target_link_libraries(
        rtsp_lib
        ${log-lib}
        avcodec-lib
        avformat-lib
        avutil-lib
        swresample-lib
        swscale-lib)
```

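The process is simple:

1. Set the header directory and the jniLibs path.
2. Import the self-built ffmpeg shared libraries as IMPORTED targets.
3. Add them to target_link_libraries.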

build.gradle

```groovy
android {
    ...
    defaultConfig {
        ...
        externalNativeBuild {
            cmake {
                arguments "-DANDROID_ARM_NEON=TRUE", "-DCMAKE_BUILD_TYPE=Release"
                cppFlags "-std=c++11"
            }
        }
        ndk {
            abiFilters 'armeabi-v7a'
        }
    }
    ...
    externalNativeBuild {
        cmake {
            path "src/main/cpp/CMakeLists.txt"
            version "3.10.2"
        }
    }
}
```

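The Gradle configuration does the following:

- enables NEON instructions;
- builds in Release mode;
- compiles with the C++11 standard;
- packages only the armeabi-v7a libraries;
- sets the path to CMakeLists.txt and pins the CMake version.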

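Playing an RTSP stream with ffmpeg

The overall flow works like this:

1. The Java layer passes an interface object down to the JNI layer.
2. The JNI layer pulls the RTSP stream with ffmpeg.
3. The JNI layer passes each decoded frame back to the Java layer through the interface callback.
4. The Java layer draws the frame on a TextureView.

Walkthrough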

```kotlin
override fun onSurfaceTextureAvailable(surface: SurfaceTexture, width: Int, height: Int) {
    Log.i(TAG, "onSurfaceTextureAvailable: ")
    endpoint = rtspEndpointListener
            ?.rtspEndpoint
            ?: run {
                Log.e(TAG, "init: rtsp endpoint is null")
                return
            }
    isStop = false
    // Destination rect covers the whole view; the width/height parameters
    // shadow the View properties, so the getters are called explicitly
    mDstRect.set(0, 0, getWidth(), getHeight())
    job = initRtsp()
}
```

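First, onSurfaceTextureAvailable initializes playback:

- it fetches the RTSP endpoint, logging and returning if it is null;
- it resets the stop flag;
- it sets the destination rectangle to the view size;
- it starts the playback coroutine.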

```kotlin
private fun initRtsp(): Job {
    // Launch a new coroutine and keep a reference to its Job
    return GlobalScope.launch {
        rtspClient = RtspClient(this@RtspPlayerView)
        while (isActive) {
            if (rtspClient.play(endpoint) == 0) {
                break
            }
            delay(5000L)
        }
        Log.i(TAG, "initRtsp: coroutine finish")
    }
}
```

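JNI initialization and the reconnect logic both run inside the coroutine.

The coroutine then enters a loop conditioned on isActive. isActive is the coroutine's own flag: calling cancel() on the job clears it, so the loop exits on its own.

Inside the loop, the RTSP URL is passed down to the JNI layer, which starts pulling raw frames from the stream. play() returns 0 only when playback was stopped on purpose. Any other return value means the stream failed to open or dropped, so the coroutine waits 5 seconds and tries again.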
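For comparison, the retry logic can be sketched in plain C++. This is a minimal sketch under a few assumptions:

- `runWithReconnect` is a hypothetical name.
- An `std::atomic<bool>` stands in for the coroutine's `isActive`.
- `play` is any callable that, like `RtspClient.play()`, blocks while streaming and returns 0 only when playback was stopped on purpose.

Unlike `delay()` in a coroutine, `sleep_for` here cannot be cancelled, so a cancel request is only noticed after the wait ends.

```cpp
#include <atomic>
#include <cassert>
#include <chrono>
#include <functional>
#include <thread>

// Hypothetical C++ counterpart of the initRtsp() loop.
// `play` blocks while streaming and returns 0 only on an intentional stop.
// Returns the number of play() attempts, to make the behavior easy to check.
int runWithReconnect(const std::function<int()> &play,
                     const std::atomic<bool> &active,
                     std::chrono::milliseconds retryDelay) {
    int attempts = 0;
    while (active.load()) {          // plays the role of isActive
        ++attempts;
        if (play() == 0) {
            break;                   // stopped on purpose: leave the loop
        }
        // Open failed or the stream dropped: wait, then reconnect
        std::this_thread::sleep_for(retryDelay);
    }
    return attempts;
}
```

A fake `play` that fails twice and then succeeds is attempted three times. When the flag is already cleared, it is never called.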

```java
public RtspClient(NativeCallback callback) {
    if (initialize(callback) == -1) {
        Log.i(TAG, "RtspClient initialize failed");
    } else {
        Log.i(TAG, "RtspClient initialize successfully");
    }
}
```

```cpp
extern "C"
jint Java_com_gavinandre_rtsplibrary_RtspClient_initialize(JNIEnv *env, jobject, jobject callback) {
    isStop = false;
    gCallback = env->NewGlobalRef(callback);
    jclass clz = env->GetObjectClass(gCallback);
    if (clz == NULL) {
        return JNI_ERR;
    } else {
        gCallbackMethodId = env->GetMethodID(clz, "onFrame", "([BIII)V");
        env->DeleteLocalRef(clz);
        return JNI_OK;
    }
}
```

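When the JNI object is initialized, a callback interface is passed in. The native side turns it into a global jobject reference and looks up the methodID of the interface method. The JNI layer later uses this callback to deliver each raw frame to the Java layer.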
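The descriptor `([BIII)V` passed to `GetMethodID` encodes the Kotlin signature `onFrame(frame: ByteArray, nChannel: Int, width: Int, height: Int)`:

- `[B` is `byte[]`;
- each `I` is an `int`;
- the `V` after the closing parenthesis means a `void` return.

The hypothetical helper below decodes such descriptors to make the mapping explicit. It is an illustration only, not part of JNI, and handles just primitives, arrays and `L...;` class types.

```cpp
#include <cassert>
#include <stdexcept>
#include <string>
#include <vector>

// Hypothetical result type: readable parameter and return types
struct MethodSignature {
    std::vector<std::string> params;
    std::string ret;
};

// Decode one type starting at d[i]; advances i past it
static std::string decodeType(const std::string &d, size_t &i) {
    switch (d.at(i++)) {
        case 'Z': return "boolean";
        case 'B': return "byte";
        case 'C': return "char";
        case 'S': return "short";
        case 'I': return "int";
        case 'J': return "long";
        case 'F': return "float";
        case 'D': return "double";
        case 'V': return "void";
        case '[': return decodeType(d, i) + "[]";   // array of the following type
        case 'L': {                                   // class type: L<name>;
            size_t end = d.find(';', i);
            if (end == std::string::npos) throw std::invalid_argument("unterminated class name");
            std::string name = d.substr(i, end - i);
            i = end + 1;
            return name;
        }
        default: throw std::invalid_argument("bad descriptor");
    }
}

// Decode a full method descriptor such as "([BIII)V"
MethodSignature decodeDescriptor(const std::string &d) {
    if (d.empty() || d[0] != '(') throw std::invalid_argument("descriptor must start with '('");
    MethodSignature sig;
    size_t i = 1;
    while (d.at(i) != ')') sig.params.push_back(decodeType(d, i));
    ++i;  // skip ')'
    sig.ret = decodeType(d, i);
    return sig;
}
```

For example, `decodeDescriptor("([BIII)V")` yields the parameters `byte[], int, int, int` and the return type `void`.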

```cpp
extern "C"
jint Java_com_gavinandre_rtsplibrary_RtspClient_play(
        JNIEnv *env, jobject, jstring endpoint) {
    try {
        SwsContext *img_convert_ctx;
        // Allocate an AVFormatContext; all demuxing operations go through it
        AVFormatContext *context = avformat_alloc_context();
        AVCodecContext *ccontext = avcodec_alloc_context3(nullptr);
        // Register all formats and codecs; required in this ffmpeg version (3.x)
        // before demuxers and decoders can be used
        av_register_all();
        // Initialize the network module
        avformat_network_init();
        AVDictionary *option = nullptr;
        // av_dict_set(&option, "buffer_size", "1024000", 0);
        // av_dict_set(&option, "max_delay", "500000", 0);
        // av_dict_set(&option, "stimeout", "20000000", 0); // timeout before disconnecting
        av_dict_set(&option, "rtsp_transport", "udp", 0); // open over UDP; replace "udp" with "tcp" for TCP
        const char *rtspUrl = env->GetStringUTFChars(endpoint, nullptr);
        // Open the network stream or file. Keep the error code itself:
        // `if (int err = f() != 0)` would store the comparison result instead
        int err = avformat_open_input(&context, rtspUrl, nullptr, &option);
        if (err != 0) {
            char errors[1024];
            av_strerror(err, errors, sizeof(errors));
            __android_log_print(ANDROID_LOG_ERROR, TAG, "Cannot open input %s, error code: %s",
                                rtspUrl, errors);
            env->ReleaseStringUTFChars(endpoint, rtspUrl);
            av_dict_free(&option);
            return JNI_ERR;
        }
        env->ReleaseStringUTFChars(endpoint, rtspUrl);
        av_dict_free(&option);
        // Read the stream information
        if (avformat_find_stream_info(context, nullptr) < 0) {
            __android_log_print(ANDROID_LOG_ERROR, TAG, "Cannot find stream info");
            return JNI_ERR;
        }
        // Walk the streams until a video stream is found
        int video_stream_index = -1;
        // nb_streams is the number of streams, usually 2 (audio and video) in no fixed order
        for (unsigned int i = 0; i < context->nb_streams; i++) {
            if (context->streams[i]->codec->codec_type == AVMEDIA_TYPE_VIDEO) {
                // This is the video stream: remember its index and read video
                // from context->streams[video_stream_index] from now on
                video_stream_index = i;
                break;
            }
        }
        // video_stream_index == -1 means no video stream was found
        if (video_stream_index == -1) {
            __android_log_print(ANDROID_LOG_ERROR, TAG, "Video stream not found");
            return JNI_ERR;
        }
        // Output context left over from a recording example; nothing is written to it here
        AVFormatContext *oc = avformat_alloc_context();
        AVStream *stream = nullptr;
        // Start playing the network (RTSP) stream
        av_read_play(context);
        AVCodec *codec = nullptr;
        // Use the H.264 decoder (assumes the camera sends H.264)
        codec = avcodec_find_decoder(AV_CODEC_ID_H264);
        if (!codec) {
            __android_log_print(ANDROID_LOG_ERROR, TAG, "Cannot find decoder H264");
            return JNI_ERR;
        }
        // Copy the stream's codec parameters into the decoder context
        avcodec_get_context_defaults3(ccontext, codec);
        avcodec_copy_context(ccontext, context->streams[video_stream_index]->codec);
        // Open the decoder
        if (avcodec_open2(ccontext, codec, nullptr) < 0) {
            __android_log_print(ANDROID_LOG_ERROR, TAG, "Cannot open codec");
            return JNI_ERR;
        }
        // Set up the conversion parameters
        img_convert_ctx = sws_getContext(
                // Source width, height and pixel format
                ccontext->width, ccontext->height, ccontext->pix_fmt,
                // Destination width, height and pixel format
                ccontext->width, ccontext->height, AV_PIX_FMT_RGB24,
                // Scaling algorithm, then (unused) source/destination filters and params
                SWS_BICUBIC, nullptr, nullptr, nullptr);
        // Allocate buffers the size of one frame
        size_t size = (size_t) avpicture_get_size(AV_PIX_FMT_YUV420P, ccontext->width,
                                                  ccontext->height);
        uint8_t *picture_buf = (uint8_t *) (av_malloc(size));
        AVFrame *pic = av_frame_alloc();
        AVFrame *picrgb = av_frame_alloc();
        size_t size2 = (size_t) avpicture_get_size(AV_PIX_FMT_RGB24, ccontext->width,
                                                   ccontext->height);
        uint8_t *picture_buf2 = (uint8_t *) (av_malloc(size2));
        avpicture_fill((AVPicture *) pic, picture_buf, AV_PIX_FMT_YUV420P, ccontext->width,
                       ccontext->height);
        // Attach picture_buf2 to picrgb as an RGB24 image: picrgb->data points
        // into picture_buf2, so whatever is written to picrgb lands in picture_buf2
        avpicture_fill((AVPicture *) picrgb, picture_buf2, AV_PIX_FMT_RGB24, ccontext->width,
                       ccontext->height);
        AVPacket packet;
        // Set the packet's optional fields to defaults (data/size are filled by av_read_frame)
        av_init_packet(&packet);
        // Read one packet at a time from the input into packet
        while (!isStop && av_read_frame(context, &packet) >= 0) {
            if (packet.stream_index == video_stream_index) { // Packet is video
                if (stream == nullptr) {
                    stream = avformat_new_stream(oc,
                                                 context->streams[video_stream_index]->codec->codec);
                    avcodec_copy_context(stream->codec,
                                         context->streams[video_stream_index]->codec);
                    stream->sample_aspect_ratio = context->streams[video_stream_index]->codec->sample_aspect_ratio;
                }
                int check = 0;
                packet.stream_index = stream->id;
                // Decode the video packet; the decoded frame is stored in pic
                int ret = avcodec_decode_video2(ccontext, pic, &check, &packet);
                // Only convert and deliver when a complete frame was decoded
                if (ret >= 0 && check) {
                    // Scale by width/height and convert YUV -> RGB
                    sws_scale(img_convert_ctx, (const uint8_t *const *) pic->data, pic->linesize, 0,
                              ccontext->height, picrgb->data, picrgb->linesize);
                    if (gCallback != nullptr) {
                        // Call back into the Java layer
                        callback(env, picture_buf2, 3, ccontext->width, ccontext->height);
                    }
                }
            }
            av_free_packet(&packet);
            av_init_packet(&packet);
        }
        av_frame_free(&pic);
        av_frame_free(&picrgb);
        av_free(picture_buf);
        av_free(picture_buf2);
        sws_freeContext(img_convert_ctx);
        avcodec_free_context(&ccontext);
        av_read_pause(context);
        avio_close(oc->pb);
        avformat_free_context(oc);
        avformat_close_input(&context);
    } catch (std::exception &e) {
        jclass je = env->FindClass("java/lang/Exception");
        env->ThrowNew(je, e.what());
    }
    return isStop ? JNI_OK : JNI_ERR;
}
```

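This function pulls the RTSP stream through ffmpeg in these steps:

1. Open the input.
2. Find the video stream.
3. Open an H.264 decoder.
4. Decode packets in a loop, convert each complete frame to RGB24 with sws_scale, and hand it to the Java layer.

See the comments for the details of each call.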
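The buffer sizes allocated in `play()` come from simple arithmetic. Assume the 1-byte alignment that `avpicture_get_size` uses:

- an RGB24 frame takes 3 bytes per pixel;
- a YUV420P frame takes a full-size Y plane plus two chroma planes at half width and half height, rounded up for odd sizes.

The helpers below are hypothetical and only reproduce that arithmetic; they are not ffmpeg API. `picrgb` is packed without row padding, so in `callback()` the length `len = nChannel * width * height` with `nChannel = 3` covers exactly the `picture_buf2` buffer.

```cpp
#include <cassert>
#include <cstddef>

// RGB24: 3 bytes per pixel, tightly packed
size_t rgb24FrameSize(int width, int height) {
    return static_cast<size_t>(width) * height * 3;
}

// YUV420P: one full-resolution Y plane plus U and V planes
// subsampled by 2 horizontally and vertically (rounded up)
size_t yuv420pFrameSize(int width, int height) {
    size_t luma = static_cast<size_t>(width) * height;
    size_t chromaW = (width + 1) / 2;
    size_t chromaH = (height + 1) / 2;
    return luma + 2 * chromaW * chromaH;
}
```

For a 1920x1080 stream this gives 6,220,800 bytes per RGB24 frame and 3,110,400 bytes per YUV420P frame. The RGB24 size is also the length of the byte array copied to Java for every frame.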

```cpp
void callback(JNIEnv *env, uint8_t *buf, int nChannel, int width, int height) {
    try {
        int len = nChannel * width * height;
        jbyteArray gByteArray = env->NewByteArray(len);
        env->SetByteArrayRegion(gByteArray, 0, len, (jbyte *) buf);
        env->CallVoidMethod(gCallback, gCallbackMethodId, gByteArray, nChannel, width, height);
        // play() never returns to Java while streaming, so delete the local
        // reference every frame instead of letting them pile up
        env->DeleteLocalRef(gByteArray);
    } catch (std::exception &e) {
        jclass je = env->FindClass("java/lang/Exception");
        env->ThrowNew(je, e.what());
    }
}
```

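Using the global jobject and methodID saved during initialization, the native code passes each frame's pixel data and dimensions back to the Java layer.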

```kotlin
override fun onFrame(frame: ByteArray, nChannel: Int, width: Int, height: Int) {
    if (isStop) {
        return
    }
    val area = width * height
    val pixels = IntArray(area)
    for (i in 0 until area) {
        // Bytes are signed (-128..127); mask with 0xFF to get 0..255
        val r = frame[3 * i].toInt() and 0xFF
        val g = frame[3 * i + 1].toInt() and 0xFF
        val b = frame[3 * i + 2].toInt() and 0xFF
        pixels[i] = Color.rgb(r, g, b)
    }
    // Create the bitmap on the first frame, then reuse it
    val bitmap = bmp ?: Bitmap.createBitmap(width, height, Bitmap.Config.ARGB_8888).also { bmp = it }
    bitmap.setPixels(pixels, 0, width, 0, 0, width, height)
    drawBitmap(bitmap)
}
```

```kotlin
private fun drawBitmap(bitmap: Bitmap) {
    // Lock the canvas
    val canvas = lockCanvas()
    if (canvas != null) {
        // Clear the canvas
        canvas.drawColor(Color.TRANSPARENT, PorterDuff.Mode.CLEAR)
        // Draw the bitmap scaled into mDstRect
        canvas.drawBitmap(bitmap, null, mDstRect, null)
        // Unlock the canvas and post it
        unlockCanvasAndPost(canvas)
    }
}
```

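The callback converts the RGB24 byte array into an int array of ARGB pixels, copies it into the Bitmap and draws the Bitmap onto the TextureView's canvas.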
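The per-pixel conversion in `onFrame()` can also be written in C++, which makes the signed-byte issue easy to see. JNI's `jbyte`, like Kotlin's `Byte`, is signed, so a channel value of 200 arrives as -56. Masking with `0xFF` restores 200, whereas adding 255 would give 199.

The packing below assumes the same layout `Color.rgb()` produces: alpha `0xFF`, then red, green and blue. `rgb24ToArgb` is a hypothetical helper, sketched under the assumption that the frame is tightly packed RGB24.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical helper: RGB24 bytes (signed, as received from JNI) -> ARGB_8888 ints
std::vector<uint32_t> rgb24ToArgb(const std::vector<int8_t> &frame, int width, int height) {
    const size_t area = static_cast<size_t>(width) * height;
    std::vector<uint32_t> pixels(area);
    for (size_t i = 0; i < area; ++i) {
        // Mask off sign extension so each channel is back in 0..255
        uint32_t r = static_cast<uint32_t>(frame[3 * i]) & 0xFF;
        uint32_t g = static_cast<uint32_t>(frame[3 * i + 1]) & 0xFF;
        uint32_t b = static_cast<uint32_t>(frame[3 * i + 2]) & 0xFF;
        // Opaque alpha, then R, G, B: the same layout as Color.rgb()
        pixels[i] = 0xFF000000u | (r << 16) | (g << 8) | b;
    }
    return pixels;
}
```

A pixel whose bytes arrive as `-56, 0, 127` becomes `0xFFC8007F` (red 200, green 0, blue 127).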

```kotlin
override fun onSurfaceTextureDestroyed(surface: SurfaceTexture): Boolean {
    Log.i(TAG, "onSurfaceTextureDestroyed: ")
    isStop = true
    job.cancel()
    rtspClient.stop()
    SystemClock.sleep(200)
    rtspClient.dispose()
    return true
}
```

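onSurfaceTextureDestroyed stops playback.

- job.cancel() cancels the coroutine. However, rtspClient.play() is a blocking native call, and cancellation alone cannot interrupt it.
- rtspClient.stop() therefore sets the native isStop flag so the read loop exits.
- The 200 ms sleep gives the loop time to finish before dispose() releases the global reference.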

```cpp
extern "C"
void Java_com_gavinandre_rtsplibrary_RtspClient_stop(JNIEnv *env, jobject) {
    isStop = true;
}

extern "C"
void Java_com_gavinandre_rtsplibrary_RtspClient_dispose(JNIEnv *env, jobject) {
    try {
        env->DeleteGlobalRef(gCallback);
        gCallback = nullptr;
    } catch (std::exception &e) {
        jclass je = env->FindClass("java/lang/Exception");
        env->ThrowNew(je, e.what());
    }
}
```

stop() makes the ffmpeg read loop exit, and dispose() releases the JNI global reference.
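The shutdown above relies on a fixed 200 ms sleep to let the native loop exit before `dispose()` deletes the global reference. Below is a minimal C++ sketch of an alternative handshake. It assumes the read loop runs on a `std::thread`; in the article it actually runs inside `play()` on a coroutine thread. Here `stop()` sets the flag and then joins, so it returns exactly when the loop has finished instead of after a guessed delay. `StreamWorker` is a hypothetical class.

```cpp
#include <atomic>
#include <cassert>
#include <chrono>
#include <thread>

// Hypothetical worker: the loop body stands in for av_read_frame + decode + callback
class StreamWorker {
public:
    void start() {
        stopRequested_ = false;
        worker_ = std::thread([this] {
            while (!stopRequested_.load()) {   // same role as isStop in play()
                ++framesRead_;
                std::this_thread::sleep_for(std::chrono::milliseconds(1));
            }
        });
    }

    // Like RtspClient.stop(), but also waits until the loop has really exited,
    // after which resources (e.g. the global callback ref) can be released safely
    void stop() {
        stopRequested_ = true;
        if (worker_.joinable()) worker_.join();
    }

    long framesRead() const { return framesRead_.load(); }

    ~StreamWorker() { stop(); }

private:
    std::atomic<bool> stopRequested_{false};
    std::atomic<long> framesRead_{0};
    std::thread worker_;
};
```

The flag is only checked between iterations. If `av_read_frame` blocks on the network, the loop does not notice the flag until that call returns; ffmpeg's `AVFormatContext::interrupt_callback` is intended for breaking out of such blocking I/O.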

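NDK development basics series:

JNI and NDK Programming (1): The JNI development workflow

JNI and NDK Programming (2): The NDK development workflow

JNI and NDK Programming (3): JNI data types and type signatures

JNI and NDK Programming (4): How JNI calls Java methods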