Part of the code is adapted from:

https://github.com/apache/flink/blob/master/flink-end-to-end-tests/flink-streaming-kafka-test/src/main/java/org/apache/flink/streaming/kafka/test/KafkaExample.java

pom.xml dependencies (every artifact with a Scala suffix must use the same Scala binary version, `_2.11` here):

```xml
<!-- flink -->
<dependency>
    <groupId>org.apache.flink</groupId>
    <artifactId>flink-java</artifactId>
    <version>1.8.0</version>
</dependency>
<dependency>
    <groupId>org.apache.flink</groupId>
    <artifactId>flink-streaming-java_2.11</artifactId>
    <version>1.8.0</version>
</dependency>
<dependency>
    <groupId>org.apache.flink</groupId>
    <artifactId>flink-scala_2.11</artifactId>
    <version>1.8.0</version>
</dependency>
<dependency>
    <groupId>org.apache.flink</groupId>
    <artifactId>flink-streaming-scala_2.11</artifactId>
    <version>1.8.0</version>
</dependency>
<dependency>
    <groupId>org.apache.flink</groupId>
    <artifactId>flink-connector-kafka_2.11</artifactId>
    <version>1.8.0</version>
</dependency>
<dependency>
    <groupId>org.apache.flink</groupId>
    <artifactId>flink-clients_2.11</artifactId>
    <version>1.8.0</version>
</dependency>
```

Code (`KafkaExampleUtil.java`):

```java
package com.bigdata.flink;

import org.apache.flink.api.common.restartstrategy.RestartStrategies;
import org.apache.flink.api.java.utils.ParameterTool;
import org.apache.flink.streaming.api.TimeCharacteristic;
import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

public class KafkaExampleUtil {

    private static final String USAGE = "Missing parameters!\n" +
            "Usage: Kafka --input-topic <topic> --output-topic <topic> " +
            "--bootstrap.servers <kafka brokers> " +
            "--zookeeper.connect <zk quorum> --group.id <some id>";

    public static StreamExecutionEnvironment prepareExecutionEnv(ParameterTool parameterTool)
            throws Exception {
        // Five --key value pairs are expected; fail fast with a usage message otherwise
        if (parameterTool.getNumberOfParameters() < 5) {
            System.out.println(USAGE);
            throw new Exception(USAGE);
        }

        StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();
        // Restart the job at most 4 times, waiting 10000 ms before each restart
        env.getConfig().setRestartStrategy(RestartStrategies.fixedDelayRestart(4, 10000));
        env.enableCheckpointing(5000); // create a checkpoint every 5 seconds
        env.getConfig().setGlobalJobParameters(parameterTool); // make parameters available in the web interface
        env.setStreamTimeCharacteristic(TimeCharacteristic.EventTime);
        return env;
    }
}
```
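`fixedDelayRestart(4, 10000)` allows at most 4 restarts and waits 10000 ms (10 s) before each one. If the job still fails after the fourth restart, it is marked as failed. The plain-Java sketch below models only that counting logic under simplifying assumptions. The class `FixedDelayRestartModel` and its `run` method are hypothetical. Flink's real restart handling also involves the scheduler and recovery from the last checkpoint, and neither is modeled here.

```java
import java.util.function.IntPredicate;

/** Hypothetical model of fixed-delay restart counting; this is not Flink code. */
public class FixedDelayRestartModel {

    /**
     * Runs attempts until one succeeds or the restart budget is used up.
     *
     * @param attemptSucceeds returns true if attempt number i (0 = initial run) succeeds
     * @param restartAttempts maximum number of restarts after the initial run
     * @param delayMs         fixed pause before every restart
     * @return number of restarts that were needed
     * @throws IllegalStateException if the initial run and all restarts fail
     */
    public static int run(IntPredicate attemptSucceeds, int restartAttempts, long delayMs) {
        for (int attempt = 0; attempt <= restartAttempts; attempt++) {
            if (attempt > 0) {
                try {
                    Thread.sleep(delayMs); // fixed delay before each restart
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                    throw new IllegalStateException("Interrupted while waiting to restart", e);
                }
            }
            if (attemptSucceeds.test(attempt)) {
                return attempt; // attempt 0 is the initial run, so this equals restarts used
            }
        }
        throw new IllegalStateException("Job failed after " + restartAttempts + " restarts");
    }

    public static void main(String[] args) {
        // Fails on the initial run and the first two restarts, then succeeds on the third restart
        int restarts = run(i -> i >= 3, 4, 0);
        System.out.println("restarts used: " + restarts);
    }
}
```

With a budget of 4, the model makes 5 runs in total (the initial run plus 4 restarts) before it gives up.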

Code (`KafkaExample.java`):

```java
package com.bigdata.flink;

import org.apache.flink.api.common.serialization.SimpleStringSchema;
import org.apache.flink.api.java.utils.ParameterTool;
import org.apache.flink.streaming.api.datastream.DataStream;
import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;
import org.apache.flink.streaming.connectors.kafka.FlinkKafkaConsumer;

public class KafkaExample {

    public static void main(String[] args) throws Exception {
        // parse input arguments
        final ParameterTool parameterTool = ParameterTool.fromArgs(args);
        StreamExecutionEnvironment env = KafkaExampleUtil.prepareExecutionEnv(parameterTool);

        // Read the topic given by --input-topic as UTF-8 strings; all program
        // arguments (bootstrap.servers, group.id, ...) become consumer properties
        DataStream<String> input = env.addSource(
                new FlinkKafkaConsumer<>(
                        parameterTool.getRequired("input-topic"),
                        new SimpleStringSchema(),
                        parameterTool.getProperties()));

        // Print every record to stdout
        input.print();

        env.execute("Modern Kafka Example");
    }
}
```


Configuration (program arguments):

```
--input-topic test_topic --output-topic test_source --bootstrap.servers master:9092 --zookeeper.connect master:2181 --group.id test_group
```
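`ParameterTool.fromArgs` parses these arguments into key/value pairs. `parameterTool.getProperties()` then passes all of them to the Kafka consumer as `java.util.Properties`, including `input-topic` and `output-topic`. Only keys the Kafka client recognizes, such as `bootstrap.servers` and `group.id`, have any effect there.

The sketch below is a simplified stand-in written for illustration. The class `ArgsSketch` and its methods are hypothetical and handle only the plain `--key value` form. It also shows a check of required keys by name, as an alternative to the `< 5` count in `KafkaExampleUtil`.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Properties;

/** Hypothetical, simplified model of "--key value" parsing; this is not Flink's ParameterTool. */
public class ArgsSketch {

    /** Turns ["--a", "1", "--b", "2"] into properties {a=1, b=2}. */
    public static Properties parse(String[] args) {
        Properties props = new Properties();
        for (int i = 0; i < args.length; i++) {
            String token = args[i];
            if (!token.startsWith("-")) {
                throw new IllegalArgumentException("Expected a key but got: " + token);
            }
            String key = token.replaceFirst("^-{1,2}", ""); // strip a leading "-" or "--"
            boolean hasValue = i + 1 < args.length && !args[i + 1].startsWith("-");
            // Simplification: a key with no following value is stored as an empty string
            props.setProperty(key, hasValue ? args[++i] : "");
        }
        return props;
    }

    /** Returns the required keys that are absent, in the order given. */
    public static List<String> missing(Properties props, String... required) {
        List<String> absent = new ArrayList<>();
        for (String key : required) {
            if (!props.containsKey(key)) {
                absent.add(key);
            }
        }
        return absent;
    }

    public static void main(String[] args) {
        String line = "--input-topic test_topic --output-topic test_source "
                + "--bootstrap.servers master:9092 --zookeeper.connect master:2181 "
                + "--group.id test_group";
        Properties props = parse(line.split(" "));
        System.out.println(props.getProperty("bootstrap.servers")); // prints "master:9092"
        System.out.println(missing(props, "input-topic", "bootstrap.servers", "group.id")); // prints "[]"
    }
}
```

Checking keys by name gives a precise error such as "missing group.id". A plain count accepts any five arguments, even misspelled ones.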

Write data into the Kafka topic with the console producer:

```shell
/opt/cloudera/parcels/KAFKA/bin/kafka-console-producer --broker-list master:9092 --topic test_topic
```

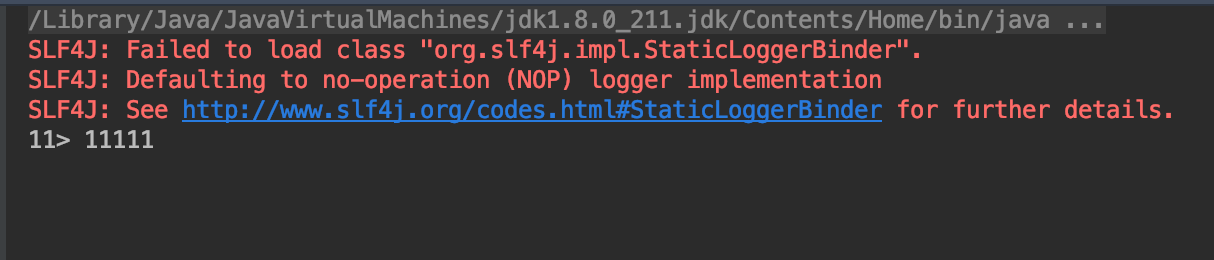

Output
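`input.print()` writes each record to the standard output of the process running the task. In a cluster that is the TaskManager; when run locally, it is the IDE console. When the print operator runs with parallelism greater than 1, Flink prefixes each line with the 1-based index of the subtask that printed it, for example `2> hello`. With parallelism 1 there is no prefix. The helper below only reproduces that line format for illustration; `PrintLineFormat` is a hypothetical name and not Flink code.

```java
/** Hypothetical helper mirroring the line format of DataStream#print(); this is not Flink code. */
public class PrintLineFormat {

    /**
     * @param subtaskIndex        0-based index of the subtask that prints the record
     * @param numParallelSubtasks parallelism of the print operator
     * @param record              the record's toString() value
     * @return the line as it would appear on stdout
     */
    public static String format(int subtaskIndex, int numParallelSubtasks, String record) {
        String prefix = numParallelSubtasks > 1 ? (subtaskIndex + 1) + "> " : "";
        return prefix + record;
    }

    public static void main(String[] args) {
        System.out.println(format(1, 4, "hello")); // prints "2> hello"
        System.out.println(format(0, 1, "hello")); // prints "hello"
    }
}
```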