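Steps

1 Add the Kafka dependency in Maven

2 Write the program that consumes from Kafka

3 Start Kafka and create the topic

4 Start the Flink program, consume from Kafka, and observe the result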

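1 Add the Kafka dependency in Maven

<dependency>
  <groupId>org.apache.flink</groupId>
  <artifactId>flink-connector-kafka-0.10_${scala.binary.version}</artifactId>
  <version>${flink.version}</version>
</dependency>

${scala.binary.version} and ${flink.version} are Maven properties and must be defined in the <properties> section of the pom.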

2 Write the program that consumes from Kafka

package com._51doit.wc

import java.util.Properties

import org.apache.flink.api.common.serialization.SimpleStringSchema
import org.apache.flink.streaming.api.scala.{DataStream, StreamExecutionEnvironment}
import org.apache.flink.streaming.connectors.kafka.FlinkKafkaConsumer010
import org.apache.kafka.clients.consumer.ConsumerConfig

/**
 * @Author: 多易教育-行哥
 * @Date: 2020/6/14
 * @Description: Consumes the flink_demo topic from Kafka and prints each record
 */
object KafkaDemo1 {
  def main(args: Array[String]): Unit = {
    // Import the implicit conversions of Flink's Scala API
    import org.apache.flink.api.scala._

    // Create the streaming execution environment
    val env: StreamExecutionEnvironment = StreamExecutionEnvironment.getExecutionEnvironment

    // Consumer configuration
    val p = new Properties()
    // Addresses of the Kafka brokers
    p.setProperty(ConsumerConfig.BOOTSTRAP_SERVERS_CONFIG, "lx01:9092,lx02:9092,lx03:9092")
    // Consumer group id
    p.setProperty(ConsumerConfig.GROUP_ID_CONFIG, "flinkDemoGroup")
    // If the group has no committed offset yet, start from the beginning of the topic
    p.setProperty(ConsumerConfig.AUTO_OFFSET_RESET_CONFIG, "earliest")

    // Create the consumer; each record value is deserialized as a String
    val consumer = new FlinkKafkaConsumer010[String]("flink_demo", new SimpleStringSchema(), p)

    // Create the DataStream by adding the consumer as a source
    val ds: DataStream[String] = env.addSource(consumer)

    // Print each record
    ds.print()

    // Submit the job; nothing runs until execute is called
    env.execute("consumer-kafka")
  }
}
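The ConsumerConfig constants used in the program are plain string keys, so the same configuration can be sketched without the Kafka client on the classpath. This is only an illustration: the names KafkaProps and consumerProps are hypothetical and not part of Flink or Kafka, and the host names are assumed to be the lx01 to lx03 machines used in this tutorial.

```scala
import java.util.Properties

// Hypothetical helper (not part of Flink or Kafka): builds the consumer
// configuration from literal keys instead of ConsumerConfig constants.
object KafkaProps {
  def consumerProps(brokers: Seq[String], groupId: String): Properties = {
    val p = new Properties()
    // Same key as ConsumerConfig.BOOTSTRAP_SERVERS_CONFIG
    p.setProperty("bootstrap.servers", brokers.mkString(","))
    // Same key as ConsumerConfig.GROUP_ID_CONFIG
    p.setProperty("group.id", groupId)
    // Same key as ConsumerConfig.AUTO_OFFSET_RESET_CONFIG; it only takes
    // effect when the group has no committed offset yet
    p.setProperty("auto.offset.reset", "earliest")
    p
  }
}
```

Passing KafkaProps.consumerProps(Seq("lx01:9092", "lx02:9092", "lx03:9092"), "flinkDemoGroup") as the third constructor argument of FlinkKafkaConsumer010 should configure the consumer with the same group and offset settings as KafkaDemo1.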

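3 Start Kafka and create the topic

3.1 Start the Kafka cluster

Run on each broker node, from the Kafka installation directory:

bin/kafka-server-start.sh -daemon /usr/apps/kafka_2.11-1.1.1/config/server.properties

3.2 Create the topic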

[root@lx01 bin]# ./kafka-topics.sh --zookeeper lx01:2181,lx02:2181,lx03:2181 --create --replication-factor 3 --partitions 3 --topic flink_demo

WARNING: Due to limitations in metric names, topics with a period ('.') or underscore ('_') could collide. To avoid issues it is best to use either, but not both.

Created topic "flink_demo".

3.3 Write data to the topic

./kafka-console-producer.sh --broker-list lx01:9092 --topic flink_demo

>hello kafka

>hello doit

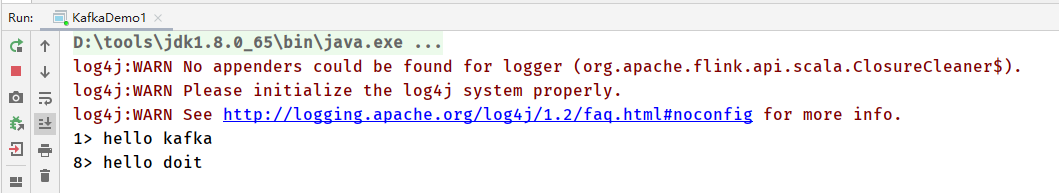

4 Start the Flink program, consume from Kafka, and observe the result