一说到爬虫,大家首先想到用python语言,的确,python有强大的类库,处理数据十分方便。但作为java程序猿,我所了解到,python中的许多功能,java也可以做到,比如,java中有类似于Scrapy的爬虫框架webMagic,他们实现的核心思路都是一样的;java也有词云生成框架KUMO。

今天我们就用java爬取《鸡你太美》这首歌曲的网易云音乐评论,并生成词云。

第一步,创建maven工程,导入jar包依赖。

<dependencies>

<dependency>

<groupId>junit</groupId>

<artifactId>junit</artifactId>

<version>3.8.1</version>

<scope>test</scope>

</dependency>

<!-- httpClient -->

<dependency>

<groupId>org.apache.httpcomponents</groupId>

<artifactId>httpclient</artifactId>

</dependency>

<!-- Jsoup -->

<dependency>

<groupId>org.jsoup</groupId>

<artifactId>jsoup</artifactId>

<version>1.8.3</version>

</dependency>

<!-- 工具包 -->

<dependency>

<groupId>org.apache.commons</groupId>

<artifactId>commons-lang3</artifactId>

<version>3.9</version>

</dependency>

<dependency>

<groupId>org.apache.httpcomponents</groupId>

<artifactId>httpclient</artifactId>

<version>4.5.5</version>

</dependency>

<!-- json转map工具-->

<dependency>

<groupId>com.fasterxml.jackson.core</groupId>

<artifactId>jackson-databind</artifactId>

<version>2.9.3</version>

</dependency>

<!-- java生成词云框架 kumo -->

<dependency>

<groupId>com.kennycason</groupId>

<artifactId>kumo-core</artifactId>

<version>1.13</version>

</dependency>

<!-- java生成词云框架 kumo -->

<dependency>

<groupId>com.kennycason</groupId>

<artifactId>kumo-tokenizers</artifactId>

<version>1.12</version>

</dependency>

</dependencies>2.分析网易云评论是如何获取到的。

在网址栏输入只因你太美的url:“https://music.163.com/#/song?id=444267215”

按F12开发者工具,进入网络选项卡。

经过一番仔细的寻找,评论都在R_SO开头的文件中存着。

参考知乎大神 https://www.zhihu.com/question/36081767 “肖飞”的评论,我们可以发现获取评论的url格式为:

http://music.163.com/api/v1/resource/comments/R_SO_4_444267215?limit=20&offset="+offset;

只需要改变每页的评论数与偏移量offset就可以实现评论的翻页爬取。R_SO_4_444267215是这首歌曲的唯一标识。

分析完了, 动手写代码:

import org.apache.http.client.config.RequestConfig;

import org.apache.http.client.methods.CloseableHttpResponse;

import org.apache.http.client.methods.HttpGet;

import org.apache.http.impl.client.CloseableHttpClient;

import org.apache.http.impl.client.HttpClients;

import org.apache.http.impl.conn.PoolingHttpClientConnectionManager;

import org.apache.http.util.EntityUtils;

import com.fasterxml.jackson.databind.ObjectMapper;

import com.kennycason.kumo.CollisionMode;

import com.kennycason.kumo.WordCloud;

import com.kennycason.kumo.WordFrequency;

import com.kennycason.kumo.bg.CircleBackground;

import com.kennycason.kumo.font.KumoFont;

import com.kennycason.kumo.font.scale.SqrtFontScalar;

import com.kennycason.kumo.nlp.FrequencyAnalyzer;

import com.kennycason.kumo.nlp.tokenizers.ChineseWordTokenizer;

import com.kennycason.kumo.palette.LinearGradientColorPalette;

import java.awt.Color;

import java.awt.Dimension;

import java.io.BufferedWriter;

import java.io.IOException;

import java.io.PrintWriter;

import java.util.HashMap;

import java.util.List;

import java.util.Map;

@SuppressWarnings("static-access")

public class getComments {

private static PoolingHttpClientConnectionManager cm;

public getComments() {

this.cm = new PoolingHttpClientConnectionManager();

// 设置最大连接数

this.cm.setMaxTotal(100);

// 设置每个主机最大连接数

this.cm.setDefaultMaxPerRoute(10);

}

public static void main(String[] args) throws Exception {

// 开启爬虫

doTask();

}

public static void doTask() throws Exception {

int offset = 20;

int count = 0;

BufferedWriter bw = new BufferedWriter(new PrintWriter("D:\\ji.txt"));

while(offset<10000) {

//解析地址

String url = "http://music.163.com/api/v1/resource/comments/R_SO_4_444267215?limit=20&offset="+offset; //首页

String json = doGetJson(url);

count = parse(bw,json,count);

offset+=20;

}

System.out.println("总评论数:"+count);

bw.close();

//生成词云

cerateWordCloud();

}

/**

* 根据请求地址下载页面数据

*

* @param url

* @return 页面数据

*/

public static String doGetJson(String url) {

// 获取httpClient对象

CloseableHttpClient httpClient = HttpClients.custom().setConnectionManager(cm).build();

// 创建httpGet请求对象,设置url地址

HttpGet httpGet = new HttpGet(url);

httpGet.addHeader("User-Agent",

"Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/38.0.2125.122 UBrowser/4.0.3214.0 Safari/537.36");

// 设置请求信息

httpGet.setConfig(getConfig());

// 使用httpClient发起请求,获取响应

CloseableHttpResponse response = null;

try {

response = httpClient.execute(httpGet);

// 解析响应,返回结果

if (response.getStatusLine().getStatusCode() == 200) {

// 判断响应体Entity是否不为空,如果不为空,就可以使用Entityutils

if (response.getEntity() != null) {

String content = EntityUtils.toString(response.getEntity(), "UTF-8");

// System.out.println(content);

return content;

}

}

} catch (IOException e) {

e.printStackTrace();

} finally {

// 关闭response

if (response != null) {

try {

response.close();

} catch (IOException e) {

e.printStackTrace();

}

}

}

// 解析响应,返回结果

return "";

}

@SuppressWarnings("unchecked")

private static Integer parse(BufferedWriter bw, String json,int count) throws Exception {

if (json != null) {

ObjectMapper mapper = new ObjectMapper();

HashMap<String, Object> map = (HashMap<String, Object>) mapper.readValue(json.toString(), Map.class);

List<Map<String, Object>> commentList = (List<Map<String, Object>>) map.get("comments");

System.out.println(commentList.size());

if (commentList != null) {

for (Map<String, Object> comment : commentList) {

//可以遍历评论人的昵称

/*

* Map<String, Object> userMap = (Map<String, Object>) comment.get("user"); for

* (Entry<String, Object> user : userMap.entrySet()) { if

* ("nickname".equals(user.getKey())) { System.out.print(user.getValue() + ":");

* } }

*/

String content = comment.get("content").toString().trim();

bw.write(content);

count++;

}

}

}

return count;

}

private static void cerateWordCloud() throws IOException {

// 建立词频分析器,设置词频,以及词语最短长度,此处的参数配置视情况而定即可

FrequencyAnalyzer frequencyAnalyzer = new FrequencyAnalyzer();

frequencyAnalyzer.setWordFrequenciesToReturn(600);

frequencyAnalyzer.setMinWordLength(2);

// 引入中文解析器

frequencyAnalyzer.setWordTokenizer(new ChineseWordTokenizer());

// 指定文本文件路径,生成词频集合

final List<WordFrequency> wordFrequencyList = frequencyAnalyzer.load("D:\\ji.txt");

// 设置图片分辨率

Dimension dimension = new Dimension(1920, 1080);

// 此处的设置采用内置常量即可,生成词云对象

WordCloud wordCloud = new WordCloud(dimension, CollisionMode.PIXEL_PERFECT);

// 设置边界及字体

wordCloud.setPadding(2);

java.awt.Font font = new java.awt.Font("STSong-Light", 2, 20);

// 设置词云显示的三种颜色,越靠前设置表示词频越高的词语的颜色

wordCloud.setColorPalette(new LinearGradientColorPalette(Color.RED, Color.BLUE, Color.GREEN, 30, 30));

wordCloud.setKumoFont(new KumoFont(font));

// 设置背景色

wordCloud.setBackgroundColor(new Color(255, 255, 255));

// 设置背景图片

// wordCloud.setBackground(new PixelBoundryBackground("E:\\爬虫/google.jpg"));

// 设置背景图层为圆形

wordCloud.setBackground(new CircleBackground(300));

wordCloud.setFontScalar(new SqrtFontScalar(12, 45));

// 生成词云

wordCloud.build(wordFrequencyList);

wordCloud.writeToFile("D:\\wordCloud.png");

System.out.println("词云生成完毕!");

}

// 设置请求信息

private static RequestConfig getConfig() {

RequestConfig config = RequestConfig.custom()

.setConnectTimeout(1000)

.setConnectionRequestTimeout(500)

.setSocketTimeout(10000) //数据传输的最长时间

.build();

return config;

}

}上边代码,我将评论爬取到,通过BufferedWriter装饰类将评论写到了txt文件中,然后在生成词云的时候,再从文件中获取评论。生成词云的代码都是固定不变的,只需要修改文件的路径即可。

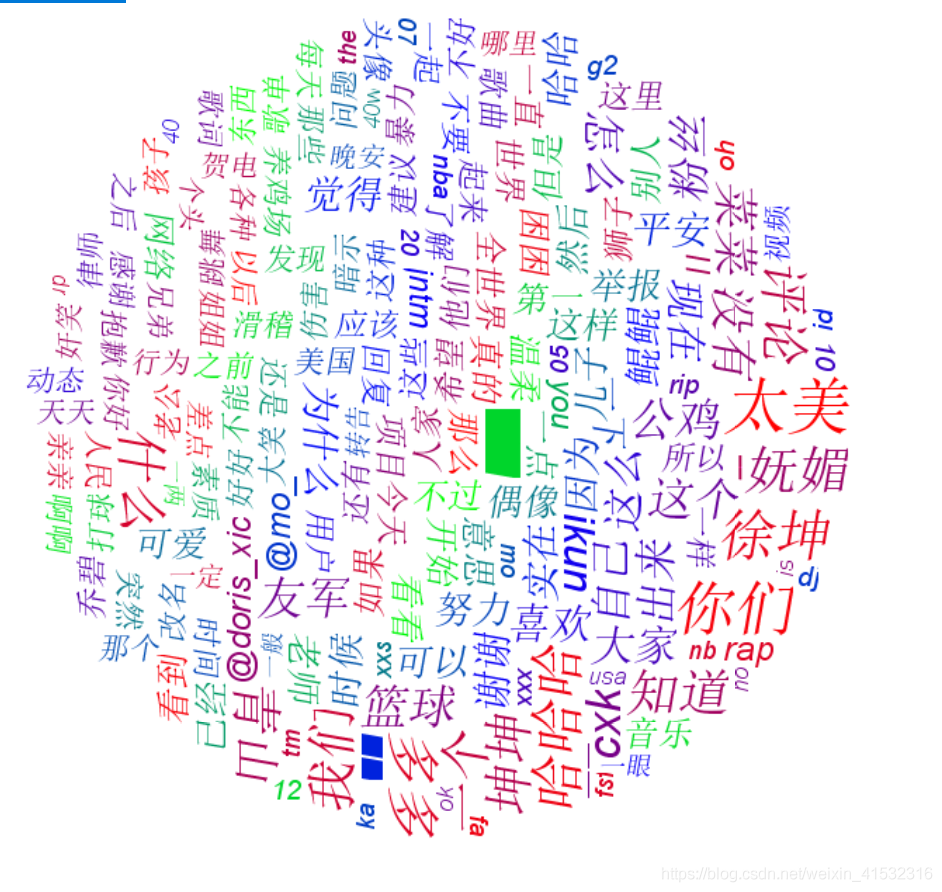

爬取完毕,再D盘下生成了一个名为:“wordCloud.png”的图片,就是词云了。

结果:

觉得有趣的话,请动手试试吧,爬取一下您喜欢的歌曲!