1. Read a file from HDFS and print it to the console
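
`IOUtils.copyBytes` is essentially a buffered read/write loop. Its last argument decides whether both streams are closed when the loop finishes. The stdlib-only sketch below models that loop. `CopySketch` and `copy` are hypothetical names, not Hadoop's source. The sketch also shows why `close` should be `false` when the target is `System.out`: once `System.out` has been closed, later `println` calls print nothing.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.Closeable;
import java.io.IOException;
import java.io.InputStream;
import java.io.OutputStream;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;

// Hypothetical stand-in for the buffered loop behind IOUtils.copyBytes (stdlib only).
final class CopySketch {

    // Copies all bytes from in to out through a bufSize-byte buffer and returns the count.
    // When close is true, both streams are closed afterwards, even if copying fails.
    static long copy(InputStream in, OutputStream out, int bufSize, boolean close) {
        byte[] buf = new byte[bufSize];
        long total = 0;
        try {
            int n;
            while ((n = in.read(buf)) != -1) {
                out.write(buf, 0, n);
                total += n;
            }
            out.flush();
            return total;
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        } finally {
            if (close) {
                closeQuietly(in);
                closeQuietly(out);
            }
        }
    }

    // Closes a stream and ignores any error thrown by close().
    private static void closeQuietly(Closeable c) {
        try {
            c.close();
        } catch (IOException ignored) {
            // nothing useful to do here
        }
    }

    public static void main(String[] args) {
        byte[] csv = "id,name\n1,Tom\n".getBytes(StandardCharsets.UTF_8);
        // close=false: System.out stays open for the println below
        copy(new ByteArrayInputStream(csv), System.out, 4096, false);
        System.out.println("still printing");
    }
}
```

Running `main` prints the CSV text followed by `still printing`. With `close` set to `true`, that last line would be lost.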

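public static void catFile() throws IOException {
    // Create the configuration object
    Configuration conf = new Configuration();
    // Point the client at the HDFS NameNode
    conf.set("fs.defaultFS", "hdfs://master:9000");
    // Get the FileSystem object
    FileSystem fs = FileSystem.get(conf);
    // Open the HDFS file for reading
    FSDataInputStream fis = fs.open(new Path("/user/hadoop/input/people.csv"));
    // Print the file to the console; close=false keeps System.out open
    // (close=true would close System.out as well as fis)
    IOUtils.copyBytes(fis, System.out, conf, false);
    IOUtils.closeStream(fis);
    // Close the file system
    fs.close();
}

2. Read a file from HDFS and save it to a local file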
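
A `FileOutputStream` cannot create missing folders. If `E:\Hadoop\data` does not exist, the constructor throws `FileNotFoundException` before anything is copied. The stdlib-only sketch below first creates the parent folders. It then copies any `InputStream`, such as the `FSDataInputStream` returned by `fs.open`, into a local file. `SaveLocalSketch` and `saveToLocal` are hypothetical names, and `Path` here is `java.nio.file.Path`, not Hadoop's class. The demo writes under the system temp folder instead of the `E:` drive.

```java
import java.io.ByteArrayInputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.nio.file.StandardCopyOption;

// Hypothetical helper: save any InputStream to a local file (stdlib only).
final class SaveLocalSketch {

    // Creates missing parent folders, copies the stream into target (replacing an
    // existing file), closes the input stream, and returns the number of bytes written.
    static long saveToLocal(InputStream in, Path target) {
        try (InputStream src = in) {
            Path parent = target.toAbsolutePath().getParent();
            if (parent != null) {
                Files.createDirectories(parent);
            }
            return Files.copy(src, target, StandardCopyOption.REPLACE_EXISTING);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        // The "data" folder may not exist yet; saveToLocal creates it if needed
        Path target = Paths.get(System.getProperty("java.io.tmpdir"), "hdfs-demo", "data", "aaa.txt");
        byte[] content = "1,Tom\n".getBytes(StandardCharsets.UTF_8);
        long n = saveToLocal(new ByteArrayInputStream(content), target);
        System.out.println(n + " bytes written to " + target);
    }
}
```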

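public static void catFile2() throws IOException {
    // Create the configuration object
    Configuration conf = new Configuration();
    // Point the client at the HDFS NameNode
    conf.set("fs.defaultFS", "hdfs://master:9000");
    // Get the FileSystem object
    FileSystem fs = FileSystem.get(conf);
    // Open the HDFS file for reading
    FSDataInputStream fis = fs.open(new Path("/user/hadoop/input/people.csv"));
    // Local target file; the folder E:\Hadoop\data must already exist
    OutputStream out = new FileOutputStream(new File("E:\\Hadoop\\data\\aaa.txt"));
    // Copy from HDFS into the local file; close=true closes both streams afterwards
    IOUtils.copyBytes(fis, out, conf, true);
    // Close the file system
    fs.close();
}

3. Create a multi-level directory in HDFS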
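
`fs.mkdirs` behaves like `mkdir -p`: it creates every missing parent along the path. That is why one call is enough for the multi-level `/user/hadoop/test_mkdir`. As far as I know, HDFS also returns `true` when the directory already exists. The `exists` check in this section's `mkdir()` method therefore only chooses which message to print. The sketch below is a local-file-system analogue using `Files.createDirectories`, which has the same create-all-parents behavior. `MkdirsSketch` and `ensureDir` are hypothetical names, and the demo path lives under the system temp folder.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;

// Local-file-system analogue of this section's mkdir() method (stdlib only).
final class MkdirsSketch {

    // Returns true if dir was created (with any missing parents), false if it already existed.
    static boolean ensureDir(Path dir) {
        if (Files.isDirectory(dir)) {
            return false;
        }
        try {
            Files.createDirectories(dir); // like mkdir -p: creates every missing level
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return true;
    }

    public static void main(String[] args) {
        Path dir = Paths.get(System.getProperty("java.io.tmpdir"), "mkdirs-demo", "user", "hadoop", "test_mkdir");
        System.out.println(ensureDir(dir) ? "Directory created!" : "Path already exists!");
    }
}
```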

public static void mkdir() throws IOException {
    Configuration conf = new Configuration();
    conf.set("fs.defaultFS", "hdfs://master:9000");
    FileSystem fs = FileSystem.get(conf);
    Path outputDir = new Path("/user/hadoop/test_mkdir");
    if (!fs.exists(outputDir)) { // create the directory only if it does not exist yet
        fs.mkdirs(outputDir); // also creates any missing parent directories
        System.out.println("Directory created!");
    } else {
        System.out.println("Path already exists!");
    }
    fs.close();
}

4. Delete a path in HDFS if it exists
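
`fs.delete(path, true)` removes the path. Because the second argument (`recursive`) is `true`, it also removes everything underneath. With `false`, deleting a non-empty directory fails with an exception. If the path does not exist, the call returns `false` rather than throwing. The stdlib-only sketch below does the same on the local file system: it walks the tree and deletes children before their parents. `DeleteSketch` and `deleteRecursively` are hypothetical names.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.Comparator;
import java.util.List;
import java.util.stream.Collectors;
import java.util.stream.Stream;

// Local-file-system analogue of a recursive delete (stdlib only).
final class DeleteSketch {

    // Deletes path and everything below it. Returns false if nothing existed there.
    static boolean deleteRecursively(Path path) {
        if (!Files.exists(path)) {
            return false;
        }
        try (Stream<Path> walk = Files.walk(path)) {
            // Reverse order puts every child before its parent directory
            List<Path> all = walk.sorted(Comparator.reverseOrder()).collect(Collectors.toList());
            for (Path p : all) {
                Files.delete(p);
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return true;
    }

    public static void main(String[] args) {
        Path root = Paths.get(System.getProperty("java.io.tmpdir"), "delete-demo", "test_delete");
        root.resolve("a").resolve("b").toFile().mkdirs();
        System.out.println(deleteRecursively(root) ? "Deleted successfully!" : "Path does not exist!");
    }
}
```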

public static void delete() throws IOException {
    Configuration conf = new Configuration();
    conf.set("fs.defaultFS", "hdfs://master:9000");
    FileSystem fs = FileSystem.get(conf);
    Path target = new Path("/user/hadoop/test_delete");
    if (fs.exists(target)) { // delete only if the path exists
        fs.delete(target, true); // true = recursive, so non-empty directories are removed too
        System.out.println("Deleted successfully!");
    } else {
        System.out.println("Path does not exist!");
    }
    fs.close();
}

5. Upload a single local file to HDFS
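
Where the uploaded file ends up depends on what already exists at `dstPath`. This is my reading of Hadoop's `FileUtil` copy logic, so treat it as an assumption:
- An existing directory receives the file under its own name (`/user/hadoop/test_mkdir/people.csv`).
- A missing path becomes the file itself, so if `test_mkdir` was never created, `people.csv` is stored as a file named `test_mkdir`.
- An existing file is replaced only when overwriting is enabled, which the three-argument `copyFromLocalFile` does.

The model below encodes that rule with plain strings. The enum and method are hypothetical, not Hadoop API.

```java
// Hypothetical model of where copyFromLocalFile puts an uploaded file (plain strings only).
final class UploadTargetSketch {

    // What currently exists at the destination path.
    enum DstState { MISSING, DIRECTORY, FILE }

    // Returns the path the file named srcName would be written to.
    // Simplification: Hadoop also re-checks dst/srcName itself; that step is skipped here.
    static String resolveTarget(String srcName, String dst, DstState state, boolean overwrite) {
        switch (state) {
            case DIRECTORY:
                // An existing directory receives the file under its own name
                return dst.endsWith("/") ? dst + srcName : dst + "/" + srcName;
            case FILE:
                // An existing file is only replaced when overwriting is allowed
                if (!overwrite) {
                    throw new IllegalStateException("Target " + dst + " already exists");
                }
                return dst;
            default:
                // MISSING: the destination path itself becomes the new file
                return dst;
        }
    }

    public static void main(String[] args) {
        String dst = "/user/hadoop/test_mkdir";
        // prints /user/hadoop/test_mkdir/people.csv
        System.out.println(resolveTarget("people.csv", dst, DstState.DIRECTORY, true));
        // prints /user/hadoop/test_mkdir
        System.out.println(resolveTarget("people.csv", dst, DstState.MISSING, true));
    }
}
```

This is one reason to run the section 3 `mkdir()` step before uploading.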

public static void updata() throws IOException {
    Configuration conf = new Configuration();
    conf.set("fs.defaultFS", "hdfs://master:9000");
    FileSystem fs = FileSystem.get(conf);
    Path srcPath = new Path("E:\\Hadoop\\input\\people.csv"); // local file to upload
    Path dstPath = new Path("/user/hadoop/test_mkdir"); // HDFS target path
    // Copy the local file to HDFS. The first argument (delSrc) says whether to delete the
    // local source afterwards: true deletes it; the two-argument overload uses false
    fs.copyFromLocalFile(false, srcPath, dstPath);
    System.out.println("File uploaded!");
    fs.close();
}

6. Upload multiple local files to HDFS
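
With several sources, my understanding of Hadoop's `FileUtil.copy` is this: the destination must already be an existing directory, otherwise the call fails with an `IOException`. Each source, directories included, is then copied into it under its own name. For this section, that means `E:\Hadoop\input` and `E:\Hadoop\data` should end up as `/user/hadoop/test_mkdir/input` and `/user/hadoop/test_mkdir/data`. The sketch below only computes those target paths with plain strings. `MultiUploadSketch` and `plan` are hypothetical names, not Hadoop API.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Collections;
import java.util.List;

// Hypothetical model of the targets for a multi-source upload (plain strings only).
final class MultiUploadSketch {

    // Returns where each source would be copied. With more than one source, dst must be
    // an existing directory; each source then keeps its last path component as its name.
    static List<String> plan(List<String> srcs, String dst, boolean dstIsExistingDir) {
        if (!dstIsExistingDir) {
            if (srcs.size() == 1) {
                return Collections.singletonList(dst); // single source: dst is the new name
            }
            throw new IllegalArgumentException(
                    "copying multiple files, but " + dst + " is not an existing directory");
        }
        List<String> targets = new ArrayList<>();
        for (String src : srcs) {
            String normalized = src.replace('\\', '/'); // accept Windows-style local paths
            String name = normalized.substring(normalized.lastIndexOf('/') + 1);
            targets.add(dst + "/" + name);
        }
        return targets;
    }

    public static void main(String[] args) {
        List<String> srcs = Arrays.asList("E:\\Hadoop\\input", "E:\\Hadoop\\data");
        // prints [/user/hadoop/test_mkdir/input, /user/hadoop/test_mkdir/data]
        System.out.println(plan(srcs, "/user/hadoop/test_mkdir", true));
    }
}
```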

public static void updata2() throws IOException {
    Configuration conf = new Configuration();
    conf.set("fs.defaultFS", "hdfs://master:9000");
    FileSystem fs = FileSystem.get(conf);
    // Local sources (folders here); each is copied into dstPath under its own name
    Path[] paths = new Path[] {new Path("E:\\Hadoop\\input"), new Path("E:\\Hadoop\\data")};
    Path dstPath = new Path("/user/hadoop/test_mkdir"); // HDFS target directory
    // delSrc=false keeps the local sources; overwrite=true replaces existing files in HDFS
    fs.copyFromLocalFile(false, true, paths, dstPath);
    System.out.println("Files uploaded!");
    fs.close();
}

7. Download a file from HDFS to the local disk
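
`copyToLocalFile(false, srcPath, dstPath)` writes through Hadoop's checksummed local file system. As a result, the local folder usually also gets a hidden `.people.csv.crc` checksum file next to the downloaded `people.csv`. The four-argument overload `copyToLocalFile(false, srcPath, dstPath, true)` uses the raw local file system and skips that file. The sketch below only encodes the side-file naming convention: `"." + name + ".crc"` in the same folder, as I understand `ChecksumFileSystem` names it. `CrcNameSketch` and its methods are hypothetical.

```java
import java.util.Arrays;
import java.util.Collections;
import java.util.List;

// Hypothetical helpers describing the files a download leaves in the local folder.
final class CrcNameSketch {

    // Checksum side file for a data file: "." + name + ".crc" in the same folder.
    static String checksumFileName(String fileName) {
        return "." + fileName + ".crc";
    }

    // Files expected in the local folder after downloading fileName.
    // useRawLocalFileSystem mirrors the last argument of the four-argument copyToLocalFile.
    static List<String> expectedLocalFiles(String fileName, boolean useRawLocalFileSystem) {
        if (useRawLocalFileSystem) {
            return Collections.singletonList(fileName);
        }
        return Arrays.asList(fileName, checksumFileName(fileName));
    }

    public static void main(String[] args) {
        // prints [people.csv, .people.csv.crc]
        System.out.println(expectedLocalFiles("people.csv", false));
    }
}
```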

public static void download() throws IOException {
    Configuration conf = new Configuration();
    conf.set("fs.defaultFS", "hdfs://master:9000");
    FileSystem fs = FileSystem.get(conf);
    Path dstPath = new Path("E:\\Hadoop\\data"); // local destination folder
    Path srcPath = new Path("/user/hadoop/test_mkdir/people.csv"); // HDFS source file
    // delSrc=false keeps the file in HDFS after downloading
    fs.copyToLocalFile(false, srcPath, dstPath);
    System.out.println("File downloaded!");
    fs.close();
}

8. Create a file in HDFS and write content to it
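
`"hello guiyang".getBytes()` uses the platform's default charset, so non-ASCII text can be encoded differently depending on the machine running the client. `FSDataOutputStream` is an ordinary `java.io.OutputStream`. It can therefore be wrapped in a `Writer` with an explicit charset and closed by try-with-resources. The stdlib-only sketch below shows that wrapping against a generic `OutputStream`. `WriteTextSketch` and `writeLines` are hypothetical names; with HDFS, the argument would be the stream returned by `fs.create(...)`.

```java
import java.io.BufferedWriter;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.OutputStream;
import java.io.OutputStreamWriter;
import java.io.UncheckedIOException;
import java.io.Writer;
import java.nio.charset.StandardCharsets;
import java.util.Arrays;
import java.util.List;

// Hypothetical helper: write text lines as UTF-8 to any OutputStream (stdlib only).
final class WriteTextSketch {

    // Writes each line followed by '\n', then closes the writer and the stream under it.
    static void writeLines(OutputStream out, List<String> lines) {
        try (Writer w = new BufferedWriter(new OutputStreamWriter(out, StandardCharsets.UTF_8))) {
            for (String line : lines) {
                w.write(line);
                w.write('\n');
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        // With HDFS this would be the stream returned by fs.create(new Path(...))
        ByteArrayOutputStream buf = new ByteArrayOutputStream();
        writeLines(buf, Arrays.asList("hello guiyang"));
        System.out.println(buf.size() + " bytes written"); // prints "14 bytes written"
    }
}
```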

public static void touchFileWith() throws Exception{

Configuration conf = new Configuration();

conf.set("fs.defaultFS","hdfs://master:9000");

FileSystem fs = FileSystem.get(conf);

FSDataOutputStream fos=fs.create(new Path("/user/hadoop/test.txt"));

fos.write("hello guiyang".getBytes());

fs.close();

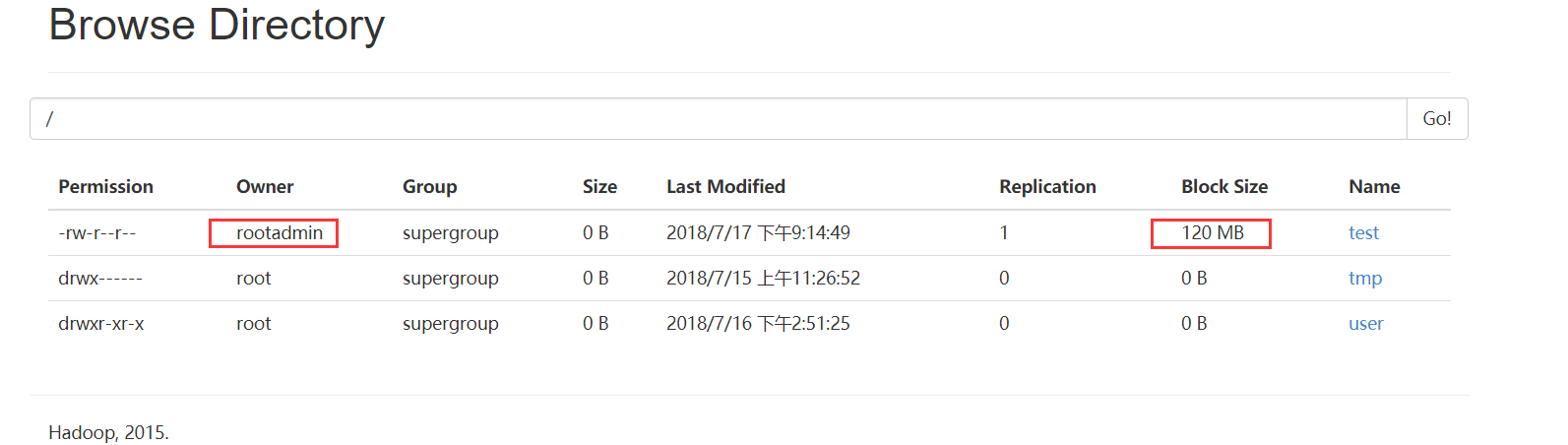

}九、在HDFS创建文件并实现自定义Owner、块大小、副本数

import java.io.IOException;
import java.net.URI;
import java.net.URISyntaxException;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;

public class HDFSClient {

    public static FileSystem getFs() {
        Configuration conf = new Configuration();
        conf.set("dfs.blocksize", "120M"); // custom block size (120 MB) for files created by this client
        // conf.set("fs.defaultFS", "hdfs://master:9000");
        conf.set("dfs.replication", "1"); // custom replication factor
        FileSystem fs = null;
        try {
            // Custom owner: connect as user "rootadmin", who owns the files created here
            fs = FileSystem.get(new URI("hdfs://master:9000"), conf, "rootadmin");
        } catch (IOException | InterruptedException | URISyntaxException e) {
            e.printStackTrace();
        }
        return fs;
    }

    public static void close(FileSystem fs) { // close the file system
        if (fs != null) {
            try {
                fs.close();
            } catch (IOException e) {
                e.printStackTrace();
            }
        }
    }

    public static void test() throws IllegalArgumentException, IOException {
        FileSystem fs = HDFSClient.getFs();
        if (fs == null) {
            System.out.println("Could not connect to HDFS.");
            return;
        }
        // Create an empty file /test and close the stream so the file is finalized
        fs.create(new Path("/test")).close();
        System.out.println("Finished!");
        HDFSClient.close(fs);
    }

    public static void main(String[] args) throws IOException {
        // Entry point
        test();
    }
}

Open the NameNode web UI file browser at http://192.168.228.140:50070/explorer.html#/ to see the newly created /test file.
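
The two settings in `getFs` apply to files that this client creates. As far as I know, Hadoop reads `dfs.blocksize` with binary size prefixes, so `120M` means 120 × 1024 × 1024 = 125,829,120 bytes. A file is split into ⌈size / blockSize⌉ blocks, and only the last block is partially filled. The cluster stores `size × replication` bytes in total. The stdlib-only sketch below works through that arithmetic. `BlockMathSketch` and its methods are hypothetical, and `parseSize` handles only the `k`/`m`/`g`/`t` suffixes.

```java
// Hypothetical helpers for HDFS block and storage arithmetic (stdlib only).
final class BlockMathSketch {

    // Parses sizes such as "120M" or "128m" with binary prefixes k, m, g, t
    // (case-insensitive); a plain number is taken as bytes.
    static long parseSize(String s) {
        String t = s.trim();
        char last = Character.toLowerCase(t.charAt(t.length() - 1));
        int shift;
        switch (last) {
            case 'k': shift = 10; break;
            case 'm': shift = 20; break;
            case 'g': shift = 30; break;
            case 't': shift = 40; break;
            default: return Long.parseLong(t);
        }
        return Long.parseLong(t.substring(0, t.length() - 1)) << shift;
    }

    // Number of blocks a file of fileSize bytes occupies; the last one may be partial.
    static long blockCount(long fileSize, long blockSize) {
        if (fileSize == 0) {
            return 0;
        }
        return (fileSize + blockSize - 1) / blockSize;
    }

    // Raw bytes stored across the cluster: every byte is kept `replication` times.
    static long rawBytes(long fileSize, int replication) {
        return fileSize * replication;
    }

    public static void main(String[] args) {
        long blockSize = parseSize("120M");
        long fileSize = parseSize("300M");
        System.out.println(blockSize);                       // 125829120
        System.out.println(blockCount(fileSize, blockSize)); // 3
        System.out.println(rawBytes(fileSize, 1));           // 314572800
        System.out.println(rawBytes(fileSize, 3));           // 943718400
    }
}
```

For a 300 MB file with the `120M` block size, this gives three blocks (120 MB + 120 MB + 60 MB). With `dfs.replication` set to `1`, raw usage equals the file size. The default replication factor of 3 would triple it.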

Appendix: complete source code

package HT;

import java.io.File;
import java.io.FileOutputStream;
import java.io.IOException;
import java.io.OutputStream;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FSDataInputStream;
import org.apache.hadoop.fs.FSDataOutputStream;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.IOUtils;

public class HT {

    public static void main(String[] args) throws IOException {
        // Uncomment the operations you want to run
        // catFile();
        // catFile2();
        mkdir();
        // delete();
        // updata();
        updata2();
        // download();
        // test(); // defined in HDFSClient (section 9), not in this class
    }

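    /*
     * catFile()
     * Read a file from HDFS and print it to the console
     */
    public static void catFile() throws IOException {
        // Create the configuration object
        Configuration conf = new Configuration();
        // Point the client at the HDFS NameNode
        conf.set("fs.defaultFS", "hdfs://master:9000");
        // Get the FileSystem object
        FileSystem fs = FileSystem.get(conf);
        // Open the HDFS file for reading
        FSDataInputStream fis = fs.open(new Path("/user/hadoop/input/people.csv"));
        // Print the file to the console; close=false keeps System.out open
        IOUtils.copyBytes(fis, System.out, conf, false);
        IOUtils.closeStream(fis);
        // Close the file system
        fs.close();
    }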

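    /*
     * catFile2()
     * Read a file from HDFS and save it to a local file
     */
    public static void catFile2() throws IOException {
        // Create the configuration object
        Configuration conf = new Configuration();
        // Point the client at the HDFS NameNode
        conf.set("fs.defaultFS", "hdfs://master:9000");
        // Get the FileSystem object
        FileSystem fs = FileSystem.get(conf);
        // Open the HDFS file for reading
        FSDataInputStream fis = fs.open(new Path("/user/hadoop/input/people.csv"));
        // Local target file; the folder E:\Hadoop\data must already exist
        OutputStream out = new FileOutputStream(new File("E:\\Hadoop\\data\\aaa.txt"));
        // Copy from HDFS into the local file; close=true closes both streams afterwards
        IOUtils.copyBytes(fis, out, conf, true);
        // Close the file system
        fs.close();
    }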

    /*
     * mkdir()
     * Create a multi-level directory in HDFS
     */
    public static void mkdir() throws IOException {
        Configuration conf = new Configuration();
        conf.set("fs.defaultFS", "hdfs://master:9000");
        FileSystem fs = FileSystem.get(conf);
        Path outputDir = new Path("/user/hadoop/test_mkdir");
        if (!fs.exists(outputDir)) { // create the directory only if it does not exist yet
            fs.mkdirs(outputDir); // also creates any missing parent directories
            System.out.println("Directory created!");
        } else {
            System.out.println("Path already exists!");
        }
        fs.close();
    }

    /*
     * delete()
     * Delete a path in HDFS if it exists
     */
    public static void delete() throws IOException {
        Configuration conf = new Configuration();
        conf.set("fs.defaultFS", "hdfs://master:9000");
        FileSystem fs = FileSystem.get(conf);
        Path target = new Path("/user/hadoop/test_delete");
        if (fs.exists(target)) { // delete only if the path exists
            fs.delete(target, true); // true = recursive
            System.out.println("Deleted successfully!");
        } else {
            System.out.println("Path does not exist!");
        }
        fs.close();
    }

    /*
     * updata()
     * Upload a single local file to HDFS
     */
    public static void updata() throws IOException {
        Configuration conf = new Configuration();
        conf.set("fs.defaultFS", "hdfs://master:9000");
        FileSystem fs = FileSystem.get(conf);
        Path srcPath = new Path("E:\\Hadoop\\input\\people.csv"); // local file to upload
        Path dstPath = new Path("/user/hadoop/test_mkdir"); // HDFS target path
        // delSrc=false keeps the local source file; true would delete it after copying
        fs.copyFromLocalFile(false, srcPath, dstPath);
        System.out.println("File uploaded!");
        fs.close();
    }

    /*
     * updata2()
     * Upload multiple local files to HDFS
     */
    public static void updata2() throws IOException {
        Configuration conf = new Configuration();
        conf.set("fs.defaultFS", "hdfs://master:9000");
        FileSystem fs = FileSystem.get(conf);
        Path[] paths = new Path[] {new Path("E:\\Hadoop\\input"), new Path("E:\\Hadoop\\data")};
        Path dstPath = new Path("/user/hadoop/test_mkdir"); // HDFS target directory
        // delSrc=false keeps the local sources; overwrite=true replaces existing files in HDFS
        fs.copyFromLocalFile(false, true, paths, dstPath);
        System.out.println("Files uploaded!");
        fs.close();
    }

    /*
     * download()
     * Download a file from HDFS to the local disk
     */
    public static void download() throws IOException {
        Configuration conf = new Configuration();
        conf.set("fs.defaultFS", "hdfs://master:9000");
        FileSystem fs = FileSystem.get(conf);
        Path dstPath = new Path("E:\\Hadoop\\data"); // local destination folder
        Path srcPath = new Path("/user/hadoop/test_mkdir/people.csv"); // HDFS source file
        // delSrc=false keeps the file in HDFS after downloading
        fs.copyToLocalFile(false, srcPath, dstPath);
        System.out.println("File downloaded!");
        fs.close();
    }

    /*
     * touchFileWith()
     * Create a file in HDFS and write content to it
     */
    public static void touchFileWith() throws Exception {
        Configuration conf = new Configuration();
        conf.set("fs.defaultFS", "hdfs://master:9000");
        FileSystem fs = FileSystem.get(conf);
        FSDataOutputStream fos = fs.create(new Path("/user/hadoop/test.txt"));
        fos.write("hello guiyang".getBytes("UTF-8"));
        fos.close(); // flush the data and finalize the file
        fs.close();
    }
}