Docker is being used more and more these days, so let's keep up. Here we go.

First, create a directory to hold the files needed later, such as the Hadoop and JDK installation packages.

My directory layout is as follows:


zhenghui@F117:/soft/code/hadoopImages$ pwd

/soft/code/hadoopImages


zhenghui@F117:/soft/code/hadoopImages$ ll

total 404136

drwxr-xr-x 2 zhenghui zhenghui 4096 Feb 12 09:26 ./

drwxr-xr-x 7 zhenghui zhenghui 4096 Feb 12 08:44 ../

-rw-r--r-- 1 zhenghui zhenghui 1083 Feb 12 09:08 Dockerfile

-rwxrwxrwx 1 zhenghui zhenghui 218720521 Oct 2 20:38 hadoop-2.7.7.tar.gz*

-rwxrwxrwx 1 zhenghui zhenghui 195094741 Oct 3 11:42 jdk-8u221-linux-x64.tar.gz*


Edit the Dockerfile

zhenghui@F117:/soft/code/hadoopImages$ sudo vim Dockerfile

Its content is as follows:

FROM centos:7

MAINTAINER zhenghui<[email protected]>

ADD hadoop-2.7.7.tar.gz /usr/local/

ADD jdk-8u221-linux-x64.tar.gz /usr/local/

RUN yum -y install vim

RUN yum -y install net-tools

ENV MYLOGINPATH /usr/local

WORKDIR $MYLOGINPATH

ENV JAVA_HOME /usr/local/jdk1.8.0_221

ENV CLASSPATH $JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar

ENV HADOOP_HOME /usr/local/hadoop-2.7.7

ENV PATH $PATH:$JAVA_HOME/bin:$HADOOP_HOME/bin:$HADOOP_HOME/sbin

EXPOSE 22

EXPOSE 50010

EXPOSE 50075

EXPOSE 50475

EXPOSE 50020

EXPOSE 50070

EXPOSE 50470

EXPOSE 8020

EXPOSE 8485

EXPOSE 8019

EXPOSE 8032

EXPOSE 8030

EXPOSE 8031

EXPOSE 8033

EXPOSE 8088

EXPOSE 8040

EXPOSE 8041

EXPOSE 10020

EXPOSE 19888

EXPOSE 60000

EXPOSE 60010

EXPOSE 60020

EXPOSE 60030

EXPOSE 9083

EXPOSE 10000

EXPOSE 2181

EXPOSE 2888

EXPOSE 3888
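With this many EXPOSE lines it is easy to list a port twice. As a rough sketch, a small shell helper can report ports that appear in more than one EXPOSE instruction. The function name `list_duplicate_exposes` is made up for this illustration and is not part of Docker:

```shell
# list_duplicate_exposes FILE
# Prints every port that is listed more than once across EXPOSE instructions.
# (Hypothetical helper, written for this walkthrough.)
list_duplicate_exposes() {
    # Keep only EXPOSE lines, print each port argument on its own line,
    # then let sort and uniq report the values seen more than once.
    awk '$1 == "EXPOSE" { for (i = 2; i <= NF; i++) print $i }' "$1" \
        | sort -n | uniq -d
}
```

Running `list_duplicate_exposes Dockerfile` in the build directory prints nothing when every port is listed exactly once.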

Build the image

zhenghui@F117:/soft/code/hadoopImages$ sudo docker build -f Dockerfile -t myhadoop:0.1 .

Check that the build finished


zhenghui@F117:/soft/code/hadoopImages$ sudo docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

myhadoop 0.1 21e9954de389 25 minutes ago 1.22GB

centos 7 5e35e350aded 3 months ago 203MB


Run a container from the Hadoop image you just built

zhenghui@F117:/soft/code/hadoopImages$ sudo docker run -itd --name myhd -p 2222:22 myhadoop:0.1

Check that it started successfully


zhenghui@F117:/soft/code/hadoopImages$ sudo docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

78d601d004df myhadoop:0.1 "/bin/bash" 23 minutes ago Up 23 minutes 2181/tcp, 2888/tcp, 3888/tcp, 8019-8020/tcp, 8030-8033/tcp, 8040-8041/tcp, 8088/tcp, 8485/tcp, 9083/tcp, 10000/tcp, 10020/tcp, 19888/tcp, 50010/tcp, 50020/tcp, 50070/tcp, 50075/tcp, 50470/tcp, 50475/tcp, 60000/tcp, 60010/tcp, 60020/tcp, 60030/tcp, 0.0.0.0:2222->22/tcp myhd
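If you would rather not read the `docker ps` table by eye, the check can be scripted. This is a minimal sketch; `container_is_running` is a hypothetical helper name, and it calls `docker` without `sudo`, so it assumes your user is allowed to talk to the Docker daemon:

```shell
# container_is_running NAME
# Succeeds when `docker ps` lists a running container with exactly this name.
# (Hypothetical helper; assumes docker can be run without sudo.)
container_is_running() {
    # The name filter matches substrings, so compare whole lines afterwards.
    docker ps --filter "name=$1" --format '{{.Names}}' | grep -qxF -- "$1"
}
```

For example: `container_is_running myhd && echo "myhd is up"`.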


Enter the container


zhenghui@F117:/soft/code/hadoopImages$ sudo docker exec -it myhd /bin/bash

Check that you are inside the container


zhenghui@F117:/soft/code/hadoopImages$ sudo docker exec -it myhd /bin/bash

[root@78d601d004df local]#

[root@78d601d004df local]#

[root@78d601d004df local]# pwd

/usr/local

[root@78d601d004df local]#

You can see that the current directory now contains the hadoop and jdk directories

[root@78d601d004df local]#

[root@78d601d004df local]# ll

total 52

drwxr-xr-x 2 root root 4096 Apr 11 2018 bin

drwxr-xr-x 2 root root 4096 Apr 11 2018 etc

drwxr-xr-x 2 root root 4096 Apr 11 2018 games

drwxr-xr-x 1 1000 ftp 4096 Feb 12 01:21 hadoop-2.7.7

drwxr-xr-x 2 root root 4096 Apr 11 2018 include

drwxr-xr-x 7 10 143 4096 Jul 4 2019 jdk1.8.0_221

drwxr-xr-x 2 root root 4096 Apr 11 2018 lib

drwxr-xr-x 2 root root 4096 Apr 11 2018 lib64

drwxr-xr-x 2 root root 4096 Apr 11 2018 libexec

drwxr-xr-x 2 root root 4096 Apr 11 2018 sbin

drwxr-xr-x 5 root root 4096 Oct 1 01:15 share

drwxr-xr-x 2 root root 4096 Apr 11 2018 src

[root@78d601d004df local]#

Check that the environment variables were set up when the image was built

[root@78d601d004df local]# java -version

java version "1.8.0_221"

Java(TM) SE Runtime Environment (build 1.8.0_221-b11)

Java HotSpot(TM) 64-Bit Server VM (build 25.221-b11, mixed mode)

[root@78d601d004df local]#

[root@78d601d004df local]# hadoop version

Hadoop 2.7.7

Subversion Unknown -r c1aad84bd27cd79c3d1a7dd58202a8c3ee1ed3ac

Compiled by stevel on 2018-07-18T22:47Z

Compiled with protoc 2.5.0

From source with checksum 792e15d20b12c74bd6f19a1fb886490

This command was run using /usr/local/hadoop-2.7.7/share/hadoop/common/hadoop-common-2.7.7.jar

[root@78d601d004df local]#

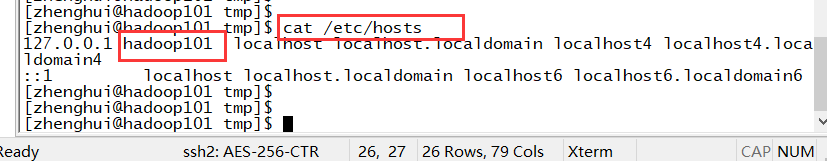

Configure the hosts mapping file for Hadoop

[root@78d601d004df local]# vim /etc/hosts

Add a mapping from the container's own IP to the hostname; otherwise Hadoop will fail to start later

172.17.0.3 hadoop101

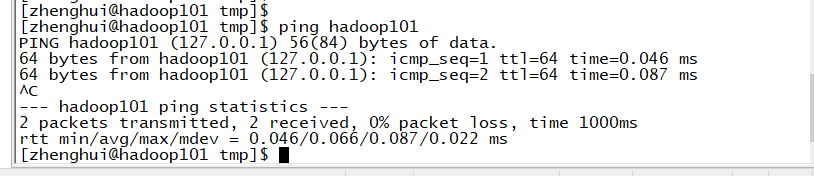
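The same mapping can be added without opening an editor. The sketch below appends the line only if it is not already present. `add_host_mapping` is a hypothetical helper name, and the optional third argument exists only so it can be tried on a scratch file first:

```shell
# add_host_mapping IP NAME [FILE]
# Appends "IP NAME" to FILE (default /etc/hosts) unless it is already mapped.
# (Hypothetical helper, written for this walkthrough.)
add_host_mapping() {
    ip=$1
    name=$2
    file=${3:-/etc/hosts}
    # Do nothing when some line already maps this IP to this name.
    if awk -v ip="$ip" -v name="$name" '
            $1 == ip { for (i = 2; i <= NF; i++) if ($i == name) found = 1 }
            END { exit !found }' "$file"; then
        return 0
    fi
    printf '%s %s\n' "$ip" "$name" >> "$file"
}
```

Inside the container this would be called as `add_host_mapping 172.17.0.3 hadoop101`. As far as I know, Docker manages `/etc/hosts` itself and manual edits may be lost when the container restarts, so passing `--hostname hadoop101` or `--add-host` to `docker run` may be the more durable option.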

Check that the mapping works

[root@78d601d004df local]#

[root@78d601d004df local]# ping hadoop101

PING localhost (127.0.0.1) 56(84) bytes of data.

64 bytes from localhost (127.0.0.1): icmp_seq=1 ttl=64 time=0.033 ms

64 bytes from localhost (127.0.0.1): icmp_seq=2 ttl=64 time=0.055 ms

64 bytes from localhost (127.0.0.1): icmp_seq=3 ttl=64 time=0.052 ms

^C

--- localhost ping statistics ---

3 packets transmitted, 3 received, 0% packet loss, time 2041ms

rtt min/avg/max/mdev = 0.033/0.046/0.055/0.012 ms

[root@78d601d004df local]#

Configure the Hadoop configuration files

Change to this directory:

[root@78d601d004df hadoop]# pwd

/usr/local/hadoop-2.7.7/etc/hadoop

[root@78d601d004df hadoop]#

Edit hdfs-site.xml

Its content is as follows:

<configuration>

<!-- Number of HDFS replicas. The default is 3; set to 1 because there is only one node for now -->

<property>

<name>dfs.replication</name>

<value>1</value>

</property>

</configuration>
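If you prefer to generate the file instead of editing it by hand, a here-document works. This is only a sketch: `write_hdfs_site` is a hypothetical helper, and it overwrites any existing `hdfs-site.xml` in the target directory.

```shell
# write_hdfs_site CONF_DIR [REPLICATION]
# Writes CONF_DIR/hdfs-site.xml with the given replication factor (default 1).
# (Hypothetical helper; it replaces the file if one already exists.)
write_hdfs_site() {
    conf_dir=$1
    replication=${2:-1}
    cat > "$conf_dir/hdfs-site.xml" <<EOF
<?xml version="1.0" encoding="UTF-8"?>
<configuration>
  <!-- Number of HDFS replicas; 1 is enough for a single node -->
  <property>
    <name>dfs.replication</name>
    <value>$replication</value>
  </property>
</configuration>
EOF
}
```

In this setup the call would be `write_hdfs_site /usr/local/hadoop-2.7.7/etc/hadoop`.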

Configure core-site.xml

vi core-site.xml

Add the following content:

<configuration>

<!-- Address of the NameNode in HDFS -->

<property>

<name>fs.defaultFS</name>

<value>hdfs://hadoop101:9000</value>

</property>

<!-- Directory where Hadoop stores the files it generates at runtime -->

<property>

<name>hadoop.tmp.dir</name>

<value>/usr/local/hadoop-2.7.7/data/tmp</value>

</property>

</configuration>
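To confirm that Hadoop actually picks up this setting, you can ask it for the effective value with `hdfs getconf`. A small sketch follows; `default_fs_matches` is a hypothetical wrapper name:

```shell
# default_fs_matches EXPECTED_URI
# Succeeds when the fs.defaultFS value Hadoop resolves equals EXPECTED_URI.
# (Hypothetical wrapper around `hdfs getconf -confKey`.)
default_fs_matches() {
    actual=$(hdfs getconf -confKey fs.defaultFS) || return 1
    [ "$actual" = "$1" ]
}
```

For example: `default_fs_matches hdfs://hadoop101:9000 || echo "core-site.xml was not picked up"`.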

Start Hadoop

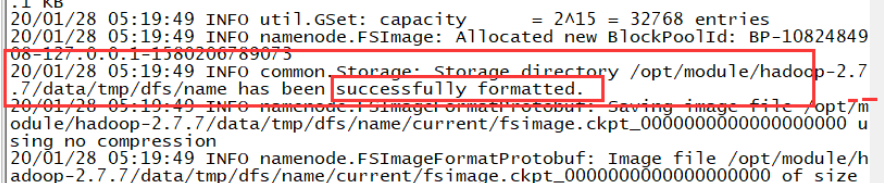

1. Format the NameNode

hdfs namenode -format

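One thing to watch out for when running the format command:

the hadoop101 in hdfs://hadoop101:9000 must be reachable with ping, otherwise the command will hang indefinitely.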
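A quick preflight check can catch a missing hosts entry before the format command hangs. The sketch below only tests name resolution, which is weaker than a real ping. `host_resolves` is a hypothetical helper, and it assumes `getent` is available in the container:

```shell
# host_resolves NAME
# Succeeds when NAME resolves through the system's hosts/DNS lookup.
# (Hypothetical helper; checks resolution only, not reachability.)
host_resolves() {
    getent hosts "$1" > /dev/null
}
```

For example: `host_resolves hadoop101 || echo "add hadoop101 to /etc/hosts first"`.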

If your output matches the result in my screenshot, the format succeeded

2. Start the NameNode and DataNode

hadoop-daemon.sh start namenode

hadoop-daemon.sh start datanode

Run jps; if the following processes are listed, startup succeeded

[zhenghui@hadoop101 hadoop-2.7.7]$ jps

10582 NameNode

10726 Jps

10649 DataNode
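The same check can be scripted by looking for both process names in the `jps` output. This is a sketch under the assumption that `jps` is on the PATH (it ships with the JDK); `hdfs_daemons_up` is a hypothetical name:

```shell
# hdfs_daemons_up
# Succeeds when `jps` lists both a NameNode and a DataNode process.
# (Hypothetical helper, written for this walkthrough.)
hdfs_daemons_up() {
    out=$(jps) || return 1
    for daemon in NameNode DataNode; do
        # Compare the second column exactly, so SecondaryNameNode alone
        # does not count as a NameNode.
        printf '%s\n' "$out" \
            | awk -v d="$daemon" '$2 == d { ok = 1 } END { exit !ok }' \
            || return 1
    done
}
```

For example: `hdfs_daemons_up && echo "HDFS daemons are running"`.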

Test

Open http://ip:50070/ in a browser

For example, mine is http://172.17.0.3:50070/
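The web UI can also be probed from the command line on the Docker host. This is a minimal sketch that assumes `curl` is installed; `namenode_ui_up` is a hypothetical helper, and the default port 50070 is the one used in this walkthrough:

```shell
# namenode_ui_up HOST [PORT]
# Succeeds when the NameNode web UI answers over HTTP on HOST:PORT.
# (Hypothetical helper; PORT defaults to 50070.)
namenode_ui_up() {
    # -f: treat HTTP errors as failure, -sS: quiet but still print errors,
    # --max-time: give up instead of hanging on an unreachable address.
    curl -fsS --max-time 5 -o /dev/null "http://$1:${2:-50070}/"
}
```

For example: `namenode_ui_up 172.17.0.3 && echo "NameNode UI is reachable"`.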