一、Dataloader与Dataset

数据:

- 数据收集:原始数据(img), 标签(label)

- 数据划分:训练集(训练模型),验证集(验证模型是否过拟合),测试集(测试模型性能)

- 数据读取:DataLoader

- Sampler:生成索引,也就是样本的序号

- DataSet:根据索引读取样本和标签

- 数据预处理:transforms

1.1 torch.utils.data.DataLoader

DataLoader( dataset,

batch_size=1,

shuffle=False,

sampler=None,

batch_sampler=None,

num_workers=0,

collate_fn=None,

pin_memory=False,

drop_last=False,

timeout=0,

worker_init_fn=None,

multiprocessing_context=None)

功能: 构建可迭代的数据装载器

- dataset : Dataset类,决定数据从哪读取及如何读取

- batchsize : 批大小

- num_works: 是否采用多进程读取数据

- shuffle: 每个epoch是否乱序

- drop_last: 当样本数不能被batchsize整除时,是否舍弃最后一批数据

Epoch, Iteration 和 Batchsize之间的关系:

- Epoch: 所有训练样本都已输入到模型中,称为一个Epoch

- Iteration: 一批样本输入到模型中,称之为一个lteration

- Batchsize: 批大小,决定一个Epoch有多少个Iteration

样本总数: 80, Batchsize: 8

1 Epoch = 10 Iteration

样本总数: 87, Batchsize: 8

1 Epoch = 10 Iteration ? drop last = True

1 Epoch = 11 Iteration ? drop last = False

1.2 torch.utils.data.Dataset

class Dataset(object):

def getitem (self, index):

raise NotImplementedError

def add (self, other):

return ConcatDataset([self, other1)

功能: Dataset抽象类,所有自定义的 Dataset需要继承它,并重写_getitem__()

- getitem接收一个索引,返回一个样本

1.3 Pytorch的数据读取机制

数据读取的三个主要问题:

pytorch中的数据读取机制:

DataLoader中根据num_works,选择单进程还是多进程读取机制,即选择相应的DataLoaderIter。

在DataLoaderIter中的__next__()方法获取,而其中_next_index()方法读取索引,dataset_fetcher.fetch()方法根据得到的索引来获取数据

- _next_index()方法读取数据的索引:

它其中使用到了sampler的__iter__()方法,该方法给出每个batchsize该读那些数据,即数据的索引,所以,数据的索引最终是由sampler的__iter__()方法得到的 - dataset_fetcher.fetch()方法获取数据:

dataset_fetcher的fetch()方法中实现了具体的数据读取,即通过Dataset中的getitem来得到具体的数据和标签,然后fetch方法将得到的数据和标签通过collate方法整合起来,形成Batchdata,即数据对应标签的格式的list。

二、人民币二分类

人民币二分类模型:

机器学习模型训练步骤:

机器学习模型训练步骤:

- 数据

- 模型

- 损失函数

- 优化器

- 迭代训练

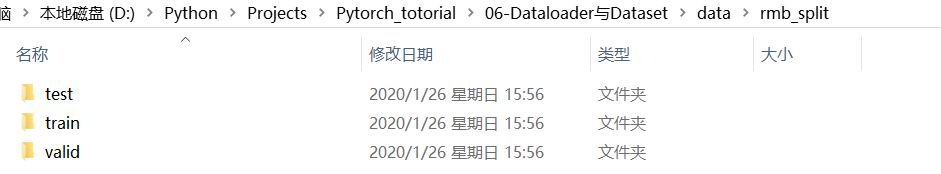

2.1 划分数据集

# -*- coding: utf-8 -*-

import os

import random

import shutil

def makedir(new_dir):

if not os.path.exists(new_dir):

os.makedirs(new_dir)

if __name__ == '__main__':

random.seed(1)

dataset_dir = os.path.join("..", "..", "data", "RMB_data")

split_dir = os.path.join("..", "..", "data", "rmb_split")

train_dir = os.path.join(split_dir, "train")

valid_dir = os.path.join(split_dir, "valid")

test_dir = os.path.join(split_dir, "test")

train_pct = 0.8

valid_pct = 0.1

test_pct = 0.1

for root, dirs, files in os.walk(dataset_dir):

for sub_dir in dirs:

imgs = os.listdir(os.path.join(root, sub_dir))

imgs = list(filter(lambda x: x.endswith('.jpg'), imgs))

random.shuffle(imgs)

img_count = len(imgs)

train_point = int(img_count * train_pct)

valid_point = int(img_count * (train_pct + valid_pct))

for i in range(img_count):

if i < train_point:

out_dir = os.path.join(train_dir, sub_dir)

elif i < valid_point:

out_dir = os.path.join(valid_dir, sub_dir)

else:

out_dir = os.path.join(test_dir, sub_dir)

makedir(out_dir)

target_path = os.path.join(out_dir, imgs[i])

src_path = os.path.join(dataset_dir, sub_dir, imgs[i])

shutil.copy(src_path, target_path)

print('Class:{}, train:{}, valid:{}, test:{}'.format(sub_dir, train_point, valid_point-train_point,

img_count-valid_point))

运行结果:

划分结果:

2.2 训练模型

# -*- coding: utf-8 -*-

import os

import random

import numpy as np

import torch

import torch.nn as nn

from torch.utils.data import DataLoader

import torchvision.transforms as transforms

import torch.optim as optim

from matplotlib import pyplot as plt

from model.lenet import LeNet

from tools.my_dataset import RMBDataset

def set_seed(seed=1):

random.seed(seed)

np.random.seed(seed)

torch.manual_seed(seed)

torch.cuda.manual_seed(seed)

set_seed() # 设置随机种子

rmb_label = {"1": 0, "100": 1}

# 参数设置

MAX_EPOCH = 10

BATCH_SIZE = 16

LR = 0.01

log_interval = 10

val_interval = 1

# ============================ step 1/5 数据 ============================

split_dir = os.path.join("..", "..", "data", "rmb_split")

train_dir = os.path.join(split_dir, "train")

valid_dir = os.path.join(split_dir, "valid")

norm_mean = [0.485, 0.456, 0.406]

norm_std = [0.229, 0.224, 0.225]

train_transform = transforms.Compose([

transforms.Resize((32, 32)),

transforms.RandomCrop(32, padding=4),

transforms.ToTensor(),

transforms.Normalize(norm_mean, norm_std),

])

valid_transform = transforms.Compose([

transforms.Resize((32, 32)),

transforms.ToTensor(),

transforms.Normalize(norm_mean, norm_std),

])

# 构建MyDataset实例

train_data = RMBDataset(data_dir=train_dir, transform=train_transform)

valid_data = RMBDataset(data_dir=valid_dir, transform=valid_transform)

# 构建DataLoder

train_loader = DataLoader(dataset=train_data, batch_size=BATCH_SIZE, shuffle=True)

valid_loader = DataLoader(dataset=valid_data, batch_size=BATCH_SIZE)

# ============================ step 2/5 模型 ============================

net = LeNet(classes=2)

net.initialize_weights()

# ============================ step 3/5 损失函数 ============================

criterion = nn.CrossEntropyLoss() # 选择损失函数

# ============================ step 4/5 优化器 ============================

optimizer = optim.SGD(net.parameters(), lr=LR, momentum=0.9) # 选择优化器

scheduler = torch.optim.lr_scheduler.StepLR(optimizer, step_size=10, gamma=0.1) # 设置学习率下降策略

# ============================ step 5/5 训练 ============================

train_curve = list()

valid_curve = list()

for epoch in range(MAX_EPOCH):

loss_mean = 0.

correct = 0.

total = 0.

net.train()

for i, data in enumerate(train_loader):

# forward

inputs, labels = data

outputs = net(inputs)

# backward

optimizer.zero_grad()

loss = criterion(outputs, labels)

loss.backward()

# update weights

optimizer.step()

# 统计分类情况

_, predicted = torch.max(outputs.data, 1)

total += labels.size(0)

correct += (predicted == labels).squeeze().sum().numpy()

# 打印训练信息

loss_mean += loss.item()

train_curve.append(loss.item())

if (i+1) % log_interval == 0:

loss_mean = loss_mean / log_interval

print("Training:Epoch[{:0>3}/{:0>3}] Iteration[{:0>3}/{:0>3}] Loss: {:.4f} Acc:{:.2%}".format(

epoch, MAX_EPOCH, i+1, len(train_loader), loss_mean, correct / total))

loss_mean = 0.

scheduler.step() # 更新学习率

# validate the model

if (epoch+1) % val_interval == 0:

correct_val = 0.

total_val = 0.

loss_val = 0.

net.eval()

with torch.no_grad():

for j, data in enumerate(valid_loader):

inputs, labels = data

outputs = net(inputs)

loss = criterion(outputs, labels)

_, predicted = torch.max(outputs.data, 1)

total_val += labels.size(0)

correct_val += (predicted == labels).squeeze().sum().numpy()

loss_val += loss.item()

valid_curve.append(loss_val/valid_loader.__len__())

print("Valid:\t Epoch[{:0>3}/{:0>3}] Iteration[{:0>3}/{:0>3}] Loss: {:.4f} Acc:{:.2%}".format(

epoch, MAX_EPOCH, j+1, len(valid_loader), loss_val, correct_val / total_val))

train_x = range(len(train_curve))

train_y = train_curve

train_iters = len(train_loader)

valid_x = np.arange(1, len(valid_curve)+1) * train_iters*val_interval # 由于valid中记录的是epochloss,需要对记录点进行转换到iterations

valid_y = valid_curve

plt.plot(train_x, train_y, label='Train')

plt.plot(valid_x, valid_y, label='Valid')

plt.legend(loc='upper right')

plt.ylabel('loss value')

plt.xlabel('Iteration')

plt.show()

# ============================ inference ============================

BASE_DIR = os.path.dirname(os.path.abspath(__file__))

test_dir = os.path.join(BASE_DIR, "test_data")

test_data = RMBDataset(data_dir=test_dir, transform=valid_transform)

valid_loader = DataLoader(dataset=test_data, batch_size=1)

for i, data in enumerate(valid_loader):

# forward

inputs, labels = data

outputs = net(inputs)

_, predicted = torch.max(outputs.data, 1)

rmb = 1 if predicted.numpy()[0] == 0 else 100

print("模型获得{}元".format(rmb))

训练结果: