custom-metrics deployment

Architecture

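prometheus: scrapes and stores the monitoring data collected from the k8s cluster.

node_exporter: the agent deployed on every node to collect node-level data for prometheus.

custom-metrics-apiserver: receives users' REST-style requests that operate on monitoring metrics and responds to them.

k8s-prometheus-adapter: exposes the metrics held in prometheus (including those produced by kube-state-metrics) as an API service and integrates it into the k8s API Server.

kube-state-metrics: reads the state of k8s objects and exposes it in the prometheus data format, bridging the two systems. The converted data still cannot be fetched directly through the k8s API Server; it must first be processed by k8s-prometheus-adapter.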

Deployment steps

Manifests: https://github.com/iKubernetes/k8s-prom

1. Create the namespace (optional)

---
apiVersion: v1
kind: Namespace
metadata:
  name: prom

2. Install node_exporter

3. Install prometheus

4. Install kube-state-metrics

5. Install k8s-prometheus-adapter

Reference manifests: https://github.com/DirectXMan12/k8s-prometheus-adapter/tree/master/deploy/manifests

apiVersion: v1
kind: ConfigMap
metadata:
  name: adapter-config
  namespace: prom
data:
  config.yaml: |
    rules:
    - seriesQuery: '{__name__=~"^container_.*",container_name!="POD",namespace!="",pod_name!=""}'
      seriesFilters: []
      resources:
        overrides:
          namespace:
            resource: namespace
          pod_name:
            resource: pod
      name:
        matches: ^container_(.*)_seconds_total$
        as: ""
      metricsQuery: sum(rate(<<.Series>>{<<.LabelMatchers>>,container_name!="POD"}[1m])) by (<<.GroupBy>>)
    - seriesQuery: '{__name__=~"^container_.*",container_name!="POD",namespace!="",pod_name!=""}'
      seriesFilters:
      - isNot: ^container_.*_seconds_total$
      resources:
        overrides:
          namespace:
            resource: namespace
          pod_name:
            resource: pod
      name:
        matches: ^container_(.*)_total$
        as: ""
      metricsQuery: sum(rate(<<.Series>>{<<.LabelMatchers>>,container_name!="POD"}[1m])) by (<<.GroupBy>>)
    - seriesQuery: '{__name__=~"^container_.*",container_name!="POD",namespace!="",pod_name!=""}'
      seriesFilters:
      - isNot: ^container_.*_total$
      resources:
        overrides:
          namespace:
            resource: namespace
          pod_name:
            resource: pod
      name:
        matches: ^container_(.*)$
        as: ""
      metricsQuery: sum(<<.Series>>{<<.LabelMatchers>>,container_name!="POD"}) by (<<.GroupBy>>)
    - seriesQuery: '{namespace!="",__name__!~"^container_.*"}'
      seriesFilters:
      - isNot: .*_total$
      resources:
        template: <<.Resource>>
      name:
        matches: ""
        as: ""
      # metricsQuery below is assumed from the reference manifest
      metricsQuery: sum(<<.Series>>{<<.LabelMatchers>>}) by (<<.GroupBy>>)
    # seriesQuery and seriesFilters of this rule are assumed from the reference manifest
    - seriesQuery: '{namespace!="",__name__!~"^container_.*"}'
      seriesFilters:
      - isNot: .*_seconds_total
      resources:
        template: <<.Resource>>
      name:
        matches: ^(.*)_total$
        as: ""
      metricsQuery: sum(rate(<<.Series>>{<<.LabelMatchers>>}[1m])) by (<<.GroupBy>>)
    - seriesQuery: '{namespace!="",__name__!~"^container_.*"}'
      seriesFilters: []
      resources:
        template: <<.Resource>>
      name:
        matches: ^(.*)_seconds_total$
        as: ""
      metricsQuery: sum(rate(<<.Series>>{<<.LabelMatchers>>}[1m])) by (<<.GroupBy>>)
    resourceRules:
      cpu:
        # the two cpu queries are assumed from the reference manifest
        containerQuery: sum(rate(container_cpu_usage_seconds_total{<<.LabelMatchers>>}[1m])) by (<<.GroupBy>>)
        nodeQuery: sum(rate(container_cpu_usage_seconds_total{<<.LabelMatchers>>,id='/'}[1m])) by (<<.GroupBy>>)
        resources:
          overrides:
            instance:
              resource: node
            namespace:
              resource: namespace
            pod_name:
              resource: pod
        containerLabel: container_name
      memory:
        containerQuery: sum(container_memory_working_set_bytes{<<.LabelMatchers>>}) by (<<.GroupBy>>)
        nodeQuery: sum(container_memory_working_set_bytes{<<.LabelMatchers>>,id='/'}) by (<<.GroupBy>>)
        resources:
          overrides:
            instance:
              resource: node
            namespace:
              resource: namespace
            pod_name:
              resource: pod
        containerLabel: container_name
      window: 1m

---
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: custom-metrics-apiserver
  name: custom-metrics-apiserver
  namespace: prom
spec:
  replicas: 1
  selector:
    matchLabels:
      app: custom-metrics-apiserver
  template:
    metadata:
      labels:
        app: custom-metrics-apiserver
      name: custom-metrics-apiserver
    spec:
      serviceAccountName: custom-metrics-apiserver
      containers:
      - name: custom-metrics-apiserver
        image: directxman12/k8s-prometheus-adapter-amd64
        args:
        - --secure-port=6443
        - --tls-cert-file=/var/run/serving-cert/serving.crt
        - --tls-private-key-file=/var/run/serving-cert/serving.key
        - --logtostderr=true
        - --prometheus-url=http://prometheus.prom.svc:9090/
        - --metrics-relist-interval=1m
        - --v=10
        - --config=/etc/adapter/config.yaml
        ports:
        - containerPort: 6443
        volumeMounts:
        - mountPath: /var/run/serving-cert
          name: volume-serving-cert
          readOnly: true
        - mountPath: /etc/adapter/
          name: config
          readOnly: true
        - mountPath: /tmp
          name: tmp-vol
      volumes:
      - name: volume-serving-cert
        secret:
          secretName: cm-adapter-serving-certs
      - name: config
        configMap:
          name: adapter-config
      - name: tmp-vol
        emptyDir: {}

k8s-prometheus-adapter serves plain HTTP by default, whereas k8s uses HTTPS by default, so the adapter has to be switched to HTTPS manually.

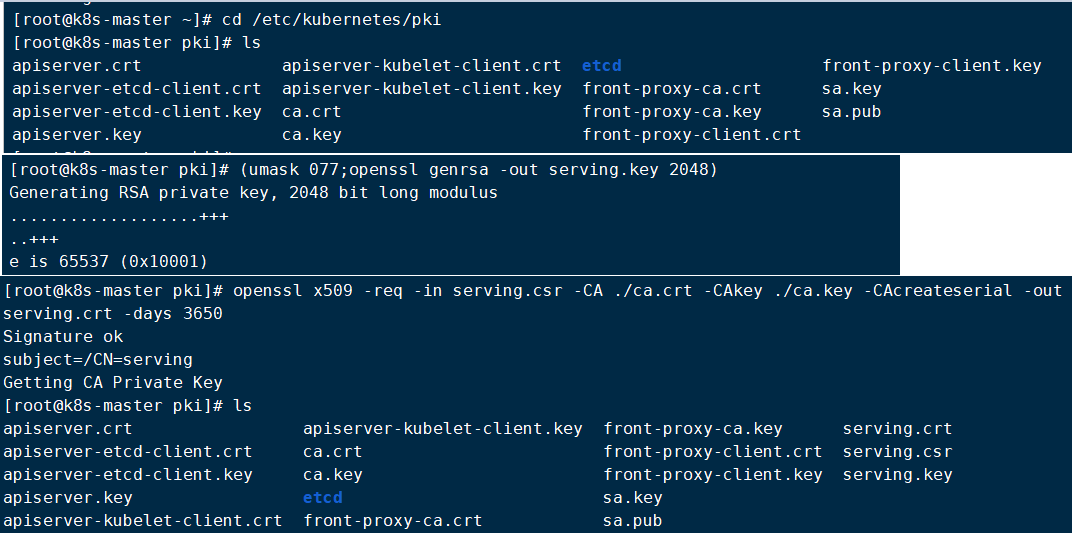

Use the CA that ships with k8s to issue a certificate for communicating with the apiserver:

1. cd /etc/kubernetes/pki

2. (umask 077; openssl genrsa -out serving.key 2048)

3. openssl req -new -key serving.key -out serving.csr -subj "/CN=serving"

4. openssl x509 -req -in serving.csr -CA ./ca.crt -CAkey ./ca.key -CAcreateserial -out serving.crt -days 3650

5. kubectl create secret generic cm-adapter-serving-certs --from-file=serving.crt=./serving.crt --from-file=serving.key=./serving.key -n prom

cm-adapter-serving-certs is the Secret name that the pod spec mounts (secretName); the name must be identical in both places.

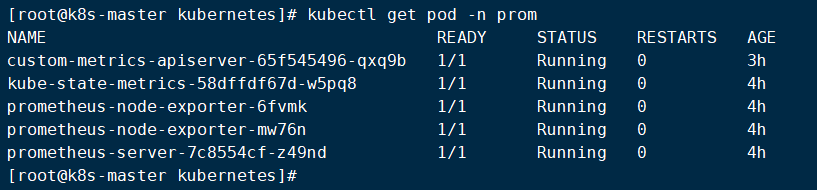
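The signing steps can be rehearsed outside the cluster before touching /etc/kubernetes/pki. The sketch below replays the same openssl commands in a temporary directory against a throwaway CA, then checks that the issued certificate chains back to that CA. The `demo-ca` key pair is a hypothetical stand-in for the cluster's `ca.crt` and `ca.key`; nothing here talks to a real cluster.

```shell
#!/bin/sh
# Sketch only: a throwaway CA replaces /etc/kubernetes/pki/ca.crt and ca.key.
set -eu
workdir=$(mktemp -d)
cd "$workdir"

# Hypothetical stand-in for the cluster CA (short-lived, self-signed).
openssl genrsa -out ca.key 2048 2>/dev/null
openssl req -x509 -new -key ca.key -subj "/CN=demo-ca" -days 1 -out ca.crt

# Same commands as in the steps above.
(umask 077; openssl genrsa -out serving.key 2048 2>/dev/null)
openssl req -new -key serving.key -out serving.csr -subj "/CN=serving"
openssl x509 -req -in serving.csr -CA ./ca.crt -CAkey ./ca.key \
  -CAcreateserial -out serving.crt -days 3650 2>/dev/null

# Confirm the certificate verifies against the CA and show its subject.
verify_result=$(openssl verify -CAfile ca.crt serving.crt)
subject=$(openssl x509 -in serving.crt -noout -subject)
echo "$verify_result"
echo "$subject"
```

On the real control-plane node the same `openssl verify -CAfile ca.crt serving.crt` check can be run in /etc/kubernetes/pki before creating the Secret.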

Check the deployment result, shown below:

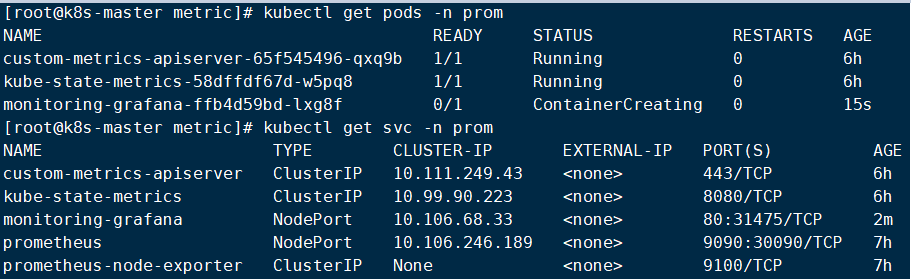
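The same check can be done from the command line. A minimal sketch, assuming the adapter is registered under the `custom.metrics.k8s.io/v1beta1` API group as in the reference manifests (the group name is an assumption, it is not stated in this note); `check_custom_metrics` is a hypothetical helper name, while the label and namespace come from the Deployment above.

```shell
# Sketch: confirm the adapter pod is running and the custom metrics API is served.
# The namespace defaults to "prom", the one used throughout this note.
check_custom_metrics() {
  ns="${1:-prom}"
  # Pods created by the custom-metrics-apiserver Deployment.
  kubectl get pods -n "$ns" -l app=custom-metrics-apiserver
  # The aggregated API group should be listed once registration has succeeded
  # (group name assumed from the reference manifests).
  kubectl api-versions | grep custom.metrics.k8s.io
  # Raw discovery document listing the metrics the adapter exposes.
  kubectl get --raw "/apis/custom.metrics.k8s.io/v1beta1"
}
```

Run it as `check_custom_metrics prom`. If the `grep` prints nothing, the API group is not registered yet.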

6. Integrate Grafana with prometheus

apiVersion: apps/v1
kind: Deployment
metadata:
  name: monitoring-grafana
  namespace: prom
spec:
  replicas: 1
  selector:
    matchLabels:
      k8s-app: grafana
  template:
    metadata:
      labels:
        task: monitoring
        k8s-app: grafana
    spec:
      containers:
      - name: grafana
        image: k8s.gcr.io/heapster-grafana-amd64:v5.0.4
        ports:
        - containerPort: 3000
          protocol: TCP
        volumeMounts:
        - mountPath: /etc/ssl/certs
          name: ca-certificates
          readOnly: true
        - mountPath: /var
          name: grafana-storage
        env:
        - name: GF_SERVER_HTTP_PORT
          value: "3000"
          # The following env variables are required to make Grafana accessible via
          # the kubernetes api-server proxy. On production clusters, we recommend
          # removing these env variables, setup auth for grafana, and expose the grafana
          # service using a LoadBalancer or a public IP.
        - name: GF_AUTH_BASIC_ENABLED
          value: "false"
        - name: GF_AUTH_ANONYMOUS_ENABLED
          value: "true"
        - name: GF_AUTH_ANONYMOUS_ORG_ROLE
          value: Admin
        - name: GF_SERVER_ROOT_URL
          # If you're only using the API Server proxy, set this value instead:
          # value: /api/v1/namespaces/kube-system/services/monitoring-grafana/proxy
          value: /
      volumes:
      - name: ca-certificates
        hostPath:
          path: /etc/ssl/certs
      - name: grafana-storage
        emptyDir: {}
---
apiVersion: v1
kind: Service
metadata:
  labels:
    # For use as a Cluster add-on (https://github.com/kubernetes/kubernetes/tree/master/cluster/addons)
    # If you are NOT using this as an addon, you should comment out this line.
    kubernetes.io/cluster-service: 'true'
    kubernetes.io/name: monitoring-grafana
  name: monitoring-grafana
  namespace: prom
spec:
  # In a production setup, we recommend accessing Grafana through an external Loadbalancer
  # or through a public IP.
  # type: LoadBalancer
  # You could also use NodePort to expose the service at a randomly-generated port
  type: NodePort
  ports:
  - port: 80
    targetPort: 3000
  selector:
    k8s-app: grafana

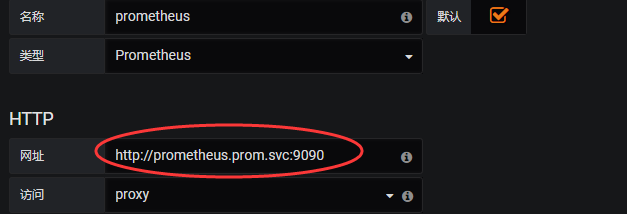

The monitoring-grafana pod reaches prometheus through the prometheus Service, so the data source in Grafana can be configured in the following form:

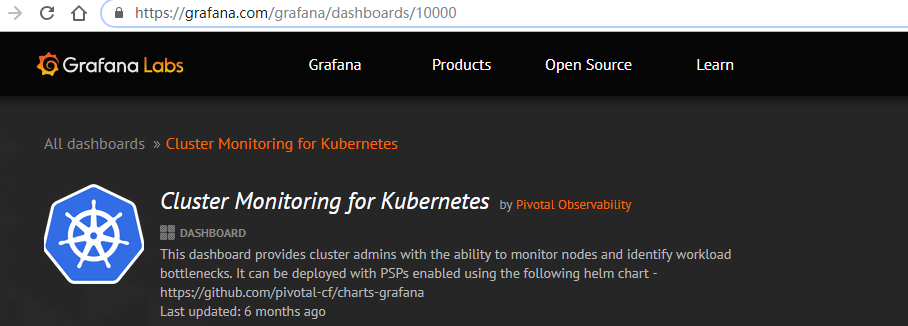
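The data source URL follows the in-cluster Service DNS pattern already used by the adapter's `--prometheus-url` flag. A small sketch of that pattern; `svc_url` is a hypothetical helper, and the service name, namespace and port are taken from that flag rather than from the screenshot.

```shell
# Build the in-cluster URL of a Service: http://<service>.<namespace>.svc:<port>
svc_url() {
  printf 'http://%s.%s.svc:%s\n' "$1" "$2" "$3"
}

# prometheus Service in the prom namespace, port 9090
svc_url prometheus prom 9090   # prints http://prometheus.prom.svc:9090
```

Because Grafana runs in the same namespace, the short form `http://prometheus:9090` should resolve as well.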

Pick a suitable Kubernetes dashboard template: https://grafana.com/grafana/dashboards/

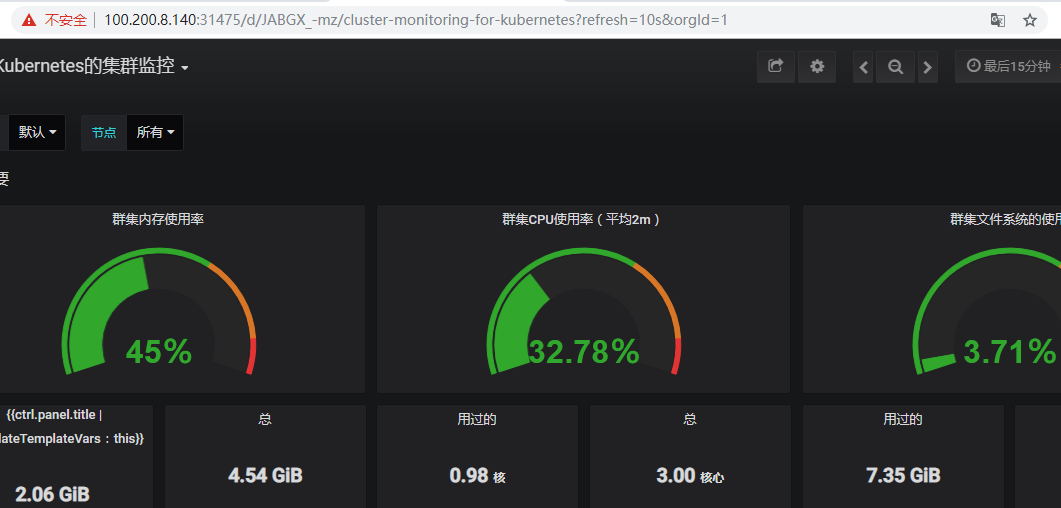

Final result: the Grafana monitoring dashboard.