Santesteban, Igor, Miguel A. Otaduy, and Dan Casas. “Learning‐Based Animation of Clothing for Virtual Try‐On.” Computer Graphics Forum. Vol. 38. No. 2. 2019.

1. Motivation

Tight garments follow the deformation of the body.

Inspired by Pose Space Deformation (PSD) and subsequent human body models.

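Notation

$M_b(\beta, \theta)$: a deformed human body mesh with shape parameters $\beta$ and pose parameters $\theta$ (joint angles).

$M_c(\beta, \theta)$: a deformed garment mesh worn by the human body mesh.

$S_c(\beta, \theta)$: the simulated garment result on a body with shape $\beta$ and pose $\theta$. Approximating this result is our goal.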

2. Main work

2.1 Cloth model

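- Body deformation model (PSD)

$$M_b = W(T_b, \beta, \theta, \mathcal{W}_b)$$

in which $W$ is a skinning function and $\mathcal{W}_b$ is the body skinning weight matrix,

$T_b$ is an unposed body mesh with $V_b$ vertices,

$T_b$ may be obtained by deforming a template body mesh $\bar{T}_b$ to account for body shape and pose-based surface corrections (see, e.g., [LMR∗15]).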

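- Similar cloth deformation pipeline

$\bar{T}_c$ is a template cloth mesh with $V_c$ vertices.

1. Compute an unposed cloth mesh:

$$T_c(\beta, \theta) = \bar{T}_c + R_G(\beta) + R_L(\beta, \theta)$$

$R_G$ and $R_L$ represent two nonlinear regressors, which take as input the body shape parameters and the shape and pose parameters, respectively.

2. Use the skinning function $W$ to produce the full cloth deformation:

$$M_c(\beta, \theta) = W(T_c(\beta, \theta), \beta, \theta, \mathcal{W}_c)$$

The cloth skinning weight matrix $\mathcal{W}_c$ is obtained by projecting each vertex of the template cloth mesh onto the closest triangle of the template body mesh and interpolating the body skinning weights $\mathcal{W}_b$.

3. Apply a postprocessing step to get collision-free cloth output: cloth vertices that collide with the body are pushed outside their closest body primitive.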
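The first two steps of this pipeline can be sketched with NumPy as follows. This is a minimal sketch, not the authors' implementation: it assumes linear blend skinning as the skinning function, takes the per-joint 4x4 transforms as given instead of deriving them from shape and pose, and leaves out the collision postprocessing. All function and argument names are hypothetical.

```python
import numpy as np

def skin(unposed, joint_transforms, weights):
    """Linear blend skinning (assumed form of the skinning function).

    unposed:          (V, 3) unposed vertex positions
    joint_transforms: (J, 4, 4) transform of each joint
    weights:          (V, J) skinning weights, each row sums to 1
    """
    n_vertices = unposed.shape[0]
    homo = np.hstack([unposed, np.ones((n_vertices, 1))])      # (V, 4)
    # Position of every vertex under every joint transform: (J, V, 4)
    per_joint = np.einsum("jab,vb->jva", joint_transforms, homo)
    # Blend the per-joint positions with the skinning weights: (V, 4)
    blended = np.einsum("vj,jva->va", weights, per_joint)
    return blended[:, :3]

def animate_cloth(template, fit_disp, wrinkle_disp, joint_transforms, weights):
    """Add both regressed displacements to the template, then skin the result."""
    unposed = template + fit_disp + wrinkle_disp   # step 1: unposed cloth mesh
    return skin(unposed, joint_transforms, weights)  # step 2: full deformation
```

Here `fit_disp` and `wrinkle_disp` stand for the outputs of the two regressors, and `weights` for the cloth skinning weights transferred from the body.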

2.2 Garment Fit Regressor (static)

A nonlinear regressor

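Input: the shape of the body, $\beta$

Output: per-vertex displacements $\Delta_G$

Ground truth:

$$\Delta_G^{GT} = \rho(S_c(\beta, 0)) - \bar{T}_c$$

$S_c(\beta, 0)$ represents a simulation of the garment on a body with shape $\beta$ and pose $\theta = 0$, and $\bar{T}_c$ is the template cloth mesh.

$\rho$ represents a smoothing operator.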

Function: a single-hidden-layer multilayer perceptron (MLP) neural network

Loss: MSE
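As a rough illustration of such a regressor, the forward pass of a single-hidden-layer MLP and the MSE loss could look like the sketch below. The ReLU activation, the weight initialization, and all names are assumptions made for the example; the notes do not state them.

```python
import numpy as np

def init_mlp(n_in, n_hidden, n_out, seed=0):
    """Create small random weights for a one-hidden-layer MLP."""
    rng = np.random.default_rng(seed)
    return {
        "W1": rng.normal(0.0, 0.1, (n_in, n_hidden)),
        "b1": np.zeros(n_hidden),
        "W2": rng.normal(0.0, 0.1, (n_hidden, n_out)),
        "b2": np.zeros(n_out),
    }

def fit_regressor(params, beta, n_vertices):
    """Map shape parameters to per-vertex 3D displacements."""
    # Hidden layer; ReLU is an assumption, not taken from the notes.
    hidden = np.maximum(0.0, beta @ params["W1"] + params["b1"])
    flat = hidden @ params["W2"] + params["b2"]
    return flat.reshape(n_vertices, 3)

def mse(pred, target):
    """Mean squared error over all vertex coordinates."""
    return float(np.mean((pred - target) ** 2))
```

For a garment with `n_vertices` vertices, the output layer needs `3 * n_vertices` units, for example `init_mlp(4, 20, 3 * n_vertices)`.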

2.3 Garment Wrinkle Regressor (dynamic)

A nonlinear regressor

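Input: shape $\beta$ and pose $\theta$

Output: per-vertex displacements $\Delta_L$

Ground truth:

$$\Delta_L^{GT} = W^{-1}(S_c(\beta, \theta), \beta, \theta, \mathcal{W}_c) - \bar{T}_c - \Delta_G$$

The ground truth represents the deviation between the simulated cloth worn by the moving body, $S_c(\beta, \theta)$, and the template cloth mesh with the garment fit applied, $\bar{T}_c + \Delta_G$. It is expressed in the body’s rest pose by applying the inverse skinning transformation $W^{-1}$ to the simulated cloth.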

Function: a Recurrent Neural Network (RNN) based on Gated Recurrent Units (GRU)

Loss: MSE
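For reference, one step of a standard GRU cell can be written in NumPy as in the sketch below. It uses the common update convention `h_new = (1 - z) * h + z * h_tilde` (some libraries swap the roles of `z` and `1 - z`). The linear output layer and all names are assumptions for illustration, not details from the paper.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def init_gru(n_in, n_hidden, n_out, seed=0):
    """Random weights for one GRU layer plus a linear output layer."""
    rng = np.random.default_rng(seed)
    p = {}
    for gate in "zrh":
        p["W" + gate] = rng.normal(0.0, 0.1, (n_in, n_hidden))
        p["U" + gate] = rng.normal(0.0, 0.1, (n_hidden, n_hidden))
        p["b" + gate] = np.zeros(n_hidden)
    p["Wo"] = rng.normal(0.0, 0.1, (n_hidden, n_out))
    p["bo"] = np.zeros(n_out)
    return p

def gru_step(x, h, p):
    """Advance the hidden state h by one frame given input x."""
    z = sigmoid(x @ p["Wz"] + h @ p["Uz"] + p["bz"])              # update gate
    r = sigmoid(x @ p["Wr"] + h @ p["Ur"] + p["br"])              # reset gate
    h_tilde = np.tanh(x @ p["Wh"] + (r * h) @ p["Uh"] + p["bh"])  # candidate state
    return (1.0 - z) * h + z * h_tilde

def run_sequence(xs, p, n_hidden):
    """Run the GRU over a sequence of inputs, emitting one output per frame."""
    h = np.zeros(n_hidden)
    outputs = []
    for x in xs:
        h = gru_step(x, h, p)
        outputs.append(h @ p["Wo"] + p["bo"])
    return np.stack(outputs)
```

Because the hidden state is carried from frame to frame, the output for a given pose depends on the preceding motion, which is what lets a recurrent regressor capture dynamic wrinkles.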

2.4 Dataset

- One garment.

- Parametric human model (SMPL) with 17 training body shapes: for each of the 4 principal components of the shape parameters $\beta$, generate 4 samples, plus the nominal shape with $\beta = 0$.

- Animation: 56 character motion sequences from the CMU dataset, containing 7117 frames in total (at 30 fps, downsampled from the original 120 fps of the CMU dataset). Each of the 56 sequences is simulated for each of the 17 body shapes, wearing the same garment mesh (a T-shirt with 8710 triangles).

- ARCSim

[parameters]

Material: an interlock knit with 60% cotton and 40% polyester

Time step: 3.33 ms (0.00333 s)

Store step: one result stored every 10 time steps

Output: 120989 frames
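The dataset figures quoted here are consistent with each other, as a quick check shows. The variable names are only for illustration.

```python
# Body shapes: 4 samples for each of 4 principal components, plus the nominal shape.
n_shapes = 4 * 4 + 1

# The 56 CMU sequences contain 7117 frames in total for one body shape.
frames_per_shape = 7117

# Every sequence is simulated for every body shape.
total_frames = n_shapes * frames_per_shape

# One simulation result is stored every 10 time steps of 3.33 ms each.
stored_interval_s = 10 * 3.33e-3
stored_fps = 1.0 / stored_interval_s

print(n_shapes, total_frames, round(stored_fps))  # prints: 17 120989 30
```

Storing one result every 10 simulation steps therefore matches the 30 fps of the character animation.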

- How to get a collision-free initial state for simulation?

1. Manually pre-position the garment mesh once on the template body mesh $\bar{T}_b$.

2. Run the simulation to let the cloth relax, and thus define the initial state for all subsequent simulations.

3. Apply a smoothing operator $\rho$ to this initial state to obtain the template cloth mesh $\bar{T}_c$.

- Ground-truth garment fit data

Interpolate the shape parameters from the template body mesh to the target shape while simulating the garment from its collision-free initial state. Once the body reaches its target shape, let the cloth rest and compute the ground-truth garment fit displacements.

- Ground-truth garment wrinkle data

Interpolate both shape and pose parameters from the template body mesh to the shape and initial pose of the animation, then let the cloth rest.
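The parameter interpolation used to bring the body from the template to its target configuration can be sketched as below. Linear interpolation and the number of steps are assumptions; the notes only say that the parameters are interpolated, and the function name is hypothetical.

```python
def interpolate_params(start, target, n_steps):
    """Yield n_steps + 1 parameter vectors moving linearly from start to target."""
    for k in range(n_steps + 1):
        t = k / n_steps
        yield [(1.0 - t) * s + t * g for s, g in zip(start, target)]

# Example: move two shape coefficients from the template (all zeros) to a target.
for params in interpolate_params([0.0, 0.0], [2.0, -1.0], n_steps=2):
    print(params)
```

In the actual pipeline, the cloth simulation would advance at every interpolation step so that the garment stays collision-free while the body changes.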

2.5 Network Implementation and Training

Implemented in TensorFlow.

MLP for garment fit regression contains a single hidden layer with 20 hidden neurons.

The GRU network for garment wrinkle regression contains a single hidden layer with 1500 hidden neurons.

Dropout regularization: randomly disable 20% of the hidden neurons on each optimization step.

Adam for 2000 epochs with an initial learning rate of 0.0001.

Train each network separately.
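The dropout step mentioned above can be illustrated with the following sketch. Rescaling the surviving activations by `1 / (1 - rate)` (inverted dropout) is an assumption about the implementation, and the function name is hypothetical.

```python
import numpy as np

def dropout(hidden, rate=0.2, rng=None):
    """Randomly zero a fraction `rate` of the activations and rescale the rest."""
    rng = np.random.default_rng() if rng is None else rng
    keep = rng.random(hidden.shape) >= rate   # True for neurons that stay active
    return hidden * keep / (1.0 - rate)
```

A new random mask is drawn on every optimization step, and dropout is switched off at inference time.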

- The garment fit MLP network

Trained on the ground-truth data from all 17 body shapes.

- The garment wrinkle GRU network

52 animation sequences for training, 4 sequences for testing.

Batch size: 128.

Speed-up: compute the error gradient using Truncated Backpropagation Through Time (TBPTT), with a limit of 90 time steps. TBPTT is also used with LSTMs.
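With TBPTT, a long sequence is processed in consecutive windows: the hidden state is carried across windows, but gradients are only propagated within each window. A minimal sketch of the windowing, with a hypothetical helper name:

```python
def tbptt_windows(n_frames, limit=90):
    """Split frame indices [0, n_frames) into consecutive windows of at most `limit` steps."""
    return [(start, min(start + limit, n_frames))
            for start in range(0, n_frames, limit)]

print(tbptt_windows(200))  # prints: [(0, 90), (90, 180), (180, 200)]
```

Each `(start, end)` pair marks one chunk over which backpropagation runs, which bounds the cost of a gradient step regardless of the sequence length.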

3. Evaluation

-

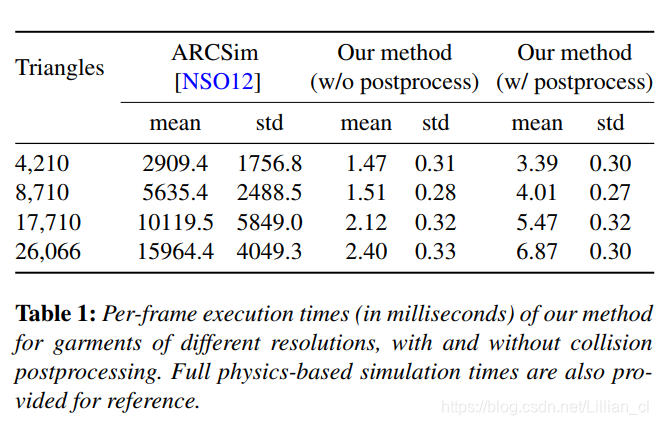

Runtime Performance

- Quantitative Evaluation

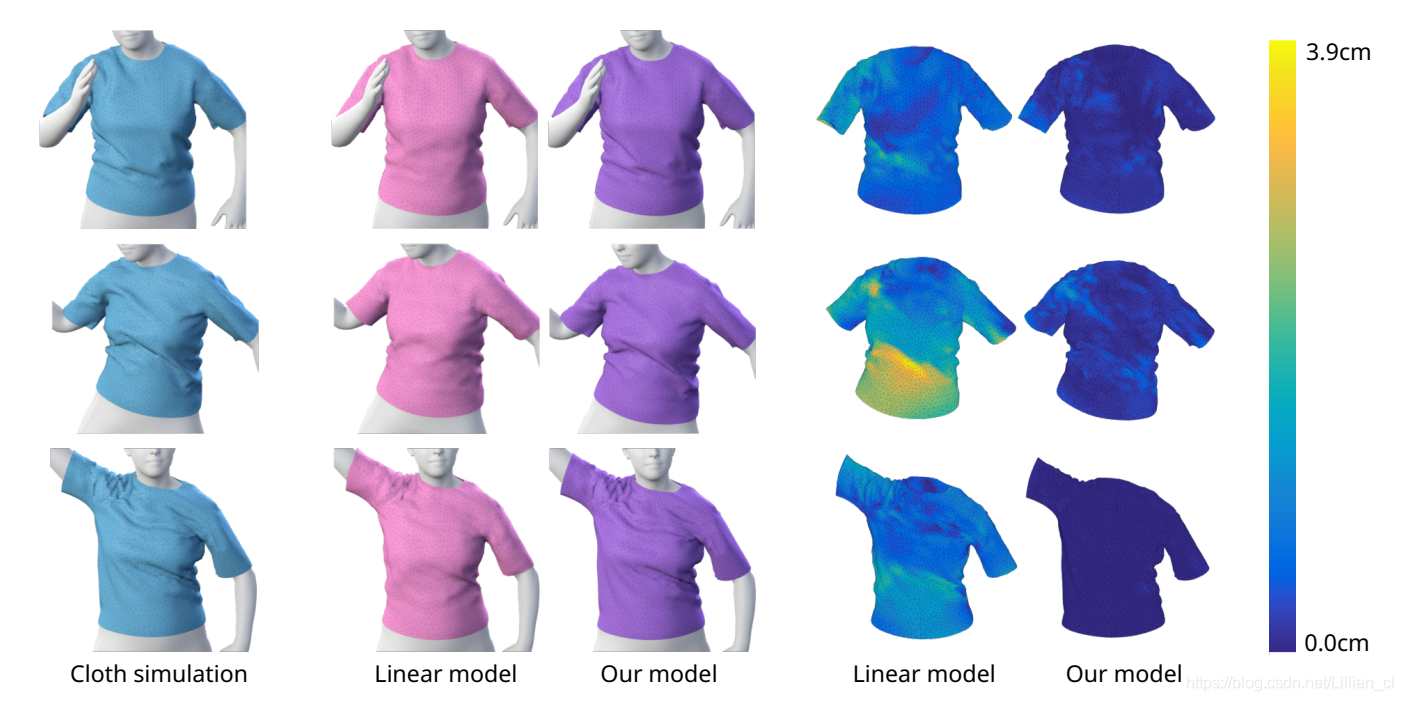

Linear vs. nonlinear regression

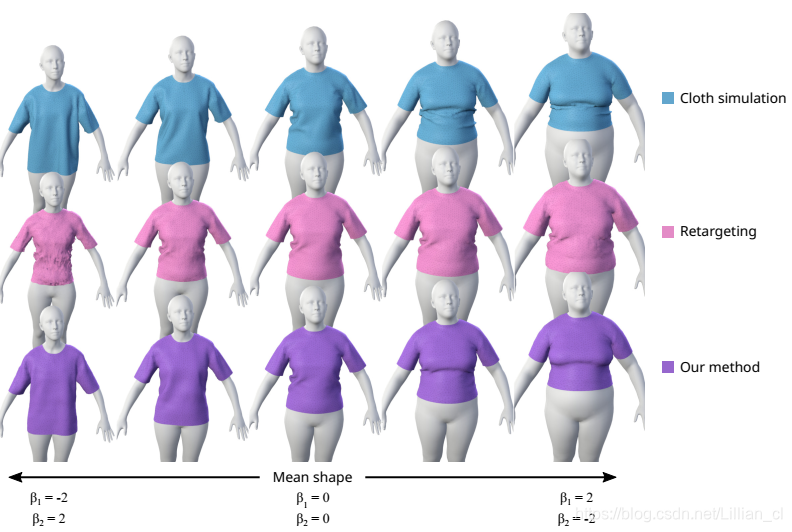

Generalization to new body shapes

Generalization to new body poses: CMU sequences 01_01 and 55_27 -

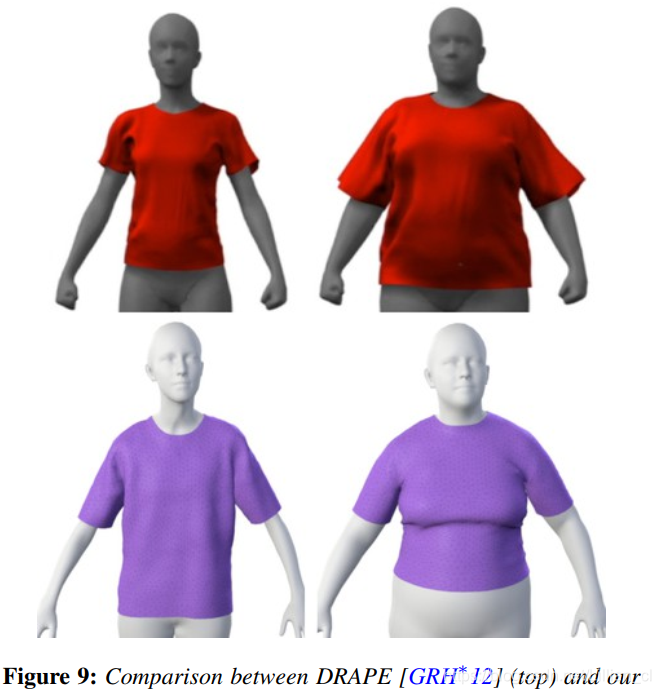

Qualitative Evaluation

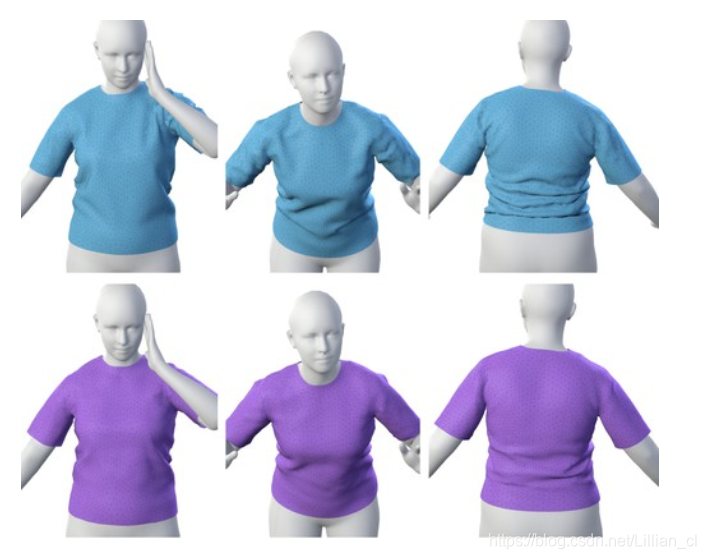

Clothing deformations produced by our approach on a static pose while changing the body shape over time.

DRAPE approximates the deformation of the garment by scaling it such that it fits the target shape, which produces plausible but unrealistic results. In contrast, our method deforms the garment in a realistic manner.

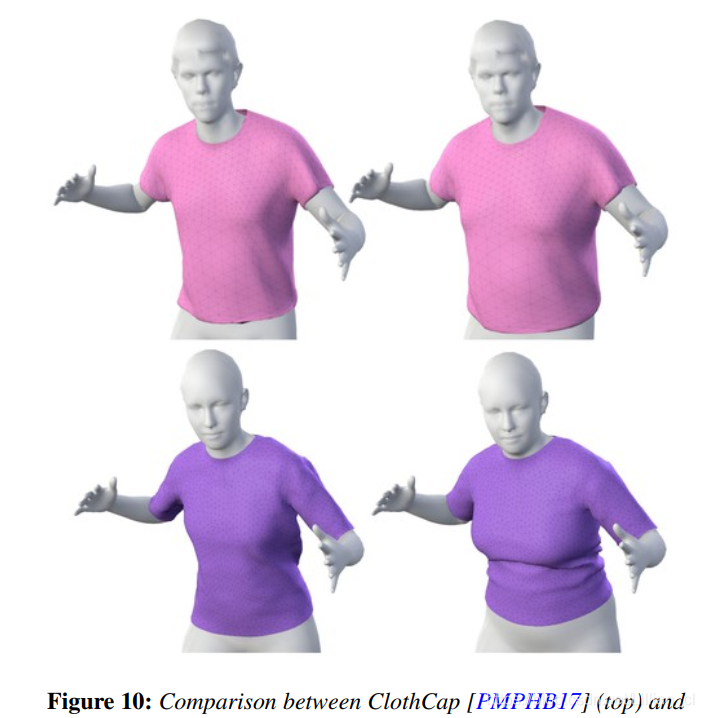

ClothCap’s retargeting lacks realism because cloth deformations are simply copied across different shapes. In contrast, our method produces realistic pose- and shape-dependent deformations.

- Generalization to new poses (01_01)

4. Future work

- Generalize to multiple garments (with different materials).

- Collisions (add low-level collision constraints into the network).

- Reduce the excessive smoothing of high-frequency wrinkles.

- Loose garments (e.g., dresses).