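Writing this code nearly drove me crazy; it took many days of piecing things together before it was finished. But code you write yourself from nothing but the theory is really satisfying.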

Theory part: https://blog.csdn.net/jk_chen_acmer/article/details/103066444

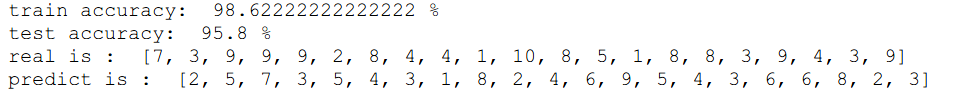

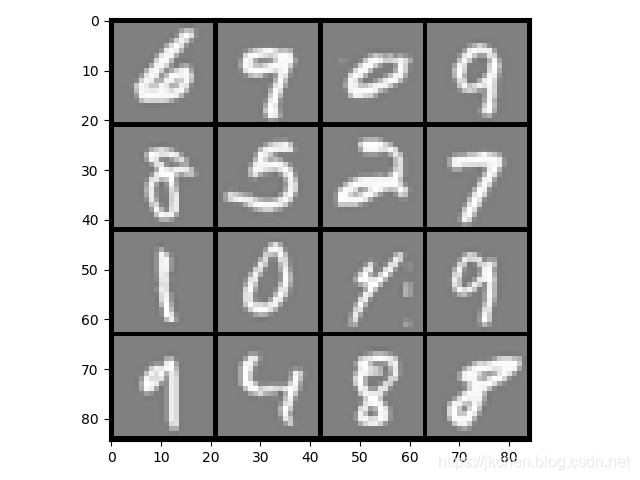

The data is the MNIST (handwritten digit) dataset provided by Andrew Ng: each sample is a 20×20 single-channel image.
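Each sample is therefore a row of 400 pixel values. A quick way to turn one row back into a 20×20 image is a reshape. The sketch below assumes (as in the MATLAB files of Andrew Ng's exercises) that pixels are stored column by column, which is presumably why `displayData` transposes the canvas before calling `imshow`; the vector `x` is a hypothetical stand-in, not a real sample.

```python
import numpy as np

# Hypothetical stand-in for one row of Xtrain: 400 pixel values (20*20, one channel)
x = np.arange(400, dtype=float)

# Row-major reshape followed by a transpose ...
img_transposed = x.reshape(20, 20).T
# ... gives the same image as reading the values column by column (Fortran/MATLAB order)
img_fortran = x.reshape(20, 20, order='F')

print(img_fortran.shape)                            # (20, 20)
print(np.array_equal(img_transposed, img_fortran))  # True
```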

The first 4500 samples are used for training and the last 500 for testing.
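A minimal sketch of how such a 4500/500 split could be produced, assuming the 5000 samples are shuffled once beforehand. The random arrays are hypothetical placeholders for the real data; only the split sizes come from this post.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical data with the dataset's shape: 5000 samples x 400 features, labels 1..10
X = rng.random((5000, 400))
y = rng.integers(1, 11, size=(5000, 1))

# Shuffle rows once so that both parts contain every digit class
perm = rng.permutation(X.shape[0])
X, y = X[perm], y[perm]

# First 4500 rows for training, the remaining 500 for testing
Mtrain = 4500
Xtrain, ytrain = X[:Mtrain], y[:Mtrain]
Xtest, ytest = X[Mtrain:], y[Mtrain:]

print(Xtrain.shape, Xtest.shape)  # (4500, 400) (500, 400)
```

Saving the four arrays with `scipy.io.savemat` under the keys `Xtrain`, `ytrain`, `Xtest`, `ytest` would give a file with the layout that `getData` reads; how the post's `data_random.mat` was actually generated is not stated.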

The computation is vectorized as far as possible, so it runs very fast. It also uses mini-batches and learning-rate decay:
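The three ideas in that sentence can be checked in isolation. The sketch below reuses the numbers from `gradientDescent` (batch size 512, initial rate 0.5, multiplied by 0.9 every 500 epochs), but the helper names `make_batches` and `learning_rate` are hypothetical and the arrays are random placeholders.

```python
import numpy as np


def make_batches(X, y, mb_size=512):
    """Split X (m x n) and y (m x k) into consecutive mini-batches; the last may be smaller."""
    m = X.shape[0]
    return [(X[i:i + mb_size], y[i:i + mb_size]) for i in range(0, m, mb_size)]


def learning_rate(epoch, rate0=0.5, decay=0.9, every=500):
    """Step decay: rate0 is multiplied by `decay` once every `every` epochs."""
    return rate0 * decay ** (epoch // every)


rng = np.random.default_rng(0)
X = rng.random((4500, 400))  # hypothetical training inputs
y = np.zeros((4500, 10))     # hypothetical one-hot labels

batches = make_batches(X, y)
print(len(batches), batches[-1][0].shape)  # 9 (404, 400)

# Vectorized pre-activation for a whole batch vs. a per-sample loop
W = rng.random((25, 400)) * 0.01
b = np.zeros((25, 1))
Xb = batches[0][0]
Z_vec = W @ Xb.T + b                                   # 25 x 512, one column per sample
Z_loop = np.column_stack([W @ x + b[:, 0] for x in Xb])
print(np.allclose(Z_vec, Z_loop))                      # True

print(learning_rate(0), learning_rate(499), learning_rate(500))  # 0.5 0.5 0.45
```

One matrix product per batch replaces 512 matrix-vector products, which is where most of the speedup of the vectorized version comes from.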

import numpy as np

import matplotlib.pyplot as plt

import copy

import math

from scipy.io import loadmat, savemat

# 读入样本数据(已经随机化处理)

def getData():

# 测试数据个数

Mtrain = 4500

data = loadmat('data_random.mat')

return data['Xtrain'], data['ytrain'], data['Xtest'], data['ytest']

# 展开成行向量

def dealY(y, siz):

m = y.shape[0]

res = np.mat(np.zeros([m, siz]))

for i in range(m):

res[i, y[i, 0] - 1] = 1

return res

# 可视化数据集

def displayData(X):

m, n = np.shape(X)

width = round(math.sqrt(np.size(X, 1)))

height = int(n / width)

drows = math.floor(math.sqrt(m))

dcols = math.ceil(m / drows)

pad = 1 # 建立一个空白“背景布”

darray = -1 * np.ones((pad + drows * (height + pad), pad + dcols * (width + pad)))

curr_ex = 0

for j in range(drows):

for i in range(dcols):

max_val = np.max(np.abs(X[curr_ex]))

darray[pad + j * (height + pad):pad + j * (height + pad) + height,

pad + i * (width + pad):pad + i * (width + pad) + width] \

= X[curr_ex].reshape((height, width)) / max_val

curr_ex += 1

if curr_ex >= m:

break

plt.imshow(darray.T, cmap='gray')

plt.show()

# 激活函数

def sigmoid(z):

return 1 / (1 + np.exp(-z))

def sigmoid_derivative(z):

return np.multiply(sigmoid(z), 1 - sigmoid(z))

# 神经网络结构

L = 2

S = [400, 25, 10]

def gradientDescent(X, y):

m, n = X.shape

mb_size = 512

tmpX = []

tmpy = []

for i in range(0, m, mb_size):

en = min(i + mb_size, m)

tmpX.append(X[i:en, ])

tmpy.append(y[i:en, ])

X = tmpX

y = tmpy

mb_num = X.__len__()

W = [-1, ]

B = [-1, ]

for i in range(1, L + 1):

W.append(np.mat(np.random.rand(S[i], S[i - 1])) * 0.01)

B.append(np.mat(np.zeros([S[i], 1], float)))

Z = []

for i in range(0, L + 1):

Z.append([])

rate = 5e-1 # 学习速率

lbda = 1e-1 # 惩罚力度

epoch = 10001

for T_epoch in range(epoch):

if (T_epoch>0 and T_epoch % 500 == 0):

rate*=0.9

for k in range(mb_num):

Z[0] = X[k].T

mb_size = X[k].shape[0]

for i in range(1, L + 1):

if (i > 1):

Z[i] = W[i] * sigmoid(Z[i - 1]) + B[i]

else:

Z[i] = W[i] * Z[i - 1] + B[i]

pw = -1

for i in range(L, 0, -1):

if i == L:

dz = sigmoid(Z[L]) - y[k].T

else:

dz = np.multiply(pw.T * dz, sigmoid_derivative(Z[i]))

dw = dz * (sigmoid(Z[i - 1]).T) / mb_size

dw += lbda*W[i]/mb_size

db = np.sum(dz, axis=1) / mb_size

pw = copy.copy(W[i])

W[i] -= rate * dw

B[i] -= rate * db

# CostFunction

if (T_epoch % 100 == 0):

Cost = np.sum(np.multiply(y[k].T, np.log(sigmoid(Z[L])))) + \

np.sum(np.multiply(1 - y[k].T, np.log(1 - sigmoid(Z[L]))))

Cost /= -mb_size

Add = 0

for l in range(1, L + 1):

T = np.multiply(W[l], W[l])

Add += np.sum(T)

Cost += Add * lbda / (2 * mb_size)

print(T_epoch, Cost)

savemat('results_minibatch_512siz.mat', mdict={'w1': W[1], 'w2': W[2], 'b1': B[1], 'b2': B[2]})

# 得出预测值

def getAnswer(X):

m, n = X.shape

Answer = []

data = loadmat('results_minibatch_512siz.mat')

W = [-1, np.mat(data['w1']), np.mat(data['w2'])]

B = [-1, np.mat(data['b1']), np.mat(data['b2'])]

Z = X.T

for i in range(1, L + 1):

if i > 1:

Z = sigmoid(Z)

Z = W[i] * Z + B[i]

A = sigmoid(Z)

for I in range(m):

mx = 0

for i in range(0, S[L]):

if A[i, I] > mx:

mx = A[i, I]

id = i + 1

Answer.append(id)

return Answer

if __name__ == "__main__":

Xtrain, ytrain, Xtest, ytest = getData()

mtrain, n = Xtrain.shape

mtest, n = Xtest.shape

print(mtrain, mtest)

# 向量化y

ytrainV = dealY(ytrain, 10)

# 训练参数

gradientDescent(Xtrain, ytrainV)

# 获取预测答案

Htrain = getAnswer(Xtrain)

Htest = getAnswer(Xtest)

# 随机展示测试结果

Nprin = False

if Nprin:

index = np.random.choice(np.random.permutation(mtest), 16)

part = Xtest[index] # 随机选16个画出

displayData(part)

print("对应答案值:") # 输出对应答案值

for i in range(16):

print(ytest[index[i], 0], end=' ')

print('')

if Nprin:

print("对应预测值:") # 输出对应预测值

for i in range(16):

print(Htest[index[i]], end=' ')

print('')

# 统计训练集和测试集的准确率

ct = 0

for i in range(mtrain):

if Htrain[i] == ytrain[i]:

ct += 1

print("train accuracy: ", 100 * ct / mtrain, "%")

ct = 0

for i in range(mtest):

if Htest[i] == ytest[i]:

ct += 1

print("test accuracy: ", 100 * ct / mtest, "%")

# 误差分析

error=np.mat(np.zeros([mtest-ct,n]))

ypredict=[]

yreal=[]

ar=0

for i in range(mtest):

if Htest[i] != ytest[i]:

error[ar,:]=Xtest[i,:]

ar+=1

ypredict.append(Htest[i])

yreal.append(ytest[i][0])

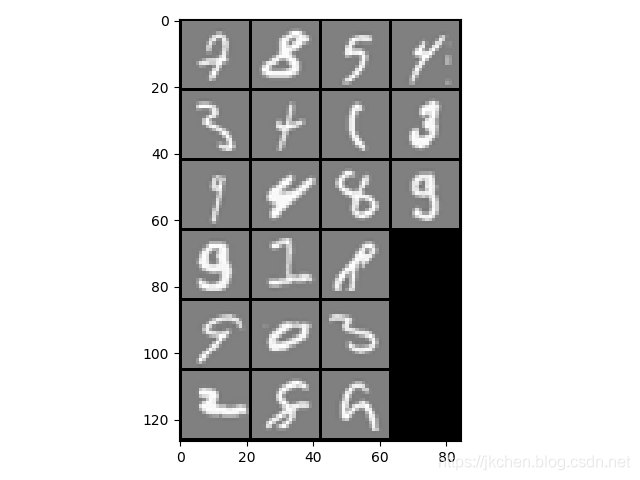

displayData(error)

print("real is : ",yreal)

print("predict is : ",ypredict)

Error visualization