import numpy as np

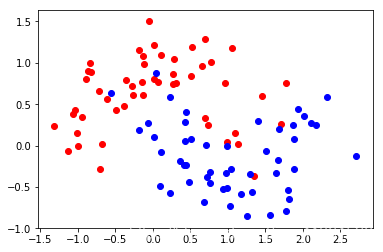

from sklearn import datasets

import matplotlib.pyplot as plt

X, y = datasets.make_moons(n_samples=100, noise=0.3)

plt.scatter(X[y == 0, 0], X[y == 0, 1], color='r')
plt.scatter(X[y == 1, 0], X[y == 1, 1], color='b')
plt.show()

from sklearn.model_selection import train_test_split

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=666, shuffle=True)

plt.scatter(X_train[y_train == 0, 0], X_train[y_train == 0, 1])
plt.scatter(X_train[y_train == 1, 0], X_train[y_train == 1, 1])
plt.scatter(X_test[y_test == 0, 0], X_test[y_test == 0, 1])
plt.scatter(X_test[y_test == 1, 0], X_test[y_test == 1, 1])
plt.show()

from sklearn.model_selection import cross_val_score
from sklearn.neighbors import KNeighborsClassifier

knn = KNeighborsClassifier(n_neighbors=5)
cross_val_score(knn, X, y, cv=3)

array([0.88235294, 0.85294118, 0.875 ])
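To make the three numbers above less opaque, here is a minimal sketch of what `cross_val_score` does for a classifier when `cv` is an integer: it splits the data with an unshuffled `StratifiedKFold`, fits a fresh copy of the estimator on each training part, and scores it on the held-out part. The `random_state=0` passed to `make_moons` is an assumption added only so the sketch is repeatable; the original cell does not seed the data, so the scores here will not match the output shown above.

```python
import numpy as np
from sklearn import datasets
from sklearn.base import clone
from sklearn.model_selection import StratifiedKFold, cross_val_score
from sklearn.neighbors import KNeighborsClassifier

# Assumption: a fixed seed, used only to make this sketch repeatable.
X, y = datasets.make_moons(n_samples=100, noise=0.3, random_state=0)
knn = KNeighborsClassifier(n_neighbors=5)

# Manual 3-fold cross-validation with stratified, unshuffled folds.
skf = StratifiedKFold(n_splits=3)
manual_scores = []
for train_idx, val_idx in skf.split(X, y):
    model = clone(knn)                      # fresh, unfitted copy per fold
    model.fit(X[train_idx], y[train_idx])   # train on two folds
    manual_scores.append(model.score(X[val_idx], y[val_idx]))  # accuracy on the third
manual_scores = np.array(manual_scores)

# The library call should produce the same per-fold accuracies.
library_scores = cross_val_score(knn, X, y, cv=3)
print(manual_scores)
print(library_scores)
```

Each entry is the accuracy on one held-out fold; the mean of the array is the quantity compared in the hyperparameter searches that follow.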

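%%time

best_k, best_p, best_score = 0, 0, 0

# Manual search over k (number of neighbors) and p (Minkowski exponent).
# cv is left at its default: 3 folds in the scikit-learn version that
# produced the output below, 5 folds since scikit-learn 0.22.
for k in range(2, 11):
    for p in range(1, 6):
        knn_clf = KNeighborsClassifier(weights="distance", n_neighbors=k, p=p)
        scores = cross_val_score(knn_clf, X_train, y_train)
        score = np.mean(scores)
        # Keep the combination with the highest mean cross-validation accuracy.
        if score > best_score:
            best_k, best_p, best_score = k, p, score

print("Best K =", best_k)
print("Best P =", best_p)
print("Best Score =", best_score)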

Best K = 8

Best P = 3

Best Score = 0.8874643874643874

Wall time: 236 ms

from sklearn.model_selection import GridSearchCV
from sklearn.neighbors import KNeighborsClassifier

param_grid = [
    {
        'weights': ['uniform'],
        'n_neighbors': [i for i in range(1, 11)]
    },
    {
        'weights': ['distance'],
        'n_neighbors': [i for i in range(1, 11)],
        'p': [i for i in range(1, 6)]
    }
]

grid_search = GridSearchCV(KNeighborsClassifier(), param_grid)

%%time

grid_search.fit(X_train, y_train)

Wall time: 375 ms

GridSearchCV(cv=None, error_score='raise',

estimator=KNeighborsClassifier(algorithm='auto', leaf_size=30, metric='minkowski',

metric_params=None, n_jobs=1, n_neighbors=5, p=2,

weights='uniform'),

fit_params=None, iid=True, n_jobs=1,

param_grid=[{'weights': ['uniform'], 'n_neighbors': [1, 2, 3, 4, 5, 6, 7, 8, 9, 10]}, {'weights': ['distance'], 'n_neighbors': [1, 2, 3, 4, 5, 6, 7, 8, 9, 10], 'p': [1, 2, 3, 4, 5]}],

pre_dispatch='2*n_jobs', refit=True, return_train_score='warn',

scoring=None, verbose=0)

grid_search.best_estimator_

KNeighborsClassifier(algorithm='auto', leaf_size=30, metric='minkowski',

metric_params=None, n_jobs=1, n_neighbors=8, p=3,

weights='distance')

grid_search.best_score_

0.8875
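`best_score_` is only a summary of the search. As a hedged sketch, the fitted `GridSearchCV` object also exposes `cv_results_` and `best_index_`, which show how every candidate scored. The seed passed to `make_moons` and the explicit `cv=3` are assumptions: the seed keeps the sketch repeatable, and `cv=3` mirrors the 3-fold default of the scikit-learn version that produced the outputs here. The numbers it prints will differ from those above.

```python
import numpy as np
from sklearn import datasets
from sklearn.model_selection import GridSearchCV, train_test_split
from sklearn.neighbors import KNeighborsClassifier

# Assumption: seeded data so the sketch is repeatable.
X, y = datasets.make_moons(n_samples=100, noise=0.3, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=666
)

param_grid = [
    {'weights': ['uniform'], 'n_neighbors': list(range(1, 11))},
    {'weights': ['distance'], 'n_neighbors': list(range(1, 11)),
     'p': list(range(1, 6))},
]

# Assumption: cv=3 to mirror the older 3-fold default.
grid_search = GridSearchCV(KNeighborsClassifier(), param_grid, cv=3)
grid_search.fit(X_train, y_train)

results = grid_search.cv_results_
best_idx = grid_search.best_index_

# Print the five best-ranked parameter combinations with their mean CV accuracy.
top5 = np.argsort(results['rank_test_score'])[:5]
for i in top5:
    print(results['params'][i], round(results['mean_test_score'][i], 4))
```

The grid holds 10 `uniform` candidates plus 10 × 5 `distance` candidates, which is where the 60 candidates reported in the verbose log come from.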

%%time

grid_search = GridSearchCV(KNeighborsClassifier(), param_grid, n_jobs=-1, verbose=4)
grid_search.fit(X_train, y_train)

Fitting 3 folds for each of 60 candidates, totalling 180 fits

[Parallel(n_jobs=-1)]: Done 9 tasks | elapsed: 3.9s

Wall time: 4.41 s

[Parallel(n_jobs=-1)]: Done 180 out of 180 | elapsed: 4.1s finished

grid_search.best_params_

{'n_neighbors': 8, 'p': 3, 'weights': 'distance'}

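# With refit=True (the default), best_estimator_ has already been refit on
# all of X_train, so the explicit fit below is redundant but harmless.
knn_clf = grid_search.best_estimator_
knn_clf.fit(X_train, y_train)
knn_clf.score(X_test, y_test)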

0.9
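A short sketch of an equivalent way to get the test score: because `refit=True`, the fitted `GridSearchCV` object can be scored directly, and it delegates to the refit best estimator. The seeded `make_moons` call, the smaller single-dictionary grid, and `cv=3` are assumptions made to keep the sketch quick and repeatable, so the score will not match the 0.9 shown above.

```python
from sklearn import datasets
from sklearn.model_selection import GridSearchCV, train_test_split
from sklearn.neighbors import KNeighborsClassifier

# Assumption: seeded data so the sketch is repeatable.
X, y = datasets.make_moons(n_samples=100, noise=0.3, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=666
)

# Assumption: a reduced grid, chosen only to keep the sketch fast.
param_grid = {
    'weights': ['distance'],
    'n_neighbors': list(range(1, 11)),
    'p': [1, 2, 3],
}

grid_search = GridSearchCV(KNeighborsClassifier(), param_grid, cv=3)
grid_search.fit(X_train, y_train)

# Scoring the search object uses the refit best estimator under the hood.
score_via_search = grid_search.score(X_test, y_test)
score_via_estimator = grid_search.best_estimator_.score(X_test, y_test)
print(score_via_search, score_via_estimator)
```

The test set is touched only once, after the hyperparameters are fixed, so this accuracy is an estimate of performance on unseen data rather than a quantity the search was tuned on.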

GridSearchCV(KNeighborsClassifier(), param_grid, n_jobs=-1, verbose=4, cv=4)

GridSearchCV(cv=4, error_score='raise',

estimator=KNeighborsClassifier(algorithm='auto', leaf_size=30, metric='minkowski',

metric_params=None, n_jobs=1, n_neighbors=5, p=2,

weights='uniform'),

fit_params=None, iid=True, n_jobs=-1,

param_grid=[{'weights': ['uniform'], 'n_neighbors': [1, 2, 3, 4, 5, 6, 7, 8, 9, 10]}, {'weights': ['distance'], 'n_neighbors': [1, 2, 3, 4, 5, 6, 7, 8, 9, 10], 'p': [1, 2, 3, 4, 5]}],

pre_dispatch='2*n_jobs', refit=True, return_train_score='warn',

scoring=None, verbose=4)