I. Introduction

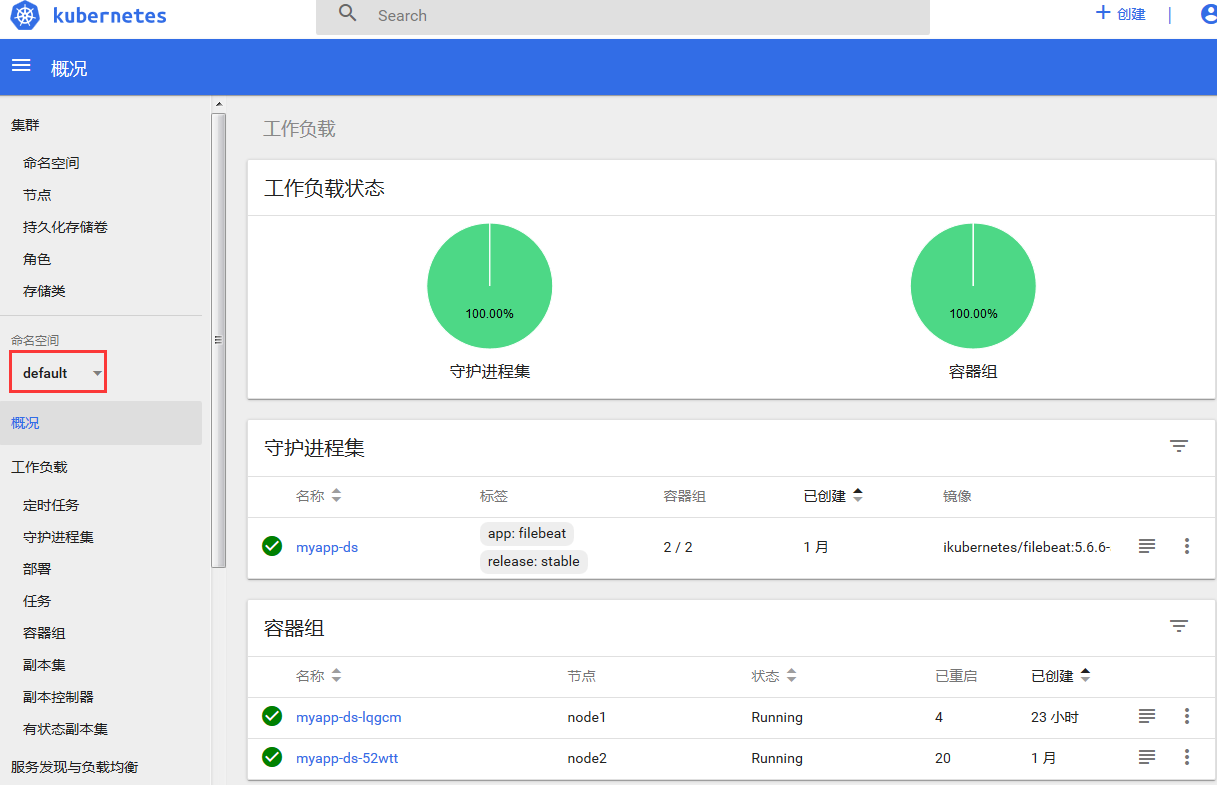

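Kubernetes Dashboard is a general-purpose, web-based UI for Kubernetes clusters. It lets users manage and troubleshoot the applications running in the cluster, as well as manage the cluster itself.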

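II. Deploying the Dashboard

GitHub: https://github.com/kubernetes/dashboard

Reference manifest: https://raw.githubusercontent.com/kubernetes/dashboard/v2.0.0-rc2/aio/deploy/recommended.yaml (this is the v2.0.0-rc2 manifest; the steps below deploy v1.10.1 using the YAML files already present in /etc/ansible/manifests/dashboard).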

1. Pull the dashboard image and push it to the Harbor registry

```bash
root@k8s-master1:~# docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kubernetes-dashboard-amd64:v1.10.1   # pull from Aliyun's mirror of Google's registry, which is much faster
root@k8s-master1:~# docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/kubernetes-dashboard-amd64:v1.10.1 harbor.struggle.net/baseimages/kubernetes-dashboard-amd64:v1.10.1   # tag the downloaded image for Harbor
root@k8s-master1:~# docker login harbor.struggle.net   # log in to the Harbor registry
Authenticating with existing credentials...
WARNING! Your password will be stored unencrypted in /root/.docker/config.json.
Configure a credential helper to remove this warning. See
https://docs.docker.com/engine/reference/commandline/login/#credentials-store

Login Succeeded
root@k8s-master1:~# docker push harbor.struggle.net/baseimages/kubernetes-dashboard-amd64:v1.10.1   # push the tagged image to Harbor
```
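The pull/tag/push sequence above always follows the same pattern, so it can be wrapped in a small helper. This is a minimal sketch, assuming the Harbor project `harbor.struggle.net/baseimages` used throughout this article and an upstream reference with an explicit tag; `to_harbor_ref` and `mirror_image` are hypothetical names, not part of Docker or Harbor.

```bash
#!/usr/bin/env bash
# Hypothetical helper: mirror upstream images into the local Harbor project.
set -euo pipefail

# Target project (assumption); override by exporting HARBOR_PROJECT.
HARBOR_PROJECT="${HARBOR_PROJECT:-harbor.struggle.net/baseimages}"

# Keep only the last path component (name:tag) and prefix the Harbor project.
to_harbor_ref() {
  local ref=$1
  printf '%s/%s\n' "$HARBOR_PROJECT" "${ref##*/}"
}

# Pull, retag and push one image (requires a prior `docker login` to Harbor).
mirror_image() {
  local src=$1 dst
  dst=$(to_harbor_ref "$src")
  docker pull "$src"
  docker tag "$src" "$dst"
  docker push "$dst"
}

# Mirror every reference given on the command line; does nothing without arguments.
for ref in "$@"; do
  mirror_image "$ref"
done
```

Saved as, say, `mirror-image.sh` (a hypothetical file name), `./mirror-image.sh registry.cn-hangzhou.aliyuncs.com/google_containers/kubernetes-dashboard-amd64:v1.10.1` would reproduce the three manual commands.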

2. Create a directory for this dashboard version and copy all YAML files from the dashboard directory into the new 1.10.1 directory.

```bash
root@k8s-master1:~# cd /etc/ansible/manifests/dashboard/
root@k8s-master1:/etc/ansible/manifests/dashboard# mkdir 1.10.1
root@k8s-master1:/etc/ansible/manifests/dashboard# cd 1.10.1/
root@k8s-master1:/etc/ansible/manifests/dashboard/1.10.1# cp ../*.yaml .
```

3. Edit kubernetes-dashboard.yaml so that the image is pulled from the local Harbor registry.

```bash
root@k8s-master1:/etc/ansible/manifests/dashboard/1.10.1# vim kubernetes-dashboard.yaml
```

```yaml
apiVersion: v1
kind: Secret
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-certs
  namespace: kube-system
type: Opaque

---
# ------------------- Dashboard Service Account ------------------- #

apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kube-system

---
# ------------------- Dashboard Role & Role Binding ------------------- #

kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: kubernetes-dashboard-minimal
  namespace: kube-system
rules:
  # Allow Dashboard to create 'kubernetes-dashboard-key-holder' secret.
- apiGroups: [""]
  resources: ["secrets"]
  verbs: ["create"]
  # Allow Dashboard to create 'kubernetes-dashboard-settings' config map.
- apiGroups: [""]
  resources: ["configmaps"]
  verbs: ["create"]
  # Allow Dashboard to get, update and delete Dashboard exclusive secrets.
- apiGroups: [""]
  resources: ["secrets"]
  resourceNames: ["kubernetes-dashboard-key-holder", "kubernetes-dashboard-certs"]
  verbs: ["get", "update", "delete"]
  # Allow Dashboard to get and update 'kubernetes-dashboard-settings' config map.
- apiGroups: [""]
  resources: ["configmaps"]
  resourceNames: ["kubernetes-dashboard-settings"]
  verbs: ["get", "update"]
  # Allow Dashboard to get metrics from heapster.
- apiGroups: [""]
  resources: ["services"]
  resourceNames: ["heapster"]
  verbs: ["proxy"]
- apiGroups: [""]
  resources: ["services/proxy"]
  resourceNames: ["heapster", "http:heapster:", "https:heapster:"]
  verbs: ["get"]

---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: kubernetes-dashboard-minimal
  namespace: kube-system
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: kubernetes-dashboard-minimal
subjects:
- kind: ServiceAccount
  name: kubernetes-dashboard
  namespace: kube-system

---
# ------------------- Dashboard Deployment ------------------- #

kind: Deployment
apiVersion: apps/v1beta2
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kube-system
spec:
  replicas: 1
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      k8s-app: kubernetes-dashboard
  template:
    metadata:
      labels:
        k8s-app: kubernetes-dashboard
    spec:
      containers:
      - name: kubernetes-dashboard
        #image: k8s.gcr.io/kubernetes-dashboard-amd64:v1.8.3
        image: harbor.struggle.net/baseimages/kubernetes-dashboard-amd64:v1.10.1   # the only change: point the image at the local Harbor registry
        ports:
        - containerPort: 8443
          protocol: TCP
        args:
          - --auto-generate-certificates
          - --token-ttl=43200   # token lifetime in seconds (12 hours), so logins do not expire too quickly
          # Uncomment the following line to manually specify Kubernetes API server Host
          # If not specified, Dashboard will attempt to auto discover the API server and connect
          # to it. Uncomment only if the default does not work.
          # - --apiserver-host=http://my-address:port
        volumeMounts:
        - name: kubernetes-dashboard-certs
          mountPath: /certs
          # Create on-disk volume to store exec logs
        - mountPath: /tmp
          name: tmp-volume
        livenessProbe:
          httpGet:
            scheme: HTTPS
            path: /
            port: 8443
          initialDelaySeconds: 30
          timeoutSeconds: 30
      volumes:
      - name: kubernetes-dashboard-certs
        secret:
          secretName: kubernetes-dashboard-certs
      - name: tmp-volume
        emptyDir: {}
      serviceAccountName: kubernetes-dashboard
      # Comment the following tolerations if Dashboard must not be deployed on master
      tolerations:
      - key: node-role.kubernetes.io/master
        effect: NoSchedule

---
# ------------------- Dashboard Service ------------------- #

kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
  name: kubernetes-dashboard
  namespace: kube-system
spec:
  ports:
    - port: 443
      targetPort: 8443
  selector:
    k8s-app: kubernetes-dashboard
  type: NodePort
```
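Because the intended change is the `image:` line, it is worth confirming that no active `image:` line still points outside Harbor before running `kubectl apply`. A minimal sketch using `sed` and `grep`; `non_harbor_images` is a hypothetical helper and the Harbor project `harbor.struggle.net/baseimages` is an assumption taken from the steps above.

```bash
#!/usr/bin/env bash
# Hypothetical check: list active image: references that do not point at Harbor.
set -euo pipefail

HARBOR_PROJECT="${HARBOR_PROJECT:-harbor.struggle.net/baseimages}"

non_harbor_images() {
  local manifest=$1
  # Capture the value of "image: x" or "- image: x" lines. Commented-out lines
  # such as "#image: ..." do not match, and trailing "# ..." comments are dropped.
  sed -n -E 's/^[[:space:]]*(-[[:space:]]+)?image:[[:space:]]*([^[:space:]#]+).*/\2/p' "$manifest" \
    | { grep -v -F "${HARBOR_PROJECT}/" || true; }
}
```

Running `non_harbor_images kubernetes-dashboard.yaml` should print nothing when every image comes from Harbor; any line it prints is an image the nodes would still try to pull from elsewhere.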

4. Create the dashboard

```bash
# kubectl apply -f .                 # create the dashboard
# kubectl get pods
# kubectl get pods -n kube-system    # check the dashboard pod status
# kubectl cluster-info               # show cluster information
Kubernetes master is running at https://192.168.7.248:6443
kubernetes-dashboard is running at https://192.168.7.248:6443/api/v1/namespaces/kube-system/services/https:kubernetes-dashboard:/proxy
```

Open the kubernetes-dashboard URL in a browser to log in. The username and password are those of the admin account created in part 5 of this Kubernetes series (password 123456).
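The dashboard URL printed by `kubectl cluster-info` follows the API server's service-proxy path, `/api/v1/namespaces/<namespace>/services/<scheme>:<service>:<port-name>/proxy`; the port-name field may be left empty when the Service port is unnamed, as it is here. A small sketch that rebuilds the URL; `proxy_url` is a hypothetical helper and the API server address is the one from this cluster.

```bash
#!/usr/bin/env bash
# Hypothetical helper: build an API-server service-proxy URL.
set -euo pipefail

# proxy_url APISERVER NAMESPACE SERVICE [SCHEME] [PORT_NAME]
# An empty PORT_NAME leaves the segment blank, as in "https:kubernetes-dashboard:".
proxy_url() {
  local apiserver=$1 ns=$2 svc=$3 scheme=${4:-https} port=${5:-}
  printf '%s/api/v1/namespaces/%s/services/%s:%s:%s/proxy\n' \
    "$apiserver" "$ns" "$scheme" "$svc" "$port"
}
```

For example, `proxy_url https://192.168.7.248:6443 kube-system kubernetes-dashboard` yields the same URL as the `cluster-info` output above.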

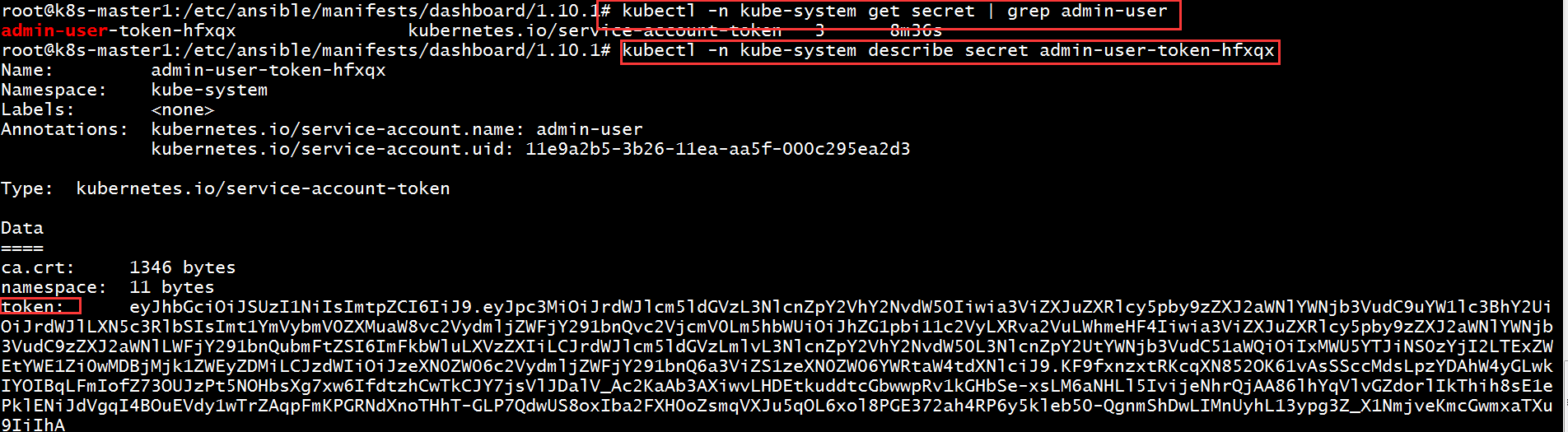

5. Get a token and use it to log in to the dashboard

```bash
root@k8s-master1:/etc/ansible/manifests/dashboard/1.10.1# kubectl -n kube-system get secret | grep admin-user   # find the admin user's token secret
admin-user-token-hfxqx                           kubernetes.io/service-account-token   3      8m36s
root@k8s-master1:/etc/ansible/manifests/dashboard/1.10.1# kubectl -n kube-system describe secret admin-user-token-hfxqx   # describe that secret; its token is printed below
Name:         admin-user-token-hfxqx
Namespace:    kube-system
Labels:       <none>
Annotations:  kubernetes.io/service-account.name: admin-user
              kubernetes.io/service-account.uid: 11e9a2b5-3b26-11ea-aa5f-000c295ea2d3

Type:  kubernetes.io/service-account-token

Data
====
ca.crt:     1346 bytes
namespace:  11 bytes
token:      eyJhbGciOiJSUzI1NiIsImtpZCI6IiJ9.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlLXN5c3RlbSIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJhZG1pbi11c2VyLXRva2VuLWhmeHF4Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQubmFtZSI6ImFkbWluLXVzZXIiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC51aWQiOiIxMWU5YTJiNS0zYjI2LTExZWEtYWE1Zi0wMDBjMjk1ZWEyZDMiLCJzdWIiOiJzeXN0ZW06c2VydmljZWFjY291bnQ6a3ViZS1zeXN0ZW06YWRtaW4tdXNlciJ9.KF9fxnzxtRKcqXN852OK61vAsSSccMdsLpzYDAhW4yGLwkIYOIBqLFmIofZ73OUJzPt5NOHbsXg7xw6IfdtzhCwTkCJY7jsVlJDalV_Ac2KaAb3AXiwvLHDEtkuddtcGbwwpRv1kGHbSe-xsLM6aNHLl5IvijeNhrQjAA86lhYqVlvGZdorlIkThih8sE1ePklENiJdVgqI4BOuEVdy1wTrZAqpFmKPGRNdXnoTHhT-GLP7QdwUS8oxIba2FXH0oZsmqVXJu5qOL6xol8PGE372ah4RP6y5kleb50-QgnmShDwLIMnUyhL13ypg3Z_X1NmjveKmcGwmxaTXu9IiIhA
```
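The token is a JSON Web Token: its middle segment is base64url-encoded JSON whose `sub` claim names the service account. A sketch for reading those claims, assuming GNU coreutils `base64 -d`; `jwt_payload` is a hypothetical helper and it only decodes the claims, it does not verify the signature.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the (unverified) claims of a service-account token.
set -euo pipefail

jwt_payload() {
  local payload
  # Take the second dot-separated segment; base64url uses '-' and '_'
  # where standard base64 uses '+' and '/'.
  payload=$(printf '%s' "$1" | cut -d. -f2 | tr '_-' '/+')
  # base64url drops the '=' padding; restore it so base64 -d accepts the input.
  case $(( ${#payload} % 4 )) in
    2) payload="${payload}==" ;;
    3) payload="${payload}=" ;;
  esac
  printf '%s' "$payload" | base64 -d
}

# Usage against the cluster (not run here):
#   token=$(kubectl -n kube-system get secret admin-user-token-hfxqx -o jsonpath='{.data.token}' | base64 -d)
#   jwt_payload "$token"   # the claims should name system:serviceaccount:kube-system:admin-user
```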

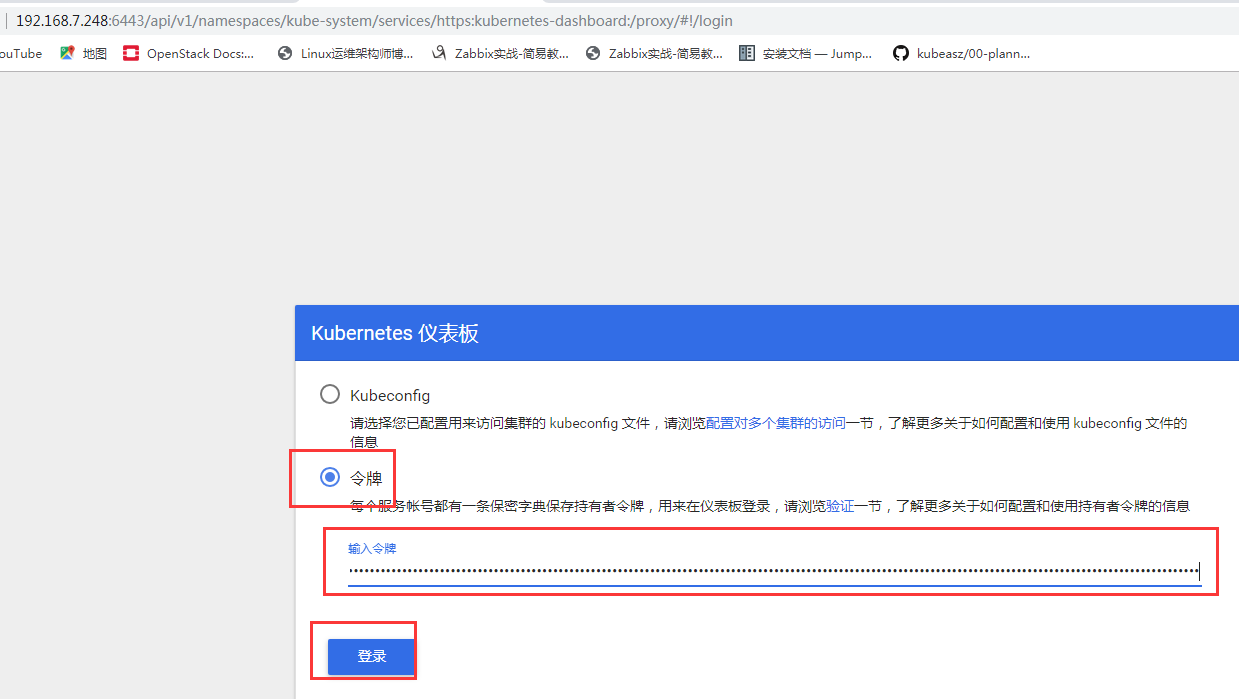

Open the URL shown above, https://192.168.7.248:6443/api/v1/namespaces/kube-system/services/https:kubernetes-dashboard:/proxy, and the login page appears.

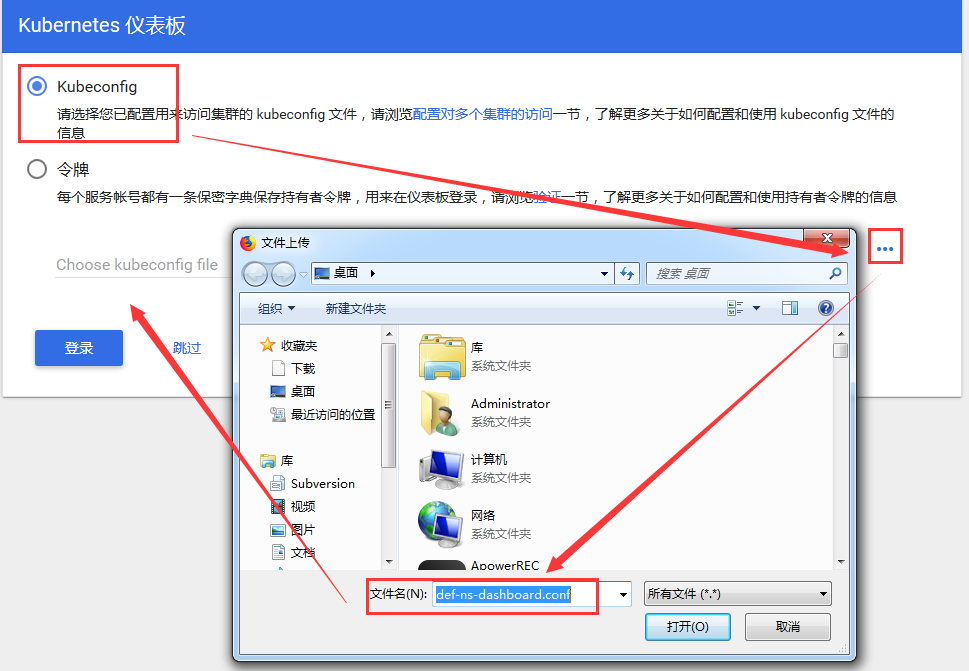

Logging in directly with a kubeconfig file

1. Copy /root/.kube/config to /opt/kubeconfig, add the token as the last line of the copy, and then export that file.

```bash
root@k8s-master1:~/.kube# cd /root/.kube
root@k8s-master1:~/.kube# ls
cache  config  http-cache
root@k8s-master1:~/.kube# cp config /opt/kubeconfig   # make a copy first, then add the generated token to it
root@k8s-master1:~/.kube# vim /opt/kubeconfig
```

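2. Add the token on a new line at the very bottom, indented four spaces and written as `token: <token>`, so that it sits under the admin user's `user:` entry next to `client-key-data`:

```bash
root@k8s-master1:~/.kube# vim /opt/kubeconfig
```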

```yaml
apiVersion: v1
clusters:
- cluster:
    certificate-authority-data: LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSUR0akNDQXA2Z0F3SUJBZ0lVRldoTlU5S21ZUUwyZDdFK25zQnVKZ1Z0WXY0d0RRWUpLb1pJaHZjTkFRRUwKQlFBd1lURUxNQWtHQTFVRUJoTUNRMDR4RVRBUEJnTlZCQWdUQ0VoaGJtZGFhRzkxTVFzd0NRWURWUVFIRXdKWQpVekVNTUFvR0ExVUVDaE1EYXpoek1ROHdEUVlEVlFRTEV3WlRlWE4wWlcweEV6QVJCZ05WQkFNVENtdDFZbVZ5CmJtVjBaWE13SGhjTk1qQXdNVEU1TURjME56QXdXaGNOTXpVd01URTFNRGMwTnpBd1dqQmhNUXN3Q1FZRFZRUUcKRXdKRFRqRVJNQThHQTFVRUNCTUlTR0Z1WjFwb2IzVXhDekFKQmdOVkJBY1RBbGhUTVF3d0NnWURWUVFLRXdOcgpPSE14RHpBTkJnTlZCQXNUQmxONWMzUmxiVEVUTUJFR0ExVUVBeE1LYTNWaVpYSnVaWFJsY3pDQ0FTSXdEUVlKCktvWklodmNOQVFFQkJRQURnZ0VQQURDQ0FRb0NnZ0VCQU01Q0NJeGRkMm5reHdndGphVnExSFlBaGlJRE5ubWIKSGlQb0VqQTIzekpKQXhpYjVoNG9RNHNOZ2pzTDY5RHVLcHpSWk2c1doWmRGbXd1K0Y1TFRLOGdibE04UngyZQpZYk9zUmlsNGgrWSt0VHZlbU5rSXZzZzNnOFZ3RmJzVU1uNzVtUE5WK1NkdlR2SVBSaWUxYVZsT1ppRlcvRFhaClEyYXZBeW9tR2hIcXJQM1poUFhyaFhtQ1NTMWF3Y3gzck5SVnl3ZzA0Yms5cWQ0b3VsSGkvVTl0RjdFZ1ZhUWMKRE93c1kwTEFTa0MyMEo2QkJGSWxQcUdkNzJzREdvVTBIVkg5REFZc2VZUTlpaXBQS3VYRjNYQmpNVVJ2cFc0Qgp4VHMyZmVVTytqQ25uU3Z0aWVGTk9TZ1VFekhjL2NxamVENDdvZ1ljZEx5UEthL0FyUTBSdEhrQ0F3RUFBYU5tCk1HUXdEZ1lEVlIwUEFRSC9CQVFEQWdFR01CSUdBMVVkRXdFQi93UUlNQVlCQWY4Q0FRSXdIUVlEVlIwT0JCWUUKRkcxWmxWNEVyK011UWFXdEhkT2s0SjdPdndrZU1COEdBMVVkSXdRWU1CYUFGRzFabFY0RXIrTXVRYVd0SGRPawo0SjdPdndrZU1BMEdDU3FHU0liM0RRRUJDd1VBQTRJQkFRRE5jSmUyeXNqQlNNTVowdGxVVndONkhNN2Z4dHlTCjNNaFF0SVp4QVFRSWswb1RBUGZ3eGpuYmg3dFFhV2s1MUhaNWs0MWdPV09qRkVrVkZMdXFPOEtLaS9iTWtKbW0Kd1dOYlVJa3ZUQlozME1WK3pjSUluU1pseFlYUUNZN1pPTnVRdTZHSlI3Z3BFb2VadGlFWlVFTEM2NjlMU0FoNgp1eVZZdlM1dzRiRGc2QVRqeE03MkZ5Tlh4NUtNcTdlcjZIZk1hUnZEWFoybTI0bW5mV3JJSWhKb045NzNOSmJmCldBVXRwN3dqV1UvOFByK2JwVnc3Tm9kV2h0MFRNbmYwL2hRdHd5OWJOOE1VUDhDK21lR0ZsYXJnem9LVEJTMm0KY09rU0gveDMxaURGQmsrMHdhMXFGU0tIYndyaGUrWUZ5UTRza2xncVl3TXhkQjJ3a3FEWk5oT2YKLS0tLS1FTkQgQ0VSVElGSUNBVEUtLS0tLQo=
    server: https://192.168.7.248:6443
  name: kubernetes
contexts:
- context:
    cluster: kubernetes
    user: admin
  name: kubernetes
current-context: kubernetes
kind: Config
preferences: {}
users:
- name: admin
  user:
    client-certificate-data: LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSUQxVENDQXIyZ0F3SUJBZ0lVTTVub2lJeTJ4KzIvMW9EdzJZK0VRaFFtVzBZd0RRWUpLb1pJaHZjTkFRRUwKQlFBd1lURUxNQWtHQTFVRUJoTUNRMDR4RVRBUEJnTlZCQWdUQ0VoaGJtZGFhRzkxTVFzd0NRWURWUVFIRXdKWQpVekVNTUFvR0ExVUVDaE1EYXpoek1ROHdEUVlEVlFRTEV3WlRlWE4wWlcweEV6QVJCZ05WQkFNVENtdDFZbVZ5CmJtVjBaWE13SGhjTk1qQXdNVEU1TURnd05qQXdXaGNOTXpBd01URTJNRGd3TmpBd1dqQm5NUXN3Q1FZRFZRUUcKRXdKRFRqRVJNQThHQTFVRUNCTUlTR0Z1WjFwb2IzVXhDekFKQmdOVkJBY1RBbGhUTVJjd0ZRWURWUVFLRXc1egplWE4wWlcwNmJXRnpkR1Z5Y3pFUE1BMEdBMVVFQ3hNR1UzbHpkR1Z0TVE0d0RBWURWUVFERXdWaFpHMXBiakNDCkFTSXdEUVlKS29aSWh2Y05BUUVCQlFBRGdnRVBBRENDQVFvQ2dnRUJBTE9scVBJWGthL0ZMbko4eDdxcUl0TjMKVHF5bkxHQTRDMGR0QXlLc20wTkUzbmFPTmxSUlZPencvZksvVExyY2pWNDFRMWRrN0txLytXU0VMbDN4Qkl0UwpFREV1M0t6SG02NzZua0RsaWNNbUxGeW1ia3lybGFONDJSS3lqMWdKdTNROUU0ZktWOGFDdnpObEdqUk9QemJNCmhISStnTFpHRUZBUDdOR0N2ZXZVK1F6aFFKQklESjNtbzY0R0RGZEs3QXVFaWsvUThqaHFkWk9iUTlmWE93ZmYKWjAvWkNPQlE5aGo4amIvbm5hM3l2UUt6QTR6SS82ZS9VdVZhQnlMaENKaTdSZXhGSDVWYW4zMVY5MXNlU3pETgpsTEVDWkpyRnN0SW02dGtJUk95OTV4dnM1S1VxRDUyMzJJMXVmUzRoRmJab01BWXYreWhyaWdGZWh3SXQ2Z3NDCkF3RUFBYU4vTUgwd0RnWURWUjBQQVFIL0JBUURBZ1dnTUIwR0ExVWRKUVFXTUJRR0NDc0dBUVVGQndNQkJnZ3IKQmdFRkJRY0RBakFNQmdOVkhSTUJBZjhFQWpBQU1CMEdBMVVkRGdRV0JCUzZsNTBLZGc3d3cwQmxKQWhRdlZCRQpRWDI4d1RBZkJnTlZIU01FR0RBV2dCUnRXWlZlQksvakxrR2xyUjNUcE9DZXpyOEpIakFOQmdrcWhraUc5dzBCCkFRc0ZBQU9DQVFFQVVjOVdTb2V4d2N4bk1Pb2JYcXpnZjQyZEtNWFp5QUU5djBtVXgvNUFGWVo5QnhmSm93VnMKa0d5dllRMS9kQUkwYnVpeEgrS0JTN1JKRW13VWk5bi91d2FCNHJUS3dVTld1YWV2ZHIzaXcwbWw1U0dGKzUrcQpsc2RYaFdjMDRTWFBMNnNqYjZrSThwYzI5RE9meXBNMTI1UFNCMW1paVVNN3gwVmVBN0NvZ1RSU2ErZmlIUld1Ck44Y0FQbnVBSXJJdDFjU2xEN3lOMGFwL3orSWdtL2RPTHk0VWdFaHdaMkJNSmxXYXc3UWtTZnF4UU8vYnVzK3AKdEdUMEE3TGRsTFVKT3Z1Y0JIRGxaZUE1b2ZtTmhwaGhocTJPVTVld1lrSmlFN0xZOG5BTXF1YU1CUWs3VkpqNQpuR3NZRU5uaWpGZDU5MnRFWDZuOFcvTkVJazZ5YVpmT1V3PT0KLS0tLS1FTkQgQ0VSVElGSUNBVEUtLS0tLQo=
    client-key-data: LS0tLS1CRUdJTiBSU0EgUFJJVkFURSBLRVktLS0tLQpNSUlFb3dJQkFBS0NBUUVBczZXbzhoZVJyOFV1Y256SHVxb2kwM2RPcktjc1lEZ0xSMjBESXF5YlEwVGVkbzQyClZGRlU3UEQ5OHI5TXV0eU5YalZEVjJUc3FyLzVaSVF1WGZFRWkxSVFNUzdjck1lYnJ2cWVRT1dKd3lZc1hLWnUKVEt1Vm8zalpFcktQV0FtN2REMFRoOHBYeG9LL00yVWFORTQvTnN5RWNqNkF0a1lRVUEvczBZSzk2OVQ1RE9GQQprRWdNbmVhanJnWU1WMHJzQzRTS1Q5RHlPR3AxazV0RDE5YzdCOTluVDlrSTRGRDJHUHlOditlZHJmSzlBck1ECmpNai9wNzlTNVZvSEl1RUltTHRGN0VVZmxWcWZmVlgzV3g1TE1NMlVzUUprbXNXeTBpYnEyUWhFN0wzbkcremsKcFNvUG5iZllqVzU5TGlFVnRtZ3dCaS83S0d1S0FWNkhBaTNxQ3dJREFRQUJBb0lCQUNmaGplajRySUg5M3pyUgpVckd3MVh4bWp4T3JKU0hUTDBYVEFKQXhDMkxhcGh4aG85UUNIajFmbnJER0lTMldpdGV3TVZ2MGJzblZSbk0zCkdPZ0grbjlIWDRyYm9XZkdCRnpMREZqUjNFeHpiQVBCZXRrUWVtN0pCRCt2WGowb0kvSkJJTlhUYUxzTUNaQksKVUkvdUV3Q0NWS0RjR1V6ZHJ2OW5HYWJGUkk1VzRSejdoZFRaNEY1bHpEWmRQZUMzd09tN0QvbE53VFgyeTdtbwpvU2YxRzVCUmh6MVdpKzBNT2ZZakdhdVl5TGpodnV5MmF5TDhDVTlCaW5UYXBNTTlPR2xzMVJ1YUdkMXdmcHFKCkJNNmJlMnpKTGZJZGQrQXBGbXFPRkNEWStIZXpUU0ZneHFOYlo4cHhoRTVOcUUxZ3FyQjdHYVQyaWRHdFg1WFQKcVdneUsza0NnWUVBMmFrU1dqN0FhNy8zUDI2RU1IVlB5RVRvR3MvMkZvVDY0NUx2WVNGb2xBdFZHUXk3eFdFdwpwL29ZRm5HYkxneFB5N3U3YkRkTkZCYXZSVGZNQUJ3VzJ5K3JvdXZLY2p5T0NqOXZPZG9yWTJCdTZidWhWUTZ3CkVEZ1l5cXVYWm95aU43MkdhZmlWWDBzYUJpR2l4ODhHTXhVcFBjNlo1WmNvcTNVcjE1RlJhNzhDZ1lFQTAwcDEKalV1UjZ3S3NDc1Zpa2NtMGxtTEJmMC9BeGJZVE4wVnBZSWQ3YytxT1NrVXh3ZzFWQVJBS1kyQVA3N2RwYUcxago0TGZ5VllwY2d3ak43Sm1PVGltSlNMcHRVRk9SSUZYYkVYb2QyeWhzK2xQY1h5T2Vrb3NPRDVZSEx1cXlMeU1JCnRod3dqSWtqNDFudnplRzM4WU5qd1JJNk5Bb1dhdjQ3UUJTQ1JMVUNnWUVBeGNoOGhNVEExU2g2NDRqcFZaOUUKQUJlZFViL25QazlZSzdNNUVtbnBQWjJPbGxTYnk1K2xOdjVySlBuV3FPRkhJVHBWOU4vTGlwV1Nick5sRERSNgpFSElnNU1xZUMzQTdJZFRDblM5Q2Zlc0MzaUZCV0trZ0U1emw2a1JDTDAxYm1vcjl1UTNKcmUzd2wrRzRxUmZWCjZsVXdSSm1YL3FoOHJGQ3NwaFhHaHNFQ2dZQjVqclRpZlQrTnZSUE5mcEdlM2pTTzhISHlGS2dMRngwbkIwQUEKMFBFdFZ4eFZqa2w2SXNGc3d4VzI1bVZFdkhoZ0k4NzZVZG1SYlBDY1VreG1lbEZzbG1qczlwUTlTbGFNQzlqawp6U1N3R1NuWk9yWGw1bEFzYnVQQUE4aE9MYWdsaGpwVXl4TURSMExtWWErYyt1Y2dnejY0clF2Zk5JNkJMNUpXCjQzV3VvUUtCZ0M1Qm9ud3JPRVREajNFVmJHcWZ1ejVzVERZZVJkY1hWUUJjeTlsVSsyQjBOSnZIUDFzbWdqSkwKM3pZUDYrRk1UR0R6cXA3Ymw1MEFXZnFjSTVTMHM2cldrVGtxUVFnK1RteVNkQ3NrUXZIUmJkMExrcStrbjNLTwpQS09CR05DQVhFbDBlREQvN1d0RDh2d013NzU5bTNpbnN1RHpiTVNFWFVkM3NIcjBYbHlBCi0tLS0tRU5EIFJTQSBQUklWQVRFIEtFWS0tLS0tCg==
    token: eyJhbGciOiJSUzI1NiIsImtpZCI6IiJ9.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlLXN5c3RlbSIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJhZG1pbi11c2VyLXRva2VuLWhmeHF4Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQubmFtZSI6ImFkbWluLXVzZXIiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC51aWQiOiIxMWU5YTJiNS0zYjI2LTExZWEtYWE1Zi0wMDBjMjk1ZWEyZDMiLCJzdWIiOiJzeXN0ZW06c2VydmljZWFjY291bnQ6a3ViZS1zeXN0ZW06YWRtaW4tdXNlciJ9.KF9fxnzxtRKcqXN852OK61vAsSSccMdsLpzYDAhW4yGLwkIYOIBqLFmIofZ73OUJzPt5NOHbsXg7xw6IfdtzhCwTkCJY7jsVlJDalV_Ac2KaAb3AXiwvLHDEtkuddtcGbwwpRv1kGHbSe-xsLM6aNHLl5IvijeNhrQjAA86lhYqVlvGZdorlIkThih8sE1ePklENiJdVgqI4BOuEVdy1wTrZAqpFmKPGRNdXnoTHhT-GLP7QdwUS8oxIba2FXH0oZsmqVXJu5qOL6xol8PGE372ah4RP6y5kleb50-QgnmShDwLIMnUyhL13ypg3Z_X1NmjveKmcGwmxaTXu9IiIhA
```
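The manual edit in step 2 can also be scripted. This is a minimal sketch, assuming (as in the file above) that the admin user's `user:` block is the last thing in the kubeconfig, so that appending a four-space-indented `token:` line places it under that user; `add_token_to_kubeconfig` is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Hypothetical helper: copy a kubeconfig and append a bearer token to its last user entry.
# Assumes the target user's "user:" mapping is the final block of the file.
set -euo pipefail

add_token_to_kubeconfig() {
  local src=$1 dest=$2 token=$3
  cp "$src" "$dest"
  if grep -q '^    token:' "$dest"; then
    echo "a token line already exists in $dest" >&2
    return 1
  fi
  # Avoid gluing the new line onto an unterminated last line.
  if [ -n "$(tail -c1 "$dest")" ]; then
    echo >> "$dest"
  fi
  # Four spaces of indentation put "token:" at the same level as client-key-data.
  printf '    token: %s\n' "$token" >> "$dest"
}
```

Usage would be `add_token_to_kubeconfig /root/.kube/config /opt/kubeconfig "$TOKEN"`. Alternatively, `kubectl config set-credentials admin --token="$TOKEN" --kubeconfig=/opt/kubeconfig` makes the same change through kubectl itself and does not depend on the order of the blocks in the file.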

3. Copy the modified /opt/kubeconfig to your desktop, then import it on the dashboard login page.

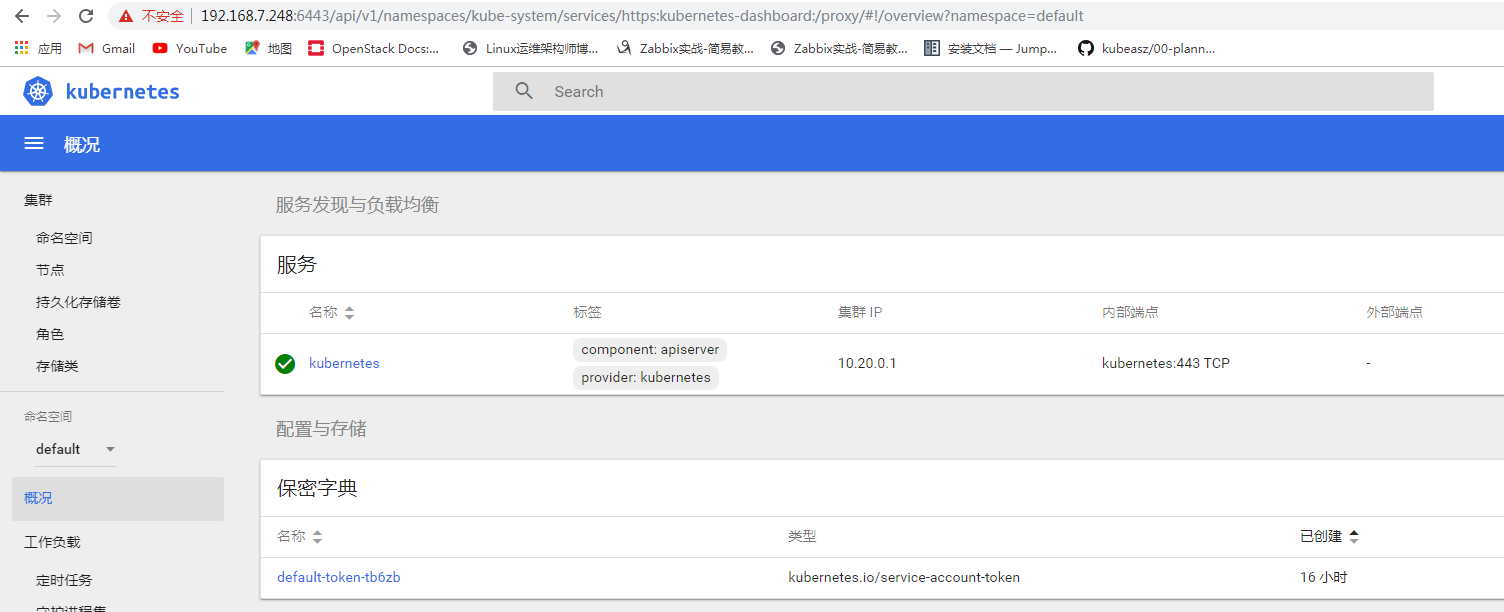

Login succeeds, but the account only has admin rights in the default namespace.

III. Deploying kube-dns

Official download page on GitHub: https://github.com/kubernetes/kubernetes/blob/master/CHANGELOG-1.13.md#v1138

1. Download the following four packages:

```
kubernetes.tar.gz
kubernetes-client-linux-amd64.tar.gz
kubernetes-server-linux-amd64.tar.gz
kubernetes-node-linux-amd64.tar.gz
```

2. Extract the downloaded packages into the working directory

```bash
root@k8s-master1:# cd /usr/local/src
root@k8s-master1:# tar xf kubernetes-node-linux-amd64.tar.gz
root@k8s-master1:# tar xf kubernetes-server-linux-amd64.tar.gz
root@k8s-master1:# tar xf kubernetes.tar.gz
root@k8s-master1:# tar xf kubernetes-client-linux-amd64.tar.gz
```
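Extracting by explicit file name, after checking that every archive is present, catches mix-ups such as a macOS (`darwin`) client archive being used in place of the Linux one. A sketch, assuming all archives sit in one directory and are extracted into it; `extract_packages` is a hypothetical name.

```bash
#!/usr/bin/env bash
# Hypothetical helper: extract the listed .tar.gz archives from DIR into DIR.
set -euo pipefail

# extract_packages DIR ARCHIVE...
extract_packages() {
  local dir=$1 pkg
  shift
  # Check every archive first so nothing is extracted if one is missing.
  for pkg in "$@"; do
    [ -f "$dir/$pkg" ] || { echo "missing archive: $dir/$pkg" >&2; return 1; }
  done
  for pkg in "$@"; do
    tar -xzf "$dir/$pkg" -C "$dir"
  done
}

# Example (not run here):
#   extract_packages /usr/local/src kubernetes.tar.gz kubernetes-client-linux-amd64.tar.gz \
#     kubernetes-server-linux-amd64.tar.gz kubernetes-node-linux-amd64.tar.gz
```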

The images and files that need to be downloaded:

```bash
root@k8s-master1:/etc/ansible/manifests/dns/kube-dns# ll
total 649260
-rw-r--r-- 1 root root  3983872 Jul 10  2019 busybox-online.tar.gz
-rw-r--r-- 1 root root 41687040 Jul 10  2019 k8s-dns-dnsmasq-nanny-amd64_1.14.13.tar.gz   # DNS cache; reduces load on kubedns and improves performance
-rw-r--r-- 1 root root 51441152 Jul 10  2019 k8s-dns-kube-dns-amd64_1.14.13.tar.gz         # resolves Service names
-rw-r--r-- 1 root root 43140608 Jul 10  2019 k8s-dns-sidecar-amd64_1.14.13.tar.gz          # periodically health-checks kubedns and dnsmasq
```

3. Push the downloaded images to the local Harbor registry

```bash
root@k8s-master1:/etc/ansible/manifests/dns/kube-dns# docker login harbor.struggle.net   # log in to Harbor

# Push the kube-dns image to the local Harbor
root@k8s-master1:/etc/ansible/manifests/dns/kube-dns# docker load -i k8s-dns-kube-dns-amd64_1.14.13.tar.gz   # load the image into the local Docker daemon
root@k8s-master1:/etc/ansible/manifests/dns/kube-dns# docker images   # list the local images
REPOSITORY                                                                       TAG       IMAGE ID       CREATED         SIZE
alpine                                                                           latest    e7d92cdc71fe   2 days ago      5.59MB
harbor.struggle.net/baseimages/alpine                                            latest    e7d92cdc71fe   2 days ago      5.59MB
harbor.struggle.net/baseimages/kubernetes-dashboard-amd64                        latest    f9aed6605b81   13 months ago   122MB
harbor.struggle.net/baseimages/kubernetes-dashboard-amd64                        v1.10.1   f9aed6605b81   13 months ago   122MB
registry.cn-hangzhou.aliyuncs.com/google_containers/kubernetes-dashboard-amd64   v1.10.1   f9aed6605b81   13 months ago   122MB
harbor.struggle.net/baseimages/calico-node                                       v3.3.2    4e9be81e3a59   13 months ago   75.3MB
harbor.struggle.net/baseimages/calico/node                                       v3.3.2    4e9be81e3a59   13 months ago   75.3MB
calico/node                                                                      v3.3.2    4e9be81e3a59   13 months ago   75.3MB
calico/cni                                                                       v3.3.2    490d921fa49c   13 months ago   75.4MB
harbor.struggle.net/baseimages/calico-cni                                        v3.3.2    490d921fa49c   13 months ago   75.4MB
harbor.struggle.net/baseimages/calico/cni                                        v3.3.2    490d921fa49c   13 months ago   75.4MB
calico/kube-controllers                                                          v3.3.2    22c16a9aecce   13 months ago   56.5MB
harbor.struggle.net/baseimages/calico-kube-controllers                           v3.3.2    22c16a9aecce   13 months ago   56.5MB
harbor.struggle.net/baseimages/calico/kube-controllers                           v3.3.2    22c16a9aecce   13 months ago   56.5MB
gcr.io/google-containers/k8s-dns-kube-dns-amd64                                  1.14.13   82f954458b31   16 months ago   51.2MB
harbor.struggle.net/baseimages/pause-amd64                                       3.1       da86e6ba6ca1   2 years ago     742kB
registry.cn-hangzhou.aliyuncs.com/google_containers/pause-amd64                  3.1       da86e6ba6ca1   2 years ago     742kB
root@k8s-master1:/etc/ansible/manifests/dns/kube-dns# docker tag 82f954458b31 harbor.struggle.net/baseimages/k8s-dns-kube-dns-amd64:v1.14.13   # tag the image for Harbor
root@k8s-master1:/etc/ansible/manifests/dns/kube-dns# docker push harbor.struggle.net/baseimages/k8s-dns-kube-dns-amd64:v1.14.13   # push it to the local Harbor

# Push the k8s-dns-dnsmasq-nanny image to Harbor
root@k8s-master1:/etc/ansible/manifests/dns/kube-dns# docker load -i k8s-dns-dnsmasq-nanny-amd64_1.14.13.tar.gz   # load the image into Docker first
root@k8s-master1:/etc/ansible/manifests/dns/kube-dns# docker tag 7b15476a7228 harbor.struggle.net/baseimages/k8s-dns-dnsmasq-nanny-amd64:v1.14.13   # tag the image
root@k8s-master1:/etc/ansible/manifests/dns/kube-dns# docker push harbor.struggle.net/baseimages/k8s-dns-dnsmasq-nanny-amd64:v1.14.13   # push it to the local Harbor

# Push the sidecar image to Harbor
root@k8s-master1:/etc/ansible/manifests/dns/kube-dns# docker load -i k8s-dns-sidecar-amd64_1.14.13.tar.gz
root@k8s-master1:/etc/ansible/manifests/dns/kube-dns# docker tag 333fb0833870 harbor.struggle.net/baseimages/k8s-dns-sidecar-amd64:v1.14.13
root@k8s-master1:/etc/ansible/manifests/dns/kube-dns# docker push harbor.struggle.net/baseimages/k8s-dns-sidecar-amd64:v1.14.13
```
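The three load/tag/push sequences differ only in the archive name, so they can be looped. This sketch reads the image name from the `Loaded image: <ref>` line that `docker load` prints when an archive was saved with a name, and mirrors the author's choice of a `v`-prefixed tag in Harbor. Archives saved by image ID print `Loaded image ID: ...` instead and still have to be tagged by ID as above; the helper names and the Harbor project are assumptions.

```bash
#!/usr/bin/env bash
# Hypothetical helper: load image archives and push them to Harbor with a "v"-prefixed tag.
set -euo pipefail

HARBOR_PROJECT="${HARBOR_PROJECT:-harbor.struggle.net/baseimages}"

# Read `docker load` output on stdin; print the reference from "Loaded image: <ref>".
loaded_image_ref() {
  sed -n 's/^Loaded image: //p'
}

# .../k8s-dns-kube-dns-amd64:1.14.13 -> $HARBOR_PROJECT/k8s-dns-kube-dns-amd64:v1.14.13
# (assumes the reference carries an explicit tag)
harbor_ref_v() {
  local name_tag=${1##*/}
  local tag=${name_tag##*:}
  printf '%s/%s:v%s\n' "$HARBOR_PROJECT" "${name_tag%:*}" "${tag#v}"
}

push_tarball() {
  local tarball=$1 src dst
  src=$(docker load -i "$tarball" | loaded_image_ref)
  src=${src%%$'\n'*}   # keep the first loaded image only
  if [ -z "$src" ]; then
    echo "no named image in $tarball; tag it by image ID instead" >&2
    return 1
  fi
  dst=$(harbor_ref_v "$src")
  docker tag "$src" "$dst"
  docker push "$dst"
}

# Push every archive given on the command line; does nothing without arguments.
for tarball in "$@"; do
  push_tarball "$tarball"
done
```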

4. Edit kube-dns.yaml.base in the kubernetes directory extracted from the packages above

```bash
root@k8s-master1:/etc/ansible/manifests/dns/kube-dns# cd kubernetes/cluster/addons/dns/kube-dns/   # switch to this directory and edit kube-dns.yaml.base
```

The edited kube-dns.yaml.base:

```yaml
apiVersion: v1
kind: Service
metadata:
  name: kube-dns
  namespace: kube-system
  labels:
    k8s-app: kube-dns
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
    kubernetes.io/name: "KubeDNS"
spec:
  selector:
    k8s-app: kube-dns
  clusterIP: 10.20.254.254   # DNS Service IP; must match the value set earlier in /etc/ansible/hosts
  ports:
  - name: dns
    port: 53
    protocol: UDP
  - name: dns-tcp
    port: 53
    protocol: TCP
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: kube-dns
  namespace: kube-system
  labels:
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: kube-dns
  namespace: kube-system
  labels:
    addonmanager.kubernetes.io/mode: EnsureExists
---
apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  name: kube-dns
  namespace: kube-system
  labels:
    k8s-app: kube-dns
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
spec:
  # replicas: not specified here:
  # 1. In order to make Addon Manager do not reconcile this replicas parameter.
  # 2. Default is 1.
  # 3. Will be tuned in real time if DNS horizontal auto-scaling is turned on.
  strategy:
    rollingUpdate:
      maxSurge: 10%
      maxUnavailable: 0
  selector:
    matchLabels:
      k8s-app: kube-dns
  template:
    metadata:
      labels:
        k8s-app: kube-dns
      annotations:
        scheduler.alpha.kubernetes.io/critical-pod: ''
        seccomp.security.alpha.kubernetes.io/pod: 'docker/default'
    spec:
      priorityClassName: system-cluster-critical
      securityContext:
        supplementalGroups: [ 65534 ]
        fsGroup: 65534
      tolerations:
      - key: "CriticalAddonsOnly"
        operator: "Exists"
      volumes:
      - name: kube-dns-config
        configMap:
          name: kube-dns
          optional: true
      containers:
      - name: kubedns
        image: harbor.struggle.net/baseimages/k8s-dns-kube-dns-amd64:v1.14.13   # points to the local Harbor registry
        resources:
          # TODO: Set memory limits when we've profiled the container for large
          # clusters, then set request = limit to keep this container in
          # guaranteed class. Currently, this container falls into the
          # "burstable" category so the kubelet doesn't backoff from restarting it.
          limits:
            cpu: 2        # allow up to 2 CPU cores; with the default the DNS responds very slowly, so change it
            memory: 4Gi   # hard memory limit raised to 4Gi
          requests:
            cpu: 1        # request 1 CPU core
            memory: 2Gi   # memory request raised to 2Gi
        livenessProbe:
          httpGet:
            path: /healthcheck/kubedns
            port: 10054
            scheme: HTTP
          initialDelaySeconds: 60
          timeoutSeconds: 5
          successThreshold: 1
          failureThreshold: 5
        readinessProbe:
          httpGet:
            path: /readiness
            port: 8081
            scheme: HTTP
          # we poll on pod startup for the Kubernetes master service and
          # only setup the /readiness HTTP server once that's available.
          initialDelaySeconds: 3
          timeoutSeconds: 5
        args:
        - --domain=linux36.local.   # must match the cluster domain in /etc/ansible/hosts
        - --dns-port=10053
        - --config-dir=/kube-dns-config
        - --v=2
        env:
        - name: PROMETHEUS_PORT
          value: "10055"
        ports:
        - containerPort: 10053
          name: dns-local
          protocol: UDP
        - containerPort: 10053
          name: dns-tcp-local
          protocol: TCP
        - containerPort: 10055
          name: metrics
          protocol: TCP
        volumeMounts:
        - name: kube-dns-config
          mountPath: /kube-dns-config
      - name: dnsmasq
        image: harbor.struggle.net/baseimages/k8s-dns-dnsmasq-nanny-amd64:v1.14.13   # points to the local Harbor registry
        livenessProbe:
          httpGet:
            path: /healthcheck/dnsmasq
            port: 10054
            scheme: HTTP
          initialDelaySeconds: 60
          timeoutSeconds: 5
          successThreshold: 1
          failureThreshold: 5
        args:
        - -v=2
        - -logtostderr
        - -configDir=/etc/k8s/dns/dnsmasq-nanny
        - -restartDnsmasq=true
        - --
        - -k
        - --cache-size=1000
        - --no-negcache
        - --dns-loop-detect
        - --log-facility=-
        - --server=/linux36.local/127.0.0.1#10053   # domain changed to match /etc/ansible/hosts
        - --server=/in-addr.arpa/127.0.0.1#10053
        - --server=/ip6.arpa/127.0.0.1#10053
        ports:
        - containerPort: 53
          name: dns
          protocol: UDP
        - containerPort: 53
          name: dns-tcp
          protocol: TCP
        # see: https://github.com/kubernetes/kubernetes/issues/29055 for details
        resources:
          requests:
            cpu: 150m
            memory: 20Mi
        volumeMounts:
        - name: kube-dns-config
          mountPath: /etc/k8s/dns/dnsmasq-nanny
      - name: sidecar
        image: harbor.struggle.net/baseimages/k8s-dns-sidecar-amd64:v1.14.13   # points to the local Harbor registry
        livenessProbe:
          httpGet:
            path: /metrics
            port: 10054
            scheme: HTTP
          initialDelaySeconds: 60
          timeoutSeconds: 5
          successThreshold: 1
          failureThreshold: 5
        args:
        - --v=2
        - --logtostderr
        - --probe=kubedns,127.0.0.1:10053,kubernetes.default.svc.linux36.local,5,SRV   # domain changed to match /etc/ansible/hosts
        - --probe=dnsmasq,127.0.0.1:53,kubernetes.default.svc.linux36.local,5,SRV
        ports:
        - containerPort: 10054
          name: metrics
          protocol: TCP
        resources:
          requests:
            memory: 20Mi
            cpu: 10m
      dnsPolicy: Default  # Don't use cluster DNS.
      serviceAccountName: kube-dns
```
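The resource values above use Kubernetes quantity suffixes: `m` means thousandths of a CPU core (so `150m` is 0.15 core), and `Ki`/`Mi`/`Gi` are binary multiples of bytes. A small converter makes the comparison concrete; it is a sketch limited to integer values and the suffixes used in this file, not a full quantity parser, and the function names are hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical converters for the quantity forms used in kube-dns.yaml.
set -euo pipefail

# "150m" -> 150, "2" -> 2000 (integer values only)
cpu_millicores() {
  local q=$1
  case $q in
    *m) echo "${q%m}" ;;
    *)  echo $(( q * 1000 )) ;;
  esac
}

# "20Mi" -> 20971520, "4Gi" -> 4294967296 (binary suffixes only)
mem_bytes() {
  local q=$1
  case $q in
    *Ki) echo $(( ${q%Ki} * 1024 )) ;;
    *Mi) echo $(( ${q%Mi} * 1024 * 1024 )) ;;
    *Gi) echo $(( ${q%Gi} * 1024 * 1024 * 1024 )) ;;
    *)   echo $(( q )) ;;
  esac
}
```

With these, the kubedns request of `1` core is 1000 millicores against dnsmasq's 150, and its 4Gi memory limit is 4294967296 bytes against dnsmasq's 20Mi (20971520 bytes) request.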

5. Copy the edited file to /etc/ansible/manifests/dns/kube-dns/kube-dns.yaml and create the DNS add-on

```bash
root@k8s-master1:/etc/ansible/manifests/dns/kube-dns/kubernetes/cluster/addons/dns/kube-dns# cp kube-dns.yaml.base /etc/ansible/manifests/dns/kube-dns/kube-dns.yaml
root@k8s-master1:/etc/ansible/manifests/dns/kube-dns# cd /etc/ansible/manifests/dns/kube-dns/
root@k8s-master1:/etc/ansible/manifests/dns/kube-dns# kubectl create -f kube-dns.yaml   # create the DNS add-on
```
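Several values in kube-dns.yaml (the clusterIP, `--domain`, and the dnsmasq `--server` entry) must agree with /etc/ansible/hosts, ideally checked before `kubectl create`. A consistency-check sketch, assuming the hosts file defines them as `CLUSTER_DNS_SVC_IP="..."` and `CLUSTER_DNS_DOMAIN="..."`; those variable names are an assumption, so adjust them if your inventory differs, and the helper names are hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical check: compare kube-dns.yaml with the DNS settings in an Ansible hosts file.
set -euo pipefail

# Print the value of KEY="value" (quotes optional) from FILE; first match only.
hosts_var() {
  awk -F= -v k="$2" '$1 == k { v = $2; gsub(/"/, "", v); sub(/[ \t].*/, "", v); print v; exit }' "$1"
}

check_dns_manifest() {
  local hosts=$1 manifest=$2 ip domain rc=0
  ip=$(hosts_var "$hosts" CLUSTER_DNS_SVC_IP)
  domain=$(hosts_var "$hosts" CLUSTER_DNS_DOMAIN)
  if [ -z "$ip" ] || [ -z "$domain" ]; then
    echo "CLUSTER_DNS_SVC_IP or CLUSTER_DNS_DOMAIN not found in $hosts" >&2
    return 1
  fi
  domain=${domain%.}   # compare without the trailing dot
  grep -qwF "clusterIP: $ip" "$manifest" || { echo "clusterIP is not $ip" >&2; rc=1; }
  grep -qF -- "--domain=$domain." "$manifest" || { echo "--domain is not $domain." >&2; rc=1; }
  grep -qF -- "--server=/$domain/127.0.0.1#10053" "$manifest" || { echo "dnsmasq --server does not use $domain" >&2; rc=1; }
  return "$rc"
}
```

For example, `check_dns_manifest /etc/ansible/hosts kube-dns.yaml` returns success only when all three values match.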

6. Verify that DNS is running and resolving names

```bash
# kubectl get pods -n kube-system   # check the DNS pod status
# kubectl exec busybox nslookup kubernetes   # resolve the short name
Server:    10.20.254.254
Address 1: 10.20.254.254 kube-dns.kube-system.svc.linux36.local

# kubectl exec busybox nslookup kubernetes.default.svc.linux36.local   # resolve the fully qualified name
Server:    10.20.254.254
Address 1: 10.20.254.254 kube-dns.kube-system.svc.linux36.local

Name:      kubernetes.default.svc.linux36.local
Address 1: 10.20.0.1 kubernetes.default.svc.linux36.local
```
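The names resolved above follow the pattern `<service>.<namespace>.svc.<cluster-domain>`, which is why the domain configured in kube-dns.yaml must match the cluster domain. A sketch that builds such a name and runs the same lookup through the busybox pod; `svc_fqdn` and `dns_check` are hypothetical helpers and `linux36.local` is this cluster's domain.

```bash
#!/usr/bin/env bash
# Hypothetical helpers for checking Service DNS names from inside the cluster.
set -euo pipefail

# svc_fqdn SERVICE [NAMESPACE] [DOMAIN] -> SERVICE.NAMESPACE.svc.DOMAIN
svc_fqdn() {
  local svc=$1 ns=${2:-default} domain=${3:-linux36.local}
  printf '%s.%s.svc.%s\n' "$svc" "$ns" "${domain%.}"
}

# Resolve the name from the busybox pod used above (requires cluster access).
dns_check() {
  kubectl exec busybox -- nslookup "$(svc_fqdn "$@")"
}
```

For example, `dns_check kube-dns kube-system` looks up `kube-dns.kube-system.svc.linux36.local`, which should resolve to the clusterIP 10.20.254.254.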

IV. Deploying the heapster monitoring component

1. Download the required images

```bash
root@k8s-master1:~# cd /etc/ansible/manifests/dns/kube-dns/heapster/   # create the heapster directory first if it does not exist
root@k8s-master1:/etc/ansible/manifests/dns/kube-dns/heapster# ll
total 237196
drwxr-xr-x 2 root root       209 Jan 20 06:11 ./
drwxr-xr-x 3 root root       211 Jan 20 06:09 ../
-rw-r--r-- 1 root root      2158 Jul 10  2019 grafana.yaml
-rw-r--r-- 1 root root     12288 Jan 20 06:11 .grafana.yaml.swp
-rw-r--r-- 1 root root  75343360 Jul 10  2019 heapster-amd64_v1.5.1.tar
-rw-r--r-- 1 root root 154731520 Jul 10  2019 heapster-grafana-amd64-v4.4.3.tar
-rw-r--r-- 1 root root  12782080 Jul 10  2019 heapster-influxdb-amd64_v1.3.3.tar
-rw-r--r-- 1 root root      1389 Jul 10  2019 heapster.yaml
-rw-r--r-- 1 root root       979 Jul 10  2019 influxdb.yaml
```

2. Push the heapster-grafana-amd64 image to the Harbor registry

```bash
root@k8s-master1:/etc/ansible/manifests/dns/kube-dns/heapster# docker login harbor.struggle.net
root@k8s-master1:/etc/ansible/manifests/dns/kube-dns/heapster# docker load -i heapster-grafana-amd64-v4.4.3.tar
root@k8s-master1:/etc/ansible/manifests/dns/kube-dns/heapster# docker tag 8cb3de219af7 harbor.struggle.net/baseimages/heapster-grafana-amd64:v4.4.3
root@k8s-master1:/etc/ansible/manifests/dns/kube-dns/heapster# docker push harbor.struggle.net/baseimages/heapster-grafana-amd64:v4.4.3
```

Edit the corresponding grafana.yaml:

```bash
root@k8s-master1:/etc/ansible/manifests/dns/kube-dns/heapster# cat grafana.yaml
```

```yaml
---
apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  name: monitoring-grafana
  namespace: kube-system
spec:
  replicas: 1
  template:
    metadata:
      labels:
        task: monitoring
        k8s-app: grafana
    spec:
      containers:
      - name: grafana
        image: harbor.struggle.net/baseimages/heapster-grafana-amd64:v4.4.3   # image path in the local Harbor registry
        imagePullPolicy: Always
        ports:
        - containerPort: 3000
          protocol: TCP
        volumeMounts:
        - mountPath: /var
          name: grafana-storage
        env:
        - name: INFLUXDB_HOST
          value: monitoring-influxdb
        - name: GF_SERVER_HTTP_PORT
          value: "3000"
          # The following env variables are required to make Grafana accessible via
          # the kubernetes api-server proxy. On production clusters, we recommend
          # removing these env variables, setup auth for grafana, and expose the grafana
          # service using a LoadBalancer or a public IP.
        - name: GF_AUTH_BASIC_ENABLED
          value: "false"
        - name: GF_AUTH_ANONYMOUS_ENABLED
          value: "true"
        - name: GF_AUTH_ANONYMOUS_ORG_ROLE
          value: Admin
        - name: GF_SERVER_ROOT_URL
          # If you're only using the API Server proxy, set this value instead:
          value: /api/v1/namespaces/kube-system/services/monitoring-grafana/proxy/
          #value: /
      volumes:
      - name: grafana-storage
        emptyDir: {}
---
apiVersion: v1
kind: Service
metadata:
  labels:
    # For use as a Cluster add-on (https://github.com/kubernetes/kubernetes/tree/master/cluster/addons)
    # If you are NOT using this as an addon, you should comment out this line.
    kubernetes.io/cluster-service: 'true'
    kubernetes.io/name: monitoring-grafana
  name: monitoring-grafana
  namespace: kube-system
spec:
  # In a production setup, we recommend accessing Grafana through an external Loadbalancer
  # or through a public IP.
  # type: LoadBalancer
  # You could also use NodePort to expose the service at a randomly-generated port
  # type: NodePort
  ports:
  - port: 80
    targetPort: 3000
  selector:
    k8s-app: grafana
```

3. Push the heapster-amd64:v1.5.1 image to the Harbor registry

```bash
root@k8s-master1:/etc/ansible/manifests/dns/kube-dns/heapster# docker load -i heapster-amd64_v1.5.1.tar
root@k8s-master1:/etc/ansible/manifests/dns/kube-dns/heapster# docker tag gcr.io/google-containers/heapster-amd64:v1.5.1 harbor.struggle.net/baseimages/heapster-amd64:v1.5.1
root@k8s-master1:/etc/ansible/manifests/dns/kube-dns/heapster# docker push harbor.struggle.net/baseimages/heapster-amd64:v1.5.1
```

Edit the corresponding heapster.yaml:

```bash
root@k8s-master1:/etc/ansible/manifests/dns/kube-dns/heapster# cat heapster.yaml
```

```yaml
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: heapster
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: heapster
subjects:
  - kind: ServiceAccount
    name: heapster
    namespace: kube-system
roleRef:
  kind: ClusterRole
  name: system:heapster
  apiGroup: rbac.authorization.k8s.io
---
apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  name: heapster
  namespace: kube-system
spec:
  replicas: 1
  template:
    metadata:
      labels:
        task: monitoring
        k8s-app: heapster
    spec:
      serviceAccountName: heapster
      containers:
      - name: heapster
        image: harbor.struggle.net/baseimages/heapster-amd64:v1.5.1   # image path in the local Harbor registry
        imagePullPolicy: Always
        command:
        - /heapster
        - --source=kubernetes:https://kubernetes.default
        - --sink=influxdb:http://monitoring-influxdb.kube-system.svc:8086
---
apiVersion: v1
kind: Service
metadata:
  labels:
    task: monitoring
    # For use as a Cluster add-on (https://github.com/kubernetes/kubernetes/tree/master/cluster/addons)
    # If you are NOT using this as an addon, you should comment out this line.
    #kubernetes.io/cluster-service: 'true'
    kubernetes.io/name: Heapster
  name: heapster
  namespace: kube-system
spec:
  ports:
  - port: 80
    targetPort: 8082
  selector:
    k8s-app: heapster
```

4. Push the heapster-influxdb-amd64 image to the Harbor registry

```bash
root@k8s-master1:/etc/ansible/manifests/dns/kube-dns/heapster# docker load -i heapster-influxdb-amd64_v1.3.3.tar
root@k8s-master1:/etc/ansible/manifests/dns/kube-dns/heapster# docker tag gcr.io/google-containers/heapster-influxdb-amd64:v1.3.3 harbor.struggle.net/baseimages/heapster-influxdb-amd64:v1.3.3
root@k8s-master1:/etc/ansible/manifests/dns/kube-dns/heapster# docker push harbor.struggle.net/baseimages/heapster-influxdb-amd64:v1.3.3
```

5. Edit influxdb.yaml

```bash
root@k8s-master1:/etc/ansible/manifests/dns/kube-dns/heapster# cat influxdb.yaml
```

```yaml
apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  name: monitoring-influxdb
  namespace: kube-system
spec:
  replicas: 1
  template:
    metadata:
      labels:
        task: monitoring
        k8s-app: influxdb
    spec:
      containers:
      - name: influxdb
        image: harbor.struggle.net/baseimages/heapster-influxdb-amd64:v1.3.3   # image path in the local Harbor registry
        volumeMounts:
        - mountPath: /data
          name: influxdb-storage
      volumes:
      - name: influxdb-storage
        emptyDir: {}
---
apiVersion: v1
kind: Service
metadata:
  labels:
    task: monitoring
    # For use as a Cluster add-on (https://github.com/kubernetes/kubernetes/tree/master/cluster/addons)
    # If you are NOT using this as an addon, you should comment out this line.
    kubernetes.io/cluster-service: 'true'
    kubernetes.io/name: monitoring-influxdb
  name: monitoring-influxdb
  namespace: kube-system
spec:
  ports:
  - port: 8086
    targetPort: 8086
  selector:
    k8s-app: influxdb
```

6. Deploy heapster monitoring.

```bash
root@k8s-master1:/etc/ansible/manifests/dns/kube-dns/heapster# kubectl apply -f .
root@k8s-master1:/etc/ansible/manifests/dns/kube-dns/heapster# kubectl get pods -n kube-system   # check the heapster pod status
root@k8s-master1:/etc/ansible/manifests/dns/kube-dns/heapster# kubectl cluster-info             # show cluster information
```
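Instead of repeatedly running `kubectl get pods`, `kubectl rollout status` blocks until a Deployment has finished rolling out. A sketch for the three Deployments created by these manifests; the helper name and the 120-second timeout are arbitrary choices, not values from the original setup.

```bash
#!/usr/bin/env bash
# Hypothetical helper: wait for Deployments in a namespace to finish rolling out.
set -euo pipefail

# wait_for_deployments NAMESPACE DEPLOYMENT...
wait_for_deployments() {
  local ns=$1 d
  shift
  for d in "$@"; do
    # Exits non-zero if the rollout does not complete within the timeout.
    kubectl -n "$ns" rollout status "deployment/$d" --timeout=120s
  done
}

# Example (requires cluster access):
#   wait_for_deployments kube-system heapster monitoring-grafana monitoring-influxdb
```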