Deployment environment

```
$ kubectl get node
NAME       STATUS   ROLES    AGE   VERSION
master01   Ready    master   13d   v1.14.0
master02   Ready    master   13d   v1.14.0
master03   Ready    master   13d   v1.14.0
node01     Ready    <none>   13d   v1.14.0
node02     Ready    <none>   13d   v1.14.0
node03     Ready    <none>   13d   v1.14.0
```

Directory layout

```
# cd efk/
# tree
.
├── es
│   ├── es-statefulset.yaml
│   ├── pvc.yaml
│   ├── pv.yaml
│   ├── rbac.yaml
│   └── service.yaml
├── filebeate
│   ├── config.yaml
│   ├── daemonset.yaml
│   ├── filebeat.tgz
│   └── rbac.yaml
└── kibana
    ├── deployment.yaml
    └── service.yaml
```

Deploy Elasticsearch

```
# cd /root/efk/es
```

Create the Elasticsearch PersistentVolume (PV)

```
$ cat pv.yaml
apiVersion: v1
kind: PersistentVolume
metadata:
  name: "es-pv"
  labels:
    name: "es-pv"
spec:
  capacity:
    storage: 3Gi
  accessModes:
    - ReadWriteOnce
  persistentVolumeReclaimPolicy: Recycle
  hostPath:
    path: /es   # must have mode 777, otherwise the Elasticsearch pod fails with an error when it is created

# Create the resource
kubectl create -f pv.yaml
```
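The PV above is a `hostPath` volume with no `nodeAffinity`. As a result, `/es` must already exist with mode 777 on whichever node the Elasticsearch pod is scheduled to. On a kubeadm cluster the masters are normally tainted, so in practice that means node01 to node03. The data also stays on that one node. Note that `Recycle` scrubs the directory once the PVC is released, so use `Retain` if the logs must survive deleting the claim.

Below is a minimal sketch that prepares the directory. It assumes you run it as root on each worker node, and the function name `prepare_es_hostpath` is hypothetical.

```shell
# Hypothetical helper: create the hostPath directory that backs es-pv and
# give it the 777 mode that the PV comment calls for.
prepare_es_hostpath() {
  local dir="${1:-/es}"   # default matches hostPath.path in pv.yaml
  mkdir -p "$dir" || return 1
  chmod 777 "$dir" || return 1
  ls -ld "$dir"           # show the resulting mode for a quick visual check
}

# Usage, as root on each worker node:
#   prepare_es_hostpath /es
```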

Create the Elasticsearch PersistentVolumeClaim (PVC)

```
$ cat pvc.yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: "es-pvc"
  namespace: kube-system
  labels:
    name: "es-pvc"
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 3Gi
  selector:
    matchLabels:
      name: es-pv

# Create the resource
kubectl create -f pvc.yaml
```
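Because the claim selects the PV by label, it should reach `Bound` within a few seconds. If it stays `Pending`, one common cause is a default StorageClass in the cluster. The admission controller writes that class into the claim, which then no longer matches `es-pv` (the PV has no class). Setting `storageClassName: ""` on the claim avoids this.

The polling sketch below uses only `kubectl get -o jsonpath`. The name `wait_for_pvc_bound` is hypothetical.

```shell
# Hypothetical helper: poll a PVC until its phase is Bound or the attempts run out.
wait_for_pvc_bound() {
  local name="$1" ns="${2:-kube-system}" tries="${3:-30}" phase="" i=0
  while [ "$i" -lt "$tries" ]; do
    phase="$(kubectl get pvc "$name" -n "$ns" -o jsonpath='{.status.phase}' 2>/dev/null)"
    if [ "$phase" = "Bound" ]; then
      echo "pvc/$name is Bound"
      return 0
    fi
    i=$((i + 1))
    sleep 2
  done
  echo "pvc/$name is still '${phase:-unknown}' after $tries checks" >&2
  return 1
}

# Usage:
#   wait_for_pvc_bound es-pvc kube-system
#   kubectl get pv es-pv    # STATUS should also be Bound, with CLAIM kube-system/es-pvc
```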

Create RBAC authorization for Elasticsearch

```
$ cat rbac.yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: elasticsearch-logging
  labels:
    k8s-app: elasticsearch-logging
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
rules:
- apiGroups:
  - ""
  resources:
  - "services"
  - "namespaces"
  - "endpoints"
  verbs:
  - "get"
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: elasticsearch-logging
  namespace: kube-system
  labels:
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  namespace: kube-system
  name: elasticsearch-logging
  labels:
    k8s-app: elasticsearch-logging
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
subjects:
- kind: ServiceAccount
  name: elasticsearch-logging
  namespace: kube-system
  apiGroup: ""
roleRef:
  kind: ClusterRole
  name: elasticsearch-logging
  apiGroup: ""

# Create the resources
kubectl create -f rbac.yaml
```
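To confirm that the binding took effect, run `kubectl auth can-i` while impersonating the service account. This requires a user who is allowed to impersonate, such as the kubeadm admin.

A minimal sketch follows. The function `check_sa_access` and its `verb:resource` argument format are made up for this example.

```shell
# Hypothetical helper: check each verb:resource pair for a service account.
# Prints one line per pair and returns non-zero if any pair is denied.
check_sa_access() {
  local sa="$1" failed=0 pair verb res
  shift
  for pair in "$@"; do
    verb="${pair%%:*}"   # text before the first colon
    res="${pair#*:}"     # text after the first colon
    if [ "$(kubectl auth can-i "$verb" "$res" --as="$sa" 2>/dev/null)" = "yes" ]; then
      echo "allowed $verb $res"
    else
      echo "denied  $verb $res"
      failed=1
    fi
  done
  return "$failed"
}

# Usage: the three grants in the elasticsearch-logging ClusterRole
#   check_sa_access system:serviceaccount:kube-system:elasticsearch-logging \
#     get:services get:namespaces get:endpoints
```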

Create the StatefulSet for the Elasticsearch pod

```
$ cat es-statefulset.yaml
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: elasticsearch-logging
  namespace: kube-system
  labels:
    k8s-app: elasticsearch-logging
    version: v6.3.0
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
spec:
  serviceName: elasticsearch-logging
  replicas: 1
  selector:
    matchLabels:
      k8s-app: elasticsearch-logging
      version: v6.3.0
  template:
    metadata:
      labels:
        k8s-app: elasticsearch-logging
        version: v6.3.0
        kubernetes.io/cluster-service: "true"
    spec:
      serviceAccountName: elasticsearch-logging
      containers:
      - image: docker.io/elasticsearch:6.6.1
        name: elasticsearch-logging
        resources:
          limits:
            cpu: 1000m
          requests:
            cpu: 100m
        ports:
        - containerPort: 9200
          name: db
          protocol: TCP
        - containerPort: 9300
          name: transport
          protocol: TCP
        volumeMounts:
        - name: elasticsearch-logging
          mountPath: /usr/share/elasticsearch/data
        env:
        - name: "NAMESPACE"
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
      volumes:
      - name: elasticsearch-logging
        persistentVolumeClaim:
          claimName: es-pvc
      initContainers:
      - image: alpine:3.7
        command: ["/sbin/sysctl", "-w", "vm.max_map_count=262144"]
        name: elasticsearch-logging-init
        securityContext:
          privileged: true

# Create the resource
kubectl create -f es-statefulset.yaml
```
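The privileged init container is there because the Elasticsearch bootstrap checks refuse to start when `vm.max_map_count` is below 262144 and the node listens on a non-loopback address, which is the case inside the container. `vm.max_map_count` is a node-wide kernel setting, not a per-container one, so the init container effectively changes the host.

The sketch below reads the value from `/proc` and can be run on a node or inside the pod to see whether the setting took. The function name is hypothetical.

```shell
# Hypothetical check: compare vm.max_map_count with the minimum Elasticsearch requires.
check_max_map_count() {
  local file="${1:-/proc/sys/vm/max_map_count}" want=262144 have
  have="$(cat "$file")" || return 2
  if [ "$have" -ge "$want" ]; then
    echo "vm.max_map_count=$have ok"
  else
    echo "vm.max_map_count=$have is below $want"
    return 1
  fi
}

# Usage on a node:
#   check_max_map_count
# or read the value inside the running pod:
#   kubectl -n kube-system exec elasticsearch-logging-0 -- cat /proc/sys/vm/max_map_count
```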

Create the Service for the Elasticsearch pod

```
$ cat service.yaml
apiVersion: v1
kind: Service
metadata:
  name: elasticsearch-logging
  namespace: kube-system
  labels:
    k8s-app: elasticsearch-logging
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
    kubernetes.io/name: "Elasticsearch"
spec:
  ports:
  - port: 9200
    protocol: TCP
    targetPort: db
  selector:
    k8s-app: elasticsearch-logging

# Create the resource
kubectl create -f service.yaml
```
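Once the pod is Running, probe the Service with `_cluster/health`. On this single-node setup, expect `yellow` rather than `green` once indices exist. Elasticsearch 6.x creates each index with one replica by default, and a replica is never allocated on the same node as its primary.

The minimal sketch below pulls the `status` field out of the response with `sed`, so `jq` is not required. The function name is hypothetical.

```shell
# Hypothetical helper: read an Elasticsearch _cluster/health response on stdin and
# print its "status" value (green, yellow or red). Handles compact and ?pretty output.
es_health_status() {
  sed -n 's/.*"status"[[:space:]]*:[[:space:]]*"\([a-z]*\)".*/\1/p' | head -n 1
}

# Usage:
#   kubectl -n kube-system port-forward svc/elasticsearch-logging 9200:9200 &
#   curl -s http://127.0.0.1:9200/_cluster/health | es_health_status
```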

Deploy Filebeat

```
# cd /root/efk/filebeate
```

Create RBAC authorization for Filebeat

```
$ cat rbac.yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: filebeat
  labels:
    k8s-app: filebeat
rules:
- apiGroups: [""]
  resources:
  - namespaces
  - pods
  verbs:
  - get
  - watch
  - list
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: filebeat
  namespace: kube-system
  labels:
    k8s-app: filebeat
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: filebeat
subjects:
- kind: ServiceAccount
  name: filebeat
  namespace: kube-system
roleRef:
  kind: ClusterRole
  name: filebeat
  apiGroup: rbac.authorization.k8s.io

# Create the resources
kubectl create -f rbac.yaml
```

Create the Filebeat ConfigMaps (the main filebeat.yml, plus the input definition used to collect container logs)

```
$ cat config.yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: filebeat-config
  namespace: kube-system
  labels:
    k8s-app: filebeat
    kubernetes.io/cluster-service: "true"
data:
  filebeat.yml: |-
    filebeat.config:
      prospectors:
        path: /usr/share/filebeat/prospectors.d/*.yml
        reload.enabled: false
      modules:
        path: ${path.config}/modules.d/*.yml
        reload.enabled: false

    processors:
      - add_cloud_metadata:

    cloud.id: ${ELASTIC_CLOUD_ID}
    cloud.auth: ${ELASTIC_CLOUD_AUTH}

    output.elasticsearch:
      hosts: ['${ELASTICSEARCH_HOST:elasticsearch}:${ELASTICSEARCH_PORT:9200}']
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: filebeat-prospectors
  namespace: kube-system
  labels:
    k8s-app: filebeat
    kubernetes.io/cluster-service: "true"
data:
  kubernetes.yml: |-
    - type: log
      enabled: true
      symlinks: true
      paths:
        - /var/log/containers/*.log
      exclude_files: ["calico","firewall","filebeat","kube-proxy"]
      processors:
        - add_kubernetes_metadata:
            in_cluster: true

# Create the resources
kubectl create -f config.yaml
```
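`exclude_files` takes regular expressions that are matched against each file path, so a bare word such as `calico` drops every file whose path contains it. Files under `/var/log/containers` are named `<pod>_<namespace>_<container>-<id>.log`, so this skips the logs of pods whose names contain those words. That includes Filebeat's own pods, which stops it from shipping its own output.

Below is a rough shell approximation using `grep -E -v`, for previewing which files a node's Filebeat would pick up. The function name is hypothetical, and grep's ERE dialect only approximates the Go regexp syntax that Filebeat uses.

```shell
# Hypothetical preview of exclude_files: read log paths on stdin and print the ones
# that none of the patterns in the filebeat-prospectors input would exclude.
filebeat_kept_files() {
  grep -E -v 'calico|firewall|filebeat|kube-proxy'
}

# Usage on a node:
#   ls /var/log/containers/*.log | filebeat_kept_files
```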

Create the DaemonSet controller for the Filebeat pods

```
$ cat daemonset.yaml
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: filebeat
  namespace: kube-system
  labels:
    k8s-app: filebeat
    kubernetes.io/cluster-service: "true"
spec:
  selector:                 # required by apps/v1; must match the template labels
    matchLabels:
      k8s-app: filebeat
  template:
    metadata:
      labels:
        k8s-app: filebeat
        kubernetes.io/cluster-service: "true"
    spec:
      serviceAccountName: filebeat
      terminationGracePeriodSeconds: 30
      containers:
      - name: filebeat
        image: prima/filebeat:6.4.2
        args: [
          "-c", "/etc/filebeat.yml",
          "-e",
        ]
        env:
        - name: ELASTICSEARCH_HOST
          value: elasticsearch-logging
        - name: ELASTICSEARCH_USERNAME
          value:
        - name: ELASTICSEARCH_PASSWORD
          value:
        - name: ELASTIC_CLOUD_ID
          value:
        - name: ELASTIC_CLOUD_AUTH
          value:
        securityContext:
          runAsUser: 0
        resources:
          limits:
            memory: 200Mi
          requests:
            cpu: 100m
            memory: 100Mi
        volumeMounts:
        - name: config                  # filebeat.yml from the filebeat-config ConfigMap
          mountPath: /etc/filebeat.yml
          readOnly: true
          subPath: filebeat.yml
        - name: prospectors             # input definitions from the filebeat-prospectors ConfigMap
          mountPath: /usr/share/filebeat/prospectors.d
          readOnly: true
        - name: data                    # Filebeat's data directory (keeps its registry of read offsets)
          mountPath: /usr/share/filebeat/data
        - name: varlog                  # the host's /var/log, including /var/log/containers
          mountPath: /var/log
          readOnly: true
        - name: varlibdockercontainers  # the host's Docker JSON log files, targets of the /var/log/containers symlinks
          mountPath: /var/lib/docker/containers
          readOnly: true
      volumes:
      - name: config
        configMap:
          defaultMode: 0600
          name: filebeat-config
      - name: varlog
        hostPath:
          path: /var/log/
      - name: varlibdockercontainers
        hostPath:
          path: /var/lib/docker/containers/
      - name: prospectors
        configMap:
          defaultMode: 0600
          name: filebeat-prospectors
      - name: data
        hostPath:
          path: /var/lib/filebeat-data
          type: DirectoryOrCreate   # created on the host if it does not exist

# Create the resource
kubectl create -f daemonset.yaml
```
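A DaemonSet is healthy when its number of ready pods equals the number it wants to schedule. On a kubeadm cluster like this one, the masters normally carry the `node-role.kubernetes.io/master:NoSchedule` taint, and this DaemonSet has no tolerations. Expect three Filebeat pods (node01 to node03) rather than six.

The sketch below checks this using the DaemonSet's `status` fields. The name `ds_ready` is hypothetical.

```shell
# Hypothetical helper: succeed when every pod a DaemonSet wants to run is ready.
ds_ready() {
  local name="${1:-filebeat}" ns="${2:-kube-system}" counts desired ready
  counts="$(kubectl get ds "$name" -n "$ns" \
    -o jsonpath='{.status.desiredNumberScheduled} {.status.numberReady}')" || return 1
  desired="${counts%% *}"   # first field: desiredNumberScheduled
  ready="${counts##* }"     # last field: numberReady
  echo "ds/$name: $ready/$desired ready"
  [ -n "$desired" ] && [ "$desired" = "$ready" ]
}

# Usage:
#   ds_ready filebeat kube-system
#   kubectl -n kube-system get pods -l k8s-app=filebeat -o wide   # one pod per schedulable node
```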

Deploy Kibana

```
# cd /root/efk/kibana
```

Create the Deployment controller for the Kibana pod

```
$ cat deployment.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: kibana-logging
  namespace: kube-system
  labels:
    k8s-app: kibana-logging
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
spec:
  replicas: 1
  selector:
    matchLabels:
      k8s-app: kibana-logging
  template:
    metadata:
      labels:
        k8s-app: kibana-logging
      annotations:
        seccomp.security.alpha.kubernetes.io/pod: 'docker/default'
    spec:
      containers:
      - name: kibana-logging
        image: kibana:6.6.1
        resources:
          limits:
            cpu: 1000m
          requests:
            cpu: 100m
        env:
        - name: ELASTICSEARCH_URL
          value: http://elasticsearch-logging:9200
        ports:
        - containerPort: 5601
          name: ui
          protocol: TCP

# Create the resource
kubectl create -f deployment.yaml
```

Create the Service for the Kibana pod

```
$ cat service.yaml
apiVersion: v1
kind: Service
metadata:
  name: kibana-logging
  namespace: kube-system
  labels:
    k8s-app: kibana-logging
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
    kubernetes.io/name: "Kibana"
spec:
  type: NodePort
  ports:
  - port: 5601
    protocol: TCP
    targetPort: ui
    nodePort: 30003
  selector:
    k8s-app: kibana-logging

# Create the resource
kubectl create -f service.yaml
```
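With `type: NodePort`, Kibana is reachable on port 30003 of any node's address. The sketch below assembles the URL from the Service and the first node's `InternalIP`. The function `kibana_url` is hypothetical, and it assumes the node InternalIPs are reachable from your browser.

```shell
# Hypothetical helper: print http://<first node InternalIP>:<nodePort> for a NodePort Service.
kibana_url() {
  local svc="${1:-kibana-logging}" ns="${2:-kube-system}" port ip
  port="$(kubectl get svc "$svc" -n "$ns" -o jsonpath='{.spec.ports[0].nodePort}')" || return 1
  ip="$(kubectl get nodes -o jsonpath='{.items[0].status.addresses[?(@.type=="InternalIP")].address}')" || return 1
  [ -n "$port" ] && [ -n "$ip" ] || return 1
  echo "http://$ip:$port"
}

# Usage:
#   kibana_url    # open the printed address in a browser
```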

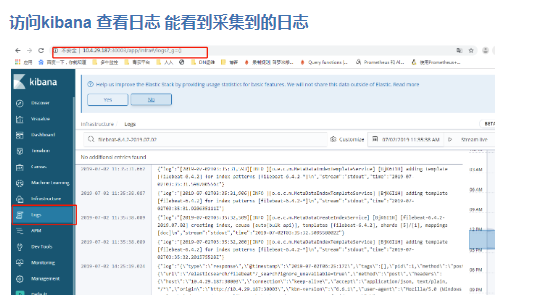

Check the services

```
$ kubectl get svc -n kube-system
NAME                    TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)                  AGE
elasticsearch-logging   ClusterIP   10.101.1.2       <none>        9200/TCP                 22h
kibana-logging          NodePort    10.101.121.228   <none>        5601:30003/TCP           21h   # 30003 is the Kibana NodePort
kube-dns                ClusterIP   10.96.0.10       <none>        53/UDP,53/TCP,9153/TCP   20d
kubernetes-dashboard    NodePort    10.110.209.252   <none>        443:31021/TCP            20d
traefik-web-ui          ClusterIP   10.102.131.255   <none>        80/TCP                   19d
```