Reference:

https://learnopenglcn.github.io/05%20Advanced%20Lighting/07%20Bloom/

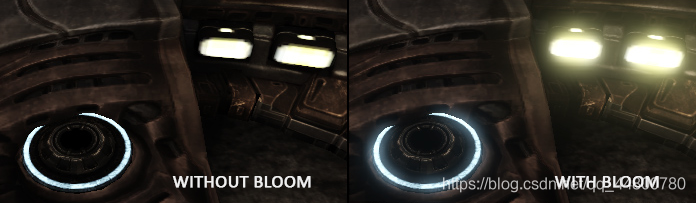

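A glow effect can be achieved with a post-processing effect called bloom. Bloom gives every bright region of a scene a glow. The accompanying image compares a scene with and without glow (generated with Unreal Engine).

Bloom makes a bright object actually look bright. It can greatly improve the lighting of a scene, and the visual gain is large even though it takes only a small change to achieve.

Bloom works well together with HDR. A common misconception is that HDR and bloom are the same thing, and many people treat the two techniques as interchangeable. They are, however, distinct techniques that serve different purposes. Bloom can be implemented with a default 8-bit-precision framebuffer, just as HDR can be used without bloom. It is simply that bloom is easier to implement once HDR is available.

To implement bloom, we render a lit scene as usual and extract both the scene's HDR color buffer and an image in which only the scene's bright regions are visible. The extracted brightness image is then blurred, and the result is added on top of the original HDR scene.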

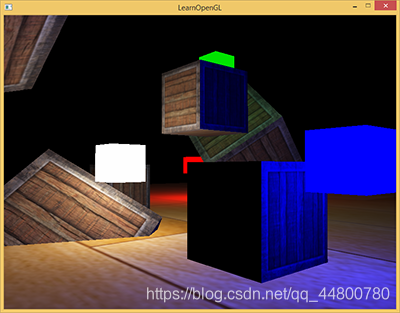

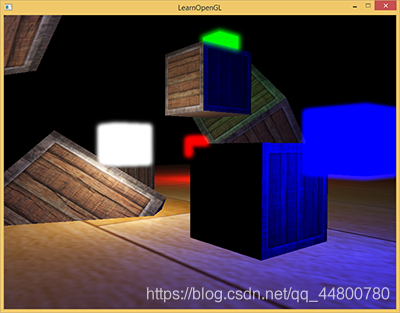

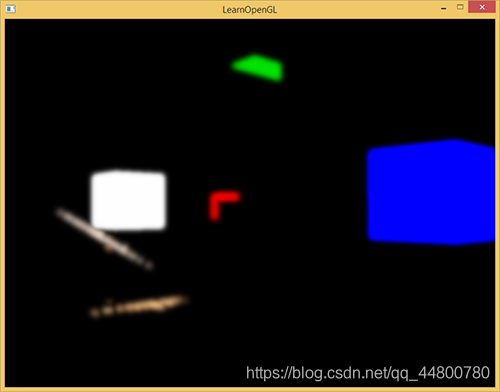

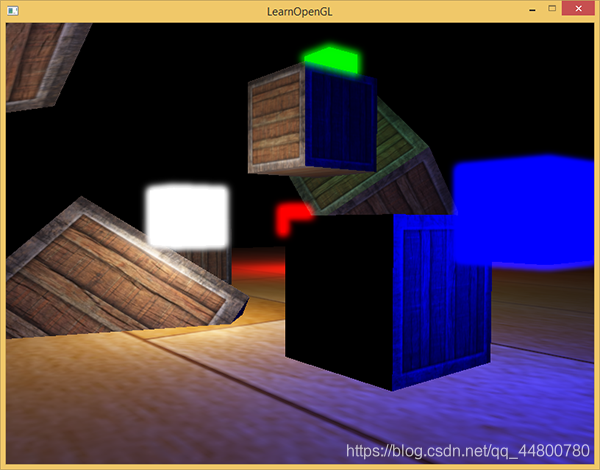

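We render a scene with four bright light sources, each visualized as a colored cube. The colored light cubes have brightness values between 1.5 and 15.0. Rendered into an HDR color buffer, the scene looks like this: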

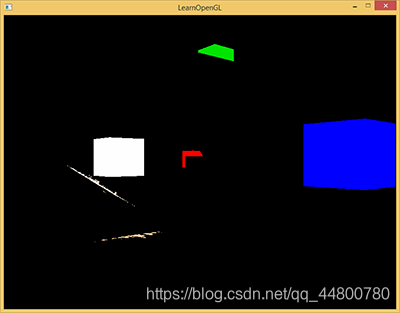

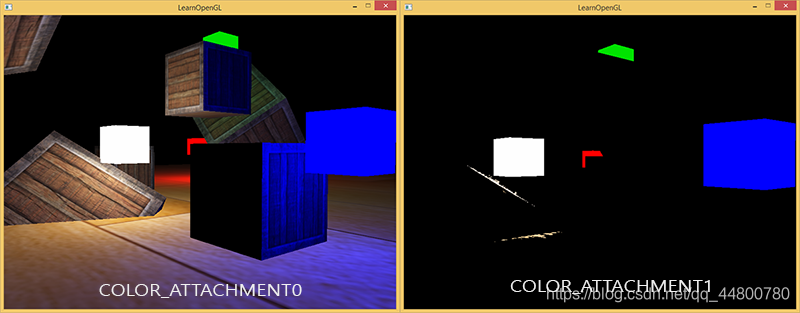

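We take this HDR color buffer texture and extract every fragment that exceeds a certain brightness. This gives us an image that shows only the brightly colored regions, i.e. the fragments whose intensity exceeds the threshold: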

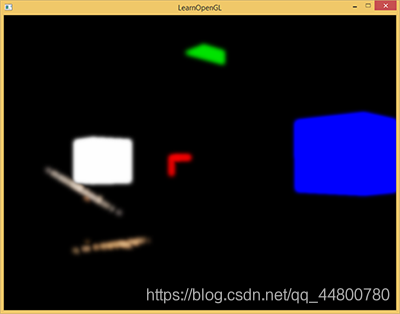

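We then blur this thresholded brightness texture. The strength of the bloom effect is largely determined by the range and strength of the blur filter.

The resulting blurred texture is what we use to produce the glow. It is added on top of the original HDR scene texture. Because the blur filter spreads the bright regions, they extend in both width and height, which makes them appear to glow.

Bloom is not a complicated technique in itself, but it is hard to get exactly right. Most of its visual quality is determined by the quality and type of the blur filter used. Simply tweaking the blur filter can drastically change the quality of the bloom effect.

Extracting bright color

The first step is to extract two images from the rendered scene. We could render the scene twice, each time with a different shader into a different framebuffer, but we can instead use a neat trick called Multiple Render Targets (MRT), which lets us specify more than one fragment shader output. This allows us to extract the first two images in a single render pass. By placing a layout location specifier before a fragment shader output, we control which color buffer that output writes to: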

layout (location = 0) out vec4 FragColor;

layout (location = 1) out vec4 BrightColor;

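Using multiple fragment shader outputs requires that multiple color buffers are attached to the currently bound framebuffer object.

By also using GL_COLOR_ATTACHMENT1, we get a framebuffer object with two color buffers attached: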

GLuint hdrFBO;

glGenFramebuffers(1, &hdrFBO);

glBindFramebuffer(GL_FRAMEBUFFER, hdrFBO);

GLuint colorBuffers[2];

glGenTextures(2, colorBuffers);

for (GLuint i = 0; i < 2; i++)

{

glBindTexture(GL_TEXTURE_2D, colorBuffers[i]);

glTexImage2D(

GL_TEXTURE_2D, 0, GL_RGB16F, SCR_WIDTH, SCR_HEIGHT, 0, GL_RGB, GL_FLOAT, NULL

);

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MIN_FILTER, GL_LINEAR);

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MAG_FILTER, GL_LINEAR);

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_WRAP_S, GL_CLAMP_TO_EDGE);

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_WRAP_T, GL_CLAMP_TO_EDGE);

// attach texture to framebuffer

glFramebufferTexture2D(

GL_FRAMEBUFFER, GL_COLOR_ATTACHMENT0 + i, GL_TEXTURE_2D, colorBuffers[i], 0

);

}

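We have to tell OpenGL explicitly, via glDrawBuffers, that we are rendering to multiple color buffers. Otherwise OpenGL renders only to the framebuffer's first color attachment and ignores all the others. We do this by passing an array of the color attachment enums that subsequent rendering should write to: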

GLuint attachments[2] = { GL_COLOR_ATTACHMENT0, GL_COLOR_ATTACHMENT1 };

glDrawBuffers(2, attachments);

When rendering into this framebuffer, every fragment shader output declared with a layout location specifier is written to the corresponding color buffer:

#version 330 core

layout (location = 0) out vec4 FragColor;

layout (location = 1) out vec4 BrightColor;

[...]

void main()

{

[...] // first do normal lighting calculations and output results

FragColor = vec4(lighting, 1.0f);

// Check whether fragment output is higher than threshold, if so output as brightness color

float brightness = dot(FragColor.rgb, vec3(0.2126, 0.7152, 0.0722));

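if(brightness > 1.0)

BrightColor = vec4(FragColor.rgb, 1.0);

else

BrightColor = vec4(0.0, 0.0, 0.0, 1.0); // always write the second output; leaving it unwritten gives undefined values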

}

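Here we first calculate lighting as normal and pass the result to the first fragment shader output, FragColor. We then use what is currently stored in FragColor to determine whether its brightness exceeds a certain threshold. We calculate the brightness of a fragment by properly converting it to grayscale (taking the dot product of the color with a set of luminance weights). If the brightness exceeds the threshold, we output the color to the second color buffer, which holds all the bright regions. The same is done for the shader that renders the light cubes.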
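The threshold test can be tried out on the CPU. The following is only a minimal sketch that mirrors the shader's arithmetic; the `Color` alias and the functions `luminance` and `extractBright` are hypothetical names introduced here for illustration and are not part of the tutorial code.

```cpp
#include <array>

// RGB color whose components may exceed 1.0 (HDR).
using Color = std::array<float, 3>;

// Brightness as the dot product with the same weights the shader uses.
float luminance(const Color& c)
{
    return 0.2126f * c[0] + 0.7152f * c[1] + 0.0722f * c[2];
}

// Hypothetical CPU counterpart of the BrightColor output:
// keep the color if it is brighter than the threshold, otherwise return black.
Color extractBright(const Color& c, float threshold = 1.0f)
{
    if (luminance(c) > threshold)
        return c;
    return Color{0.0f, 0.0f, 0.0f};
}
```

With these weights, a pure blue of (0, 0, 15) has a brightness of about 1.08 and passes the 1.0 threshold, while a mid-gray of (0.5, 0.5, 0.5) has a brightness of 0.5 and is replaced with black. Because blue has the smallest weight, a blue light needs a much higher intensity than a green one to count as bright.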

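This also shows why bloom works so well with HDR. Because HDR lets color values exceed the default upper limit of 1.0, we gain much finer control over what in an image counts as bright. Without HDR we would have to set the threshold below 1.0, which works, but regions are then classified as bright far more readily, and the glow effect becomes too dominant.

With these two color buffers we have an image of the scene as normal and an image of the extracted bright regions, both obtained in a single render pass.

With the image of extracted bright regions in hand, the next step is to blur it.

Gaussian blur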

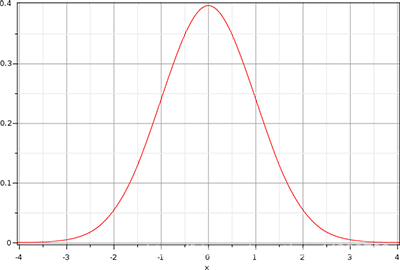

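A Gaussian blur is based on the Gaussian curve, commonly described as a bell-shaped curve that reaches its maximum at the center and falls off gradually with distance on both sides. The Gaussian curve can be expressed mathematically in different forms, but it generally has the shape shown in the figure.

To implement a Gaussian blur filter we would need a two-dimensional square of weights, obtained from the two-dimensional Gaussian equation. The problem with this approach is that it quickly becomes extremely expensive. With a 32×32 blur kernel, for example, every fragment would have to sample a texture 1024 times.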

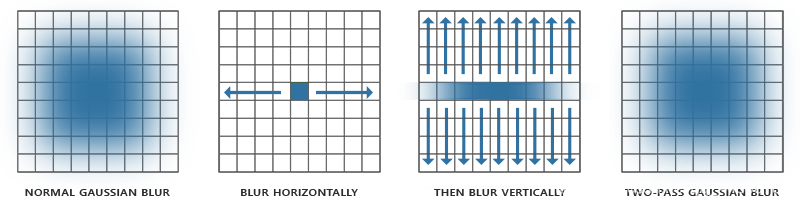

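Fortunately, the Gaussian equation has a very neat property: **it can be separated into two smaller one-dimensional equations, one describing the horizontal weights and the other the vertical weights**. We first blur horizontally across the whole texture using the horizontal weights, and then blur the resulting texture vertically. Thanks to this property the result is exactly the same, but the performance saving is enormous: each fragment now needs only 32 + 32 samples instead of 1024. This is known as two-pass Gaussian blur.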
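The separation can be written out explicitly. The notation below is an assumption made for illustration: σ is the standard deviation of the curve, r is the kernel radius, and I(x, y) is the image being blurred; none of these symbols appear in the original text.

```latex
\begin{align*}
G(x, y) &= \frac{1}{2\pi\sigma^{2}} \, e^{-\frac{x^{2} + y^{2}}{2\sigma^{2}}}
         = \underbrace{\frac{1}{\sqrt{2\pi}\,\sigma} \, e^{-\frac{x^{2}}{2\sigma^{2}}}}_{g(x)}
           \cdot
           \underbrace{\frac{1}{\sqrt{2\pi}\,\sigma} \, e^{-\frac{y^{2}}{2\sigma^{2}}}}_{g(y)} \\[6pt]
\sum_{i=-r}^{r} \sum_{j=-r}^{r} g(i)\, g(j)\, I(x+i,\, y+j)
        &= \sum_{j=-r}^{r} g(j) \left[ \sum_{i=-r}^{r} g(i)\, I(x+i,\, y+j) \right]
\end{align*}
```

The bracketed inner sum is the horizontal pass and the outer sum is the vertical pass applied to its result, so a kernel with N taps per axis costs 2N samples per fragment instead of N².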

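This means that blurring an image takes at least two passes, which is best done with framebuffer objects.

Before we look at the framebuffers, let's first discuss the fragment shader for the Gaussian blur: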
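The claim that two one-dimensional passes give the same result as one two-dimensional pass can be checked on the CPU. The sketch below is only an illustration under stated assumptions: it works on a small grayscale image, clamps lookups at the border in the spirit of GL_CLAMP_TO_EDGE, and all names (`Image`, `sampleClamped`, `blur1D`, `blur2D`, `maxDifference`) are hypothetical helpers, not part of the tutorial code.

```cpp
#include <algorithm>
#include <cmath>
#include <cstddef>
#include <cstdlib>
#include <vector>

// Grayscale image stored row by row: image[y][x].
using Image = std::vector<std::vector<float>>;

// Clamp-to-edge lookup, analogous to GL_CLAMP_TO_EDGE on the GPU.
float sampleClamped(const Image& img, int x, int y)
{
    const int height = static_cast<int>(img.size());
    const int width = static_cast<int>(img[0].size());
    const int cx = std::max(0, std::min(x, width - 1));
    const int cy = std::max(0, std::min(y, height - 1));
    return img[cy][cx];
}

// One 1D pass with symmetric weights w[0..r]; w[0] is the center weight.
Image blur1D(const Image& src, const std::vector<float>& w, bool horizontal)
{
    const int r = static_cast<int>(w.size()) - 1;
    Image dst = src;
    for (int y = 0; y < static_cast<int>(src.size()); ++y) {
        for (int x = 0; x < static_cast<int>(src[0].size()); ++x) {
            float sum = 0.0f;
            for (int i = -r; i <= r; ++i) {
                const float s = horizontal ? sampleClamped(src, x + i, y)
                                           : sampleClamped(src, x, y + i);
                sum += w[std::abs(i)] * s;
            }
            dst[y][x] = sum;
        }
    }
    return dst;
}

// Brute-force 2D blur; the kernel entry at (i, j) is w[|i|] * w[|j|].
Image blur2D(const Image& src, const std::vector<float>& w)
{
    const int r = static_cast<int>(w.size()) - 1;
    Image dst = src;
    for (int y = 0; y < static_cast<int>(src.size()); ++y) {
        for (int x = 0; x < static_cast<int>(src[0].size()); ++x) {
            float sum = 0.0f;
            for (int j = -r; j <= r; ++j)
                for (int i = -r; i <= r; ++i)
                    sum += w[std::abs(i)] * w[std::abs(j)] * sampleClamped(src, x + i, y + j);
            dst[y][x] = sum;
        }
    }
    return dst;
}

// Largest absolute per-pixel difference between two equally sized images.
float maxDifference(const Image& a, const Image& b)
{
    float worst = 0.0f;
    for (std::size_t y = 0; y < a.size(); ++y)
        for (std::size_t x = 0; x < a[y].size(); ++x)
            worst = std::max(worst, std::fabs(a[y][x] - b[y][x]));
    return worst;
}
```

Calling `blur1D(blur1D(img, w, true), w, false)` and `blur2D(img, w)` on the same image should agree up to floating-point rounding, while the two-pass version reads 2 × (2r + 1) samples per pixel instead of (2r + 1)².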

#version 330 core

out vec4 FragColor;

in vec2 TexCoords;

uniform sampler2D image;

uniform bool horizontal;

uniform float weight[5] = float[] (0.227027, 0.1945946, 0.1216216, 0.054054, 0.016216);

void main()

{

vec2 tex_offset = 1.0 / textureSize(image, 0); // gets size of single texel

vec3 result = texture(image, TexCoords).rgb * weight[0]; // current fragment's contribution

if(horizontal)

{

for(int i = 1; i < 5; ++i)

{

result += texture(image, TexCoords + vec2(tex_offset.x * i, 0.0)).rgb * weight[i];

result += texture(image, TexCoords - vec2(tex_offset.x * i, 0.0)).rgb * weight[i];

}

}

else

{

for(int i = 1; i < 5; ++i)

{

result += texture(image, TexCoords + vec2(0.0, tex_offset.y * i)).rgb * weight[i];

result += texture(image, TexCoords - vec2(0.0, tex_offset.y * i)).rgb * weight[i];

}

}

FragColor = vec4(result, 1.0);

}

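Here we use a relatively small set of Gaussian weights. Each weight is assigned to the horizontal or vertical samples at the corresponding distance from the current fragment.

Dividing 1.0 by the size of the texture (which textureSize returns as an ivec2) gives the exact size of one texel, which we use as the basis for the sample offsets.

For blurring the image we create two basic framebuffers, each with only a color buffer texture: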

GLuint pingpongFBO[2];

GLuint pingpongBuffer[2];

glGenFramebuffers(2, pingpongFBO);

glGenTextures(2, pingpongBuffer);

for (GLuint i = 0; i < 2; i++)

{

glBindFramebuffer(GL_FRAMEBUFFER, pingpongFBO[i]);

glBindTexture(GL_TEXTURE_2D, pingpongBuffer[i]);

glTexImage2D(

GL_TEXTURE_2D, 0, GL_RGB16F, SCR_WIDTH, SCR_HEIGHT, 0, GL_RGB, GL_FLOAT, NULL

);

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MIN_FILTER, GL_LINEAR);

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MAG_FILTER, GL_LINEAR);

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_WRAP_S, GL_CLAMP_TO_EDGE);

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_WRAP_T, GL_CLAMP_TO_EDGE);

glFramebufferTexture2D(

GL_FRAMEBUFFER, GL_COLOR_ATTACHMENT0, GL_TEXTURE_2D, pingpongBuffer[i], 0

);

}

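After obtaining the HDR texture and the extracted brightness texture, we first fill one of the ping-pong framebuffers with the brightness texture and then blur the image 10 times (5 times horizontally and 5 times vertically):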

GLboolean horizontal = true, first_iteration = true;

GLuint amount = 10;

shaderBlur.Use();

for (GLuint i = 0; i < amount; i++)

{

glBindFramebuffer(GL_FRAMEBUFFER, pingpongFBO[horizontal]);

glUniform1i(glGetUniformLocation(shaderBlur.Program, "horizontal"), horizontal);

glBindTexture(

GL_TEXTURE_2D, first_iteration ? colorBuffers[1] : pingpongBuffer[!horizontal]

);

RenderQuad();

horizontal = !horizontal;

if (first_iteration)

first_iteration = false;

}

glBindFramebuffer(GL_FRAMEBUFFER, 0);

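With this pair of framebuffers, each pass samples the color buffer of the other framebuffer and draws the blurred result into the currently bound framebuffer, alternating between the horizontal and vertical variants of the shader for the specified number of passes. In other words, we keep switching which framebuffer we draw to and which texture we draw with. The first pass blurs the extracted brightness texture into one of the framebuffers, the next pass blurs that framebuffer's color buffer into the other one, then the roles swap again, and so on.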
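The index bookkeeping of this loop is easy to get wrong, so it can help to trace it without any OpenGL calls. The sketch below replays the loop's logic only; `BlurPass`, `planBlurPasses` and `finalTexture` are hypothetical names, and a read source of -1 stands for the extracted bright-color buffer used in the first pass.

```cpp
#include <vector>

// One blur pass as the render loop would issue it.
struct BlurPass {
    bool horizontal;  // value of the `horizontal` uniform for this pass
    int writeTarget;  // index of the ping-pong framebuffer that is drawn into
    int readSource;   // index of the ping-pong texture that is sampled,
                      // or -1 for the extracted bright-color buffer
};

// Replays the toggling logic of the blur loop for `amount` passes.
std::vector<BlurPass> planBlurPasses(unsigned amount)
{
    std::vector<BlurPass> passes;
    bool horizontal = true;
    bool firstIteration = true;
    for (unsigned i = 0; i < amount; ++i) {
        BlurPass pass;
        pass.horizontal = horizontal;
        pass.writeTarget = horizontal ? 1 : 0;                        // pingpongFBO[horizontal]
        pass.readSource = firstIteration ? -1 : (horizontal ? 0 : 1); // texture [!horizontal]
        passes.push_back(pass);
        horizontal = !horizontal;
        firstIteration = false;
    }
    return passes;
}

// Index of the texture holding the final blurred image (amount must be >= 1).
int finalTexture(unsigned amount)
{
    return planBlurPasses(amount).back().writeTarget;
}
```

For `amount = 10` the first pass reads the bright-color buffer and writes framebuffer 1, every later pass reads the texture written by the pass before it, and the final result ends up in texture 0. That is the same index the loop's `!horizontal` expression evaluates to once the loop has finished.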

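Blurring the extracted brightness texture five times in this way (five horizontal and five vertical passes, ten in total) gives us a properly blurred image of the scene's bright regions.

The last step of bloom is to combine this blurred image with the scene's original HDR texture.

Blending both textures

With the scene's HDR texture and the blurred brightness texture, all that is left to achieve the bloom (or glow) effect is to combine the two. The final fragment shader, largely the same as the one from the HDR tutorial, additively blends both textures: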

#version 330 core

out vec4 FragColor;

in vec2 TexCoords;

uniform sampler2D scene;

uniform sampler2D bloomBlur;

uniform float exposure;

void main()

{

const float gamma = 2.2;

vec3 hdrColor = texture(scene, TexCoords).rgb;

vec3 bloomColor = texture(bloomBlur, TexCoords).rgb;

hdrColor += bloomColor; // additive blending

// tone mapping

vec3 result = vec3(1.0) - exp(-hdrColor * exposure);

// also gamma correct while we're at it

result = pow(result, vec3(1.0 / gamma));

FragColor = vec4(result, 1.0f);

}

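Note that we add the bloom effect before applying tone mapping. This way the added brightness of the bloom is also softly mapped into the LDR range, which gives better relative lighting.

With both textures combined, the bright regions of the scene now have a proper glow effect.

The complete code is as follows: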
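The effect of the ordering can be seen with plain numbers. The sketch below applies the same exposure tone mapping and gamma correction as the shader to a single channel; the function names `toneMap`, `bloomThenToneMap` and `toneMapThenBloom` are hypothetical, and the second ordering is shown only for comparison.

```cpp
#include <cmath>

// Exposure tone mapping followed by gamma correction for one color channel,
// mirroring the arithmetic of the final shader.
float toneMap(float hdr, float exposure, float gamma = 2.2f)
{
    const float mapped = 1.0f - std::exp(-hdr * exposure);
    return std::pow(mapped, 1.0f / gamma);
}

// Bloom added before tone mapping: the order the text recommends.
float bloomThenToneMap(float scene, float bloom, float exposure)
{
    return toneMap(scene + bloom, exposure);
}

// Bloom added after tone mapping: for comparison only.
float toneMapThenBloom(float scene, float bloom, float exposure)
{
    return toneMap(scene, exposure) + bloom;
}
```

With a scene value of 2.0, a bloom value of 3.0 and an exposure of 1.0, the first ordering stays just below 1.0, whereas the second produces a value well above 1.0 that the default framebuffer would simply clamp to white.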

#include <glad/glad.h>

#include <GLFW/glfw3.h>

#include "shader.h"

#include <iostream>

#include <string>

#include <vector>

#include <glm/glm.hpp>

#include <glm/gtc/matrix_transform.hpp>

#include <glm/gtc/type_ptr.hpp>

#include "Camera.h"

#include "Model.h"

#define STB_IMAGE_IMPLEMENTATION

#include "stb_image.h"

void framebuffer_size_callback(GLFWwindow* window, int width, int height);

void mouse_callback(GLFWwindow* window, double xpos, double ypos);

void scroll_callback(GLFWwindow* window, double xoffset, double yoffset);

void processInput(GLFWwindow *window);

unsigned int loadTexture(const char *path, bool gammaCorrection);

unsigned int loadCubemap(std::vector<std::string> faces);

void renderQuad();

void renderCube();

const unsigned int SCR_WIDTH = 1280;

const unsigned int SCR_HEIGHT = 720;

bool bloom = true;

bool bloomKeyPressed = false;

Camera camera(glm::vec3(0.0f, 0.0f, 5.0f));

float lastX = SCR_WIDTH / 2.0f;

float lastY = SCR_HEIGHT / 2.0f;

bool firstMouse = true;

float deltaTime = 0.0f;

float lastFrame = 0.0f;

GLboolean hdr = true; // unused in this sample; the Space key toggles bloom

GLfloat exposure = 1.0f; // decrease with G, increase with H

bool hdrKeyPressed = false;

//glm::vec3 lightPos(1.2f, 1.0f, 2.0f);

int main(void)

{

// initialize GLFW and create the window

//-------------------

glfwInit();

glfwWindowHint(GLFW_CONTEXT_VERSION_MAJOR, 3);

glfwWindowHint(GLFW_CONTEXT_VERSION_MINOR, 3);

glfwWindowHint(GLFW_OPENGL_PROFILE, GLFW_OPENGL_CORE_PROFILE);

GLFWwindow * window = glfwCreateWindow(SCR_WIDTH, SCR_HEIGHT, "window", NULL, NULL);

if (window == NULL)

{

std::cout << "Failed to create GLFW window" << std::endl;

glfwTerminate();

return -1;

}

glfwMakeContextCurrent(window);

glfwSetFramebufferSizeCallback(window, framebuffer_size_callback);

glfwSetCursorPosCallback(window, mouse_callback);

glfwSetScrollCallback(window, scroll_callback);

glfwSetInputMode(window, GLFW_CURSOR, GLFW_CURSOR_DISABLED);

// initialize GLAD

//--------

if (!gladLoadGLLoader((GLADloadproc)glfwGetProcAddress))

{

std::cout << "Failed to initialize GLAD" << std::endl;

return -1;

}

glEnable(GL_DEPTH_TEST);

// build the shaders

//---------

Shader shader("D:/OpenGL/LearnOpenGL-master/src/5.advanced_lighting/7.bloom/7.bloom.vs", "D:/OpenGL/LearnOpenGL-master/src/5.advanced_lighting/7.bloom/7.bloom.fs");

Shader hdrShader("D:/OpenGL/LearnOpenGL-master/src/5.advanced_lighting/6.hdr/6.hdr.vs", "D:/OpenGL/LearnOpenGL-master/src/5.advanced_lighting/6.hdr/6.hdr.fs");

Shader shaderLight("D:/OpenGL/LearnOpenGL-master/src/5.advanced_lighting/7.bloom/7.bloom.vs", "D:/OpenGL/LearnOpenGL-master/src/5.advanced_lighting/7.bloom/7.light_box.fs");

Shader shaderBlur("D:/OpenGL/LearnOpenGL-master/src/5.advanced_lighting/7.bloom/7.blur.vs", "D:/OpenGL/LearnOpenGL-master/src/5.advanced_lighting/7.bloom/7.blur.fs");

Shader shaderBloomFinal("D:/OpenGL/LearnOpenGL-master/src/5.advanced_lighting/7.bloom/7.bloom_final.vs", "D:/OpenGL/LearnOpenGL-master/src/5.advanced_lighting/7.bloom/7.bloom_final.fs");

unsigned int woodTexture = loadTexture("D:/OpenGL/LearnOpenGL-master/resources/textures/wood.png", true); // note that we're loading the texture as an SRGB texture

unsigned int containerTexture = loadTexture("D:/OpenGL/LearnOpenGL-master/resources/textures/container2.png", true);

// create a floating-point framebuffer with two color attachments

unsigned int hdrFBO;

glGenFramebuffers(1, &hdrFBO);

glBindFramebuffer(GL_FRAMEBUFFER, hdrFBO);

unsigned int colorBuffers[2];

glGenTextures(2, colorBuffers);

for (unsigned int i = 0; i < 2; i++)

{

glBindTexture(GL_TEXTURE_2D, colorBuffers[i]);

glTexImage2D(GL_TEXTURE_2D, 0, GL_RGB16F, SCR_WIDTH, SCR_HEIGHT, 0, GL_RGB, GL_FLOAT, NULL);

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MIN_FILTER, GL_LINEAR);

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MAG_FILTER, GL_LINEAR);

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_WRAP_S, GL_CLAMP_TO_EDGE); // we clamp to the edge as the blur filter would otherwise sample repeated texture values!

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_WRAP_T, GL_CLAMP_TO_EDGE);

// attach texture to framebuffer

glFramebufferTexture2D(GL_FRAMEBUFFER, GL_COLOR_ATTACHMENT0 + i, GL_TEXTURE_2D, colorBuffers[i], 0);

}

unsigned int rboDepth;

glGenRenderbuffers(1, &rboDepth);

glBindRenderbuffer(GL_RENDERBUFFER, rboDepth);

glRenderbufferStorage(GL_RENDERBUFFER, GL_DEPTH_COMPONENT, SCR_WIDTH, SCR_HEIGHT);

glFramebufferRenderbuffer(GL_FRAMEBUFFER, GL_DEPTH_ATTACHMENT, GL_RENDERBUFFER, rboDepth);

unsigned int attachments[2] = { GL_COLOR_ATTACHMENT0, GL_COLOR_ATTACHMENT1 };

glDrawBuffers(2, attachments);

if (glCheckFramebufferStatus(GL_FRAMEBUFFER) != GL_FRAMEBUFFER_COMPLETE)

std::cout << "Framebuffer not complete!" << std::endl;

glBindFramebuffer(GL_FRAMEBUFFER, 0);

unsigned int pingpongFBO[2];

unsigned int pingpongColorbuffers[2];

glGenFramebuffers(2, pingpongFBO);

glGenTextures(2, pingpongColorbuffers);

for (unsigned int i = 0; i < 2; i++)

{

glBindFramebuffer(GL_FRAMEBUFFER, pingpongFBO[i]);

glBindTexture(GL_TEXTURE_2D, pingpongColorbuffers[i]);

glTexImage2D(GL_TEXTURE_2D, 0, GL_RGB16F, SCR_WIDTH, SCR_HEIGHT, 0, GL_RGB, GL_FLOAT, NULL);

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MIN_FILTER, GL_LINEAR);

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MAG_FILTER, GL_LINEAR);

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_WRAP_S, GL_CLAMP_TO_EDGE);

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_WRAP_T, GL_CLAMP_TO_EDGE);

glFramebufferTexture2D(GL_FRAMEBUFFER, GL_COLOR_ATTACHMENT0, GL_TEXTURE_2D, pingpongColorbuffers[i], 0);

if (glCheckFramebufferStatus(GL_FRAMEBUFFER) != GL_FRAMEBUFFER_COMPLETE)

std::cout << "Framebuffer not complete!" << std::endl;

}

std::vector<glm::vec3> lightPositions;

lightPositions.push_back(glm::vec3(0.0f, 0.5f, 1.5f));

lightPositions.push_back(glm::vec3(-4.0f, 0.5f, -3.0f));

lightPositions.push_back(glm::vec3(3.0f, 0.5f, 1.0f));

lightPositions.push_back(glm::vec3(-.8f, 2.4f, -1.0f));

std::vector<glm::vec3> lightColors;

lightColors.push_back(glm::vec3(5.0f, 5.0f, 5.0f));

lightColors.push_back(glm::vec3(10.0f, 0.0f, 0.0f));

lightColors.push_back(glm::vec3(0.0f, 0.0f, 15.0f));

lightColors.push_back(glm::vec3(0.0f, 5.0f, 0.0f));

shader.use();

shader.setInt("diffuseTexture", 0);

shaderBlur.use();

shaderBlur.setInt("image", 0);

shaderBloomFinal.use();

shaderBloomFinal.setInt("scene", 0);

shaderBloomFinal.setInt("bloomBlur", 1);

// first render the lit scene into the floating-point framebuffer, then apply its color buffers on a screen-filling quad

while (!glfwWindowShouldClose(window))

{

float currentFrame = glfwGetTime();

deltaTime = currentFrame - lastFrame;

lastFrame = currentFrame;

processInput(window);

glClearColor(0.1f, 0.1f, 0.1f, 1.0f);

glClear(GL_COLOR_BUFFER_BIT | GL_DEPTH_BUFFER_BIT);

// 1. render the scene into the floating-point framebuffer

glBindFramebuffer(GL_FRAMEBUFFER, hdrFBO);

glClear(GL_COLOR_BUFFER_BIT | GL_DEPTH_BUFFER_BIT);

glm::mat4 projection = glm::perspective(glm::radians(camera.Zoom), (GLfloat)SCR_WIDTH / (GLfloat)SCR_HEIGHT, 0.1f, 100.0f);

glm::mat4 view = camera.GetViewMatrix();

glm::mat4 model = glm::mat4(1.0f);

shader.use();

shader.setMat4("projection", projection);

shader.setMat4("view", view);

glActiveTexture(GL_TEXTURE0);

glBindTexture(GL_TEXTURE_2D, woodTexture);

for (unsigned int i = 0; i < lightPositions.size(); i++)

{

shader.setVec3("lights[" + std::to_string(i) + "].Position", lightPositions[i]);

shader.setVec3("lights[" + std::to_string(i) + "].Color", lightColors[i]);

}

shader.setVec3("viewPos", camera.Position);

model = glm::mat4(1.0f);

model = glm::translate(model, glm::vec3(0.0f, -1.0f, 0.0));

model = glm::scale(model, glm::vec3(12.5f, 0.5f, 12.5f));

shader.setMat4("model", model);

renderCube();

glBindTexture(GL_TEXTURE_2D, containerTexture);

model = glm::mat4(1.0f);

model = glm::translate(model, glm::vec3(0.0f, 1.5f, 0.0));

model = glm::scale(model, glm::vec3(0.5f));

shader.setMat4("model", model);

renderCube();

model = glm::mat4(1.0f);

model = glm::translate(model, glm::vec3(2.0f, 0.0f, 1.0));

model = glm::scale(model, glm::vec3(0.5f));

shader.setMat4("model", model);

renderCube();

model = glm::mat4(1.0f);

model = glm::translate(model, glm::vec3(-1.0f, -1.0f, 2.0));

model = glm::rotate(model, glm::radians(60.0f), glm::normalize(glm::vec3(1.0, 0.0, 1.0)));

shader.setMat4("model", model);

renderCube();

model = glm::mat4(1.0f);

model = glm::translate(model, glm::vec3(0.0f, 2.7f, 4.0));

model = glm::rotate(model, glm::radians(23.0f), glm::normalize(glm::vec3(1.0, 0.0, 1.0)));

model = glm::scale(model, glm::vec3(1.25));

shader.setMat4("model", model);

renderCube();

model = glm::mat4(1.0f);

model = glm::translate(model, glm::vec3(-2.0f, 1.0f, -3.0));

model = glm::rotate(model, glm::radians(124.0f), glm::normalize(glm::vec3(1.0, 0.0, 1.0)));

shader.setMat4("model", model);

renderCube();

model = glm::mat4(1.0f);

model = glm::translate(model, glm::vec3(-3.0f, 0.0f, 0.0));

model = glm::scale(model, glm::vec3(0.5f));

shader.setMat4("model", model);

renderCube();

shaderLight.use();

shaderLight.setMat4("projection", projection);

shaderLight.setMat4("view", view);

for (unsigned int i = 0; i < lightPositions.size(); i++)

{

model = glm::mat4(1.0f);

model = glm::translate(model, glm::vec3(lightPositions[i]));

model = glm::scale(model, glm::vec3(0.25f));

shaderLight.setMat4("model", model);

shaderLight.setVec3("lightColor", lightColors[i]);

renderCube();

}

glBindFramebuffer(GL_FRAMEBUFFER, 0);

// 2. blur the bright fragments with a two-pass Gaussian blur

bool horizontal = true, first_iteration = true;

unsigned int amount = 10;

shaderBlur.use();

for (unsigned int i = 0; i < amount; i++)

{

glBindFramebuffer(GL_FRAMEBUFFER, pingpongFBO[horizontal]);

shaderBlur.setInt("horizontal", horizontal);

glBindTexture(GL_TEXTURE_2D, first_iteration ? colorBuffers[1] : pingpongColorbuffers[!horizontal]); // bind texture of other framebuffer (or scene if first iteration)

renderQuad();

horizontal = !horizontal;

if (first_iteration)

first_iteration = false;

}

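// 3. combine the scene and the blurred bright regions on a screen-filling quad in the default framebuffer

glBindFramebuffer(GL_FRAMEBUFFER, 0);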

glClear(GL_COLOR_BUFFER_BIT | GL_DEPTH_BUFFER_BIT);

shaderBloomFinal.use();

glActiveTexture(GL_TEXTURE0);

glBindTexture(GL_TEXTURE_2D, colorBuffers[0]);

glActiveTexture(GL_TEXTURE1);

glBindTexture(GL_TEXTURE_2D, pingpongColorbuffers[!horizontal]);

shaderBloomFinal.setInt("bloom", bloom);

shaderBloomFinal.setFloat("exposure", exposure);

renderQuad();

glfwSwapBuffers(window);

glfwPollEvents();

}

glfwTerminate();

return 0;

}

void processInput(GLFWwindow *window)

{

if (glfwGetKey(window, GLFW_KEY_ESCAPE) == GLFW_PRESS)

glfwSetWindowShouldClose(window, true);

if (glfwGetKey(window, GLFW_KEY_W) == GLFW_PRESS)

camera.ProcessKeyboard(FORWARD, deltaTime);

if (glfwGetKey(window, GLFW_KEY_S) == GLFW_PRESS)

camera.ProcessKeyboard(BACKWARD, deltaTime);

if (glfwGetKey(window, GLFW_KEY_A) == GLFW_PRESS)

camera.ProcessKeyboard(LEFT, deltaTime);

if (glfwGetKey(window, GLFW_KEY_D) == GLFW_PRESS)

camera.ProcessKeyboard(RIGHT, deltaTime);

if (glfwGetKey(window, GLFW_KEY_E) == GLFW_PRESS)

camera.ProcessKeyboard(UP, deltaTime);

if (glfwGetKey(window, GLFW_KEY_Q) == GLFW_PRESS)

camera.ProcessKeyboard(DOWN, deltaTime);

if (glfwGetKey(window, GLFW_KEY_G) == GLFW_PRESS)

{

if (exposure > 0.0f)

exposure -= 0.001f;

else

exposure = 0.0f;

}

else if (glfwGetKey(window, GLFW_KEY_H) == GLFW_PRESS)

{

exposure += 0.001f;

}

if (glfwGetKey(window, GLFW_KEY_SPACE) == GLFW_PRESS && !bloomKeyPressed)

{

bloom = !bloom;

bloomKeyPressed = true;

}

if (glfwGetKey(window, GLFW_KEY_SPACE) == GLFW_RELEASE)

{

bloomKeyPressed = false;

}

}

void framebuffer_size_callback(GLFWwindow* window, int width, int height)

{

glViewport(0, 0, width, height);

}

void mouse_callback(GLFWwindow* window, double xpos, double ypos)

{

if (firstMouse)

{

lastX = xpos;

lastY = ypos;

firstMouse = false;

}

float xoffset = xpos - lastX;

float yoffset = lastY - ypos; // reversed since y-coordinates go from bottom to top

lastX = xpos;

lastY = ypos;

camera.ProcessMouseMovement(xoffset, yoffset);

}

void scroll_callback(GLFWwindow* window, double xoffset, double yoffset)

{

camera.ProcessMouseScroll(yoffset);

}

unsigned int loadTexture(char const * path, bool gammaCorrection)

{

unsigned int textureID;

glGenTextures(1, &textureID);

int width, height, nrComponents;

unsigned char *data = stbi_load(path, &width, &height, &nrComponents, 0);

if (data)

{

GLenum internalFormat;

GLenum dataFormat;

if (nrComponents == 1)

{

internalFormat = dataFormat = GL_RED;

}

else if (nrComponents == 3)

{

internalFormat = gammaCorrection ? GL_SRGB : GL_RGB;

dataFormat = GL_RGB;

}

else if (nrComponents == 4)

{

internalFormat = gammaCorrection ? GL_SRGB_ALPHA : GL_RGBA;

dataFormat = GL_RGBA;

}

glBindTexture(GL_TEXTURE_2D, textureID);

glTexImage2D(GL_TEXTURE_2D, 0, internalFormat, width, height, 0, dataFormat, GL_UNSIGNED_BYTE, data);

glGenerateMipmap(GL_TEXTURE_2D);

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_WRAP_S, GL_REPEAT);

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_WRAP_T, GL_REPEAT);

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MIN_FILTER, GL_LINEAR_MIPMAP_LINEAR);

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MAG_FILTER, GL_LINEAR);

stbi_image_free(data);

}

else

{

std::cout << "Texture failed to load at path: " << path << std::endl;

stbi_image_free(data);

}

return textureID;

}

unsigned int loadCubemap(std::vector<std::string> faces)

{

unsigned int textureID;

glGenTextures(1, &textureID);

glBindTexture(GL_TEXTURE_CUBE_MAP, textureID);

int width, height, nrChannels;

for (unsigned int i = 0; i < faces.size(); i++)

{

unsigned char *data = stbi_load(faces[i].c_str(), &width, &height, &nrChannels, 0);

if (data)

{

glTexImage2D(GL_TEXTURE_CUBE_MAP_POSITIVE_X + i, 0, GL_RGB, width, height, 0, GL_RGB, GL_UNSIGNED_BYTE, data);

stbi_image_free(data);

}

else

{

std::cout << "Cubemap texture failed to load at path: " << faces[i] << std::endl;

stbi_image_free(data);

}

}

glTexParameteri(GL_TEXTURE_CUBE_MAP, GL_TEXTURE_MIN_FILTER, GL_LINEAR);

glTexParameteri(GL_TEXTURE_CUBE_MAP, GL_TEXTURE_MAG_FILTER, GL_LINEAR);

glTexParameteri(GL_TEXTURE_CUBE_MAP, GL_TEXTURE_WRAP_S, GL_CLAMP_TO_EDGE);

glTexParameteri(GL_TEXTURE_CUBE_MAP, GL_TEXTURE_WRAP_T, GL_CLAMP_TO_EDGE);

glTexParameteri(GL_TEXTURE_CUBE_MAP, GL_TEXTURE_WRAP_R, GL_CLAMP_TO_EDGE);

return textureID;

}

unsigned int quadVAO = 0;

unsigned int quadVBO;

void renderQuad()

{

if (quadVAO == 0)

{

float quadVertices[] = {

// positions // texture Coords

-1.0f, 1.0f, 0.0f, 0.0f, 1.0f,

-1.0f, -1.0f, 0.0f, 0.0f, 0.0f,

1.0f, 1.0f, 0.0f, 1.0f, 1.0f,

1.0f, -1.0f, 0.0f, 1.0f, 0.0f,

};

// setup plane VAO

glGenVertexArrays(1, &quadVAO);

glGenBuffers(1, &quadVBO);

glBindVertexArray(quadVAO);

glBindBuffer(GL_ARRAY_BUFFER, quadVBO);

glBufferData(GL_ARRAY_BUFFER, sizeof(quadVertices), &quadVertices, GL_STATIC_DRAW);

glEnableVertexAttribArray(0);

glVertexAttribPointer(0, 3, GL_FLOAT, GL_FALSE, 5 * sizeof(float), (void*)0);

glEnableVertexAttribArray(1);

glVertexAttribPointer(1, 2, GL_FLOAT, GL_FALSE, 5 * sizeof(float), (void*)(3 * sizeof(float)));

}

glBindVertexArray(quadVAO);

glDrawArrays(GL_TRIANGLE_STRIP, 0, 4);

glBindVertexArray(0);

}

unsigned int cubeVAO = 0;

unsigned int cubeVBO = 0;

void renderCube()

{

// initialize (if necessary)

if (cubeVAO == 0)

{

float vertices[] = {

// back face

-1.0f, -1.0f, -1.0f, 0.0f, 0.0f, -1.0f, 0.0f, 0.0f, // bottom-left

1.0f, 1.0f, -1.0f, 0.0f, 0.0f, -1.0f, 1.0f, 1.0f, // top-right

1.0f, -1.0f, -1.0f, 0.0f, 0.0f, -1.0f, 1.0f, 0.0f, // bottom-right

1.0f, 1.0f, -1.0f, 0.0f, 0.0f, -1.0f, 1.0f, 1.0f, // top-right

-1.0f, -1.0f, -1.0f, 0.0f, 0.0f, -1.0f, 0.0f, 0.0f, // bottom-left

-1.0f, 1.0f, -1.0f, 0.0f, 0.0f, -1.0f, 0.0f, 1.0f, // top-left

// front face

-1.0f, -1.0f, 1.0f, 0.0f, 0.0f, 1.0f, 0.0f, 0.0f, // bottom-left

1.0f, -1.0f, 1.0f, 0.0f, 0.0f, 1.0f, 1.0f, 0.0f, // bottom-right

1.0f, 1.0f, 1.0f, 0.0f, 0.0f, 1.0f, 1.0f, 1.0f, // top-right

1.0f, 1.0f, 1.0f, 0.0f, 0.0f, 1.0f, 1.0f, 1.0f, // top-right

-1.0f, 1.0f, 1.0f, 0.0f, 0.0f, 1.0f, 0.0f, 1.0f, // top-left

-1.0f, -1.0f, 1.0f, 0.0f, 0.0f, 1.0f, 0.0f, 0.0f, // bottom-left

// left face

-1.0f, 1.0f, 1.0f, -1.0f, 0.0f, 0.0f, 1.0f, 0.0f, // top-right

-1.0f, 1.0f, -1.0f, -1.0f, 0.0f, 0.0f, 1.0f, 1.0f, // top-left

-1.0f, -1.0f, -1.0f, -1.0f, 0.0f, 0.0f, 0.0f, 1.0f, // bottom-left

-1.0f, -1.0f, -1.0f, -1.0f, 0.0f, 0.0f, 0.0f, 1.0f, // bottom-left

-1.0f, -1.0f, 1.0f, -1.0f, 0.0f, 0.0f, 0.0f, 0.0f, // bottom-right

-1.0f, 1.0f, 1.0f, -1.0f, 0.0f, 0.0f, 1.0f, 0.0f, // top-right

// right face

1.0f, 1.0f, 1.0f, 1.0f, 0.0f, 0.0f, 1.0f, 0.0f, // top-left

1.0f, -1.0f, -1.0f, 1.0f, 0.0f, 0.0f, 0.0f, 1.0f, // bottom-right

1.0f, 1.0f, -1.0f, 1.0f, 0.0f, 0.0f, 1.0f, 1.0f, // top-right

1.0f, -1.0f, -1.0f, 1.0f, 0.0f, 0.0f, 0.0f, 1.0f, // bottom-right

1.0f, 1.0f, 1.0f, 1.0f, 0.0f, 0.0f, 1.0f, 0.0f, // top-left

1.0f, -1.0f, 1.0f, 1.0f, 0.0f, 0.0f, 0.0f, 0.0f, // bottom-left

// bottom face

-1.0f, -1.0f, -1.0f, 0.0f, -1.0f, 0.0f, 0.0f, 1.0f, // top-right

1.0f, -1.0f, -1.0f, 0.0f, -1.0f, 0.0f, 1.0f, 1.0f, // top-left

1.0f, -1.0f, 1.0f, 0.0f, -1.0f, 0.0f, 1.0f, 0.0f, // bottom-left

1.0f, -1.0f, 1.0f, 0.0f, -1.0f, 0.0f, 1.0f, 0.0f, // bottom-left

-1.0f, -1.0f, 1.0f, 0.0f, -1.0f, 0.0f, 0.0f, 0.0f, // bottom-right

-1.0f, -1.0f, -1.0f, 0.0f, -1.0f, 0.0f, 0.0f, 1.0f, // top-right

// top face

-1.0f, 1.0f, -1.0f, 0.0f, 1.0f, 0.0f, 0.0f, 1.0f, // top-left

1.0f, 1.0f , 1.0f, 0.0f, 1.0f, 0.0f, 1.0f, 0.0f, // bottom-right

1.0f, 1.0f, -1.0f, 0.0f, 1.0f, 0.0f, 1.0f, 1.0f, // top-right

1.0f, 1.0f, 1.0f, 0.0f, 1.0f, 0.0f, 1.0f, 0.0f, // bottom-right

-1.0f, 1.0f, -1.0f, 0.0f, 1.0f, 0.0f, 0.0f, 1.0f, // top-left

-1.0f, 1.0f, 1.0f, 0.0f, 1.0f, 0.0f, 0.0f, 0.0f // bottom-left

};

glGenVertexArrays(1, &cubeVAO);

glGenBuffers(1, &cubeVBO);

// fill buffer

glBindBuffer(GL_ARRAY_BUFFER, cubeVBO);

glBufferData(GL_ARRAY_BUFFER, sizeof(vertices), vertices, GL_STATIC_DRAW);

// link vertex attributes

glBindVertexArray(cubeVAO);

glEnableVertexAttribArray(0);

glVertexAttribPointer(0, 3, GL_FLOAT, GL_FALSE, 8 * sizeof(float), (void*)0);

glEnableVertexAttribArray(1);

glVertexAttribPointer(1, 3, GL_FLOAT, GL_FALSE, 8 * sizeof(float), (void*)(3 * sizeof(float)));

glEnableVertexAttribArray(2);

glVertexAttribPointer(2, 2, GL_FLOAT, GL_FALSE, 8 * sizeof(float), (void*)(6 * sizeof(float)));

glBindBuffer(GL_ARRAY_BUFFER, 0);

glBindVertexArray(0);

}

// render Cube

glBindVertexArray(cubeVAO);

glDrawArrays(GL_TRIANGLES, 0, 36);

glBindVertexArray(0);

}