1. Convert the .xml dump into a .txt file in which each line is one article.

```python
from gensim.corpora import WikiCorpus

input_file_name = '/Users/admin/A_NLP/data/zhwiki-20190720-pages-articles-multistream.xml.bz2'
output_file_name = 'wiki.cn.txt'

# dictionary={} skips building a vocabulary up front.
# lemmatize=False is a gensim 3.x argument; it was removed in gensim 4.0.
input_file = WikiCorpus(input_file_name, lemmatize=False, dictionary={})

count = 0
# WikiCorpus does not expose a close() method, so only the output file is closed.
with open(output_file_name, 'w', encoding='utf-8') as output_file:
    for text in input_file.get_texts():
        output_file.write(' '.join(text) + '\n')  # one article per line
        count += 1
        if count % 10000 == 0:
            print('Processed %d articles so far' % count)
```

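2. Process the corpus line by line: convert Traditional Chinese to Simplified Chinese, clean the text, and segment it into words.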

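```python
import re
import opencc
import jieba

cc = opencc.OpenCC('t2s')  # Traditional -> Simplified converter

# 'w' rather than 'a+', so rerunning this step does not append duplicate lines.
with open('wiki.cn.txt', 'r', encoding='utf-8') as fr, \
        open('wiki.sen.txt', 'w', encoding='utf-8') as fw:
    for line in fr:
        simple_format = cc.convert(line)  # Traditional -> Simplified
        # Keep only runs of Chinese characters; everything else is discarded.
        zh_list = re.findall('[\u4e00-\u9fa5]+', simple_format)
        sentence = []
        for short_sentence in zh_list:
            # The text is already Simplified here, so it is not converted again.
            sentence += list(jieba.cut(short_sentence))
        fw.write(' '.join(sentence) + '\n')
```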
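To see what the cleaning step keeps, here is a minimal sketch that applies the same regular expression to a made-up line. The sample string and the helper name `keep_chinese` are illustrative only and are not part of the original pipeline.

```python
import re

# Same character class as in the cleaning step: the basic CJK ideograph range.
ZH_RUN = re.compile('[\u4e00-\u9fa5]+')

def keep_chinese(line):
    """Return the runs of Chinese characters found in line, in order."""
    return ZH_RUN.findall(line)

# Made-up sample mixing Chinese text, Latin letters, digits and punctuation.
sample = '数学(Mathematics)是研究数量、结构的学科,2019年。'
print(keep_chinese(sample))
```

For this sample the result is `['数学', '是研究数量', '结构的学科', '年']`: Latin letters, digits and punctuation are dropped, and every punctuation mark splits the text into a separate run. Each run is segmented by jieba on its own, so no word can span a punctuation mark.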

3. Print the first line of the processed file to check the result.

```python
with open('wiki.sen.txt', 'r', encoding='utf-8') as ft:
    for line in ft:
        print(line)
        break  # only the first line is needed
```

4. Train the word vectors and save the model.

```python
import multiprocessing
from gensim.models import Word2Vec
from gensim.models.word2vec import LineSentence

input_file_name = 'wiki.sen1k.txt'
model_file_name = 'wiki_min_count500.model'

model = Word2Vec(LineSentence(input_file_name),
                 size=100,       # vector dimensionality (renamed vector_size in gensim 4.0)
                 window=5,       # context window size
                 min_count=500,  # ignore words that occur fewer than 500 times
                 workers=multiprocessing.cpu_count())
model.save(model_file_name)
```
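Step 4 reads `wiki.sen1k.txt`, a file that the earlier steps do not create. Assuming it holds the first 1,000 lines of `wiki.sen.txt` (the plots at the end mention training on the first 1,000 and 10,000 lines), one way to produce such a subset is sketched below. The helper name `head_lines` is hypothetical.

```python
from itertools import islice

def head_lines(src_path, dst_path, n):
    """Copy the first n lines of src_path to dst_path; return the lines written."""
    written = 0
    with open(src_path, 'r', encoding='utf-8') as src, \
            open(dst_path, 'w', encoding='utf-8') as dst:
        # islice stops after n lines without reading the rest of the file.
        for line in islice(src, n):
            dst.write(line)
            written += 1
    return written

# Example call (file names are assumptions based on the steps above):
# head_lines('wiki.sen.txt', 'wiki.sen1k.txt', 1000)
```

With a subset this small, `min_count=500` probably leaves only very frequent words in the vocabulary, which would also help keep the later plot readable.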

5. Test a few words by finding the 10 most similar words for each of them.

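```python
from gensim.models import Word2Vec

wiki_model = Word2Vec.load('wiki_min_count500.model')
test = ['文学', '雨水', '汽车', '怪物', '几何', '故宫']
for word in test:
    # Query through .wv; calling most_similar on the model itself is deprecated.
    res = wiki_model.wv.most_similar(word)  # topn defaults to 10
    print(word)
    print(res)
```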
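`most_similar` ranks words by the cosine similarity between their vectors. As a rough illustration of that measure, here is a pure-Python sketch on made-up 3-dimensional vectors. The vectors and the function name are illustrative and are not values from the trained model.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length, non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Toy vectors, made up for illustration.
v1 = [1.0, 2.0, 3.0]
v2 = [2.0, 4.0, 6.0]   # same direction as v1, so similarity is close to 1
v3 = [3.0, 0.0, -1.0]  # orthogonal to v1, so similarity is 0

print(cosine_similarity(v1, v2))
print(cosine_similarity(v1, v3))
```

Because only the direction of the vectors matters, two words can score as highly similar even when their vectors have very different lengths.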

6. Inspect a word vector.

```python
wiki_model.wv['文学']
```

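7. Visualize the vectors. t-SNE is used to reduce the word vectors to two dimensions. The idea behind the reduction is that vectors that were close together stay as close as possible afterwards, and vectors that were far apart stay as far apart as possible (in practice t-SNE preserves local neighborhoods more faithfully than global distances).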

```python
from sklearn.manifold import TSNE
import matplotlib.pyplot as plt
%matplotlib inline
# ^ Jupyter magic; remove it when running as a plain script.

def tsne_plot(model):
    "Create a t-SNE model and plot it"
    labels = []
    tokens = []

    # model.wv.vocab is the gensim 3.x API (gensim 4.0 uses model.wv.key_to_index).
    for word in model.wv.vocab:
        tokens.append(model.wv[word])
        labels.append(word)

    tsne_model = TSNE(perplexity=40, n_components=2, init='pca', n_iter=2500, random_state=23)
    new_values = tsne_model.fit_transform(tokens)

    x = []
    y = []
    for value in new_values:
        x.append(value[0])
        y.append(value[1])

    plt.figure(figsize=(16, 16))
    for i in range(len(x)):
        plt.scatter(x[i], y[i])
        plt.annotate(labels[i],
                     xy=(x[i], y[i]),
                     xytext=(5, 2),
                     textcoords='offset points',
                     ha='right',
                     va='bottom')
    plt.show()

wiki_model = Word2Vec.load('wiki_min_count500.model')
tsne_plot(wiki_model)
```

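The first plot comes from the model trained on the full corpus. It contains so many words that the plot turns into one big ink blot. Because the simhei.ttf font file had not been replaced, the Chinese labels are not displayed either.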

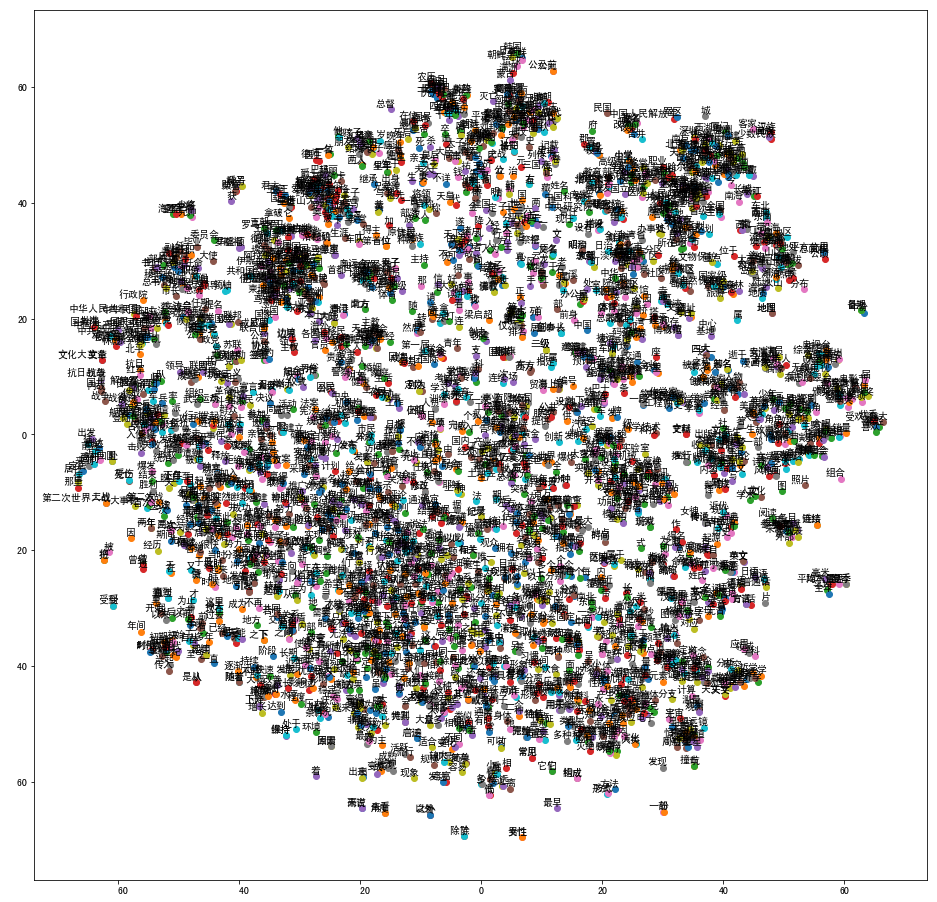
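One way to avoid the blob in the first plot, other than training on less data, would be to plot only the most frequent words instead of the whole vocabulary. The sketch below counts tokens in a segmented corpus file and returns the top n. `top_words` is a hypothetical helper, and `tsne_plot` would have to be adapted to accept a word list before it could use the result.

```python
from collections import Counter

def top_words(corpus_path, n):
    """Return the n most frequent space-separated tokens in corpus_path."""
    counts = Counter()
    with open(corpus_path, 'r', encoding='utf-8') as f:
        for line in f:
            counts.update(line.split())
    # most_common sorts by descending count.
    return [word for word, _ in counts.most_common(n)]

# Example call (file name is an assumption based on step 2):
# frequent = top_words('wiki.sen.txt', 200)
```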

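The second plot uses the first 10,000 lines of the data as the training corpus, with the simhei.ttf file replaced in the appropriate location. The result looks like this: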

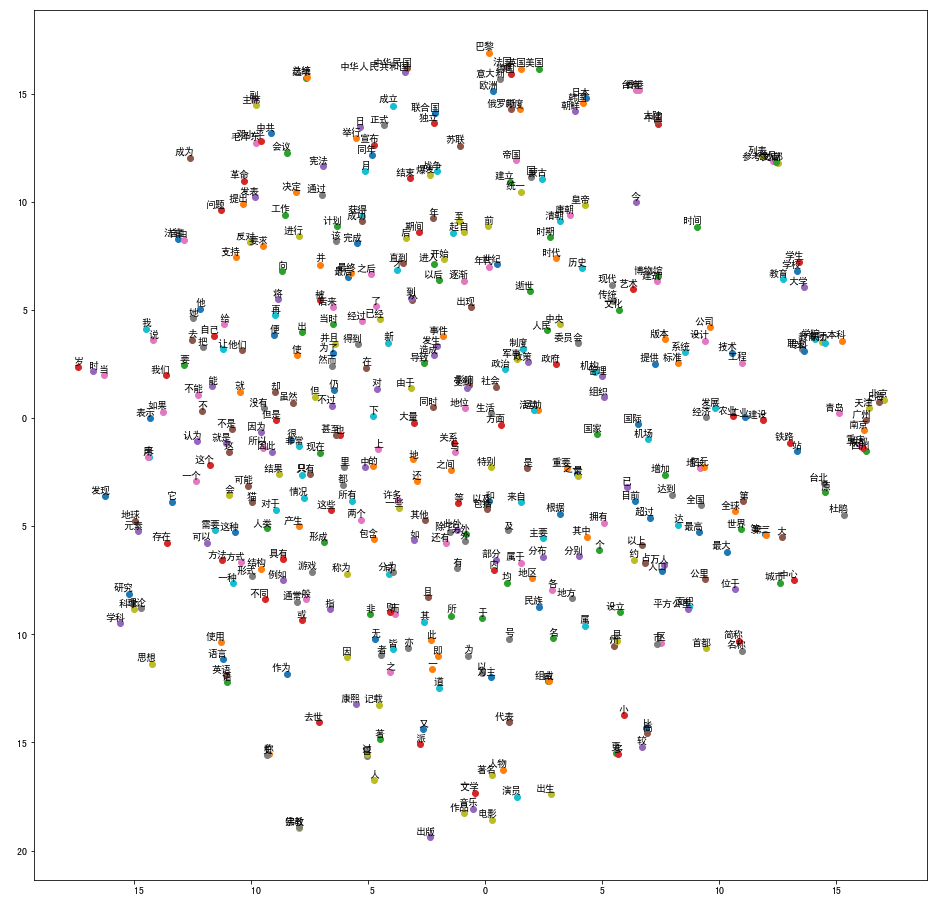

To make things a little clearer, the third plot uses only the first 1,000 lines for training. The result is as follows: