First, download the Chinese Wikipedia corpus from: http://download.wikipedia.com/zhwiki/latest/zhwiki-latest-pages-articles.xml.bz2
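A browser download works fine for the dump. If you would rather fetch it from a script, here is a minimal standard-library sketch. The helper names download and filename_from_url are hypothetical, and it assumes the URL above is still served (urllib follows redirects). It streams the response to disk in chunks, so the large archive never has to fit in memory.

```python
import posixpath
import shutil
import urllib.request
from typing import Optional
from urllib.parse import urlparse

DUMP_URL = "http://download.wikipedia.com/zhwiki/latest/zhwiki-latest-pages-articles.xml.bz2"


def filename_from_url(url: str) -> str:
    """Return the last path component of a URL (the dump's file name)."""
    return posixpath.basename(urlparse(url).path)


def download(url: str, dest: Optional[str] = None, chunk_size: int = 1 << 20) -> str:
    """Stream url to dest (defaults to the URL's file name) in chunk_size pieces."""
    dest = dest or filename_from_url(url)
    with urllib.request.urlopen(url) as resp, open(dest, "wb") as out:
        # copyfileobj copies chunk_size bytes at a time instead of reading everything at once.
        shutil.copyfileobj(resp, out, chunk_size)
    return dest
```

Calling download(DUMP_URL) saves zhwiki-latest-pages-articles.xml.bz2 in the current directory.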

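Once the corpus is downloaded, the XML file has to be converted into a plain text file. With Python 3.x you may run into bytes vs. str problems. I tested the following code and it works: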

```python
# -*- coding:utf-8 -*-
# Author:Gao
# Convert the Wikipedia XML dump to plain text: one article per line, tokens separated by spaces.
import logging
import os.path
import sys
import warnings

warnings.filterwarnings(action='ignore', category=UserWarning, module='gensim')

from gensim.corpora import WikiCorpus

if __name__ == '__main__':
    program = os.path.basename(sys.argv[0])
    logger = logging.getLogger(program)
    logging.basicConfig(format='%(asctime)s: %(levelname)s: %(message)s')
    logging.root.setLevel(level=logging.INFO)
    logger.info("running %s" % ' '.join(sys.argv))

    # check and process input arguments
    if len(sys.argv) != 3:
        print("Usage: python %s zhwiki-latest-pages-articles.xml.bz2 wiki.zh.text" % program)
        sys.exit(1)
    inp, outp = sys.argv[1:3]
    space = " "
    i = 0

    # dictionary={} skips building a gensim Dictionary (an extra full pass over the dump),
    # which is not needed here. gensim 4.x removed the lemmatize argument;
    # on gensim 3.x also pass lemmatize=False.
    wiki = WikiCorpus(inp, dictionary={})
    with open(outp, 'w', encoding='utf-8') as output:
        for text in wiki.get_texts():
            # text is the list of tokens of one article
            output.write(space.join(text) + "\n")
            i += 1
            if i % 10000 == 0:
                logger.info("Saved " + str(i) + " articles")

    logger.info("Finished. Saved " + str(i) + " articles")
```

Run it from a cmd terminal, for example:

python D:\xxx\xxx\process.py D:\xxx\xxx\zhwiki-latest-pages-articles.xml.bz2 D:\xxx\xxx\wiki.zh.text

Use the actual paths of your files. Python must also be on the PATH environment variable, otherwise the python command will not be recognized.
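On the bytes vs. str problem: under Python 3, " ".join() raises TypeError if any token is bytes rather than str. The assumption here is that such tokens can appear with some older gensim/script combinations; the script above writes str tokens directly. If you adapt the script and hit that error, a small helper can decode bytes tokens before joining. to_line is a hypothetical name:

```python
from typing import Iterable, Union


def to_line(tokens: Iterable[Union[bytes, str]]) -> str:
    """Join tokens with single spaces, decoding any bytes tokens as UTF-8 first."""
    parts = [t.decode("utf-8") if isinstance(t, bytes) else t for t in tokens]
    return " ".join(parts) + "\n"
```

Inside the loop this would replace the join: output.write(to_line(text)).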

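The run produces wiki.zh.text, which still contains Traditional Chinese characters. To convert them to Simplified Chinese you need a conversion tool, OpenCC, which can be downloaded from: https://bintray.com/package/files/byvoid/opencc/OpenCC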

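Drag the wiki.zh.text file generated above into the opencc-1.0.1-win64 folder. Then open cmd in that folder and enter the following command:

opencc -i wiki.zh.text -o wiki.zh.jian.text -c t2s.json

Note that the file paths need to be included.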
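You can also drive the conversion from Python, for example to keep the whole pipeline in one script, by running the same command through subprocess. This sketch assumes the opencc executable and t2s.json can be found at the paths you pass in; on Windows that usually means full paths into the opencc-1.0.1-win64 folder. opencc_command and convert_t2s are hypothetical helper names, and only the -i, -o and -c options shown above are used.

```python
import subprocess
from typing import List


def opencc_command(src: str, dst: str, config: str = "t2s.json",
                   exe: str = "opencc") -> List[str]:
    """Build the argument list for: opencc -i src -o dst -c config."""
    return [exe, "-i", src, "-o", dst, "-c", config]


def convert_t2s(src: str, dst: str, config: str = "t2s.json",
                exe: str = "opencc") -> None:
    """Run OpenCC; check=True raises CalledProcessError if it exits with an error."""
    subprocess.run(opencc_command(src, dst, config, exe), check=True)
```

Passing a list instead of a single command string means paths containing spaces need no shell quoting.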

After converting to Simplified Chinese, the text has to be segmented into words. The segmentation code is as follows:

```python
import jieba


def cut_words(sentence):
    # Segment one line with jieba and join the tokens with single spaces.
    # Whitespace-only tokens are dropped. Returns str; calling .encode('utf-8') here
    # would return bytes under Python 3.
    return " ".join(w for w in jieba.cut(sentence) if w.strip())


with open('wiki.zh.jian.text', 'r', encoding='utf-8') as f, \
        open('zh.jian.wiki.seg.txt', 'w', encoding='utf-8') as target:
    print('open files')
    # Each input line is one article; keep one article per output line.
    for line_num, line in enumerate(f, start=1):
        target.write(cut_words(line.strip()) + "\n")
        if line_num % 10000 == 0:
            print('---- processed', line_num, 'articles ----')
```
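The segmented file is the input that the training script below passes to gensim's LineSentence. Per gensim's documentation, LineSentence treats each line as one sentence and splits it on whitespace. Lines longer than max_sentence_length words (10000 by default) are cut into chunks. The following standard-library approximation is a quick way to preview what the model will see. iter_sentences is a hypothetical name, and this is not gensim's own code:

```python
from typing import Iterable, Iterator, List


def iter_sentences(lines: Iterable[str], max_sentence_length: int = 10000) -> Iterator[List[str]]:
    """Yield each line as a list of whitespace-separated tokens.

    Overly long lines are cut into chunks of max_sentence_length tokens;
    empty lines yield nothing.
    """
    for line in lines:
        words = line.split()
        for start in range(0, len(words), max_sentence_length):
            yield words[start:start + max_sentence_length]
```

Any iterable of lines works, including an open file. For example, inside `with open('zh.jian.wiki.seg.txt', encoding='utf-8') as f:`, the expression itertools.islice(iter_sentences(f), 3) gives the first three sentences.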

Once the text is segmented, the word vectors can be trained with the following code:

```python
import logging
import multiprocessing
import os
import sys

from gensim.models import Word2Vec
from gensim.models.word2vec import LineSentence

if __name__ == '__main__':
    program = os.path.basename(sys.argv[0])
    logger = logging.getLogger(program)
    logging.basicConfig(format='%(asctime)s: %(levelname)s: %(message)s')
    logging.root.setLevel(level=logging.INFO)
    logger.info("running %s" % ' '.join(sys.argv))

    # check and process input arguments
    if len(sys.argv) < 4:
        print("Usage: python %s input_text output_gensim_model output_word_vector" % program)
        sys.exit(1)
    inp, outp1, outp2 = sys.argv[1:4]

    # vector_size is the dimensionality of the word vectors (called size in gensim 3.x);
    # min_count=5 discards words that occur fewer than 5 times in the corpus.
    model = Word2Vec(LineSentence(inp), vector_size=200, window=5, min_count=5,
                     workers=multiprocessing.cpu_count())

    model.save(outp1)  # full model, can be loaded again with Word2Vec.load
    model.wv.save_word2vec_format(outp2, binary=False)  # word vectors only, text format
```

Then enter the following at the command line:

在命令行中输入

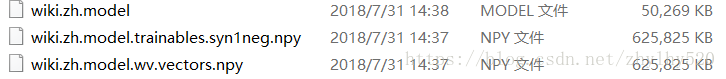

python word2vec_model.py zh.jian.wiki.seg.txt wiki.zh.model wiki.zh.text.vector,与上面一样需要添加相应的文件路径,生成的文件如下
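To get a feel for what min_count=5 in the training script removes, you can count token frequencies yourself. Word2Vec ignores every word whose total frequency across the corpus is below min_count. The sketch below uses collections.Counter and only mimics that frequency cut, not the rest of gensim's vocabulary building; surviving_vocab is a hypothetical helper:

```python
from collections import Counter
from typing import Dict, Iterable, List


def surviving_vocab(sentences: Iterable[List[str]], min_count: int = 5) -> Dict[str, int]:
    """Count words over all sentences and keep those occurring at least min_count times."""
    counts = Counter(word for sentence in sentences for word in sentence)
    return {word: n for word, n in counts.items() if n >= min_count}
```

To try it on the segmented corpus, pass a generator such as (line.split() for line in f), where f is the opened zh.jian.wiki.seg.txt. Then compare len(surviving_vocab(...)) across a few min_count values.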

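Once the model is trained, it can be loaded and queried as follows:

```python
from gensim.models import Word2Vec

# Load the full model saved by the training script (use your own path).
model = Word2Vec.load(r'D:\wikicn\wiki.zh.model')

word2 = '周琦'
# model.wv.most_similar works in gensim 3.x and 4.x; the Word2Vec.most_similar
# shortcut was removed in gensim 4.0.
if word2 in model.wv:
    # By default returns the 10 most similar words as (word, cosine similarity) pairs.
    result = model.wv.most_similar(word2)
    print("Words most similar to '%s':" % word2)
    for key in result:
        print(key[0], key[1])
else:
    print("'%s' is not in the model's vocabulary" % word2)
```

The results are as follows: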
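most_similar ranks the vocabulary by cosine similarity to the query word's vector and returns the top 10 (word, similarity) pairs by default. The toy version below spells this out for a single query word using plain Python lists. It is a sketch for intuition only: most_similar_toy and the example vectors are made up. gensim's own implementation works on normalized numpy arrays and also accepts several positive and negative words.

```python
import math
from typing import Dict, List, Sequence, Tuple


def cosine(u: Sequence[float], v: Sequence[float]) -> float:
    """Cosine similarity of two non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def most_similar_toy(word: str, vectors: Dict[str, List[float]],
                     topn: int = 10) -> List[Tuple[str, float]]:
    """Return the topn words closest to `word` by cosine similarity, excluding `word` itself."""
    if word not in vectors:
        raise KeyError(f"{word!r} not in vocabulary")
    query = vectors[word]
    scored = [(w, cosine(query, v)) for w, v in vectors.items() if w != word]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:topn]
```

With vectors = {"a": [1.0, 0.0], "b": [1.0, 1.0], "c": [0.0, 1.0], "d": [-1.0, 0.0]}, the call most_similar_toy("a", vectors, topn=2) ranks "b" (about 0.707) above "c" (0.0). "d" points the opposite way and scores -1.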

Part of the code is adapted from: https://blog.csdn.net/weixin_40400177/article/details/79366065