Copyright notice: This is an original article by the blogger and may not be reproduced without the blogger's permission. https://blog.csdn.net/qq_29027865/article/details/91156836

POS Tagging: Removing Stop Words

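Part-of-speech (POS) tagging labels each word produced by segmentation with its part of speech. Segmenters usually output the tag together with each word, so a practical use of POS tagging is filtering by tag (dropping particles, stop words, and so on) to obtain cleaner, more useful text.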

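The approach has two steps. First, define a custom dictionary of the POS tags you do not want. Second, read the file, segment each line, drop every word whose POS tag appears in that dictionary, and join the remaining words back together.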
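The two steps can be tried without a segmenter. The following is a minimal sketch in plain Python, assuming the segmenter has already produced (word, POS) pairs. The sample pairs, the small `drop_pos` set, and the `filter_by_pos` helper are illustrative assumptions, not part of the original code.

```python
# Hypothetical sample data: (word, pos) pairs as a segmenter might emit them.
tagged = [("我", "rr"), ("喜欢", "vi"), ("的", "ude1"), ("苹果", "nf"), ("。", "wj")]

# Step 1: the custom "dictionary" of POS tags to discard
# (a small illustrative subset, not the article's full set).
drop_pos = {"rr", "ude1", "wj"}


def filter_by_pos(pairs, drop):
    """Keep only the words whose POS tag is not in `drop`."""
    return [word for word, pos in pairs if pos not in drop]


# Step 2: filter, then join the surviving words back into one line.
kept = filter_by_pos(tagged, drop_pos)
print(" ".join(kept))  # prints: 喜欢 苹果
```

Using a set for the unwanted tags keeps each membership check constant-time, which matters when the filter runs over every word of a large corpus.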

extract_data.py:

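from tokenizer import seg_sentences

# Read the input line by line and write the filtered words, joined by spaces.
# The with-statement closes both files even if an error occurs.
with open("text.txt", 'r', encoding='utf8') as fp, \
        open("out.txt", 'w', encoding='utf8') as fout:
    for line in fp:
        line = line.strip()
        if len(line) > 0:
            fout.write(' '.join(seg_sentences(line)) + "\n")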

tokenizer.py:

from jpype import JClass, getDefaultJVMPath, startJVM

# Start the JVM with the HanLP jar and its data directory on the classpath.
# The paths are from the author's machine; ";" is the Windows classpath separator.
startJVM(getDefaultJVMPath(), r"-Djava.class.path=E:\NLP\hanlp\hanlp-1.5.0.jar;E:\NLP\hanlp",
         "-Xms1g",
         "-Xmx1g")

Tokenizer = JClass('com.hankcs.hanlp.tokenizer.StandardTokenizer')

# Custom dictionary of POS tags to drop
drop_pos_set = {'xu', 'xx', 'y', 'yg', 'wh', 'wky', 'wkz', 'wp', 'ws', 'wyy', 'wyz', 'wb', 'u', 'ud', 'ude1', 'ude2', 'ude3', 'udeng', 'udh', 'p', 'rr'}

def to_string(sentence, return_generator=False):
    # Segment the text and split each term into its word and POS tag
    if return_generator:
        # toString() renders each term as "word/pos"; str() makes sure we split a Python string
        return (str(word_pos_item.toString()).split('/') for word_pos_item in Tokenizer.segment(sentence))
    else:
        # Return a list of (word, pos) tuples, calling toString() only once per term
        return [tuple(str(word_pos_item.toString()).split('/')[:2]) for word_pos_item in Tokenizer.segment(sentence)]

def seg_sentences(sentence, with_filter=True, return_generator=False):
    segs = to_string(sentence, return_generator=return_generator)
    # with_filter controls whether words with unwanted POS tags are dropped
    if with_filter:
        # Keep a word only if its POS tag is not in the custom drop set
        g = [word_pos_pair[0] for word_pos_pair in segs if len(word_pos_pair) == 2 and word_pos_pair[0] != ' ' and word_pos_pair[1] not in drop_pos_set]
    else:
        g = [word_pos_pair[0] for word_pos_pair in segs if len(word_pos_pair) == 2 and word_pos_pair[0] != ' ']
    return iter(g) if return_generator else g
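The functions above split each term's string form on every "/", so a word that itself contains a slash would be split in the wrong place. As a hedged sketch, assuming terms render as word/pos the way the code above relies on, splitting on the last slash only avoids this. `split_word_pos` is a hypothetical helper, not part of the original code or of HanLP.

```python
def split_word_pos(token):
    """Split a "word/pos" string on its last slash.

    Returns a (word, pos) tuple, or None when the token has no slash
    or no word in front of the slash.
    """
    word, sep, pos = token.rpartition("/")
    if not sep or not word:
        return None
    return word, pos


print(split_word_pos("苹果/n"))  # an ordinary word/pos token
print(split_word_pos("//w"))     # a token whose word is itself a slash
print(split_word_pos("abc"))     # no tag at all
```

Returning None for malformed tokens lets the caller skip them explicitly, in place of the `len(word_pos_pair)==2` check.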

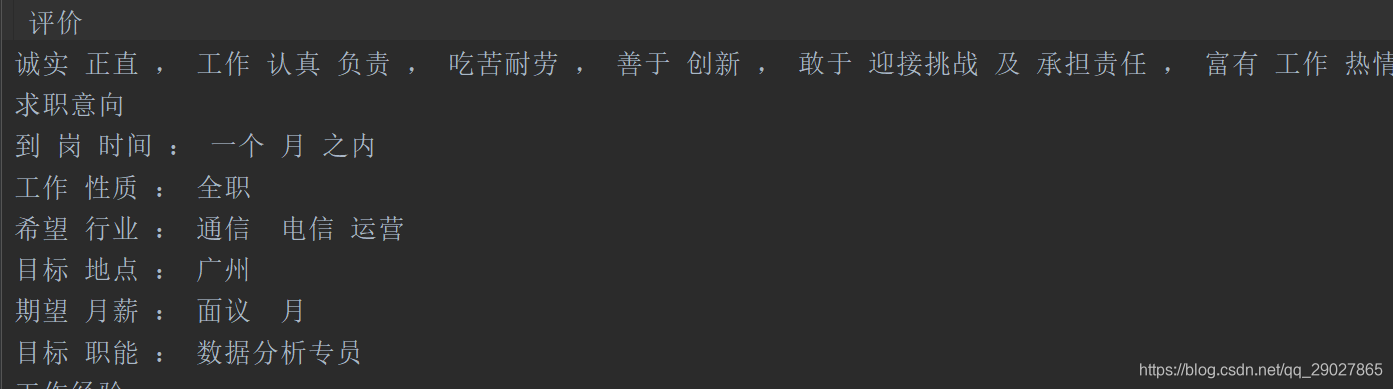

Processing result: