Copyright notice: This is an original article by the author and may not be reproduced without the author's permission. https://blog.csdn.net/gdkyxy2013/article/details/89530557

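Note: the code in this post is written mainly in Scala. The post is code-centric, and the code carries detailed comments. Related articles will be published in my blog column "Spark 2.0 Machine Learning"; you are welcome to follow it.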

I. Matrix and Vector Computation

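Under the hood, Spark MLlib's vector and matrix operations use the Breeze library, which provides Vector/Matrix implementations and the corresponding computation interface (Linalg). MLlib also provides its own Vector and Linalg implementations.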

1. Breeze creation functions

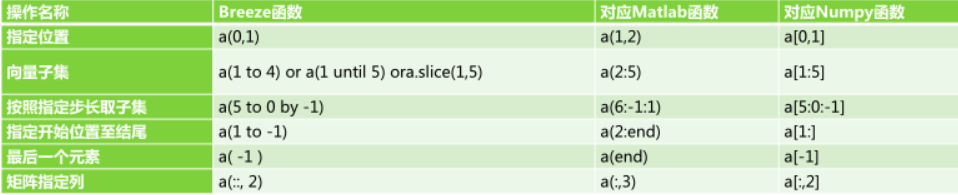
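As a small illustration of the creation functions, the sketch below builds a few vectors and matrices. It assumes Breeze is on the classpath; the object name `BreezeCreationSketch` and the value names are made up for this example.

```scala
import breeze.linalg.{DenseMatrix, DenseVector, diag}

// Hypothetical example object; only the Breeze calls themselves come from the library.
object BreezeCreationSketch {
  // 2 x 3 matrix filled with zeros
  val zerosM = DenseMatrix.zeros[Double](2, 3)
  // Length-3 vectors filled with zeros and with ones
  val zerosV = DenseVector.zeros[Double](3)
  val onesV = DenseVector.ones[Double](3)
  // Length-3 vector filled with a constant
  val filled = DenseVector.fill(3)(5.0)
  // Integer range from 1 (inclusive) to 10 (exclusive) with step 2
  val ranged = DenseVector.range(1, 10, 2)
  // 3 x 3 identity matrix
  val eye = DenseMatrix.eye[Double](3)
  // Diagonal matrix built from a vector
  val diagM = diag(DenseVector(1.0, 2.0, 3.0))
  // Matrix built from literal rows, and its transpose
  val m = DenseMatrix((1.0, 2.0), (3.0, 4.0))
  val mT = m.t

  def main(args: Array[String]): Unit = {
    println(zerosM)
    println(ranged)
    println(eye)
    println(diagM)
    println(mT)
  }
}
```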

2. Breeze element access
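The following is a minimal sketch of element access, assuming Breeze is on the classpath. The object and value names are invented for illustration, and the slices shown are views over the original data rather than copies.

```scala
import breeze.linalg.{DenseMatrix, DenseVector}

// Hypothetical example object illustrating indexing and slicing.
object BreezeAccessSketch {
  val v = DenseVector(10.0, 20.0, 30.0, 40.0, 50.0)
  val m = DenseMatrix((1.0, 2.0, 3.0), (4.0, 5.0, 6.0))

  // Single element of a vector (0-based)
  val first = v(0)
  // A negative index counts from the end of the vector
  val last = v(-1)
  // Sub-vector covering indices 1, 2 and 3
  val slice = v(1 to 3)
  // Single element of a matrix: row 1, column 2
  val cell = m(1, 2)
  // Column 1 of the matrix as a vector
  val col = m(::, 1)
  // Row 0 of the matrix as a transposed (row) vector
  val row = m(0, ::)

  def main(args: Array[String]): Unit = {
    println(s"first=$first last=$last cell=$cell")
    println(slice)
    println(col)
    println(row)
  }
}
```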

3. Breeze element operations

4. Breeze numeric functions

5. Breeze sum functions

6. Breeze boolean functions

7. Breeze linear algebra functions

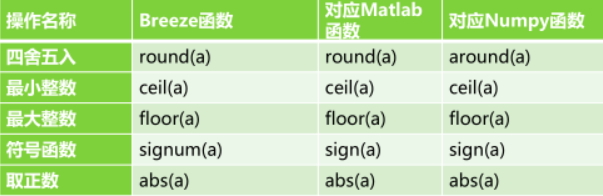
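To make the linear algebra functions concrete, here is a short sketch that computes a determinant, an inverse, and the solution of a linear system. It assumes Breeze is on the classpath; the object name and the sample matrix are chosen only for this example.

```scala
import breeze.linalg.{DenseMatrix, DenseVector, det, inv}

// Hypothetical example object; the 2 x 2 matrix is arbitrary sample data.
object BreezeLinalgSketch {
  val a = DenseMatrix((4.0, 7.0), (2.0, 6.0))
  val b = DenseVector(1.0, 2.0)

  // Transpose
  val aT = a.t
  // Determinant: 4*6 - 7*2
  val determinant = det(a)
  // Matrix inverse
  val inverse = inv(a)
  // Matrix-matrix product; close to the identity up to rounding
  val product = a * inverse
  // Solve the linear system a * x = b
  val x = a \ b
  // Dot product of a vector with itself
  val dot = b dot b

  def main(args: Array[String]): Unit = {
    println(s"det = $determinant, dot = $dot")
    println(inverse)
    println(x)
  }
}
```

Results of `det`, `inv`, and `\` are floating-point values, so comparisons are best made with a small tolerance rather than exact equality.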

8. Breeze rounding functions

9. Other Breeze functions

Breeze trigonometric functions:

sin, sinh, asin, asinh, cos, cosh, acos, acosh, tan, tanh, atan, atanh, atan2, sinc(x) (that is, sin(x)/x), sincpi(x) (that is, sinc(x*pi))
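These functions live in `breeze.numerics` and can be applied both to scalars and, element-wise, to vectors. The sketch below shows a few of them under the assumption that Breeze is on the classpath; the object and value names are illustrative only.

```scala
import breeze.linalg.DenseVector
import breeze.numerics.{cos, sin, sinc}

// Hypothetical example object for a few trigonometric functions.
object BreezeTrigSketch {
  val angles = DenseVector(0.0, math.Pi / 2, math.Pi)

  // Applied to a vector, the functions work element by element
  val sines = sin(angles)
  val cosines = cos(angles)
  // Applied to a scalar, they return a scalar
  val sinOfOne = sin(1.0)
  // sinc(x) = sin(x)/x, with the value 1.0 at x = 0
  val sincAtZero = sinc(0.0)
  val sincAtOne = sinc(1.0)

  def main(args: Array[String]): Unit = {
    println(sines)
    println(cosines)
    println(s"sinc(0) = $sincAtZero, sinc(1) = $sincAtOne")
  }
}
```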

Breeze logarithmic and exponential functions:

log, exp, log10, log1p, expm1, sqrt, cbrt, pow

II. Classification Evaluation Metrics

Example code:

    import org.apache.spark.ml.evaluation.{BinaryClassificationEvaluator, MulticlassClassificationEvaluator}

    // Assumption: `predictions` is the DataFrame produced by a fitted model's transform(),
    // containing the "indexedLabel" and "prediction" columns.

    // Accuracy
    val evaluator1 = new MulticlassClassificationEvaluator()
      .setLabelCol("indexedLabel")
      .setPredictionCol("prediction")
      .setMetricName("accuracy")
    val accuracy = evaluator1.evaluate(predictions)
    println(accuracy)

    // F1 score
    val evaluator2 = new MulticlassClassificationEvaluator()
      .setLabelCol("indexedLabel")
      .setPredictionCol("prediction")
      .setMetricName("f1")
    val f1 = evaluator2.evaluate(predictions)
    println(f1)

    // Weighted precision
    val evaluator3 = new MulticlassClassificationEvaluator()
      .setLabelCol("indexedLabel")
      .setPredictionCol("prediction")
      .setMetricName("weightedPrecision")
    val precision = evaluator3.evaluate(predictions)
    println(precision)

    // Weighted recall
    val evaluator4 = new MulticlassClassificationEvaluator()
      .setLabelCol("indexedLabel")
      .setPredictionCol("prediction")
      .setMetricName("weightedRecall")
    val recall = evaluator4.evaluate(predictions)
    println(recall)

    // Area under the ROC curve (AUC).
    // Note: passing the hard 0/1 "prediction" column works, but the classifier's
    // "rawPrediction" column usually gives a more informative curve.
    val evaluator5 = new BinaryClassificationEvaluator()
      .setLabelCol("indexedLabel")
      .setRawPredictionCol("prediction")
      .setMetricName("areaUnderROC")
    val auc = evaluator5.evaluate(predictions)
    println(auc)

    // Area under the precision-recall curve (AUPR)
    val evaluator6 = new BinaryClassificationEvaluator()
      .setLabelCol("indexedLabel")
      .setRawPredictionCol("prediction")
      .setMetricName("areaUnderPR")
    val aupr = evaluator6.evaluate(predictions)
    println(aupr)

III. Cross-Validation

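Cross-validation first partitions the dataset D into k mutually exclusive subsets of similar size, that is, D = D1 ∪ D2 ∪ ... ∪ Dk, where no two subsets overlap. Each round uses the union of k-1 subsets as the training set and the remaining subset as the test set, which yields k train/test splits. Training and testing are therefore run k times, and the final result is the mean of the k results. The whole procedure can also be repeated several times with different random partitions, for example 10 runs of 10-fold cross-validation. Cross-validation is commonly called "k-fold cross-validation"; the most common value of k is 10, which gives 10-fold cross-validation.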
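The splitting procedure described above can be sketched in plain Scala without Spark. This is only an illustration of the idea, not Spark's own implementation; the names `KFoldSketch`, `kFold`, and `crossValidate`, as well as the fixed seed, are assumptions made for this example.

```scala
import scala.util.Random

// Hypothetical helper illustrating k-fold splitting.
object KFoldSketch {
  /** Splits `data` into k disjoint folds of near-equal size and returns
    * k (train, test) pairs, where each fold serves as the test set once. */
  def kFold[T](data: Seq[T], k: Int, seed: Long = 42L): Seq[(Seq[T], Seq[T])] = {
    require(k >= 2 && k <= data.size, "k must be between 2 and the number of samples")
    val shuffled = new Random(seed).shuffle(data)
    // Element i goes to fold i % k, so fold sizes differ by at most one
    val folds: Seq[Seq[T]] = shuffled.zipWithIndex
      .groupBy { case (_, i) => i % k }
      .toSeq
      .sortBy(_._1)
      .map { case (_, items) => items.map(_._1) }
    folds.indices.map { i =>
      val test = folds(i)
      // The training set is the union of the other k-1 folds
      val train = folds.indices.filter(_ != i).flatMap(j => folds(j))
      (train, test)
    }
  }

  /** Runs `score` on every (train, test) pair and returns the mean of the k scores. */
  def crossValidate[T](data: Seq[T], k: Int)(score: (Seq[T], Seq[T]) => Double): Double = {
    val scores = kFold(data, k).map { case (train, test) => score(train, test) }
    scores.sum / scores.size
  }

  def main(args: Array[String]): Unit = {
    val data = (1 to 10).toSeq
    kFold(data, 5).foreach { case (train, test) =>
      println(s"train = $train, test = $test")
    }
  }
}
```

With 10 samples and k = 5, every test fold holds 2 samples and every training set holds the other 8. In a real run, `score` would train a model on `train` and evaluate it on `test`.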

Example: cross-validation

    package sparkml

    import org.apache.log4j.{Level, Logger}
    import org.apache.spark.ml.Pipeline
    import org.apache.spark.ml.classification.LogisticRegression
    import org.apache.spark.ml.evaluation.BinaryClassificationEvaluator
    import org.apache.spark.ml.feature.{HashingTF, Tokenizer}
    import org.apache.spark.ml.tuning.{CrossValidator, ParamGridBuilder}
    import org.apache.spark.sql.SparkSession
    import org.apache.spark.sql.Row
    import org.apache.spark.ml.linalg.Vector

    object JiaoChaYanZheng {
      def main(args: Array[String]): Unit = {
        // Set the log output level
        Logger.getLogger("org").setLevel(Level.WARN)

        // Create the SparkSession
        val spark = SparkSession.builder()
          .appName("jcyz")
          .master("local[*]")
          .getOrCreate()
        import spark.implicits._

        // Training samples in the form (id, text, label)
        val training = spark.createDataFrame(Seq(
          (0L, "a b c d e spark", 1.0),
          (1L, "b d", 0.0),
          (2L, "spark f g h", 0.0),
          (3L, "hadoop mapreduce", 0.0),
          (4L, "b spark who", 1.0),
          (5L, "g d a y", 0.0),
          (6L, "spark fly", 1.0),
          (7L, "was mapreduce", 0.0),
          (8L, "e spark program", 1.0),
          (9L, "a e c l", 0.0),
          (10L, "spark compile", 1.0),
          (11L, "hadoop software", 0.0)
        )).toDF("id", "text", "label")

        // Build the ML pipeline with three stages: tokenizer, hashingTF, lr
        val tokenizer = new Tokenizer()
          .setInputCol("text")
          .setOutputCol("words")
        val hashingTF = new HashingTF()
          .setInputCol(tokenizer.getOutputCol)
          .setOutputCol("features")
        val lr = new LogisticRegression()
          .setMaxIter(10)
        val pipeline = new Pipeline()
          .setStages(Array(tokenizer, hashingTF, lr))

        // Use ParamGridBuilder to build the grid for the parameter search.
        // The grid has 3 values for hashingTF.numFeatures and 2 values for lr.regParam,
        // so 3 * 2 = 6 combinations in total; cross-validation selects the best one.
        val paramGrid = new ParamGridBuilder()
          .addGrid(hashingTF.numFeatures, Array(10, 100, 1000))
          .addGrid(lr.regParam, Array(0.1, 0.01))
          .build()

        // Create the cross-validator and set its estimator, evaluator, grid and fold count
        val cv = new CrossValidator()
          .setEstimator(pipeline)
          .setEvaluator(new BinaryClassificationEvaluator())
          .setEstimatorParamMaps(paramGrid)
          .setNumFolds(2)

        // Run cross-validation to obtain the model fitted with the best parameter set
        val cvModel = cv.fit(training)

        // Test data
        val test = spark.createDataFrame(Seq(
          (4L, "spark i j k"),
          (5L, "l m n"),
          (6L, "mapreduce spark"),
          (7L, "apache hadoop")
        )).toDF("id", "text")

        // Predict; cvModel uses the best lrModel it found
        val result = cvModel.transform(test)
        result.select("id", "text", "probability", "prediction")
          .collect()
          .foreach {
            case Row(id: Long, text: String, prob: Vector, prediction: Double) =>
              println(s"($id, $text) --> prob = $prob, prediction = $prediction")
          }

        // Release Spark resources
        spark.stop()
      }
    }

The output is as follows:

(4, spark i j k) --> prob = [0.38806038783805663,0.6119396121619434], prediction = 1.0

(5, l m n) --> prob = [0.9395446853416303,0.06045531465836969], prediction = 0.0

(6, mapreduce spark) --> prob = [0.557958097564678,0.4420419024353221], prediction = 0.0

(7, apache hadoop) --> prob = [0.885348428830688,0.11465157116931203], prediction = 0.0