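I have been learning the Scrapy framework over the past few days and feel I have made some progress, so I tried using it to crawl some real data as a small summary of this stage of my learning.

The target this time is the free-works section of Qidian (起点中文网), shown below: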

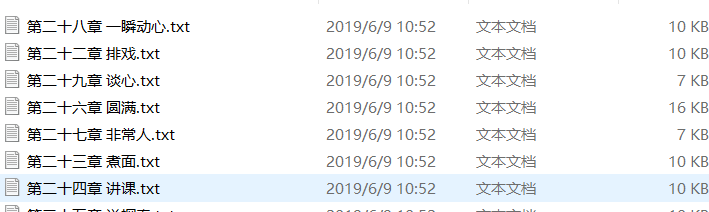

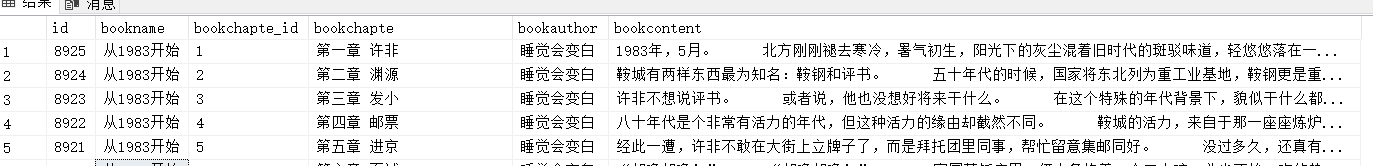

I crawled 100 novels in total and stored the results in two ways:

1. Write each novel to txt files, one file per chapter.

2. Store the novel content in SQL Server.

The crawl logic is as follows:

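1. From the book list page, get the URL of each book.

2. From each book's URL, get its chapters and the URL of each chapter.

3. From each chapter's URL, get the text of that chapter.

4. Store the extracted text, both as txt files and in SQL Server.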
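The four steps above can be sketched without any network access. This is only an illustration of the data flow: `FAKE_SITE` and `crawl` are hypothetical names, and the in-memory dictionary stands in for the pages that the real spider downloads and parses.

```python
# Hypothetical in-memory "site": URL -> already-parsed page data.
# A real crawl would download HTML and extract these values with XPath.
FAKE_SITE = {
    "/free/all": {"books": [("Book A", "/book/1"), ("Book B", "/book/2")]},
    "/book/1": {"chapters": [("Chapter 1", "/chapter/1-1"), ("Chapter 2", "/chapter/1-2")]},
    "/book/2": {"chapters": [("Chapter 1", "/chapter/2-1")]},
    "/chapter/1-1": {"text": "text of A1"},
    "/chapter/1-2": {"text": "text of A2"},
    "/chapter/2-1": {"text": "text of B1"},
}


def crawl(list_url):
    """Yield one record per chapter: list page -> book page -> chapter page."""
    for book_name, book_url in FAKE_SITE[list_url]["books"]:        # step 1
        chapters = FAKE_SITE[book_url]["chapters"]                  # step 2
        for chapter_id, (title, chapter_url) in enumerate(chapters, start=1):
            text = FAKE_SITE[chapter_url]["text"]                   # step 3
            # Step 4: hand the record over for storage (txt file, database).
            yield {
                "book_name": book_name,
                "chapter_id": chapter_id,
                "chapter": title,
                "text": text,
            }


records = list(crawl("/free/all"))
for record in records:
    print(record["book_name"], record["chapter_id"], record["chapter"])
```

Each level passes the values it already knows (book name, chapter number) down to the next one, which is the role `meta` plays in the Scrapy requests.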

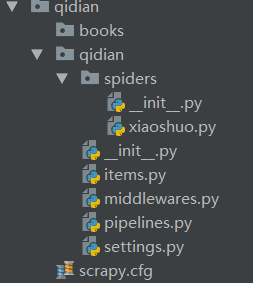

Project code:

Create a Scrapy project named qidian and a spider named xiaoshuo.py.

settings.py:

    BOT_NAME = 'qidian'

    SPIDER_MODULES = ['qidian.spiders']
    NEWSPIDER_MODULE = 'qidian.spiders'

    LOG_LEVEL = "WARNING"

    # Crawl responsibly by identifying yourself (and your website) on the user-agent
    USER_AGENT = 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/75.0.3770.80 Safari/537.36'

    # Obey robots.txt rules
    ROBOTSTXT_OBEY = True

    ITEM_PIPELINES = {
        'qidian.pipelines.QidianPipeline': 300,
    }
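Because this crawl issues one request per chapter for 100 books, it may be worth slowing it down a little. The following is a hedged sketch of extra entries for settings.py. The setting names are standard Scrapy settings, but the values are assumptions for illustration and were not part of the original project.

```python
# Hypothetical additions to settings.py; the values are illustrative, not tuned.
DOWNLOAD_DELAY = 1                  # wait roughly one second between requests to the same site
CONCURRENT_REQUESTS_PER_DOMAIN = 4  # cap the number of parallel requests per domain
AUTOTHROTTLE_ENABLED = True         # let Scrapy adapt the delay to the server's latency
```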

items.py:

    import scrapy


    class QidianItem(scrapy.Item):
        # define the fields for your item here like:
        book_name = scrapy.Field()         # title
        book_author = scrapy.Field()       # author
        book_type = scrapy.Field()         # genre
        book_word_number = scrapy.Field()  # word count
        book_status = scrapy.Field()       # status: serialising or finished
        book_img = scrapy.Field()          # cover image
        book_url = scrapy.Field()          # book URL, used to fetch the book's chapters


    class Zhangjie(scrapy.Item):
        book_name = scrapy.Field()          # title
        book_zhangjie = scrapy.Field()      # chapter title
        book_zhangjie_url = scrapy.Field()  # chapter URL, used to fetch the chapter text
        book_author = scrapy.Field()        # author
        zhangjie_id = scrapy.Field()        # chapter ID


    class BookItem(scrapy.Item):
        book_name = scrapy.Field()
        book_mulu = scrapy.Field()
        book_text = scrapy.Field()
        book_author = scrapy.Field()  # author
        zhangjie_id = scrapy.Field()  # chapter ID

xiaoshuo.py

    # -*- coding: utf-8 -*-
    import os

    import scrapy

    from qidian.items import QidianItem, Zhangjie, BookItem


    class XiaoshuoSpider(scrapy.Spider):
        name = 'xiaoshuo'
        allowed_domains = ["qidian.com"]
        # Page where the crawl starts
        start_urls = ['https://www.qidian.com/free/all?orderId=&page=1&vip=hidden&style=1&pageSize=50&siteid=1&pubflag=0&hiddenField=1']

        def parse(self, response):
            # Locate the book entries with XPath
            li_list = response.xpath("//div[@class='book-img-text']//ul/li")
            for li in li_list:  # iterate over every book on the current page
                item = QidianItem()
                # Title
                item["book_name"] = li.xpath("./div[@class='book-mid-info']/h4/a/text()").extract_first()
                # Author
                item["book_author"] = li.xpath("./div[@class='book-mid-info']/p[@class='author']/a[@class='name']/text()").extract_first()
                # Genre: fantasy, cultivation, urban and so on
                item["book_type"] = li.xpath("./div[@class='book-mid-info']/p[@class='author']/a[@data-eid='qd_B60']/text()").extract_first()
                # Status: serialising or finished
                item["book_status"] = li.xpath("./div[@class='book-mid-info']/p[@class='author']/span/text()").extract_first()
                # Cover image
                item["book_img"] = "http:" + li.xpath("./div[@class='book-img-box']/a/img/@src").extract_first()
                # Book URL
                item["book_url"] = "http:" + li.xpath("./div[@class='book-img-box']/a/@href").extract_first()
                yield scrapy.Request(
                    item["book_url"],
                    callback=self.parseBookUrl,  # use the book URL to fetch its table of contents
                    # Pass data to parseBookUrl as a dict; only the title and author are passed here
                    meta={"book_name": item["book_name"].strip(),
                          "book_author": item["book_author"].strip()}
                )

            # Next pages
            page_num = len(response.xpath("//div[@class='pagination fr']//ul/li"))
            for i in range(page_num - 2):  # there are only 5 pages, so this simple approach is enough
                next_url = 'http://www.qidian.com/free/all?orderId=&vip=hidden&style=1&pageSize=20&siteid=1&pubflag=0&hiddenField=1&page={}'.format(i+1)
                yield scrapy.Request(
                    next_url,
                    callback=self.parse
                )

        def parseBookUrl(self, response):
            # Receive the data passed in through meta
            bookname = response.meta["book_name"]
            bookauthor = response.meta["book_author"]
            # Instantiate the item and fill it in
            zhangjie = Zhangjie()
            zhangjie["book_name"] = bookname
            zhangjie["book_author"] = bookauthor
            book_div = response.xpath("//div[@class='volume-wrap']//div[@class='volume']")
            i = 0
            for div in book_div:  # iterate over the volumes of the novel
                li_list = div.xpath("./ul/li")
                for li in li_list:  # iterate over the chapters of the volume
                    i = i + 1
                    zhangjie["zhangjie_id"] = i
                    zhangjie["book_zhangjie"] = li.xpath("./a/text()").extract_first()
                    zhangjie["book_zhangjie_url"] = "http:" + li.xpath("./a/@href").extract_first()
                    # Use the chapter URL to fetch the chapter text
                    yield scrapy.Request(
                        zhangjie["book_zhangjie_url"],
                        callback=self.parseZhangjieUrl,
                        # Pass data to parseZhangjieUrl through meta
                        meta={"book_name": zhangjie["book_name"].strip(),
                              "zhangjie": zhangjie["book_zhangjie"].strip(),
                              "book_author": bookauthor,
                              "zhangjie_id": zhangjie["zhangjie_id"]
                              }
                    )

        def parseZhangjieUrl(self, response):
            # Receive the data passed in through meta
            bookname = response.meta["book_name"]
            zhangjie = response.meta["zhangjie"]
            book_author = response.meta["book_author"]
            zhangjie_id = response.meta["zhangjie_id"]
            bookitem = BookItem()
            bookitem["book_name"] = bookname
            bookitem["book_mulu"] = zhangjie
            bookitem["book_author"] = book_author
            bookitem["zhangjie_id"] = zhangjie_id
            div_list = response.xpath("//div[@class='main-text-wrap']")
            p_list = div_list.xpath("./div[@class='read-content j_readContent']/p")
            file_path = os.path.join("./books", bookname, bookitem["book_mulu"] + ".txt")
            content = ""
            # Collect the text of every <p> tag and join it into the chapter text;
            # default="" avoids a TypeError when a <p> has no direct text node
            for p in p_list:
                content += p.xpath("./text()").extract_first(default="") + "\n\n"
            bookitem["book_text"] = content
            # Create the book's directory if it does not exist yet
            os.makedirs(os.path.join("./books", bookname), exist_ok=True)
            # Write the chapter to a txt file
            with open(file_path, 'a', encoding='utf-8') as f:
                f.write(" " + bookitem["book_mulu"] + "\n\n\n\n" + content)
            yield bookitem  # hand the item over to pipelines.py
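The spider uses the book and chapter titles directly as directory and file names, which could fail when a title contains characters such as `/` or `?`. Below is a minimal sketch of one way to guard against that. `safe_filename` and `chapter_path` are hypothetical helpers, not part of the original project, and the set of replaced characters is an assumption based on what Windows rejects in file names.

```python
import re
from pathlib import Path

# Characters Windows rejects in file names; "/" is also unsafe on Linux and macOS.
_UNSAFE = re.compile(r'[\\/:*?"<>|]')


def safe_filename(title, fallback="untitled"):
    """Replace unsafe characters with "_" and trim surrounding spaces and dots."""
    cleaned = _UNSAFE.sub("_", title).strip(" .")
    return cleaned or fallback


def chapter_path(root, book_name, chapter_title):
    """Build root/<book>/<chapter>.txt from sanitised titles (hypothetical helper)."""
    return Path(root) / safe_filename(book_name) / (safe_filename(chapter_title) + ".txt")


path = chapter_path("books", "Book A", "Chapter 1: Who/What?")
print(path.name)  # Chapter 1_ Who_What_.txt
```

In the spider, the result of such a helper could replace the hand-built file path before the file is opened.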

pipelines.py

    import pymssql


    class QidianPipeline(object):
        def process_item(self, item, spider):
            print(item["book_name"])
            print(item["book_mulu"])
            # self.sqlhelper(item["book_name"].strip(), item["book_mulu"].strip(), item["book_text"].strip(),item["book_author"].strip())
            self.lists(
                item["book_name"].strip(),
                item["zhangjie_id"],
                item["book_mulu"].strip(),
                item["book_author"].strip(),
                item["book_text"].strip()
            )
            return item

        # Runs when the spider starts
        def open_spider(self, spider):
            self.sql = "INSERT INTO novels VALUES (%s,%d,%s,%s,%s);COMMIT"  # SQL statement
            self.values = []  # rows waiting to be inserted

        # Runs when the spider finishes
        def close_spider(self, spider):
            # Raw string so the backslash in the instance name is not treated as an escape
            self.conn = pymssql.connect(host='szs', server=r'SZS\SQLEXPRESS', port='51091', user='python', password='python', database='python', charset='utf8', autocommit=True)
            self.cur = self.conn.cursor()
            try:
                self.cur.executemany(self.sql, self.values)  # run the inserts
                print("SQL insert succeeded")
            except Exception:
                self.conn.rollback()
                print("SQL insert failed")
            self.cur.close()
            self.conn.close()

        # Collect the rows to insert into the database in a list
        def lists(self, bookname, bookchapte_id, bookchapte, bookauthor, bookcontent):
            # self.sql += "INSERT INTO [novals] VALUES (N'" + bookname + "',N'" + bookchapte + "',N'" + bookcontent + "',N'" + bookauthor + "');COMMIT "
            self.values.append((bookname, bookchapte_id, bookchapte, bookauthor, bookcontent))
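The pipeline buffers every chapter in a list and inserts them all with a single `executemany` call when the spider closes. The same pattern can be tried without a SQL Server instance. The sketch below uses the standard-library `sqlite3` module as a stand-in, which is an assumption made only so the example runs anywhere. `BatchWriter` is a hypothetical helper, the column names are invented for the example, and sqlite3 uses `?` placeholders where pymssql uses `%s` and `%d`.

```python
import sqlite3


class BatchWriter:
    """Hypothetical helper: buffer rows, then insert them in one transaction."""

    SQL = "INSERT INTO novels VALUES (?, ?, ?, ?, ?)"

    def __init__(self, conn):
        self.conn = conn
        self.rows = []

    def add(self, book_name, chapter_id, chapter, author, text):
        self.rows.append((book_name, chapter_id, chapter, author, text))

    def flush(self):
        """Insert all buffered rows; return True on success, False after a rollback."""
        try:
            with self.conn:  # commits on success, rolls back if an exception is raised
                self.conn.executemany(self.SQL, self.rows)
        except sqlite3.Error:
            return False
        self.rows.clear()
        return True


# In-memory database with an assumed five-column layout matching the INSERT above.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE novels (book_name TEXT, chapter_id INTEGER, chapter TEXT, author TEXT, content TEXT)"
)

writer = BatchWriter(conn)
writer.add("Book A", 1, "Chapter 1", "Author A", "text 1")
writer.add("Book A", 2, "Chapter 2", "Author A", "text 2")
ok = writer.flush()
count = conn.execute("SELECT COUNT(*) FROM novels").fetchone()[0]
print(ok, count)
```

Passing the values as parameters, rather than concatenating them into the SQL string, keeps quotes inside the novel text from breaking the statement.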

This crawl did not make use of the middlewares in middlewares.py; learning those is my next goal.