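These are my study notes on Chapter 3 of the book 《21个项目玩转深度学习:基于TensorFlow的实践详解》.

1. Goal

Train a deep learning model on our own image data with TensorFlow. The main approach is to fine-tune a model that has already been trained on ImageNet.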

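2. How fine-tuning works

Choosing the number of layers, the filter sizes, the pooling settings and the other hyperparameters of a neural network takes a great deal of tuning experiments, which is far from easy. Most models used in practice therefore borrow an architecture that others have already trained, such as the well-known AlexNet, VGG16, VGG19, GoogLeNet, Inception-v3 and ResNet, and only change the number of outputs of the final fully connected layer before training. Training can be full or partial. Full training runs the whole training process from scratch on the new dataset; partial training fine-tunes a model that someone else has already trained. Fine-tuning starts from the pretrained model and reuses its many already-trained convolutional filters on our own dataset, which can save training time and improve classifier performance.

The VGG16 network is used below to illustrate how fine-tuning works: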

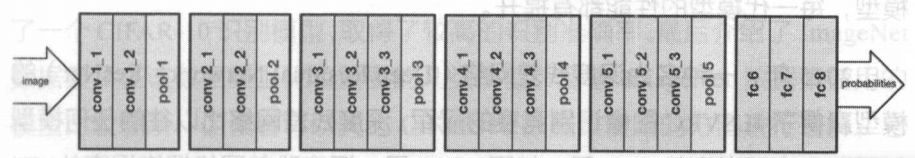

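The figure above shows the configurations of the different VGG networks. VGG16 on its own has the structure shown in the figure below:

VGG16 consists of convolutional layers followed by fully connected layers. As the figure shows, the convolutional layers fall into 5 groups with 13 layers in total (conv1_1, conv1_2, conv2_1, conv2_2, conv3_1, conv3_2, conv3_3, conv4_1, conv4_2, conv4_3, conv5_1, conv5_2 and conv5_3 in the figure), and the fully connected layers are fc6, fc7 and fc8. fc8 takes the fc7 features as input and outputs probabilities for 1000 classes, which are the 1000 ImageNet classes. Our own dataset usually does not have 1000 classes, so the last fully connected layer fc8 has to be removed and replaced with a new fc8 that matches our dataset. For example, if the dataset has 5 classes, the new fc8 must have 5 outputs.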
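To make the fc8 replacement concrete, the sketch below counts the parameters of the original 1000-way fc8 and of a 5-way replacement. It assumes fc7 has 4096 output features, as in the standard VGG16 definition; that width is not stated in these notes, and the helper name `fc_param_count` is made up for illustration.

```python
def fc_param_count(num_inputs, num_outputs):
    """Parameters of a fully connected layer: one weight per
    (input, output) pair plus one bias per output."""
    return num_inputs * num_outputs + num_outputs


FC7_WIDTH = 4096  # assumption: fc7 width in the standard VGG16 definition

imagenet_fc8 = fc_param_count(FC7_WIDTH, 1000)  # original ImageNet classifier
custom_fc8 = fc_param_count(FC7_WIDTH, 5)       # new fc8 for a 5-class dataset

print(imagenet_fc8)  # 4097000
print(custom_fc8)    # 20485
```

Only this small new layer has to be learned from scratch; every layer before it can start from the pretrained weights.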

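3. Data preparation

1) Split the data into a training set and a validation set

This experiment uses the satellite image dataset provided with the book. The original dataset is stored under the path shown below, where train holds the training set and validation holds the validation set. There are six classes of images: wood, water, rock, wetland, glacier and urban.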
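A quick way to check such a layout is to count the files in each class sub-directory. This is a minimal sketch, assuming one sub-directory per class directly under the split directory; `count_images_per_class` is a hypothetical helper, not part of the book's code.

```python
import os


def count_images_per_class(split_dir):
    """Return {class_name: number_of_files} for one split directory,
    where every sub-directory of split_dir is treated as a class."""
    counts = {}
    for entry in sorted(os.listdir(split_dir)):
        class_dir = os.path.join(split_dir, entry)
        if os.path.isdir(class_dir):
            counts[entry] = len([
                name for name in os.listdir(class_dir)
                if os.path.isfile(os.path.join(class_dir, name))
            ])
    return counts
```

For example, `count_images_per_class('pic/train')` would be expected to return six entries, one per class, if the data sits under the `pic/` folder used later in these notes.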

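What do the images look like? Here is one randomly chosen image from each of the 6 classes used in this example:

2) Convert the data to TFRecord format

For large datasets, the data is usually converted to TFRecord files before TensorFlow reads it. A TFRecord file stores data in binary form and is well suited to reading large amounts of data sequentially. Its advantages are better use of memory and easier copying and moving, which fits the way the TensorFlow execution engine processes data. Converting data to TFRecord usually means writing a small program that packs each sample into an Example object defined with protocol buffers, serializes it to a string, and writes it to a file with tf.python_io.TFRecordWriter.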
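To show what "stored in binary and read sequentially" means, here is a pure-Python sketch of how a single record is framed on disk, as far as I understand the documented TFRecord layout: a little-endian 64-bit length, a masked CRC32C of the length, the payload, and a masked CRC32C of the payload. The function names are invented for illustration; in the real pipeline the payload would be the serialized Example and tf.python_io.TFRecordWriter does the framing.

```python
import struct


def _make_crc32c_table():
    """Lookup table for CRC32C (Castagnoli), reflected polynomial 0x82F63B78."""
    table = []
    for i in range(256):
        crc = i
        for _ in range(8):
            crc = (crc >> 1) ^ 0x82F63B78 if crc & 1 else crc >> 1
        table.append(crc)
    return table


_CRC32C_TABLE = _make_crc32c_table()


def crc32c(data):
    """Plain CRC32C checksum of a bytes object."""
    crc = 0xFFFFFFFF
    for byte in bytearray(data):
        crc = _CRC32C_TABLE[(crc ^ byte) & 0xFF] ^ (crc >> 8)
    return crc ^ 0xFFFFFFFF


def masked_crc32c(data):
    """CRC32C rotated right by 15 bits plus a constant, kept to 32 bits."""
    crc = crc32c(data)
    rotated = ((crc >> 15) | (crc << 17)) & 0xFFFFFFFF
    return (rotated + 0xA282EAD8) & 0xFFFFFFFF


def frame_record(payload):
    """Wrap one serialized sample: length, length CRC, payload, payload CRC."""
    header = struct.pack('<Q', len(payload))
    return (header
            + struct.pack('<I', masked_crc32c(header))
            + payload
            + struct.pack('<I', masked_crc32c(payload)))
```

Because every record carries its own length, a reader can walk through the file record by record without loading it all into memory.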

The data_prepare folder contains data_convert.py, which converts the images to TFRecord format. The code of data_convert.py is as follows:

    # coding:utf-8
    from __future__ import absolute_import
    import argparse
    import os
    import logging
    from src.tfrecord import main

    # Build the argument parser and set the default values
    def parse_args():
        parser = argparse.ArgumentParser()
        parser.add_argument('-t', '--tensorflow-data-dir', default='pic/')
        parser.add_argument('--train-shards', default=2, type=int)
        parser.add_argument('--validation-shards', default=2, type=int)
        parser.add_argument('--num-threads', default=2, type=int)
        parser.add_argument('--dataset-name', default='satellite', type=str)
        print(type(parser.parse_args()))
        return parser.parse_args()

    if __name__ == '__main__':
        # Set the logging level
        logging.basicConfig(level=logging.INFO)
        # Set up the arguments
        args = parse_args()
        args.tensorflow_dir = args.tensorflow_data_dir
        args.train_directory = os.path.join(args.tensorflow_dir, 'train')
        args.validation_directory = os.path.join(args.tensorflow_dir, 'validation')
        args.output_directory = args.tensorflow_dir
        args.labels_file = os.path.join(args.tensorflow_dir, 'label.txt')
        if os.path.exists(args.labels_file) is False:
            logging.warning('Can\'t find label.txt. Now create it.')
            all_entries = os.listdir(args.train_directory)
            dirnames = []
            for entry in all_entries:
                if os.path.isdir(os.path.join(args.train_directory, entry)):
                    dirnames.append(entry)
            with open(args.labels_file, 'w') as f:
                for dirname in dirnames:
                    f.write(dirname + '\n')
        # Call main from tfrecord to run the conversion
        main(args)

To convert the images, run the following command:

    python data_convert.py -t pic/ \
      --train-shards 2 \
      --validation-shards 2 \
      --num-threads 2 \
      --dataset-name satellite

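After the command finishes, 5 newly generated files can be found in the pic folder: the training data satellite_train_00000-of-00002.tfrecord and satellite_train_00001-of-00002.tfrecord, the validation data satellite_validation_00000-of-00002.tfrecord and satellite_validation_00001-of-00002.tfrecord, and a text file label.txt that records the mapping from the internal image labels (integers) to the real class names (strings). An image whose label in the TFRecord is 0 belongs to the class on the first line of label.txt, a label of 1 corresponds to the second line, and so on.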
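The line-number-to-label rule can be written down in a few lines. This is a minimal sketch assuming label.txt holds one class name per line and labels start at 0; `load_label_map` is a hypothetical helper name.

```python
def load_label_map(labels_path):
    """Return {integer_label: class_name}; line 1 maps to label 0,
    line 2 to label 1, and so on. Blank lines are skipped."""
    with open(labels_path) as f:
        names = [line.strip() for line in f if line.strip()]
    return dict(enumerate(names))
```

A prediction of class index 1 would then be reported as `load_label_map('pic/label.txt')[1]`, whatever class name sits on the second line of the file.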

The main function that data_convert.py ends up calling lives in tfrecord.py. Following main shows what the TFRecord conversion actually does. The code of that file is as follows:

    # coding:utf-8
    # Copyright 2016 Google Inc. All Rights Reserved.
    #
    # Licensed under the Apache License, Version 2.0 (the "License");
    # you may not use this file except in compliance with the License.
    # You may obtain a copy of the License at
    #
    #     http://www.apache.org/licenses/LICENSE-2.0
    #
    # Unless required by applicable law or agreed to in writing, software
    # distributed under the License is distributed on an "AS IS" BASIS,
    # WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
    # See the License for the specific language governing permissions and
    # limitations under the License.
    # ==============================================================================
    """Converts image data to TFRecords file format with Example protos.

    The image data set is expected to reside in JPEG files located in the
    following directory structure.

      data_dir/label_0/image0.jpeg
      data_dir/label_0/image1.jpg
      ...
      data_dir/label_1/weird-image.jpeg
      data_dir/label_1/my-image.jpeg
      ...

    where the sub-directory is the unique label associated with these images.

    This TensorFlow script converts the training and evaluation data into
    a sharded data set consisting of TFRecord files

      train_directory/train-00000-of-01024
      train_directory/train-00001-of-01024
      ...
      train_directory/train-00127-of-01024

    and

      validation_directory/validation-00000-of-00128
      validation_directory/validation-00001-of-00128
      ...
      validation_directory/validation-00127-of-00128

    where we have selected 1024 and 128 shards for each data set. Each record
    within the TFRecord file is a serialized Example proto. The Example proto
    contains the following fields:

      image/encoded: string containing JPEG encoded image in RGB colorspace
      image/height: integer, image height in pixels
      image/width: integer, image width in pixels
      image/colorspace: string, specifying the colorspace, always 'RGB'
      image/channels: integer, specifying the number of channels, always 3
      image/format: string, specifying the format, always'JPEG'

      image/filename: string containing the basename of the image file
                e.g. 'n01440764_10026.JPEG' or 'ILSVRC2012_val_00000293.JPEG'
      image/class/label: integer specifying the index in a classification layer. start from "class_label_base"
      image/class/text: string specifying the human-readable version of the label
        e.g. 'dog'

    If you data set involves bounding boxes, please look at build_imagenet_data.py.
    """

    from __future__ import absolute_import
    from __future__ import division
    from __future__ import print_function

    from datetime import datetime
    import os
    import random
    import sys
    import threading

    import numpy as np
    import tensorflow as tf
    import logging



    def _int64_feature(value):
        """Wrapper for inserting int64 features into Example proto."""
        if not isinstance(value, list):
            value = [value]
        return tf.train.Feature(int64_list=tf.train.Int64List(value=value))


    def _bytes_feature(value):
        """Wrapper for inserting bytes features into Example proto."""
        value = tf.compat.as_bytes(value)
        return tf.train.Feature(bytes_list=tf.train.BytesList(value=[value]))


    def _convert_to_example(filename, image_buffer, label, text, height, width):
        """Build an Example proto for an example.
        Args:
          filename: string, path to an image file, e.g., '/path/to/example.JPG'
          image_buffer: string, JPEG encoding of RGB image
          label: integer, identifier for the ground truth for the network
          text: string, unique human-readable, e.g. 'dog'
          height: integer, image height in pixels
          width: integer, image width in pixels
        Returns:
          Example proto
        """

        colorspace = 'RGB'
        channels = 3
        image_format = 'JPEG'

        example = tf.train.Example(features=tf.train.Features(feature={
            'image/height': _int64_feature(height),
            'image/width': _int64_feature(width),
            'image/colorspace': _bytes_feature(colorspace),
            'image/channels': _int64_feature(channels),
            'image/class/label': _int64_feature(label),
            'image/class/text': _bytes_feature(text),
            'image/format': _bytes_feature(image_format),
            'image/filename': _bytes_feature(os.path.basename(filename)),
            'image/encoded': _bytes_feature(image_buffer)}))
        return example



    class ImageCoder(object):
        """Helper class that provides TensorFlow image coding utilities."""

        def __init__(self):
            # Create a single Session to run all image coding calls.
            self._sess = tf.Session()

            # Initializes function that converts PNG to JPEG data.
            self._png_data = tf.placeholder(dtype=tf.string)
            image = tf.image.decode_png(self._png_data, channels=3)
            self._png_to_jpeg = tf.image.encode_jpeg(image, format='rgb', quality=100)

            # Initializes function that decodes RGB JPEG data.
            self._decode_jpeg_data = tf.placeholder(dtype=tf.string)
            self._decode_jpeg = tf.image.decode_jpeg(self._decode_jpeg_data, channels=3)

        def png_to_jpeg(self, image_data):
            return self._sess.run(self._png_to_jpeg,
                                  feed_dict={self._png_data: image_data})

        def decode_jpeg(self, image_data):
            image = self._sess.run(self._decode_jpeg,
                                   feed_dict={self._decode_jpeg_data: image_data})
            assert len(image.shape) == 3
            assert image.shape[2] == 3
            return image


    def _is_png(filename):
        """Determine if a file contains a PNG format image.
        Args:
          filename: string, path of the image file.
        Returns:
          boolean indicating if the image is a PNG.
        """
        return '.png' in filename


    def _process_image(filename, coder):
        """Process a single image file.
        Args:
          filename: string, path to an image file e.g., '/path/to/example.JPG'.
          coder: instance of ImageCoder to provide TensorFlow image coding utils.
        Returns:
          image_buffer: string, JPEG encoding of RGB image.
          height: integer, image height in pixels.
          width: integer, image width in pixels.
        """
        # Read the image file.
        with open(filename, 'rb') as f:
            image_data = f.read()

        # Convert any PNG to JPEG's for consistency.
        if _is_png(filename):
            logging.info('Converting PNG to JPEG for %s' % filename)
            image_data = coder.png_to_jpeg(image_data)

        # Decode the RGB JPEG.
        image = coder.decode_jpeg(image_data)

        # Check that image converted to RGB
        assert len(image.shape) == 3
        height = image.shape[0]
        width = image.shape[1]
        assert image.shape[2] == 3

        return image_data, height, width



    def _process_image_files_batch(coder, thread_index, ranges, name, filenames,
                                   texts, labels, num_shards, command_args):
        """Processes and saves list of images as TFRecord in 1 thread.
        Args:
          coder: instance of ImageCoder to provide TensorFlow image coding utils.
          thread_index: integer, unique batch to run index is within [0, len(ranges)).
          ranges: list of pairs of integers specifying ranges of each batches to
            analyze in parallel.
          name: string, unique identifier specifying the data set
          filenames: list of strings; each string is a path to an image file
          texts: list of strings; each string is human readable, e.g. 'dog'
          labels: list of integer; each integer identifies the ground truth
          num_shards: integer number of shards for this data set.
        """
        # Each thread produces N shards where N = int(num_shards / num_threads).
        # For instance, if num_shards = 128, and the num_threads = 2, then the first
        # thread would produce shards [0, 64).
        num_threads = len(ranges)
        assert not num_shards % num_threads
        num_shards_per_batch = int(num_shards / num_threads)

        shard_ranges = np.linspace(ranges[thread_index][0],
                                   ranges[thread_index][1],
                                   num_shards_per_batch + 1).astype(int)
        num_files_in_thread = ranges[thread_index][1] - ranges[thread_index][0]

        counter = 0
        for s in range(num_shards_per_batch):
            # Generate a sharded version of the file name, e.g. 'train-00002-of-00010'
            shard = thread_index * num_shards_per_batch + s
            output_filename = '%s_%s_%.5d-of-%.5d.tfrecord' % (command_args.dataset_name, name, shard, num_shards)
            output_file = os.path.join(command_args.output_directory, output_filename)
            writer = tf.python_io.TFRecordWriter(output_file)

            shard_counter = 0
            files_in_shard = np.arange(shard_ranges[s], shard_ranges[s + 1], dtype=int)
            for i in files_in_shard:
                filename = filenames[i]
                label = labels[i]
                text = texts[i]

                image_buffer, height, width = _process_image(filename, coder)

                example = _convert_to_example(filename, image_buffer, label,
                                              text, height, width)
                writer.write(example.SerializeToString())
                shard_counter += 1
                counter += 1

                if not counter % 1000:
                    logging.info('%s [thread %d]: Processed %d of %d images in thread batch.' %
                                 (datetime.now(), thread_index, counter, num_files_in_thread))
                    sys.stdout.flush()

            writer.close()
            logging.info('%s [thread %d]: Wrote %d images to %s' %
                         (datetime.now(), thread_index, shard_counter, output_file))
            sys.stdout.flush()
            shard_counter = 0
        logging.info('%s [thread %d]: Wrote %d images to %d shards.' %
                     (datetime.now(), thread_index, counter, num_files_in_thread))
        sys.stdout.flush()



    def _process_image_files(name, filenames, texts, labels, num_shards, command_args):
        """Process and save list of images as TFRecord of Example protos.
        Args:
          name: string, unique identifier specifying the data set
          filenames: list of strings; each string is a path to an image file
          texts: list of strings; each string is human readable, e.g. 'dog'
          labels: list of integer; each integer identifies the ground truth
          num_shards: integer number of shards for this data set.
        """
        assert len(filenames) == len(texts)
        assert len(filenames) == len(labels)

        # Break all images into batches with a [ranges[i][0], ranges[i][1]].
        # The built-in int is used because the np.int alias is gone from newer NumPy releases.
        spacing = np.linspace(0, len(filenames), command_args.num_threads + 1).astype(int)
        ranges = []
        for i in range(len(spacing) - 1):
            ranges.append([spacing[i], spacing[i + 1]])

        # Launch a thread for each batch.
        logging.info('Launching %d threads for spacings: %s' % (command_args.num_threads, ranges))
        sys.stdout.flush()

        # Create a mechanism for monitoring when all threads are finished.
        coord = tf.train.Coordinator()

        # Create a generic TensorFlow-based utility for converting all image codings.
        coder = ImageCoder()

        threads = []
        for thread_index in range(len(ranges)):
            args = (coder, thread_index, ranges, name, filenames,
                    texts, labels, num_shards, command_args)
            t = threading.Thread(target=_process_image_files_batch, args=args)
            t.start()
            threads.append(t)

        # Wait for all the threads to terminate.
        coord.join(threads)
        logging.info('%s: Finished writing all %d images in data set.' %
                     (datetime.now(), len(filenames)))
        sys.stdout.flush()



    def _find_image_files(data_dir, labels_file, command_args):
        """Build a list of all images files and labels in the data set.
        Args:
          data_dir: string, path to the root directory of images.
            Assumes that the image data set resides in JPEG files located in
            the following directory structure.
              data_dir/dog/another-image.JPEG
              data_dir/dog/my-image.jpg
            where 'dog' is the label associated with these images.
          labels_file: string, path to the labels file.
            The list of valid labels are held in this file. Assumes that the file
            contains entries as such:
              dog
              cat
              flower
            where each line corresponds to a label. We map each label contained in
            the file to an integer starting with the integer 0 corresponding to the
            label contained in the first line.
        Returns:
          filenames: list of strings; each string is a path to an image file.
          texts: list of strings; each string is the class, e.g. 'dog'
          labels: list of integer; each integer identifies the ground truth.
        """
        logging.info('Determining list of input files and labels from %s.' % data_dir)
        unique_labels = [l.strip() for l in tf.gfile.FastGFile(
            labels_file, 'r').readlines()]

        labels = []
        filenames = []
        texts = []

        # Leave label index 0 empty as a background class.
        # Very important: here the labels are adjusted to start from 0 to match the definition.
        label_index = command_args.class_label_base

        # Construct the list of JPEG files and labels.
        for text in unique_labels:
            jpeg_file_path = '%s/%s/*' % (data_dir, text)
            matching_files = tf.gfile.Glob(jpeg_file_path)

            labels.extend([label_index] * len(matching_files))
            texts.extend([text] * len(matching_files))
            filenames.extend(matching_files)

            if not label_index % 100:
                logging.info('Finished finding files in %d of %d classes.' % (
                    label_index, len(labels)))
            label_index += 1

        # Shuffle the ordering of all image files in order to guarantee
        # random ordering of the images with respect to label in the
        # saved TFRecord files. Make the randomization repeatable.
        shuffled_index = list(range(len(filenames)))
        random.seed(12345)
        random.shuffle(shuffled_index)

        filenames = [filenames[i] for i in shuffled_index]
        texts = [texts[i] for i in shuffled_index]
        labels = [labels[i] for i in shuffled_index]

        logging.info('Found %d JPEG files across %d labels inside %s.' %
                     (len(filenames), len(unique_labels), data_dir))
        # print(labels)
        return filenames, texts, labels



    def _process_dataset(name, directory, num_shards, labels_file, command_args):
        """Process a complete data set and save it as a TFRecord.
        Args:
          name: string, unique identifier specifying the data set.
          directory: string, root path to the data set.
          num_shards: integer number of shards for this data set.
          labels_file: string, path to the labels file.
        """
        filenames, texts, labels = _find_image_files(directory, labels_file, command_args)
        _process_image_files(name, filenames, texts, labels, num_shards, command_args)


    def check_and_set_default_args(command_args):
        if not(hasattr(command_args, 'train_shards')) or command_args.train_shards is None:
            command_args.train_shards = 5
        if not(hasattr(command_args, 'validation_shards')) or command_args.validation_shards is None:
            command_args.validation_shards = 5
        if not(hasattr(command_args, 'num_threads')) or command_args.num_threads is None:
            command_args.num_threads = 5
        if not(hasattr(command_args, 'class_label_base')) or command_args.class_label_base is None:
            command_args.class_label_base = 0
        if not(hasattr(command_args, 'dataset_name')) or command_args.dataset_name is None:
            command_args.dataset_name = ''
        assert not command_args.train_shards % command_args.num_threads, (
            'Please make the command_args.num_threads commensurate with command_args.train_shards')
        assert not command_args.validation_shards % command_args.num_threads, (
            'Please make the command_args.num_threads commensurate with '
            'command_args.validation_shards')
        assert command_args.train_directory is not None
        assert command_args.validation_directory is not None
        assert command_args.labels_file is not None
        assert command_args.output_directory is not None


    def main(command_args):
        """
        command_args must have the following attributes:
        command_args.train_directory  Folder holding the training set. Each sub-folder name is a label name, and the images sit inside it.
        command_args.validation_directory  Folder holding the validation set. Each sub-folder name is a label name, and the images sit inside it.
        command_args.labels_file  A file in which each line is one label name.
        command_args.output_directory  A folder where the output is written.

        command_args.train_shards  Number of shards to split the training set into.
        command_args.validation_shards  Number of shards to split the validation set into.
        command_args.num_threads  Number of threads. Must be a divisor of both shard counts above.

        command_args.class_label_base  Important! The number at which class labels start in the actual tfrecord; defaults to 0 (models/slim also starts from 0).
        command_args.dataset_name  String used as the prefix of the output file names.

        The images must not be corrupted; otherwise a thread will exit early.
        """
        check_and_set_default_args(command_args)
        logging.info('Saving results to %s' % command_args.output_directory)

        # Run it!
        _process_dataset('validation', command_args.validation_directory,
                         command_args.validation_shards, command_args.labels_file, command_args)
        _process_dataset('train', command_args.train_directory,
                         command_args.train_shards, command_args.labels_file, command_args)
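The way tfrecord.py divides files among threads and shards is easier to follow without the TensorFlow parts. The sketch below redoes only that arithmetic in plain Python: `int_linspace` stands in for `np.linspace(...).astype(int)` (it may differ from NumPy by rounding in edge cases), and `plan_shards` is a made-up name. The file count of 4800 is the training-set size used later in satellite.py.

```python
def int_linspace(start, stop, num_points):
    """Evenly spaced integer boundaries from start to stop, truncated
    like np.linspace(start, stop, num_points).astype(int)."""
    step = (stop - start) / float(num_points - 1)
    return [int(start + i * step) for i in range(num_points)]


def plan_shards(num_files, num_threads, num_shards, dataset_name, split):
    """Return a list of (output_filename, first_index, end_index) tuples,
    one per shard, mirroring the index ranges each thread would write."""
    assert num_shards % num_threads == 0
    shards_per_thread = num_shards // num_threads
    thread_bounds = int_linspace(0, num_files, num_threads + 1)
    plan = []
    for t in range(num_threads):
        shard_bounds = int_linspace(thread_bounds[t], thread_bounds[t + 1],
                                    shards_per_thread + 1)
        for s in range(shards_per_thread):
            shard = t * shards_per_thread + s
            filename = '%s_%s_%.5d-of-%.5d.tfrecord' % (
                dataset_name, split, shard, num_shards)
            plan.append((filename, shard_bounds[s], shard_bounds[s + 1]))
    return plan


for row in plan_shards(4800, 2, 2, 'satellite', 'train'):
    print(row)
# ('satellite_train_00000-of-00002.tfrecord', 0, 2400)
# ('satellite_train_00001-of-00002.tfrecord', 2400, 4800)
```

With 2 threads and 2 shards each thread writes exactly one file, which is why the shard count has to be a multiple of the thread count.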

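4. Fine-tuning the model with TensorFlow Slim

TensorFlow Slim is an image classification toolkit released by Google. It not only defines some convenient interfaces but also provides many commonly used network architectures and pretrained models for the ImageNet dataset. As of July 2017, Slim provided the architectures and pretrained models of most commonly used networks, including VGG16, VGG19, Inception V1 to V4, ResNet50, ResNet101 and MobileNet, and more models continue to be added.

1) Download the TensorFlow Slim source code

The main parts of the Slim code and their descriptions are as follows:

This experiment uses the already downloaded TensorFlow Slim code that ships with the book.

2) Define your own datasets file

To train on the TFRecord data created earlier, a new dataset has to be defined in datasets. Create a new file satellite.py in the datasets/ directory and copy the contents of flowers.py into it. Then change the following places in satellite.py:

Part 1: change _FILE_PATTERN, SPLITS_TO_SIZES and _NUM_CLASSES as follows: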

    _FILE_PATTERN = 'satellite_%s_*.tfrecord'
    SPLITS_TO_SIZES = {'train': 4800, 'validation': 1200}
    _NUM_CLASSES = 6
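The file pattern has to line up with the names written during conversion. As a small sanity check, the sketch below fills in the split name and matches the result against the shard file names from section 3; `files_for_split` is a hypothetical helper and uses only the standard library.

```python
import fnmatch

_FILE_PATTERN = 'satellite_%s_*.tfrecord'


def files_for_split(split_name, filenames):
    """Return the file names that belong to one split ('train' or 'validation')."""
    pattern = _FILE_PATTERN % split_name
    return sorted(name for name in filenames
                  if fnmatch.fnmatchcase(name, pattern))


generated = [
    'satellite_train_00000-of-00002.tfrecord',
    'satellite_train_00001-of-00002.tfrecord',
    'satellite_validation_00000-of-00002.tfrecord',
    'satellite_validation_00001-of-00002.tfrecord',
    'label.txt',
]
print(files_for_split('train', generated))
# ['satellite_train_00000-of-00002.tfrecord', 'satellite_train_00001-of-00002.tfrecord']
```

If the dataset name passed to data_convert.py were changed, the prefix in `_FILE_PATTERN` would have to change with it.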

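Part 2: change image/format as follows. This is the default image format; the satellite images provided are in jpg format, so it needs to be changed.

    'image/format': tf.FixedLenFeature((), tf.string, default_value='jpg'),

After editing satellite.py, register the satellite dataset in dataset_factory.py in the same directory. The code after registration is shown below; the two new lines are from datasets import satellite and 'satellite': satellite,.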

    from datasets import cifar10
    from datasets import flowers
    from datasets import imagenet
    from datasets import mnist
    from datasets import satellite

    datasets_map = {
        'cifar10': cifar10,
        'flowers': flowers,
        'imagenet': imagenet,
        'mnist': mnist,
        'satellite': satellite,
    }
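Registration matters because the training and evaluation scripts look the dataset up by name. The following is a simplified, self-contained sketch of that lookup pattern, not the actual Slim code: `_FakeDatasetModule` is a made-up stand-in for modules such as datasets/satellite.py, and the real `get_split` returns a dataset object rather than a tuple.

```python
class _FakeDatasetModule(object):
    """Hypothetical stand-in for a dataset module with a get_split function."""

    def __init__(self, name):
        self.name = name

    def get_split(self, split_name, dataset_dir):
        # The real module would build and return a dataset description here.
        return (self.name, split_name, dataset_dir)


datasets_map = {
    'flowers': _FakeDatasetModule('flowers'),
    'satellite': _FakeDatasetModule('satellite'),
}


def get_dataset(name, split_name, dataset_dir):
    """Look the dataset up by name and delegate to its module."""
    if name not in datasets_map:
        raise ValueError('Name of dataset unknown %s' % name)
    return datasets_map[name].get_split(split_name, dataset_dir)
```

Passing `--dataset_name=satellite` on the command line only works once the name is a key in the map; an unregistered name fails immediately with a ValueError.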

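3) Prepare the training folder

With the dataset defined, create a satellite directory under the slim folder and do the following in it:

Step 1: create a data directory and copy the converted data from section 3 into it.

Step 2: create a train_dir directory to hold the logs and models saved during training.

Step 3: create a pretrained directory. Find the download link for the Inception V3 model on the Slim GitHub page, http://download.tensorflow.org/models/inception_v3_2016_08_28.tar.gz. Downloading and extracting it gives an inception_v3.ckpt file; copy that file into the pretrained directory. Note: the download may fail on networks in mainland China without a proxy. I found the inception_v3.ckpt file by searching on CSDN and downloaded it from there.

After these steps the directory structure looks like this: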

    slim/
        satellite/
            data/
                satellite_train_00000-of-00002.tfrecord
                satellite_train_00001-of-00002.tfrecord
                satellite_validation_00000-of-00002.tfrecord
                satellite_validation_00001-of-00002.tfrecord
                label.txt
            pretrained/
                inception_v3.ckpt
            train_dir/
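The empty folders can also be created with a few lines of Python instead of by hand. This is a minimal sketch; `make_training_dirs` is a hypothetical helper, and copying the TFRecord files and the checkpoint into place is still left as a manual step.

```python
import os


def make_training_dirs(slim_root):
    """Create satellite/data, satellite/pretrained and satellite/train_dir
    under slim_root if they do not exist yet, and return their paths."""
    created = []
    for sub in ('data', 'pretrained', 'train_dir'):
        path = os.path.join(slim_root, 'satellite', sub)
        if not os.path.isdir(path):
            os.makedirs(path)
        created.append(path)
    return created
```

Calling `make_training_dirs('.')` from inside the slim folder produces the three directories shown above; running it a second time changes nothing.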

4) Start training

Run the following command in the slim folder to start training:

    python train_image_classifier.py \
      --train_dir=satellite/train_dir \
      --dataset_name=satellite \
      --dataset_split_name=train \
      --dataset_dir=satellite/data \
      --model_name=inception_v3 \
      --checkpoint_path=satellite/pretrained/inception_v3.ckpt \
      --checkpoint_exclude_scopes=InceptionV3/Logits,InceptionV3/AuxLogits \
      --trainable_scopes=InceptionV3/Logits,InceptionV3/AuxLogits \
      --max_number_of_steps=100000 \
      --batch_size=32 \
      --learning_rate=0.001 \
      --learning_rate_decay_type=fixed \
      --save_interval_secs=300 \
      --save_summaries_secs=2 \
      --log_every_n_steps=10 \
      --optimizer=rmsprop \
      --weight_decay=0.00004

The command above trains only the final layers InceptionV3/Logits and InceptionV3/AuxLogits. All layers can be trained with the following command instead:

    python train_image_classifier.py \
      --train_dir=satellite/train_dir \
      --dataset_name=satellite \
      --dataset_split_name=train \
      --dataset_dir=satellite/data \
      --model_name=inception_v3 \
      --checkpoint_path=satellite/pretrained/inception_v3.ckpt \
      --checkpoint_exclude_scopes=InceptionV3/Logits,InceptionV3/AuxLogits \
      --max_number_of_steps=100000 \
      --batch_size=32 \
      --learning_rate=0.001 \
      --learning_rate_decay_type=fixed \
      --save_interval_secs=300 \
      --save_summaries_secs=10 \
      --log_every_n_steps=1 \
      --optimizer=rmsprop \
      --weight_decay=0.00004
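The difference between the two commands comes down to which variables are restored from the checkpoint and which are updated during training. The sketch below imitates that selection on plain variable-name strings; it approximates scope matching with a prefix test, the function names are invented, and the variable names other than InceptionV3/Conv2d_1a_3x3/weights are illustrative assumptions.

```python
def split_scopes(flag_value):
    """Turn a comma-separated flag value into a list of scope prefixes."""
    return [s.strip() for s in flag_value.split(',') if s.strip()]


def variables_to_restore(all_names, checkpoint_exclude_scopes):
    """Variables loaded from the pretrained checkpoint: everything
    that is not under one of the excluded scopes."""
    exclusions = split_scopes(checkpoint_exclude_scopes)
    return [n for n in all_names
            if not any(n.startswith(e) for e in exclusions)]


def variables_to_train(all_names, trainable_scopes=None):
    """Variables updated by the optimizer: all of them when no scopes
    are given, otherwise only those under the listed scopes."""
    if not trainable_scopes:
        return list(all_names)
    scopes = split_scopes(trainable_scopes)
    return [n for n in all_names if any(n.startswith(s) for s in scopes)]


example_names = [
    'InceptionV3/Conv2d_1a_3x3/weights',
    'InceptionV3/Conv2d_2a_3x3/weights',
    'InceptionV3/Logits/Conv2d_1c_1x1/weights',     # illustrative name
    'InceptionV3/AuxLogits/Conv2d_2b_1x1/weights',  # illustrative name
]
scopes = 'InceptionV3/Logits,InceptionV3/AuxLogits'

print(variables_to_restore(example_names, scopes))  # the two Conv2d variables
print(variables_to_train(example_names, scopes))    # the two logits variables
print(len(variables_to_train(example_names)))       # 4
```

In both commands the logits layers are excluded from restoring because their shape depends on the number of classes; leaving out --trainable_scopes is what switches from training only those layers to training everything.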

One thing to watch out for when running this: Slim trains on the GPU by default. The corresponding code in train_image_classifier.py is:

    tf.app.flags.DEFINE_boolean('clone_on_cpu', False,
                                'Use CPUs to deploy clones.')

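There are two ways to run the Slim program on the CPU: change False to True after clone_on_cpu in the code above, or append the argument --clone_on_cpu=True to the command. On a machine without GPU support the following error is reported, and either change makes the program run on the CPU.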

tensorflow.python.framework.errors_impl.InvalidArgumentError: Cannot assign a device for operation 'InceptionV3/Conv2d_1a_3x3/weights/Initializer/truncated_normal/TruncatedNormal': Could not satisfy explicit device specification '' because the node was colocated with a group of nodes that required incompatible device '/device:GPU:0'

Note that running the training command above on the CPU is very time-consuming, so I changed --max_number_of_steps=100000 to --max_number_of_steps=300 for training.
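To get a feel for how little training 300 steps is, the step count can be converted into passes over the training set. The arithmetic below uses the batch size of 32 from the command and the 4800 training images declared in satellite.py; `epochs_covered` is just an illustrative helper.

```python
def epochs_covered(num_steps, batch_size, num_train_samples):
    """Number of passes over the training set made in num_steps steps."""
    return num_steps * batch_size / float(num_train_samples)


print(epochs_covered(300, 32, 4800))     # 2.0
print(epochs_covered(100000, 32, 4800))  # roughly 666.7
```

So the shortened run sees each training image about twice, compared with several hundred times for the full 100000 steps.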

5) Validate the model accuracy

Use eval_image_classifier.py to measure the accuracy of the model on the validation set by running:

    python eval_image_classifier.py \
      --checkpoint_path=satellite/train_dir \
      --eval_dir=satellite/eval_dir \
      --dataset_name=satellite \
      --dataset_split_name=validation \
      --dataset_dir=satellite/data \
      --model_name=inception_v3

Because my machine has no GPU support, I trained for only 300 steps on the CPU. Evaluating that model gives the following result.

    INFO:tensorflow:Evaluation [1/12]
    INFO:tensorflow:Evaluation [2/12]
    INFO:tensorflow:Evaluation [3/12]
    INFO:tensorflow:Evaluation [4/12]
    INFO:tensorflow:Evaluation [5/12]
    INFO:tensorflow:Evaluation [6/12]
    INFO:tensorflow:Evaluation [7/12]
    INFO:tensorflow:Evaluation [8/12]
    INFO:tensorflow:Evaluation [9/12]
    INFO:tensorflow:Evaluation [10/12]
    INFO:tensorflow:Evaluation [11/12]
    INFO:tensorflow:Evaluation [12/12]
    eval/Recall_5[0.9825]
    eval/Accuracy[0.6625]
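The 12 evaluation steps in the log follow from the validation-set size and the default evaluation batch size. The sketch below reproduces that count with the 1200 validation images from satellite.py and the default batch_size of 100 from eval_image_classifier.py, and turns the reported accuracy into an approximate number of correctly classified images; the helper name is made up.

```python
import math


def num_eval_batches(num_samples, batch_size):
    """Batches needed for a single pass over the evaluation data."""
    return int(math.ceil(num_samples / float(batch_size)))


print(num_eval_batches(1200, 100))  # 12
print(int(round(0.6625 * 1200)))    # 795, i.e. about 795 of 1200 images correct
```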

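Accuracy is the classification accuracy of the model, while Recall_5 is the Top 5 accuracy: a prediction counts as correct as long as the true class is among the 5 classes with the highest predicted probability. Since there are only a few classes here, it makes more sense to report Top 2 or Top 3 accuracy instead of Top 5, which only requires changing the following part of eval_image_classifier.py: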

    # Define the metrics:
    names_to_values, names_to_updates = slim.metrics.aggregate_metric_map({
        'Accuracy': slim.metrics.streaming_accuracy(predictions, labels),
        'Recall_5': slim.metrics.streaming_recall_at_k(
            logits, labels, 5),
    })
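What a Top k metric measures can be shown without TensorFlow. The sketch below is a plain-Python illustration of the idea, not the Slim implementation, and the scores are invented toy values for 6 classes.

```python
def top_k_recall(scores, labels, k):
    """Fraction of samples whose true label is among the k highest scores."""
    hits = 0
    for row, label in zip(scores, labels):
        ranked = sorted(range(len(row)), key=lambda i: row[i], reverse=True)
        if label in ranked[:k]:
            hits += 1
    return hits / float(len(labels))


# Toy scores for 3 samples over 6 classes (made-up numbers).
scores = [
    [0.10, 0.50, 0.20, 0.10, 0.05, 0.05],  # true class 1 is ranked first
    [0.30, 0.10, 0.40, 0.10, 0.05, 0.05],  # true class 0 is ranked second
    [0.05, 0.05, 0.10, 0.20, 0.25, 0.35],  # true class 2 is ranked fourth
]
labels = [1, 0, 2]

for k in (1, 2, 5):
    print(k, top_k_recall(scores, labels, k))
```

With only 6 classes, Top 5 misses a sample only when the true class is ranked last, which is why the Recall_5 value above is so close to 1. Replacing the 5 in streaming_recall_at_k with 2 or 3 gives a more informative number.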

The code of eval_image_classifier.py is as follows:

    # Copyright 2016 The TensorFlow Authors. All Rights Reserved.
    #
    # Licensed under the Apache License, Version 2.0 (the "License");
    # you may not use this file except in compliance with the License.
    # You may obtain a copy of the License at
    #
    #     http://www.apache.org/licenses/LICENSE-2.0
    #
    # Unless required by applicable law or agreed to in writing, software
    # distributed under the License is distributed on an "AS IS" BASIS,
    # WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
    # See the License for the specific language governing permissions and
    # limitations under the License.
    # ==============================================================================
    """Generic evaluation script that evaluates a model using a given dataset."""

    from __future__ import absolute_import
    from __future__ import division
    from __future__ import print_function

    import math
    import tensorflow as tf

    from datasets import dataset_factory
    from nets import nets_factory
    from preprocessing import preprocessing_factory

    slim = tf.contrib.slim


    tf.app.flags.DEFINE_integer(
        'batch_size', 100, 'The number of samples in each batch.')

    tf.app.flags.DEFINE_integer(
        'max_num_batches', None,
        'Max number of batches to evaluate by default use all.')

    tf.app.flags.DEFINE_string(
        'master', '', 'The address of the TensorFlow master to use.')

    tf.app.flags.DEFINE_string(
        'checkpoint_path', '/tmp/tfmodel/',
        'The directory where the model was written to or an absolute path to a '
        'checkpoint file.')

    tf.app.flags.DEFINE_string(
        'eval_dir', '/tmp/tfmodel/', 'Directory where the results are saved to.')

    tf.app.flags.DEFINE_integer(
        'num_preprocessing_threads', 4,
        'The number of threads used to create the batches.')

    tf.app.flags.DEFINE_string(
        'dataset_name', 'imagenet', 'The name of the dataset to load.')

    tf.app.flags.DEFINE_string(
        'dataset_split_name', 'test', 'The name of the train/test split.')

    tf.app.flags.DEFINE_string(
        'dataset_dir', None, 'The directory where the dataset files are stored.')

    tf.app.flags.DEFINE_integer(
        'labels_offset', 0,
        'An offset for the labels in the dataset. This flag is primarily used to '
        'evaluate the VGG and ResNet architectures which do not use a background '
        'class for the ImageNet dataset.')

    tf.app.flags.DEFINE_string(
        'model_name', 'inception_v3', 'The name of the architecture to evaluate.')

    tf.app.flags.DEFINE_string(
        'preprocessing_name', None, 'The name of the preprocessing to use. If left '
        'as `None`, then the model_name flag is used.')

    tf.app.flags.DEFINE_float(
        'moving_average_decay', None,
        'The decay to use for the moving average.'
        'If left as None, then moving averages are not used.')

    tf.app.flags.DEFINE_integer(
        'eval_image_size', None, 'Eval image size')

    FLAGS = tf.app.flags.FLAGS



    def main(_):
        if not FLAGS.dataset_dir:
            raise ValueError('You must supply the dataset directory with --dataset_dir')

        tf.logging.set_verbosity(tf.logging.INFO)
        with tf.Graph().as_default():
            tf_global_step = slim.get_or_create_global_step()

            ######################
            # Select the dataset #
            ######################
            dataset = dataset_factory.get_dataset(
                FLAGS.dataset_name, FLAGS.dataset_split_name, FLAGS.dataset_dir)

            ####################
            # Select the model #
            ####################
            network_fn = nets_factory.get_network_fn(
                FLAGS.model_name,
                num_classes=(dataset.num_classes - FLAGS.labels_offset),
                is_training=False)

            ##############################################################
            # Create a dataset provider that loads data from the dataset #
            ##############################################################
            provider = slim.dataset_data_provider.DatasetDataProvider(
                dataset,
                shuffle=False,
                common_queue_capacity=2 * FLAGS.batch_size,
                common_queue_min=FLAGS.batch_size)
            [image, label] = provider.get(['image', 'label'])
            label -= FLAGS.labels_offset

            #####################################
            # Select the preprocessing function #
            #####################################
            preprocessing_name = FLAGS.preprocessing_name or FLAGS.model_name
            image_preprocessing_fn = preprocessing_factory.get_preprocessing(
                preprocessing_name,
                is_training=False)

            eval_image_size = FLAGS.eval_image_size or network_fn.default_image_size

            image = image_preprocessing_fn(image, eval_image_size, eval_image_size)

            images, labels = tf.train.batch(
                [image, label],
                batch_size=FLAGS.batch_size,
                num_threads=FLAGS.num_preprocessing_threads,
                capacity=5 * FLAGS.batch_size)

            ####################
            # Define the model #
            ####################
            logits, _ = network_fn(images)

-

-

if FLAGS.moving_average_decay:

-

variable_averages = tf.train.ExponentialMovingAverage(

-

FLAGS.moving_average_decay, tf_global_step)

-

variables_to_restore = variable_averages.variables_to_restore(

-

slim.get_model_variables())

-

variables_to_restore[tf_global_step.op.name] = tf_global_step

-

else:

-

variables_to_restore = slim.get_variables_to_restore()

-

-

predictions = tf.argmax(logits,

1)

-

labels = tf.squeeze(labels)

-

-

# Define the metrics:

-

names_to_values, names_to_updates = slim.metrics.aggregate_metric_map({

-

'Accuracy': slim.metrics.streaming_accuracy(predictions, labels),

-

'Recall_5': slim.metrics.streaming_recall_at_k(

-

logits, labels,

5),

-

})

-

-

# Print the summaries to screen.

-

for name, value

in names_to_values.items():

-

summary_name =

'eval/%s' % name

-

op = tf.summary.scalar(summary_name, value, collections=[])

-

op = tf.Print(op, [value], summary_name)

-

tf.add_to_collection(tf.GraphKeys.SUMMARIES, op)

-

-

# TODO(sguada) use num_epochs=1

-

if FLAGS.max_num_batches:

-

num_batches = FLAGS.max_num_batches

-

else:

-

# This ensures that we make a single pass over all of the data.

-

num_batches = math.ceil(dataset.num_samples / float(FLAGS.batch_size))

-

-

if tf.gfile.IsDirectory(FLAGS.checkpoint_path):

-

checkpoint_path = tf.train.latest_checkpoint(FLAGS.checkpoint_path)

-

else:

-

checkpoint_path = FLAGS.checkpoint_path

-

-

tf.logging.info(

'Evaluating %s' % checkpoint_path)

-

-

slim.evaluation.evaluate_once(

-

master=FLAGS.master,

-

checkpoint_path=checkpoint_path,

-

logdir=FLAGS.eval_dir,

-

num_evals=num_batches,

-

eval_op=list(names_to_updates.values()),

-

variables_to_restore=variables_to_restore)

-

-

# slim.evaluation.evaluation_loop(

-

# master=FLAGS.master,

-

# checkpoint_dir=FLAGS.checkpoint_path,

-

# logdir=FLAGS.eval_dir,

-

# num_evals=num_batches,

-

# eval_op=list(names_to_updates.values()),

-

# variables_to_restore=variables_to_restore,

-

# eval_interval_secs=300

-

# )

-

-

-

if __name__ ==

'__main__':

-

tf.app.run()
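To make the two metrics and the batch count in the evaluation script more concrete, here is a minimal pure-Python sketch of what they compute. It is not the slim implementation: the helper names (`num_eval_batches`, `accuracy`, `recall_at_k`) and the toy numbers are assumptions made for illustration only.

```python
import math


def num_eval_batches(num_samples, batch_size):
    """Batches needed for one pass, mirroring math.ceil(num_samples / batch_size)."""
    return int(math.ceil(num_samples / float(batch_size)))


def accuracy(logits, labels):
    """Fraction of samples whose highest-scoring class equals the label."""
    hits = 0
    for row, label in zip(logits, labels):
        predicted = max(range(len(row)), key=lambda i: row[i])
        hits += int(predicted == label)
    return hits / float(len(labels))


def recall_at_k(logits, labels, k):
    """Fraction of samples whose label is among the k highest-scoring classes."""
    hits = 0
    for row, label in zip(logits, labels):
        top_k = sorted(range(len(row)), key=lambda i: row[i], reverse=True)[:k]
        hits += int(label in top_k)
    return hits / float(len(labels))


# Toy data: 3 samples, 6 classes (hypothetical values, not real model output).
toy_logits = [
    [0.1, 2.0, 0.3, 0.0, -1.0, 0.5],  # highest score: class 1
    [1.5, 0.2, 0.1, 0.0, 0.3, -2.0],  # highest score: class 0
    [0.0, 0.1, 0.2, 0.3, 0.4, 3.0],   # highest score: class 5
]
toy_labels = [1, 5, 5]

print(num_eval_batches(1000, 32))              # hypothetical dataset and batch size
print(accuracy(toy_logits, toy_labels))
print(recall_at_k(toy_logits, toy_labels, 5))
```

One practical consequence: with only six classes, a sample counts as a miss for Recall_5 only when its true class is ranked last, so this metric will usually sit close to 1 and Accuracy is the more informative number here.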

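6) Exporting the model and classifying a single image

Once training is finished, the next step is to export the trained model and use it to predict on images. Two scripts are provided for this: freeze_graph.py exports the model used for recognition, and classify_image_inception_v3.py classifies a single image with the Inception V3 model.

Exporting the model:

TensorFlow Slim provides the script export_inference_graph.py for exporting the network structure. First, run the following command in the slim folder: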

python export_inference_graph.py \
  --alsologtostderr \
  --model_name=inception_v3 \
  --output_file=satellite/inception_v3_inf_graph.pb \
  --dataset_name satellite

This command generates the file inception_v3_inf_graph.pb in the satellite folder.

The code of export_inference_graph.py is as follows:

# Copyright 2017 The TensorFlow Authors. All Rights Reserved.
#
# Licensed under the Apache License, Version 2.0 (the "License");
# you may not use this file except in compliance with the License.
# You may obtain a copy of the License at
#
# http://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing, software
# distributed under the License is distributed on an "AS IS" BASIS,
# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
# See the License for the specific language governing permissions and
# limitations under the License.
# ==============================================================================
r"""Saves out a GraphDef containing the architecture of the model.

To use it, run something like this, with a model name defined by slim:

bazel build tensorflow_models/slim:export_inference_graph
bazel-bin/tensorflow_models/slim/export_inference_graph \
--model_name=inception_v3 --output_file=/tmp/inception_v3_inf_graph.pb

If you then want to use the resulting model with your own or pretrained
checkpoints as part of a mobile model, you can run freeze_graph to get a graph
def with the variables inlined as constants using:

bazel build tensorflow/python/tools:freeze_graph
bazel-bin/tensorflow/python/tools/freeze_graph \
--input_graph=/tmp/inception_v3_inf_graph.pb \
--input_checkpoint=/tmp/checkpoints/inception_v3.ckpt \
--input_binary=true --output_graph=/tmp/frozen_inception_v3.pb \
--output_node_names=InceptionV3/Predictions/Reshape_1

The output node names will vary depending on the model, but you can inspect and
estimate them using the summarize_graph tool:

bazel build tensorflow/tools/graph_transforms:summarize_graph
bazel-bin/tensorflow/tools/graph_transforms/summarize_graph \
--in_graph=/tmp/inception_v3_inf_graph.pb

To run the resulting graph in C++, you can look at the label_image sample code:

bazel build tensorflow/examples/label_image:label_image
bazel-bin/tensorflow/examples/label_image/label_image \
--image=${HOME}/Pictures/flowers.jpg \
--input_layer=input \
--output_layer=InceptionV3/Predictions/Reshape_1 \
--graph=/tmp/frozen_inception_v3.pb \
--labels=/tmp/imagenet_slim_labels.txt \
--input_mean=0 \
--input_std=255 \
--logtostderr

"""

from __future__ import absolute_import
from __future__ import division
from __future__ import print_function

import tensorflow as tf

from tensorflow.python.platform import gfile
from datasets import dataset_factory
from nets import nets_factory


slim = tf.contrib.slim

tf.app.flags.DEFINE_string(
    'model_name', 'inception_v3', 'The name of the architecture to save.')

tf.app.flags.DEFINE_boolean(
    'is_training', False,
    'Whether to save out a training-focused version of the model.')

tf.app.flags.DEFINE_integer(
    'default_image_size', 224,
    'The image size to use if the model does not define it.')

tf.app.flags.DEFINE_string('dataset_name', 'imagenet',
                           'The name of the dataset to use with the model.')

tf.app.flags.DEFINE_integer(
    'labels_offset', 0,
    'An offset for the labels in the dataset. This flag is primarily used to '
    'evaluate the VGG and ResNet architectures which do not use a background '
    'class for the ImageNet dataset.')

tf.app.flags.DEFINE_string(
    'output_file', '', 'Where to save the resulting file to.')

tf.app.flags.DEFINE_string(
    'dataset_dir', '', 'Directory to save intermediate dataset files to')

FLAGS = tf.app.flags.FLAGS


def main(_):
  if not FLAGS.output_file:
    raise ValueError('You must supply the path to save to with --output_file')
  tf.logging.set_verbosity(tf.logging.INFO)
  with tf.Graph().as_default() as graph:
    dataset = dataset_factory.get_dataset(FLAGS.dataset_name, 'validation',
                                          FLAGS.dataset_dir)
    network_fn = nets_factory.get_network_fn(
        FLAGS.model_name,
        num_classes=(dataset.num_classes - FLAGS.labels_offset),
        is_training=FLAGS.is_training)
    if hasattr(network_fn, 'default_image_size'):
      image_size = network_fn.default_image_size
    else:
      image_size = FLAGS.default_image_size
    placeholder = tf.placeholder(name='input', dtype=tf.float32,
                                 shape=[1, image_size, image_size, 3])
    network_fn(placeholder)
    graph_def = graph.as_graph_def()
    with gfile.GFile(FLAGS.output_file, 'wb') as f:
      f.write(graph_def.SerializeToString())


if __name__ == '__main__':
  tf.app.run()

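Note: inception_v3_inf_graph.pb contains only the Inception V3 network structure, not the trained model parameters, so the parameters stored in the checkpoint still have to be merged into it. This is done with the freeze_graph.py script (supplied with the book). Run the following command in the directory that contains freeze_graph.py: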

python freeze_graph.py \
  --input_graph slim/satellite/inception_v3_inf_graph.pb \
  --input_checkpoint slim/satellite/train_dir/model.ckpt-300 \
  --input_binary true \
  --output_node_names InceptionV3/Predictions/Reshape_1 \
  --output_graph slim/satellite/frozen_graph.pb
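The command above hard-codes the checkpoint prefix model.ckpt-300, which only exists if training was saved at step 300. The following sketch shows one way to pick the newest prefix from a listing of train_dir. The helper `latest_checkpoint_prefix` is hypothetical (it is not part of the book's code), and it assumes the usual TensorFlow naming in which every saved step has a `<prefix>.index` file.

```python
import re


def latest_checkpoint_prefix(filenames, basename="model.ckpt"):
    """Return the checkpoint prefix with the highest step, or None if none is found."""
    # Assumption: each saved step leaves a file named "<basename>-<step>.index".
    pattern = re.compile(r"^" + re.escape(basename) + r"-(\d+)\.index$")
    steps = []
    for name in filenames:
        match = pattern.match(name)
        if match:
            steps.append(int(match.group(1)))
    if not steps:
        return None
    return "%s-%d" % (basename, max(steps))


# Example listing (made up for illustration); in practice this could come from
# os.listdir("slim/satellite/train_dir").
example_files = [
    "checkpoint",
    "model.ckpt-0.index",
    "model.ckpt-120.index",
    "model.ckpt-300.index",
    "model.ckpt-300.meta",
    "model.ckpt-300.data-00000-of-00001",
]
print(latest_checkpoint_prefix(example_files))
```

Inside a TensorFlow program, `tf.train.latest_checkpoint`, which the evaluation script already calls, serves the same purpose; the helper is only convenient when preparing the `--input_checkpoint` argument from a plain script.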

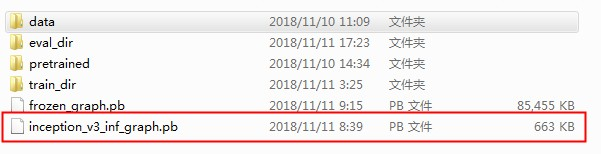

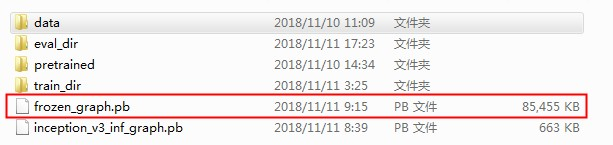

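The final exported model files are as follows:

Predicting on an image:

How can the exported frozen_graph.pb be used to classify a single image? The script classify_image_inception_v3.py was written for this. First, here is how the script is used: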

python classify_image_inception_v3.py \
  --model_path slim/satellite/frozen_graph.pb \
  --label_path data_prepare/pic/label.txt \
  --image_file test_image.jpg

The prediction result is shown below; the class water receives the highest score for this image.

water (score = 1.41468)

wood (score = 1.12560)

rock (score = 0.34318)

wetland (score = 0.31493)

urban (score = -1.02338)
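The ranked listing above can be reproduced with a few lines of plain Python. This is only a sketch of the ranking step, not the book's script: the helper `format_top_k` and the label order are assumptions, and the glacier score is a placeholder because the output above shows only the top five of the six classes.

```python
def format_top_k(scores, labels, k=5):
    """Return the k highest-scoring classes as 'label (score = x)' strings."""
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[:k]
    return ["%s (score = %.5f)" % (labels[i], scores[i]) for i in order]


# Label order is hypothetical; five scores are copied from the output above,
# and the glacier value is a made-up placeholder below the other five.
labels = ["glacier", "rock", "urban", "water", "wetland", "wood"]
scores = [-1.5, 0.34318, -1.02338, 1.41468, 0.31493, 1.12560]

for line in format_top_k(scores, labels, k=5):
    print(line)
```

Sorting indices rather than the scores themselves keeps each score paired with its label, which is the same idea as looking up a node id in the label file after ranking.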

The code of classify_image_inception_v3.py is as follows:

# Copyright 2015 The TensorFlow Authors. All Rights Reserved.
#
# Licensed under the Apache License, Version 2.0 (the "License");
# you may not use this file except in compliance with the License.
# You may obtain a copy of the License at
#
# http://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing, software
# distributed under the License is distributed on an "AS IS" BASIS,
# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
# See the License for the specific language governing permissions and
# limitations under the License.
# ==============================================================================

from __future__ import absolute_import
from __future__ import division
from __future__ import print_function

import argparse
import os.path
import re
import sys
import tarfile

import numpy as np
from six.moves import urllib
import tensorflow as tf

FLAGS = None


class NodeLookup(object):
  def __init__(self, label_lookup_path=None):
    self.node_lookup = self.load(label_lookup_path)

  def load(self, label_lookup_path):
    node_id_to_name = {}
    with open(label_lookup_path) as f:
      for index, line in enumerate(f):
        node_id_to_name[index] = line.strip()
    return node_id_to_name

  def id_to_string(self, node_id):
    if node_id not in self.node_lookup:
      return ''
    return self.node_lookup[node_id]


def create_graph():
  """Creates a graph from saved GraphDef file and returns a saver."""
  # Creates graph from saved graph_def.pb.
  with tf.gfile.FastGFile(FLAGS.model_path, 'rb') as f:
    graph_def = tf.GraphDef()
    graph_def.ParseFromString(f.read())
    _ = tf.import_graph_def(graph_def, name='')


def preprocess_for_eval(image, height, width,
                        central_fraction=0.875, scope=None):
  with tf.name_scope(scope, 'eval_image', [image, height, width]):
    if image.dtype != tf.float32:
      image = tf.image.convert_image_dtype(image, dtype=tf.float32)
    # Crop the central region of the image with an area containing 87.5% of
    # the original image.