Thread pool:

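A thread pool is a form of multithreaded processing: tasks are added to a queue and are started automatically once threads have been created to run them. The remaining details describe the .NET thread pool (managed code and the multithreaded apartment are .NET concepts). Thread pool threads are all background threads. Each thread uses the default stack size, runs at the default priority, and lives in the multithreaded apartment. If a thread becomes idle in managed code (for example, while waiting for an event), the pool injects another worker thread to keep all processors busy. If all pool threads stay busy while the queue still holds pending work, the pool creates another worker thread after some delay, but the number of threads never exceeds the maximum. Work beyond that limit stays in the queue and starts only when another thread finishes.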
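Python's thread pools differ from the one described above in details such as how threads are added, but the standard library's `concurrent.futures.ThreadPoolExecutor` shows the core behavior in a minimal sketch: at most `max_workers` jobs run at once, and the rest wait in the pool's queue. `run_with_pool` and its parameters are hypothetical names for this illustration, and the short sleep stands in for real work or waiting.

```python
import threading
import time
from concurrent.futures import ThreadPoolExecutor


def run_with_pool(num_tasks, max_workers, work_seconds=0.05):
    """Run num_tasks jobs on a pool of max_workers threads.

    Returns (peak, results): the highest number of jobs seen running at
    the same time, and the job results in submission order.
    """
    lock = threading.Lock()
    running = 0
    peak = 0

    def task(i):
        nonlocal running, peak
        with lock:
            running += 1
            peak = max(peak, running)
        time.sleep(work_seconds)  # stand-in for real work or waiting on I/O
        with lock:
            running -= 1
        return i * i

    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # Jobs beyond max_workers wait in the executor's queue until a thread frees up
        results = list(pool.map(task, range(num_tasks)))
    return peak, results


peak, results = run_with_pool(num_tasks=8, max_workers=2)
print(peak, results)  # peak never exceeds 2
```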

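Choosing the number of pool threads: on an N-core server, run the workload on a single thread and measure its local computation time x and its waiting time y (I/O and the like). Each thread keeps a core busy only for the fraction x/(x+y) of the time, so N cores need N/(x/(x+y)) = N*(x+y)/x threads. Setting the number of worker threads (the pool size) to N*(x+y)/x therefore, in theory, maximizes CPU utilization.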
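A worked version of the rule as a small Python helper, as a sketch. `pool_size` is a hypothetical name, and rounding up to a whole number of threads is an assumption, since the formula itself can produce a fraction. With y = 0 (pure computation) it reduces to N threads, one per core; in practice N could come from `os.cpu_count()`.

```python
import math


def pool_size(n_cores, compute_time, wait_time):
    """Worker-thread count from the N*(x+y)/x rule of thumb.

    n_cores: N, the number of CPU cores
    compute_time: x, CPU time per task measured on a single thread
    wait_time: y, time per task spent waiting (I/O, locks, etc.)
    The result is rounded up (an assumption) to a whole number of threads.
    """
    if compute_time <= 0:
        raise ValueError("compute_time must be positive")
    return math.ceil(n_cores * (compute_time + wait_time) / compute_time)


# 4 cores, 10 ms of computation and 30 ms of waiting per task:
print(pool_size(4, 10, 30))  # 4 * (10 + 30) / 10 = 16
```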

Python code, using `from threading import Thread`:

```python
import socket
import threading
from queue import Queue  # Python 3 name; this module was called Queue in Python 2
from threading import Thread

host = ''
port = 8888

s = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
s.setsockopt(socket.SOL_SOCKET, socket.SO_REUSEADDR, 1)  # allow quick restarts on the same port
s.bind((host, port))
s.listen(3)


class ThreadPoolManger:
    """Thread pool manager."""

    def __init__(self, thread_num):
        # Initialize parameters
        self.work_queue = Queue()
        self.thread_num = thread_num
        self.__init_threading_pool(self.thread_num)

    def __init_threading_pool(self, thread_num):
        # Create and start the requested number of worker threads
        for i in range(thread_num):
            thread = ThreadManger(self.work_queue)
            thread.start()

    def add_job(self, func, *args):
        # Put a job on the queue for the workers, which block reading it;
        # the arguments are the function to run and its arguments
        self.work_queue.put((func, args))


class ThreadManger(Thread):
    """Worker thread class, subclassing threading.Thread."""

    def __init__(self, work_queue):
        Thread.__init__(self)
        self.work_queue = work_queue
        self.daemon = True  # daemon threads do not keep the process alive

    def run(self):
        # Worker loop: take a job off the queue and run it, forever
        while True:
            target, args = self.work_queue.get()
            try:
                target(*args)
            except Exception as e:
                # Keep the worker alive if a job fails
                print('job failed: %r' % e)
            finally:
                self.work_queue.task_done()


# Create a thread pool with 4 threads
thread_pool = ThreadPoolManger(4)


# Handle an HTTP request; here we simply return 200 and "hello world"
def handle_request(conn_socket):
    recv_data = conn_socket.recv(1024)  # read (and ignore) the request
    reply = 'HTTP/1.1 200 OK\r\nConnection: close\r\n\r\n'
    reply += 'hello world'
    print('thread %s is running' % threading.current_thread().name)
    conn_socket.sendall(reply.encode())  # sockets send bytes in Python 3
    conn_socket.close()


# Loop, waiting for client requests
try:
    while True:
        # Block until a request arrives
        conn_socket, addr = s.accept()
        # Hand the socket to handle_request; the pool assigns a thread to run it
        thread_pool.add_job(handle_request, conn_socket)
finally:
    s.close()
```
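The pool above calls `task_done()` after each job but never calls `join()`, so nothing ever waits for the queue to drain. A minimal sketch of the same worker-queue pattern without sockets shows what `task_done()` is for: `Queue.join()` returns only once every queued job has been marked done. `run_jobs` is a hypothetical helper, and the `None` sentinel used to stop the workers is an added assumption, not part of the original design.

```python
import threading
from queue import Queue


def run_jobs(jobs, thread_num=4):
    """Run (func, args) jobs on thread_num worker threads and wait for all of them."""
    work_queue = Queue()
    results = []
    results_lock = threading.Lock()

    def worker():
        while True:
            job = work_queue.get()  # blocks until a job is available
            if job is None:  # sentinel: no more work, let the thread exit
                work_queue.task_done()
                return
            func, args = job
            try:
                value = func(*args)
            except Exception as exc:  # keep the worker alive if a job fails
                value = exc
            with results_lock:
                results.append(value)
            work_queue.task_done()  # exactly one task_done() per get()

    for _ in range(thread_num):
        threading.Thread(target=worker, daemon=True).start()
    for job in jobs:
        work_queue.put(job)
    work_queue.join()  # returns only after every queued job has called task_done()
    for _ in range(thread_num):
        work_queue.put(None)  # one sentinel per worker shuts the pool down
    return results  # in completion order, not submission order


print(sorted(run_jobs([(pow, (i, 2)) for i in range(5)])))  # [0, 1, 4, 9, 16]
```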

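Python code, using `import threadpool` (the third-party `threadpool` package, installed separately with `pip install threadpool`):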

```python
'''
Serial baseline for comparison: three calls in a row take about 6 seconds.

import time

def sayhello(name):
    print("Hello ", name)
    time.sleep(2)

name_list = ['aa', 'bb', 'cc']
start_time = time.time()
for name in name_list:
    sayhello(name)
print('%d second' % (time.time() - start_time))
'''

import time

import threadpool  # third-party package: pip install threadpool


def sayhello(name):
    print("Hello ", name)
    time.sleep(2)


name_list = ['aa', 'bb', 'cc']
start_time = time.time()
pool = threadpool.ThreadPool(10)
requests = threadpool.makeRequests(sayhello, name_list)
for req in requests:
    pool.putRequest(req)
pool.wait()  # block until all requests have finished
print('%d second' % (time.time() - start_time))  # about 2 seconds: the calls run concurrently
```
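The `threadpool` package used above is an old third-party module; the standard library's `concurrent.futures.ThreadPoolExecutor` can do the same job without an extra dependency. A minimal sketch follows; the `delay` and `workers` parameters and the `run_all` helper are hypothetical additions so the timing can be varied.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def sayhello(name, delay=2):
    print("Hello ", name)
    time.sleep(delay)  # simulated slow work
    return name


def run_all(names, delay=2, workers=10):
    """Run sayhello for every name on a thread pool; return (results, elapsed seconds)."""
    start_time = time.time()
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # map() submits one job per name and yields results in input order
        results = list(pool.map(sayhello, names, [delay] * len(names)))
    return results, time.time() - start_time


results, elapsed = run_all(['aa', 'bb', 'cc'])
print('%d second' % elapsed)  # about 2 seconds instead of 6
```

Leaving the `with` block waits for every submitted job, which plays the role of `pool.wait()` in the `threadpool` version.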

Differences between TCP and UDP

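1. TCP is connection-oriented (like a phone call, where you must dial and establish a connection first); UDP is connectionless, meaning no connection has to be established before data is sent.

2. TCP provides reliable service: data sent over a TCP connection arrives without errors, without loss, without duplication, and in order. UDP delivers on a best-effort basis, i.e. it does not guarantee reliable delivery.

3. TCP is byte-stream oriented: it treats the data as an unstructured stream of bytes. UDP is message (datagram) oriented. UDP also has no congestion control, so congestion in the network does not lower the source host's sending rate (useful for real-time applications such as IP telephony and real-time video conferencing).

4. Each TCP connection is strictly point-to-point; UDP supports one-to-one, one-to-many, many-to-one, and many-to-many communication.

5. The TCP header is at least 20 bytes (up to 60 with options); the UDP header is smaller, only 8 bytes.

6. A TCP connection is a full-duplex, reliable logical channel; UDP provides an unreliable one.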
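Points 1 to 3 can be seen in a minimal loopback sketch using Python's standard `socket` module: UDP sends without setting up a connection and keeps each datagram separate, while TCP needs a connection first and delivers one undivided byte stream. The helper names `udp_exchange` and `tcp_exchange` are hypothetical. On the loopback interface datagrams are, in practice, neither dropped nor reordered, which keeps the UDP half deterministic; UDP itself guarantees neither.

```python
import socket


def udp_exchange(messages):
    """Send each message as its own UDP datagram over loopback; return what arrives."""
    receiver = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    sender = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    try:
        receiver.bind(('127.0.0.1', 0))  # port 0: let the OS pick a free port
        receiver.settimeout(2)
        for m in messages:
            sender.sendto(m, receiver.getsockname())  # no connection set up first
        # One recvfrom() returns exactly one datagram: message boundaries are kept
        return [receiver.recvfrom(1024)[0] for _ in messages]
    finally:
        sender.close()
        receiver.close()


def tcp_exchange(messages):
    """Send messages over one TCP connection on loopback; return the bytes received."""
    server = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    server.bind(('127.0.0.1', 0))
    server.listen(1)
    client = socket.create_connection(server.getsockname())  # handshake happens here
    conn, _ = server.accept()
    try:
        for m in messages:
            client.sendall(m)
        client.shutdown(socket.SHUT_WR)  # tell the peer no more data is coming
        chunks = []
        while True:
            data = conn.recv(1024)
            if not data:  # empty read: the sender closed its side
                break
            chunks.append(data)
        # A byte stream: the original message boundaries are gone
        return b''.join(chunks)
    finally:
        client.close()
        conn.close()
        server.close()


print(udp_exchange([b'hello', b'world']))  # [b'hello', b'world']
print(tcp_exchange([b'hello', b'world']))  # b'helloworld'
```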

CUDA Programming

Introduction

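```cuda
#include <stdio.h>

// __global__ marks a kernel: it runs on the GPU and is launched from the host
__global__ void add(int a, int b, int *c) {
    *c = a + b;
}

int main() {
    int c;
    int *dev_c;

    // Allocate one int in GPU memory for the result
    cudaMalloc((void**)&dev_c, sizeof(int));

    // Launch the kernel with 1 block of 1 thread
    add<<<1, 1>>>(2, 7, dev_c);

    // Copy the result back to host memory (this also waits for the kernel to finish)
    cudaMemcpy(&c, dev_c, sizeof(int), cudaMemcpyDeviceToHost);
    printf("2 + 7 = %d\n", c);

    cudaFree(dev_c);  // release the GPU allocation
    return 0;
}
```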

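The function definition carries the `__global__` qualifier, which marks it as a kernel that runs on the GPU and is launched from the host; `<<<1, 1>>>` launches it with one block containing one thread. The example also involves moving memory between the GPU and the host: `cudaMalloc` allocates space in GPU memory, the kernel writes its result into that space, and `cudaMemcpy` then copies the result from the GPU back to host memory. That is all there is to it.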

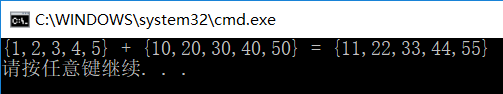

Parallel programming: kernel.cu

```cuda
#include "cuda_runtime.h"
#include "device_launch_parameters.h"

#include <stdio.h>

cudaError_t addWithCuda(int *c, const int *a, const int *b, unsigned int size);

__global__ void addKernel(int *c, const int *a, const int *b)
{
    int i = threadIdx.x;
    c[i] = a[i] + b[i];
}

int main()
{
    const int arraySize = 5;
    const int a[arraySize] = { 1, 2, 3, 4, 5 };
    const int b[arraySize] = { 10, 20, 30, 40, 50 };
    int c[arraySize] = { 0 };

    // Add vectors in parallel.
    cudaError_t cudaStatus = addWithCuda(c, a, b, arraySize);
    if (cudaStatus != cudaSuccess) {
        fprintf(stderr, "addWithCuda failed!");
        return 1;
    }

    printf("{1,2,3,4,5} + {10,20,30,40,50} = {%d,%d,%d,%d,%d}\n",
        c[0], c[1], c[2], c[3], c[4]);

    // cudaDeviceReset must be called before exiting in order for profiling and
    // tracing tools such as Nsight and Visual Profiler to show complete traces.
    cudaStatus = cudaDeviceReset();
    if (cudaStatus != cudaSuccess) {
        fprintf(stderr, "cudaDeviceReset failed!");
        return 1;
    }

    return 0;
}

// Helper function for using CUDA to add vectors in parallel.
cudaError_t addWithCuda(int *c, const int *a, const int *b, unsigned int size)
{
    int *dev_a = 0;
    int *dev_b = 0;
    int *dev_c = 0;
    cudaError_t cudaStatus;

    // Choose which GPU to run on, change this on a multi-GPU system.
    cudaStatus = cudaSetDevice(0);
    if (cudaStatus != cudaSuccess) {
        fprintf(stderr, "cudaSetDevice failed! Do you have a CUDA-capable GPU installed?");
        goto Error;
    }

    // Allocate GPU buffers for three vectors (two input, one output) .
    cudaStatus = cudaMalloc((void**)&dev_c, size * sizeof(int));
    if (cudaStatus != cudaSuccess) {
        fprintf(stderr, "cudaMalloc failed!");
        goto Error;
    }

    cudaStatus = cudaMalloc((void**)&dev_a, size * sizeof(int));
    if (cudaStatus != cudaSuccess) {
        fprintf(stderr, "cudaMalloc failed!");
        goto Error;
    }

    cudaStatus = cudaMalloc((void**)&dev_b, size * sizeof(int));
    if (cudaStatus != cudaSuccess) {
        fprintf(stderr, "cudaMalloc failed!");
        goto Error;
    }

    // Copy input vectors from host memory to GPU buffers.
    cudaStatus = cudaMemcpy(dev_a, a, size * sizeof(int), cudaMemcpyHostToDevice);
    if (cudaStatus != cudaSuccess) {
        fprintf(stderr, "cudaMemcpy failed!");
        goto Error;
    }

    cudaStatus = cudaMemcpy(dev_b, b, size * sizeof(int), cudaMemcpyHostToDevice);
    if (cudaStatus != cudaSuccess) {
        fprintf(stderr, "cudaMemcpy failed!");
        goto Error;
    }

    // Launch a kernel on the GPU with one thread for each element.
    addKernel<<<1, size>>>(dev_c, dev_a, dev_b);

    // Check for any errors launching the kernel
    cudaStatus = cudaGetLastError();
    if (cudaStatus != cudaSuccess) {
        fprintf(stderr, "addKernel launch failed: %s\n", cudaGetErrorString(cudaStatus));
        goto Error;
    }

    // cudaDeviceSynchronize waits for the kernel to finish, and returns
    // any errors encountered during the launch.
    cudaStatus = cudaDeviceSynchronize();
    if (cudaStatus != cudaSuccess) {
        fprintf(stderr, "cudaDeviceSynchronize returned error code %d after launching addKernel!\n", cudaStatus);
        goto Error;
    }

    // Copy output vector from GPU buffer to host memory.
    cudaStatus = cudaMemcpy(c, dev_c, size * sizeof(int), cudaMemcpyDeviceToHost);
    if (cudaStatus != cudaSuccess) {
        fprintf(stderr, "cudaMemcpy failed!");
        goto Error;
    }

Error:
    cudaFree(dev_c);
    cudaFree(dev_a);
    cudaFree(dev_b);

    return cudaStatus;
}
```
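To make the one-thread-per-element mapping of `addKernel<<<1, size>>>` concrete, here is a CPU emulation in Python, as a sketch only: each Python thread plays one CUDA thread, and nothing runs on a GPU. The helper `launch_add_kernel` and its `grid_dim`/`block_dim` parameters are hypothetical. It computes the index the usual CUDA way, `blockIdx.x * blockDim.x + threadIdx.x`, which with a single block reduces to the `threadIdx.x` used above; the `i < size` guard is an addition that matters only when the grid has more threads than elements.

```python
import threading


def launch_add_kernel(a, b, grid_dim=1, block_dim=None):
    """CPU emulation of addKernel<<<grid_dim, block_dim>>>(c, a, b): one Python thread per CUDA thread."""
    size = len(a)
    if block_dim is None:
        block_dim = size  # matches addKernel<<<1, size>>>
    c = [0] * size

    def add_kernel(block_idx, thread_idx):
        # Global index: blockIdx.x * blockDim.x + threadIdx.x
        i = block_idx * block_dim + thread_idx
        if i < size:  # guard: the grid may contain more threads than elements
            c[i] = a[i] + b[i]

    threads = [threading.Thread(target=add_kernel, args=(blk, tid))
               for blk in range(grid_dim) for tid in range(block_dim)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()  # like cudaDeviceSynchronize: wait for every thread to finish
    return c


print(launch_add_kernel([1, 2, 3, 4, 5], [10, 20, 30, 40, 50]))  # [11, 22, 33, 44, 55]
```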