negative sampling负采样和nce loss

一、Noise contrastive estimation(NCE)

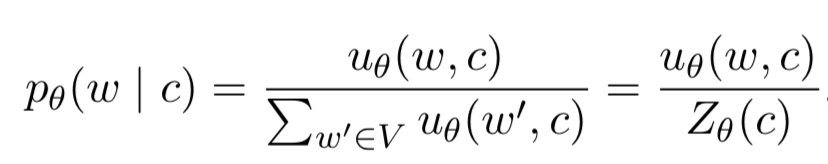

语言模型中,在最后一层往往需要:根据上下文c,在整个语料库V中预测某个单词w的概率,一般采用softmax形式

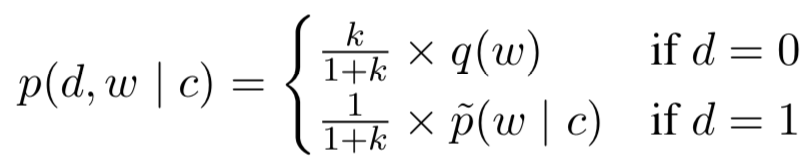

此时NCE就闪亮登场了,为了避免巨大的计算量,NCE的思路是将softmax的参数估计问题 转化成 二分类。二分类两类样本分别是真实样本和噪声样本:正样本是由经验分布![]() 生成的(即真实分布)标签D=0,负样本则是噪声由q(w)生成 对应标签D=1。假设c代表上下文context,从噪声分布中提取k个噪声样本,在总样本(真实样本+噪声样本)中w代表预测的目标词。 那么(d,w)的联合概率分布如下:

生成的(即真实分布)标签D=0,负样本则是噪声由q(w)生成 对应标签D=1。假设c代表上下文context,从噪声分布中提取k个噪声样本,在总样本(真实样本+噪声样本)中w代表预测的目标词。 那么(d,w)的联合概率分布如下:

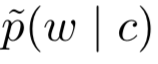

Tips:P指的是正负样本的整体分布,这与之前的正样本的经验分布

不同

不同

继续根据条件联合概率公式 可以得出:p(d=0/w,c) = p(d=0,w/c) / p(w/c)

p(d=1/w,c)类似

即下面公式:

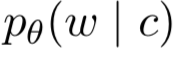

NCE利用模型分布 来代替经验分布

来代替经验分布  ,到此就与最开始讲的softmax联系起来了,通过最大化likelihood来得到最优的参数theta。但是此处还是没有解决问题,因为

,到此就与最开始讲的softmax联系起来了,通过最大化likelihood来得到最优的参数theta。但是此处还是没有解决问题,因为 和最开始的公式一样,需要遍历所有V计算partition function Z(c)。

和最开始的公式一样,需要遍历所有V计算partition function Z(c)。

-

NCE接下来提出了两个假设:

- partition function Z(c)不需要遍历V得到,而是通过参数Zc来进行估计

- 由于神经网络具有很多参数,因此可以将Zc设定为一个固定值Zc=1,这种设定对于所有的c都是有效的([Mnih and Teh2012])

根据上述假设,公式可以进一步写成:

二、Negative Sampling

负采样Negative Sampling是NCE的一个变种,概率的定义有所区别:

但是,除了上述情况以外 两个概率公式并不同,即便negative sampling在词向量方面表现优异,但是negative sampling依旧不能具备NCE的特性(如asymptotic consistency guarantees)。

三、nce loss in tensorflow

一、loss计算

经过负采样得到k个负样本后,那么对于每个样本来说,要么是正样本label=1,要么是负样本label=0。将上文中最后的NCE loss的最大化log变为最小化-log,那么NCE的损失函数可以表示为二分类的logistics loss(cross entropy):

-

采用tensorflow中的符号:

- x = logits 表示上文中的u(w,c),也就是最后一层(词w对应的)网络参数与c的词向量乘积

- z = labels 正样本=1,负样本=0 上面的logits和labels都是向量和矩阵,表示所有样本(包含正样本和采样负样本)

那么 损失函数可以表示为:

L = z * -log(sigmoid(x)) + (1 - z) * -log(1 - sigmoid(x))

<code><pre>

z * -log(sigmoid(x)) + (1 - z) * -log(1 - sigmoid(x))

= z * -log(1 / (1 + exp(-x))) + (1 - z) * -log(exp(-x) / (1 + exp(-x)))

= z * log(1 + exp(-x)) + (1 - z) * (-log(exp(-x)) + log(1 + exp(-x)))

= z * log(1 + exp(-x)) + (1 - z) * (x + log(1 + exp(-x))

= (1 - z) * x + log(1 + exp(-x))

= x - x * z + log(1 + exp(-x))

当x<0的时候:

For x < 0, to avoid overflow in exp(-x), we reformulate the above

x - x * z + log(1 + exp(-x))

= log(exp(x)) - x * z + log(1 + exp(-x))

= - x * z + log(1 + exp(x))

因此,将x>0和x<0整合到一起,得到下面公式:

Hence, to ensure stability and avoid overflow, the implementation uses this

equivalent formulation

max(x, 0) - x * z + log(1 + exp(-abs(x)))

</pre></code>

二、计算cross entropy的源码

根据labels和logits计算Loss的代码:https://github.com/tensorflow/tensorflow/blob/r1.13/tensorflow/python/ops/nn_impl.pydef sigmoid_cross_entropy_with_logits( # pylint: disable=invalid-name _sentinel=None, labels=None, logits=None, name=None): """Computes sigmoid cross entropy given `logits`. Args: _sentinel: Used to prevent positional parameters. Internal, do not use. labels: A `Tensor` of the same type and shape as `logits`. logits: A `Tensor` of type `float32` or `float64`. name: A name for the operation (optional). Returns: A `Tensor` of the same shape as `logits` with the componentwise logistic losses. nn_ops._ensure_xent_args("sigmoid_cross_entropy_with_logits", _sentinel, labels, logits) # pylint: enable=protected-access with ops.name_scope(name, "logistic_loss", [logits, labels]) as name: logits = ops.convert_to_tensor(logits, name="logits") labels = ops.convert_to_tensor(labels, name="labels") try: labels.get_shape().merge_with(logits.get_shape()) except ValueError: raise ValueError("logits and labels must have the same shape (%s vs %s)" % (logits.get_shape(), labels.get_shape())) # The logistic loss formula from above is # x - x * z + log(1 + exp(-x)) # For x < 0, a more numerically stable formula is # -x * z + log(1 + exp(x)) # Note that these two expressions can be combined into the following: # max(x, 0) - x * z + log(1 + exp(-abs(x))) # To allow computing gradients at zero, we define custom versions of max and # abs functions. zeros = array_ops.zeros_like(logits, dtype=logits.dtype) cond = (logits >= zeros) relu_logits = array_ops.where(cond, logits, zeros) neg_abs_logits = array_ops.where(cond, -logits, logits) return math_ops.add( relu_logits - logits * labels, math_ops.log1p(math_ops.exp(neg_abs_logits)), name=name)

三、负采样、计算logits和label

首先根据tf.nn.log_uniform_candidate_sampler进行采样(常用的采样方法),得到num_sampled个负样本: 默认利用log-uniform (Zipfian) distribution进行采样,因此labels需要根据frequency降序从而得到比较好的效果。具体细节可以参考:tf.nn.log_uniform_candidate_sampler

-

接下来就是计算所有样本的logits和labels,有以下几点需要注意:

- logits:最后一层权重矩阵weights 中样本对应的向量*inputs

- label:(1)采样的负样本就是0;(2)默认情况下inputs只对应一个正样本那么label=1,但是如果num_true>0,那么每个正样本的label=1/num_true

def _compute_sampled_logits(weights, biases, labels, inputs, num_sampled, num_classes, num_true=1, sampled_values=None, subtract_log_q=True, remove_accidental_hits=False, partition_strategy="mod", name=None, seed=None): """Helper function for nce_loss and sampled_softmax_loss functions. Computes sampled output training logits and labels suitable for implementing e.g. noise-contrastive estimation (see nce_loss) or sampled softmax (see sampled_softmax_loss). Note: In the case where num_true > 1, we assign to each target class the target probability 1 / num_true so that the target probabilities sum to 1 per-example. Args: weights: A `Tensor` of shape `[num_classes, dim]`, or a list of `Tensor` objects whose concatenation along dimension 0 has shape `[num_classes, dim]`. The (possibly-partitioned) class embeddings. biases: A `Tensor` of shape `[num_classes]`. The (possibly-partitioned) class biases. labels: A `Tensor` of type `int64` and shape `[batch_size, num_true]`. The target classes. Note that this format differs from the `labels` argument of `nn.softmax_cross_entropy_with_logits_v2`. inputs: A `Tensor` of shape `[batch_size, dim]`. The forward activations of the input network. num_sampled: An `int`. The number of classes to randomly sample per batch. num_classes: An `int`. The number of possible classes. num_true: An `int`. The number of target classes per training example. sampled_values: a tuple of (`sampled_candidates`, `true_expected_count`, `sampled_expected_count`) returned by a `*_candidate_sampler` function. (if None, we default to `log_uniform_candidate_sampler`) subtract_log_q: A `bool`. whether to subtract the log expected count of the labels in the sample to get the logits of the true labels. Default is True. Turn off for Negative Sampling. remove_accidental_hits: A `bool`. whether to remove "accidental hits" where a sampled class equals one of the target classes. Default is False. partition_strategy: A string specifying the partitioning strategy, relevant if `len(weights) > 1`. Currently `"div"` and `"mod"` are supported. Default is `"mod"`. See `tf.nn.embedding_lookup` for more details. name: A name for the operation (optional). seed: random seed for candidate sampling. Default to None, which doesn't set the op-level random seed for candidate sampling. Returns: out_logits: `Tensor` object with shape `[batch_size, num_true + num_sampled]`, for passing to either `nn.sigmoid_cross_entropy_with_logits` (NCE) or `nn.softmax_cross_entropy_with_logits_v2` (sampled softmax). out_labels: A Tensor object with the same shape as `out_logits`. """

四、NCE Loss 源码

将上面两部结合到一起,就可以直接计算NCE Loss了:首先计算所有样本的logits和labels(第三步),之后计算cross entropy loss(第二步)。def nce_loss(weights, biases, labels, inputs, num_sampled, num_classes, num_true=1, sampled_values=None, remove_accidental_hits=False, partition_strategy="mod", name="nce_loss"): """Computes and returns the noise-contrastive estimation training loss. See [Noise-contrastive estimation: A new estimation principle for unnormalized statistical models](http://www.jmlr.org/proceedings/papers/v9/gutmann10a/gutmann10a.pdf). Also see our [Candidate Sampling Algorithms Reference](https://www.tensorflow.org/extras/candidate_sampling.pdf) A common use case is to use this method for training, and calculate the full sigmoid loss for evaluation or inference. In this case, you must set `partition_strategy="div"` for the two losses to be consistent, as in the Note: In the case where `num_true` > 1, we assign to each target class the target probability 1 / `num_true` so that the target probabilities sum to 1 per-example. Note: It would be useful to allow a variable number of target classes per example. We hope to provide this functionality in a future release. For now, if you have a variable number of target classes, you can pad them out to a constant number by either repeating them or by padding with an otherwise unused class. Args: weights: A `Tensor` of shape `[num_classes, dim]`, or a list of `Tensor` objects whose concatenation along dimension 0 has shape [num_classes, dim]. The (possibly-partitioned) class embeddings. biases: A `Tensor` of shape `[num_classes]`. The class biases. labels: A `Tensor` of type `int64` and shape `[batch_size, num_true]`. The target classes. inputs: A `Tensor` of shape `[batch_size, dim]`. The forward activations of the input network. num_sampled: An `int`. The number of negative classes to randomly sample per batch. This single sample of negative classes is evaluated for each element in the batch. num_classes: An `int`. The number of possible classes. num_true: An `int`. The number of target classes per training example. sampled_values: a tuple of (`sampled_candidates`, `true_expected_count`, `sampled_expected_count`) returned by a `*_candidate_sampler` function. (if None, we default to `log_uniform_candidate_sampler`) remove_accidental_hits: A `bool`. Whether to remove "accidental hits" where a sampled class equals one of the target classes. If set to `True`, this is a "Sampled Logistic" loss instead of NCE, and we are learning to generate log-odds instead of log probabilities. See our [Candidate Sampling Algorithms Reference] (https://www.tensorflow.org/extras/candidate_sampling.pdf). Default is False. partition_strategy: A string specifying the partitioning strategy, relevant if `len(weights) > 1`. Currently `"div"` and `"mod"` are supported. Default is `"mod"`. See `tf.nn.embedding_lookup` for more details. name: A name for the operation (optional). Returns: A `batch_size` 1-D tensor of per-example NCE losses. """ logits, labels = _compute_sampled_logits( weights=weights, biases=biases, labels=labels, inputs=inputs, num_sampled=num_sampled, num_classes=num_classes, num_true=num_true, sampled_values=sampled_values, subtract_log_q=True, remove_accidental_hits=remove_accidental_hits, partition_strategy=partition_strategy, name=name) sampled_losses = sigmoid_cross_entropy_with_logits( labels=labels, logits=logits, name="sampled_losses") # sampled_losses is batch_size x {true_loss, sampled_losses...} # We sum out true and sampled losses. return _sum_rows(sampled_losses)

参考文献:

[Mnih and Teh2012] Andriy Mnih and Yee Whye Teh. 2012. A fast and simple algorithm for training neural proba- bilistic language models. In Proc. ICML.

Notes on Noise Contrastive Estimation and Negative Sampling(https://arxiv.org/pdf/1410.8251.pdf)