@Output Size Calculation for Conv and Pooling Layers in Caffe Networks

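- From an analysis of the Caffe framework's source code and the actual layer outputs of a network, the output-size formulas for the Conv and Pooling layers are as follows: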

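Conv layer

$$
H_{out} = \left\lfloor \frac{H_{in} + 2p - \left(d\,(k - 1) + 1\right)}{s} \right\rfloor + 1
$$

Pooling layer

$$
H_{out} = \left\lceil \frac{H_{in} + 2p - k}{s} \right\rceil + 1
$$

Here $k$ is `kernel_size`, $p$ is `pad`, $s$ is `stride` and $d$ is `dilation` (Conv only). The width $W_{out}$ is computed the same way.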

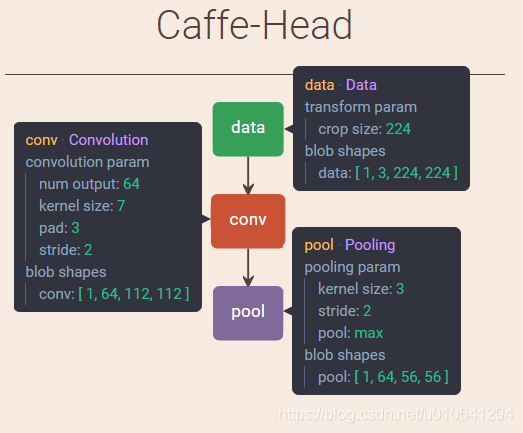

Demonstration

Caffe network visualization (paste the following code into the editor pane on the left and press Shift+Enter)

```
# Enter your network definition here.
# Use Shift+Enter to update the visualization.
name: "Caffe-Head"
layer {
  name: "data"
  type: "Data"
  top: "data"
  transform_param {
    crop_size: 224
  }
}
layer {
  bottom: "data"
  top: "conv"
  name: "conv"
  type: "Convolution"
  convolution_param {
    num_output: 64
    kernel_size: 7
    pad: 3
    stride: 2
  }
}
layer {
  bottom: "conv"
  top: "pool"
  name: "pool"
  type: "Pooling"
  pooling_param {
    kernel_size: 3
    stride: 2
    pool: MAX
  }
}
```
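Working through this network by hand shows where the two formulas diverge. This assumes the 224 × 224 crop is the spatial input size and that the unset parameters keep their Caffe defaults (dilation 1 for the convolution, pad 0 for the pooling):

$$
H_{conv} = \left\lfloor \frac{224 + 2 \times 3 - 7}{2} \right\rfloor + 1 = \lfloor 111.5 \rfloor + 1 = 112
$$

$$
H_{pool} = \left\lceil \frac{112 + 2 \times 0 - 3}{2} \right\rceil + 1 = \lceil 54.5 \rceil + 1 = 56
$$

So the conv output should be 112 × 112 (with 64 channels) and the pool output 56 × 56. Had the pooling layer rounded down like the Conv layer, it would have produced 55 × 55 instead.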

Source code analysis

Conv layer

```cpp
// ./src/caffe/layers/conv_layer.cpp
template <typename Dtype>
void ConvolutionLayer<Dtype>::compute_output_shape() {
  const int* kernel_shape_data = this->kernel_shape_.cpu_data();
  const int* stride_data = this->stride_.cpu_data();
  const int* pad_data = this->pad_.cpu_data();
  const int* dilation_data = this->dilation_.cpu_data();
  this->output_shape_.clear();
  for (int i = 0; i < this->num_spatial_axes_; ++i) {
    // i + 1 to skip channel axis
    const int input_dim = this->input_shape(i + 1);
    const int kernel_extent = dilation_data[i] * (kernel_shape_data[i] - 1) + 1;
    /* Compute the output size: integer division rounds down (floor) */
    const int output_dim = (input_dim + 2 * pad_data[i] - kernel_extent) / stride_data[i] + 1;
    this->output_shape_.push_back(output_dim);
  }
}
// ...
```
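To check the Conv arithmetic outside Caffe, the following is a minimal standalone sketch that reproduces the `output_dim` line above for a single spatial axis. The function name `conv_output_dim` and its parameter order are hypothetical, not part of Caffe's API; `dilation` defaults to 1, as it does in Caffe.

```cpp
#include <cassert>

// Hypothetical helper (not a Caffe function): mirrors the arithmetic of
// ConvolutionLayer<Dtype>::compute_output_shape() for one spatial axis.
int conv_output_dim(int input_dim, int kernel, int pad, int stride,
                    int dilation = 1) {
  assert(stride > 0);
  // Effective kernel size once dilation spreads the taps apart.
  const int kernel_extent = dilation * (kernel - 1) + 1;
  // C++ integer division truncates; for a non-negative numerator this is floor.
  return (input_dim + 2 * pad - kernel_extent) / stride + 1;
}
```

For the conv layer of the example network, `conv_output_dim(224, 7, 3, 2)` returns 112. Plain integer division is kept on purpose: it matches the Caffe line exactly, and it equals floor as long as the numerator is non-negative, which holds whenever the kernel fits inside the padded input.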

Pooling layer

`ceil`: the same Ceil (round-up) operation as in the formula above.

```cpp
// ./src/caffe/layers/pooling_layer.cpp
template <typename Dtype>
void PoolingLayer<Dtype>::Reshape(const vector<Blob<Dtype>*>& bottom, const vector<Blob<Dtype>*>& top) {
  /* Check the number of axes of the input blob: an image blob has the 4 axes (num, channels, height, width) */
  CHECK_EQ(4, bottom[0]->num_axes()) << "Input must have 4 axes, " << "corresponding to (num, channels, height, width)";
  channels_ = bottom[0]->channels();  /* number of input channels */
  height_ = bottom[0]->height();      /* input height */
  width_ = bottom[0]->width();        /* input width */
  if (global_pooling_) {
    kernel_h_ = bottom[0]->height();
    kernel_w_ = bottom[0]->width();
  }
  /* Compute the pooled height and width: rounds up (ceil) */
  pooled_height_ = static_cast<int>(ceil(static_cast<float>(height_ + 2 * pad_h_ - kernel_h_) / stride_h_)) + 1;
  pooled_width_ = static_cast<int>(ceil(static_cast<float>(width_ + 2 * pad_w_ - kernel_w_) / stride_w_)) + 1;
  // ...
}
```
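The Pooling formula can be sketched the same way. `pool_output_dim` is again a hypothetical helper rather than a Caffe function, and it deliberately covers only the two `pooled_*` lines shown in the excerpt, not the code elided by `...`.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical helper (not a Caffe function): mirrors the pooled_height_ /
// pooled_width_ formula from PoolingLayer<Dtype>::Reshape for one axis.
int pool_output_dim(int input_dim, int kernel, int pad, int stride) {
  assert(stride > 0);
  // Float division followed by ceil rounds a partial last window up.
  return static_cast<int>(std::ceil(
             static_cast<float>(input_dim + 2 * pad - kernel) / stride)) + 1;
}
```

For the example network's pool layer, `pool_output_dim(112, 3, 0, 2)` returns 56. With global pooling the kernel equals the input size, so for a 7 × 7 input `pool_output_dim(7, 7, 0, 1)` returns 1.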

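Summary

- The Conv and Pooling layers use different output-size formulas: Conv rounds down (integer division, i.e. floor), while Pooling rounds up (ceil).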
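The difference between the two rounding rules can be isolated with integer-only arithmetic. The two helpers below are hypothetical illustrations, not Caffe code; they assume dilation 1, `stride > 0` and a non-negative numerator, and compute the ceiling through the identity ⌈a / b⌉ = ⌊(a + b − 1) / b⌋.

```cpp
#include <cassert>

// Hypothetical helper: Conv-style rounding (floor), dilation assumed to be 1.
int floor_output_dim(int input_dim, int kernel, int pad, int stride) {
  assert(stride > 0 && input_dim + 2 * pad - kernel >= 0);
  return (input_dim + 2 * pad - kernel) / stride + 1;
}

// Hypothetical helper: Pooling-style rounding (ceil) without floating point.
int ceil_output_dim(int input_dim, int kernel, int pad, int stride) {
  assert(stride > 0 && input_dim + 2 * pad - kernel >= 0);
  return (input_dim + 2 * pad - kernel + stride - 1) / stride + 1;
}
```

With the example network's pool parameters, `floor_output_dim(112, 3, 0, 2)` gives 55 while `ceil_output_dim(112, 3, 0, 2)` gives 56. The two rules agree whenever `input_dim + 2 * pad - kernel` is divisible by `stride` and differ by exactly one otherwise.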