Copyright notice: This is an original article by the blogger and may not be reproduced without the blogger's permission. https://blog.csdn.net/z_feng12489/article/details/89182394

3. Advanced Tensor Operations

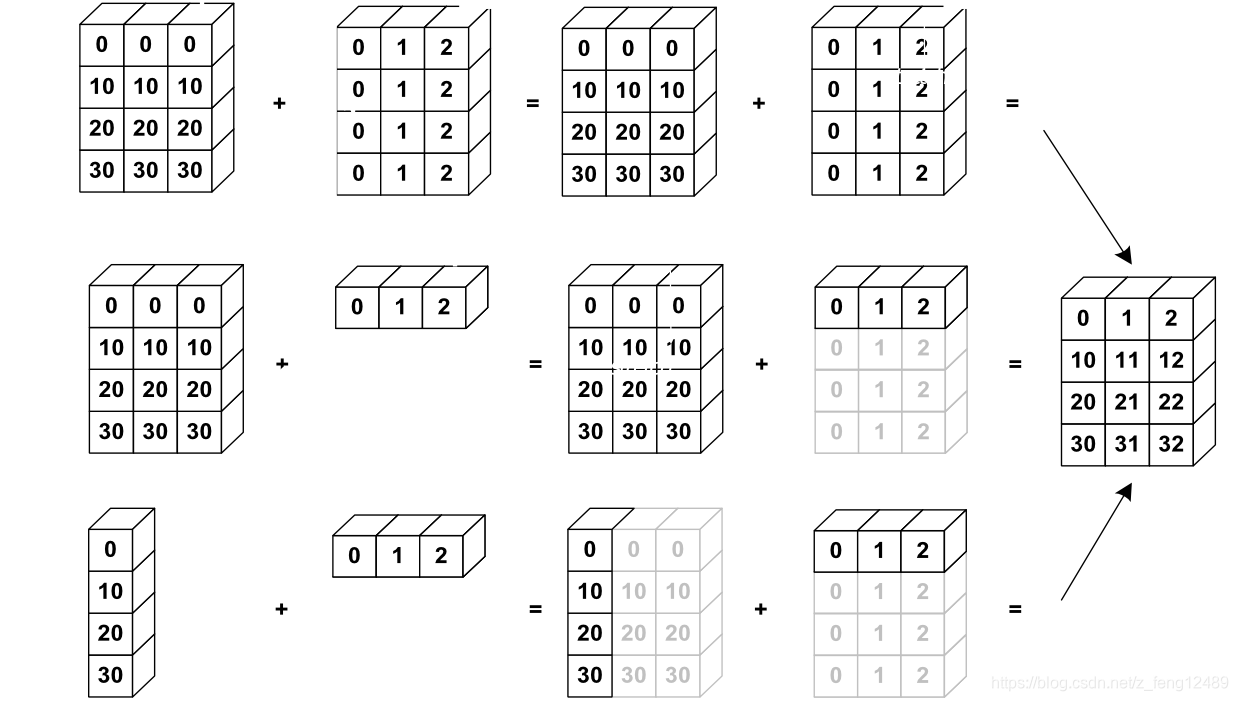

Broadcasting

Broadcast (expand + without copying) [broadcasting mechanism]

Key steps:

- Insert a dim of size 1 at the front (unsqueeze)

- Expand dims of size 1 to the matching size

- Feature maps: [4, 32, 14, 14]

- Bias: [32, 1, 1] => [1, 32, 1, 1] => [4, 32, 14, 14]
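The two steps above can be sketched in PyTorch as follows. This is a minimal illustration with random values, assuming the [4, 32, 14, 14] feature map and [32, 1, 1] bias from the example: it performs unsqueeze and expand by hand and checks that plain `+` (implicit broadcasting) gives the same result.

```python
import torch

# Feature maps [batch, channels, height, width] and a per-channel bias (random values).
feature = torch.rand(4, 32, 14, 14)
bias = torch.rand(32, 1, 1)

# Step 1: insert a leading dim of size 1 -> [1, 32, 1, 1]
bias4d = bias.unsqueeze(0)
# Step 2: expand every size-1 dim to the target size -> [4, 32, 14, 14] (a view, no copy)
bias_expanded = bias4d.expand(4, 32, 14, 14)

manual = feature + bias_expanded      # explicit unsqueeze + expand
implicit = feature + bias             # broadcasting performs both steps automatically

print(bias4d.shape)                   # torch.Size([1, 32, 1, 1])
print(implicit.shape)                 # torch.Size([4, 32, 14, 14])
print(torch.equal(manual, implicit))  # True
```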

Why it exists:

- For practical needs

- [class, students, scores]

- Add a bias for every student: +5 score

- Without broadcasting: [4,32,8] + [4,32,8]

- With broadcasting: [4,32,8] + [5.0]

- Memory savings

- [4,32,8] => 1024 elements

- [5.0] => 1 element
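The memory argument can be checked directly. The sketch below (random scores, the +5 bonus from the example) shows that `expand()` produces a view whose strides are 0, so all 1024 positions read the single stored value, while `repeat()` actually copies the data into 1024 elements.

```python
import torch

scores = torch.rand(4, 32, 8)          # [classes, students, scores]
bonus = torch.tensor([5.0])            # a single value: 1 stored element

# Broadcasting adds 5 to every score without building a [4, 32, 8] tensor of 5s.
curved = scores + bonus

# expand() returns a view: stride 0 means every position reads the same stored element.
view = bonus.expand(4, 32, 8)
print(view.stride())                         # (0, 0, 0)
print(view.data_ptr() == bonus.data_ptr())   # True: no new storage

# repeat() really copies the data: 4 * 32 * 8 = 1024 elements.
copy = bonus.repeat(4, 32, 8)
print(copy.numel())                          # 1024
```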

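When it applies (dims are compared starting from the last one):

- If the current dim is 1, expand it to the same size

- If one tensor has no such dim, insert a dim of size 1 and expand it to the same size

- Otherwise, NOT broadcastable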
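The rules above can be written as a small shape calculator. `broadcast_shape` is a hypothetical helper written only for illustration (plain Python, no PyTorch needed); recent PyTorch versions ship `torch.broadcast_shapes` for the same purpose.

```python
def broadcast_shape(shape_a, shape_b):
    """Hypothetical helper: the broadcast result shape of two shapes, or ValueError."""
    ndim = max(len(shape_a), len(shape_b))
    result = []
    # Walk from the last dim backwards; a missing leading dim counts as size 1.
    for i in range(1, ndim + 1):
        da = shape_a[-i] if i <= len(shape_a) else 1
        db = shape_b[-i] if i <= len(shape_b) else 1
        if da == db or db == 1:
            result.append(da)      # equal sizes, or b's size-1 dim is expanded
        elif da == 1:
            result.append(db)      # a's size-1 dim is expanded
        else:
            raise ValueError(f"not broadcastable at dim -{i}: {da} vs {db}")
    return tuple(reversed(result))


print(broadcast_shape((4, 32, 14, 14), (1, 32, 1, 1)))  # (4, 32, 14, 14)
print(broadcast_shape((4, 32, 14, 14), (14, 14)))       # (4, 32, 14, 14)
print(broadcast_shape((1, 3), (3, 1)))                  # (3, 3)

try:
    broadcast_shape((4, 32, 14, 14), (2, 32, 14, 14))
except ValueError as err:
    print(err)   # not broadcastable at dim -4: 4 vs 2
```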

Examples

- Case 1:

- [4,32,14,14]

- [1,32,1,1] => [4,32,14,14]

- Case 2:

- [4,32,14,14]

- [14,14] => [1,1,14,14] => [4,32,14,14]

- Case 3:

- [4,32,14,14]

- [2,32,14,14] => error (dim 0 is 4 vs 2, and neither is 1)

import torch

a = torch.rand(1,3)

b = torch.rand(3,1)

(a+b).shape #torch.Size([3, 3])

a = torch.rand(4, 32, 14, 14)

b = torch.rand(1, 32, 1, 1)

(a+b).shape #torch.Size([4, 32, 14, 14])

a = torch.rand(4, 32, 14, 14)

b = torch.rand(14, 14)

(a+b).shape #torch.Size([4, 32, 14, 14])

a = torch.rand(4, 32, 14, 14)

b = torch.rand(2, 32, 14, 14)

(a+b).shape #RuntimeError: dim 0 is 4 vs 2, and neither is 1

(a+b[0]).shape #torch.Size([4, 32, 14, 14]) pick one slice manually: b[0] has shape [32, 14, 14]

a = torch.rand(2, 3, 6, 6)

b = torch.rand(1, 3, 6, 1) #adds the same value to every pixel of a given row in each channel

(a+b).shape #torch.Size([2, 3, 6, 6])

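Splitting and merging tensors

Merge or split

- Cat: concatenates along an existing dim without adding a new one; sizes along the cat dim may differ, but all other dims must match.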

a = torch.rand(4, 32, 8) #[classes, students, scores]

b = torch.rand(5, 32, 8)

torch.cat([a, b],dim=0).shape #torch.Size([9, 32, 8])

- Stack: creates a new dim; all input tensors must have exactly the same shape.

a = torch.rand(32, 8) #[students, scores]

b = torch.rand(32, 8)

c = torch.rand(32, 8)

torch.stack([a, b, c],dim=0).shape #torch.Size([3, 32, 8])
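To make the cat/stack difference concrete, the sketch below (random data, a hypothetical class of 30 students) shows that `stack` is equivalent to unsqueezing each input and then calling `cat`, and that only `cat` tolerates a size mismatch along the joined dim.

```python
import torch

a = torch.rand(32, 8)   # [students, scores] for class A
b = torch.rand(32, 8)   # class B

stacked = torch.stack([a, b], dim=0)                           # [2, 32, 8]
via_cat = torch.cat([a.unsqueeze(0), b.unsqueeze(0)], dim=0)   # same thing by hand
print(torch.equal(stacked, via_cat))   # True

c = torch.rand(30, 8)                   # a class with only 30 students
print(torch.cat([a, c], dim=0).shape)   # torch.Size([62, 8]): other dims match, so cat works

stack_failed = False
try:
    torch.stack([a, c], dim=0)          # stack needs identical shapes
except RuntimeError as err:
    stack_failed = True
    print("stack failed:", err)
```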

- Split: splits by length (one length for every piece, or a list of lengths).

a = torch.rand(4, 32, 8) #[classes, students, scores]

aa, bb, cc = a.split([1,2,1], dim=0)

aaa, bbb = a.split(2, dim=0)

aa.shape #torch.Size([1, 32, 8])

bb.shape #torch.Size([2, 32, 8])

cc.shape #torch.Size([1, 32, 8])

aaa.shape #torch.Size([2, 32, 8])

bbb.shape #torch.Size([2, 32, 8])

- Chunk: splits into a given number of pieces.

a = torch.rand(6, 32, 8) #[classes, students, scores]

aa, bb = a.chunk(2, dim=0) #2 pieces

cc, dd, ee = a.split(2, dim=0) #for comparison: split(2) gives pieces of length 2

aa.shape #torch.Size([3, 32, 8])

bb.shape #torch.Size([3, 32, 8])

cc.shape #torch.Size([2, 32, 8])

dd.shape #torch.Size([2, 32, 8])

ee.shape #torch.Size([2, 32, 8])
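When the length does not divide evenly, the two functions behave differently. This small sketch uses a 10-element tensor: `split(3)` yields pieces of length 3 plus a remainder, while `chunk(3)` yields at most 3 pieces of length ceil(10 / 3) = 4. Both return views, so writing into a piece modifies the original tensor.

```python
import torch

x = torch.arange(10)        # 10 elements along dim 0

by_len = x.split(3)         # pieces of length 3; the last piece takes the remainder
by_num = x.chunk(3)         # at most 3 pieces, each of length ceil(10 / 3) = 4

print([p.numel() for p in by_len])   # [3, 3, 3, 1]
print([p.numel() for p in by_num])   # [4, 4, 2]

# Both return views into x, so writing to a piece writes to x.
by_len[0][0] = 100
print(x[0])                          # tensor(100)
```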

Tensor arithmetic

Basic matrix operations on tensors

- Add/subtract/multiply/divide

a = torch.rand(4,3)

b = torch.rand(3)

torch.all(torch.eq(a+b, torch.add(a,b))) #tensor(True) in recent versions (older versions print tensor(1, dtype=torch.uint8))

a-b #torch.sub

a*b #torch.mul

a/b #torch.div

a//b # floor division

- Matmul

Matrix multiplication is applied to the last two dims; the remaining (batch) dims follow the broadcasting rules.

a = torch.rand(4,3)

b = torch.rand(3,8)

torch.mm(a, b) #only for 2d

(a @ b).shape #torch.matmul torch.Size([4, 8])
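The batch-dimension broadcasting mentioned above can be sketched with 4-D tensors. The shapes here are illustrative assumptions: only the last two dims take part in the matrix product, and a size-1 channel dim in `b2` is broadcast across the 3 channels of `a`.

```python
import torch

# Batched matmul: [batch, channels, 28, 64] @ [batch, channels, 64, 32]
a = torch.rand(4, 3, 28, 64)
b = torch.rand(4, 3, 64, 32)
print(torch.matmul(a, b).shape)      # torch.Size([4, 3, 28, 32])

# Leading dims broadcast: b2 has 1 channel, shared by all 3 channels of a.
b2 = torch.rand(4, 1, 64, 32)
print((a @ b2).shape)                # torch.Size([4, 3, 28, 32])

# Same result as expanding b2 explicitly.
print(torch.allclose(a @ b2, a @ b2.expand(4, 3, 64, 32)))  # True
```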

- Pow

a**2 #torch.pow

- Sqrt/rsqrt/exp/log

Square root / reciprocal of the square root / exponential (e to the power x) / natural logarithm (base e)

a.sqrt() #a**0.5

a.rsqrt()

torch.exp(a) #e**a

torch.log(a) #natural logarithm ln(a)
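The relationships between these functions can be checked numerically. This sketch assumes strictly positive inputs (shifted by 0.1) so that `sqrt`, `rsqrt`, and `log` are all defined.

```python
import torch

a = torch.rand(4, 3) + 0.1   # keep values positive so sqrt/log are defined

print(torch.allclose(a.sqrt(), a ** 0.5))          # True
print(torch.allclose(a.rsqrt(), 1 / a.sqrt()))     # True: rsqrt is 1 / sqrt
print(torch.allclose(torch.log(torch.exp(a)), a))  # True: log undoes exp
```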

- Round: rounding operations

a = torch.tensor(3.14) #tensor(3.14)

a.floor(), a.ceil(), a.round() #tensor(3.) tensor(4.) tensor(3.)

a.trunc() #tensor(3.)

a.frac() #tensor(0.1400)

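- Clamp: clipping values

Use case: gradient clipping

Vanishing gradients (gradients are very small, e.g. < 0.x) and exploding gradients (gradients are very large, e.g. > x00)

Monitor them by printing the L2 norm of the gradient (W.grad.norm(2))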

grad = torch.rand(3,4)*15

grad.min() #min number

grad.max() #max number

grad.median() #median number

grad.clamp(10) #minimum is 10: every value below 10 becomes 10

grad.clamp(0, 10) #every value is clipped into [0, 10]
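Applied to an actual gradient, clamping might look like the sketch below. The loss is a contrived assumption chosen so that every gradient entry is exactly 100, which makes the L2 norm easy to verify: sqrt(12 * 100^2) ≈ 346.41. Note that PyTorch also offers `torch.nn.utils.clip_grad_norm_`, which is a different technique: it rescales the whole gradient by its norm instead of clamping each entry.

```python
import torch

W = torch.randn(3, 4, requires_grad=True)
loss = (W * 100).sum()          # contrived loss: d(loss)/dW is 100 everywhere
loss.backward()

norm_before = W.grad.norm(2)    # sqrt(12 * 100**2), about 346.41
print(norm_before)              # tensor(346.4102)

W.grad.clamp_(-10, 10)          # clamp every gradient entry into [-10, 10], in place
print(W.grad.max())             # tensor(10.)
```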

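Tensor statistics

- norm

Norm (the p-norm of a vector or matrix), not to be confused with normalize (normalization, e.g. batch norm)

a = torch.full([8], 1.) #float fill value: norm requires a floating-point tensor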

b = a.reshape(2, 4)

c = b.reshape(2, 2, 2)

a #tensor([1., 1., 1., 1., 1., 1., 1., 1.])

b #tensor([[1., 1., 1., 1.], [1., 1., 1., 1.]])

a.norm(1), b.norm(1), c.norm(1) #tensor(8.)

a.norm(2), b.norm(2), c.norm(2) #tensor(2.8284)

#two parameters: the norm order p and the dimension dim

a.norm(1, dim=0) #tensor(8.)

b.norm(1, dim=1) #tensor([4., 4.])

c.norm(2, dim=2) #tensor([[1.4142, 1.4142], [1.4142, 1.4142]])
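The p-norm computed above is (sum of |x|^p)^(1/p). The sketch below spells out that formula with a hypothetical helper `p_norm` and compares it with the built-in `norm` on a small hand-picked tensor, both over all elements and along `dim=1`.

```python
import torch

x = torch.tensor([[3.0, -4.0], [1.0, 2.0]])

def p_norm(t, p, dim=None):
    """Hypothetical helper: (sum |t|^p) ** (1/p), over all elements or along dim."""
    s = t.abs().pow(p)
    s = s.sum() if dim is None else s.sum(dim=dim)
    return s.pow(1.0 / p)

print(p_norm(x, 1), x.norm(1))                 # both tensor(10.)
print(p_norm(x, 2, dim=1), x.norm(2, dim=1))   # both tensor([5.0000, 2.2361])
```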

- max, min, mean, sum, prod (product of all elements)

a = torch.arange(8).reshape(2,4).float()

a.min() #tensor(0.)

a.max() #tensor(7.)

a.mean() #tensor(3.5000)

a.mean(1) #tensor([1.5000, 5.5000])

a.sum() #tensor(28.)

a.prod() #tensor(0.)

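- argmin, argmax (parameters dim, keepdim)

a = torch.randn(4, 10) #4 images, one score for each digit 0-9

a.argmin(), a.argmax() #without dim, the tensor is flattened and a flat index is returned

a.argmax(1) #the most likely digit for each image, shape [4]

a.argmax(1, keepdim=True) #the same, but keeps the dim, shape [4, 1]

a.max(1) #returns (values, indices): the highest score and its digit for each image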
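With random scores the outputs are hard to check, so this sketch uses hand-picked scores for 2 hypothetical images over 4 classes. It shows how a flat `argmax` index maps back to a (row, column) position, how `keepdim` changes the shape, and that `max(dim)` returns values and indices together.

```python
import torch

# Hand-picked scores for 2 hypothetical images over 4 classes.
a = torch.tensor([[0.1, 0.7, 0.2, 0.0],
                  [0.3, 0.1, 0.1, 0.5]])

flat = a.argmax()                           # no dim: index into the flattened tensor
row, col = divmod(flat.item(), a.size(1))   # recover the 2-D position
print(flat, (row, col))                     # tensor(1) (0, 1)

print(a.argmax(dim=1))                      # tensor([1, 3]), shape [2]
print(a.argmax(dim=1, keepdim=True).shape)  # torch.Size([2, 1])

values, indices = a.max(dim=1)              # highest score and its class per image
print(values, indices)                      # tensor([0.7000, 0.5000]) tensor([1, 3])
```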

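- kthvalue, topk (return more data than max)

a = torch.randn(4, 10) #4 images, one score for each digit 0-9

a.topk(2, dim=1, largest=True) #the 2 largest scores per image and their indices; largest=False gives the k smallest

a.kthvalue(10, dim=1) #the 10th smallest score and its index per image (with 10 scores per row, this is the largest)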
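A deterministic version of the same calls, on hand-picked scores for 2 hypothetical images over 4 classes. It also checks the remark above: when k equals the row length, `kthvalue` returns the row maximum.

```python
import torch

a = torch.tensor([[0.1, 0.7, 0.2, 0.0],
                  [0.3, 0.1, 0.9, 0.5]])

vals, idx = a.topk(2, dim=1)          # 2 largest per row, largest first
# vals: [[0.7, 0.2], [0.9, 0.5]], idx: [[1, 2], [2, 3]]

small_vals, _ = a.topk(2, dim=1, largest=False)   # the 2 smallest per row

kv, ki = a.kthvalue(2, dim=1)         # 2nd smallest per row
print(kv, ki)                         # tensor([0.1000, 0.3000]) tensor([0, 0])

# With k equal to the row length, kthvalue returns the row maximum.
print(torch.equal(a.kthvalue(4, dim=1).values, a.max(dim=1).values))  # True
```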

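- compare (comparison operations)

>, <, >=, <=, !=, ==

torch.eq() supports broadcasting and returns an element-wise 0/1 result (bool in recent versions) of the broadcast shape

torch.equal() compares every value and returns True only if both tensors have the same shape and all values are equal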
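The contrast between the two functions in a small sketch with hand-picked integer tensors: `torch.eq` broadcasts and returns a tensor, while `torch.equal` returns a single Python bool and does not broadcast.

```python
import torch

a = torch.tensor([[1, 2], [3, 4]])
b = torch.tensor([1, 4])           # broadcast against each row of a

print(torch.eq(a, b))              # element-wise, shape [2, 2]: [[True, False], [False, True]]
print(torch.equal(a, a.clone()))   # True: same shape, same values
print(torch.equal(a, b))           # False: shapes differ, no broadcasting
```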

Advanced tensor operations

- where (selective copy on the GPU)

'''

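torch.where(condition, x, y) --> Tensor: takes x where condition is true, y otherwise

The same logic could be written with a for loop, but that runs on the CPU and is hard to parallelize

condition is a 0/1 (bool) tensor; condition, x and y must be broadcastable to a common shape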

'''

a = torch.rand(2, 2)

b = torch.ones(2, 2)

c = torch.zeros(2, 2)

torch.where(a>0.5, b, c) #1 where a > 0.5, 0 elsewhere
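To see what `where` replaces, this sketch runs the same selection with hand-picked values both ways: the vectorized `torch.where` call and an element-by-element Python loop (slow, shown only for comparison). The last line shows that x and y may also broadcast, here with a 0-d tensor as the "else" value.

```python
import torch

a = torch.tensor([[0.2, 0.8], [0.6, 0.1]])
x = torch.ones(2, 2)
y = torch.zeros(2, 2)

out = torch.where(a > 0.5, x, y)     # [[0., 1.], [1., 0.]]

# Equivalent Python loop, element by element (for comparison only).
loop = torch.empty(2, 2)
for i in range(2):
    for j in range(2):
        loop[i, j] = x[i, j] if a[i, j] > 0.5 else y[i, j]
print(torch.equal(out, loop))        # True

# x and y may broadcast: a 0-d tensor as the "else" value.
kept = torch.where(a > 0.5, a, torch.tensor(0.0))   # [[0.0, 0.8], [0.6, 0.0]]
```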

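- Gather (table lookup on the GPU)

'''

torch.gather(input, dim, index, out=None) --> Tensor: table lookup

out[i][j][k] = input[index[i][j][k]][j][k]  # dim=0

out[i][j][k] = input[i][index[i][j][k]][k]  # dim=1

out[i][j][k] = input[i][j][index[i][j][k]]  # dim=2

index has the same number of dims as input, and out has the same shape as index

'''

'''

Use case: gather as a lookup table that maps local indices to global labels

'''

prob = torch.rand(4, 10) #4 images, 10 probability values each

idx = prob.topk(3, dim=1)[1] #indices of the 3 most probable classes per image, shape [4, 3]

label = torch.arange(10)+100 #global labels 100..109

torch.gather(label.expand(4, 10), dim=1, index=idx) #shape [4, 3]

# for each of the 4 images, look up the labels of its 3 most probable classes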
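A smaller, deterministic version of the label lookup, with hand-picked probabilities for 2 hypothetical images over 3 classes. It also rebuilds the result with explicit loops following the dim=1 rule `out[i][j] = input[i][index[i][j]]`, to confirm that `gather` does exactly that.

```python
import torch

prob = torch.tensor([[0.1, 0.6, 0.3],
                     [0.5, 0.2, 0.3]])   # 2 hypothetical images, 3 classes
label = torch.tensor([100, 101, 102])    # global label for each class index

idx = prob.topk(2, dim=1)[1]             # local indices of the 2 most likely classes
# idx: [[1, 2], [0, 2]]
inp = label.expand(2, 3)
out = torch.gather(inp, dim=1, index=idx)
# out: [[101, 102], [100, 102]]

# dim=1 rule, spelled out with loops: out[i][j] = input[i][index[i][j]]
manual = torch.tensor([[inp[i, idx[i, j]].item() for j in range(idx.size(1))]
                       for i in range(idx.size(0))])
print(torch.equal(out, manual))          # True
```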