版权声明:本文为博主原创文章,未经博主允许不得转载。 https://blog.csdn.net/baidu_27643275/article/details/88695396

network

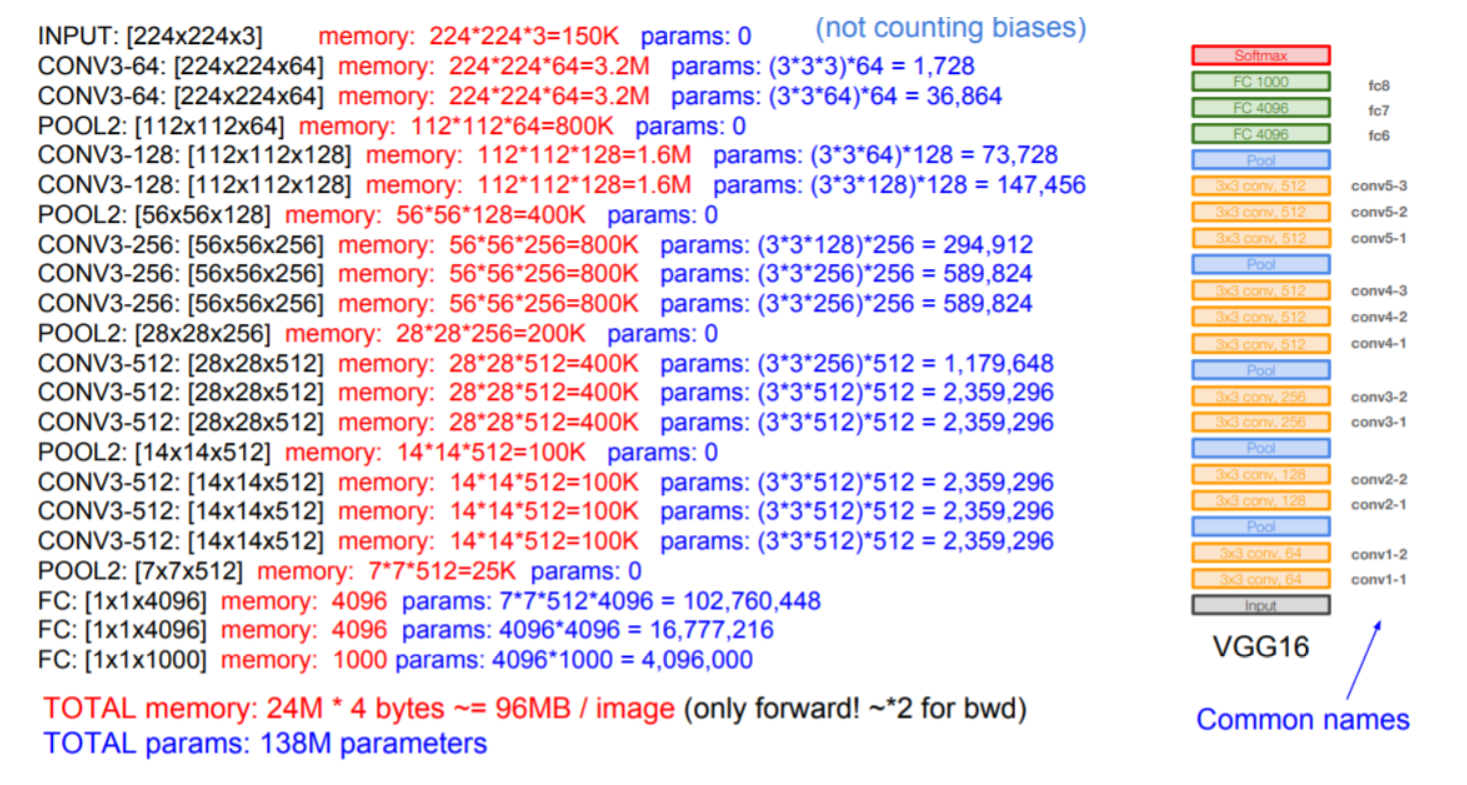

模型参数:

https://github.com/tensorflow/models/tree/master/research/slim

slim实现

from __future__ import absolute_import

from __future__ import division

from __future__ import print_function

import tensorflow as tf

import tensorflow.contrib.slim as slim

def vgg_16(inputs,

num_classes=1000,

is_training=True,

dropout_keep_prob=0.5,

spatial_squeeze=True,

scope='vgg_16',

fc_conv_padding='VALID',

global_pool=False):

"""Oxford Net VGG 16-Layers version D Example.

Note: All the fully_connected layers have been transformed to conv2d layers.

To use in classification mode, resize input to 224x224.

Args:

inputs: a tensor of size [batch_size, height, width, channels].

spatial_squeeze: whether or not should squeeze the spatial dimensions of the outputs. Useful to remove unnecessary dimensions for classification.

global_pool: Optional boolean flag. If True, the input to the classification layer is avgpooled to size 1x1, for any input size. (This is not part of the original VGG architecture.)

Returns:

end_points: a dict of tensors with intermediate activations.

"""

with tf.variable_scope(scope, 'vgg_16', [inputs]) as sc:

end_points_collection = sc.original_name_scope + '_end_points'

# Collect outputs for conv2d, fully_connected and max_pool2d.

with slim.arg_scope([slim.conv2d, slim.fully_connected, slim.max_pool2d],

outputs_collections=end_points_collection):

net = slim.repeat(inputs, 2, slim.conv2d, 64, [3, 3], scope='conv1')

net = slim.max_pool2d(net, [2, 2], scope='pool1')

net = slim.repeat(net, 2, slim.conv2d, 128, [3, 3], scope='conv2')

net = slim.max_pool2d(net, [2, 2], scope='pool2')

net = slim.repeat(net, 3, slim.conv2d, 256, [3, 3], scope='conv3')

net = slim.max_pool2d(net, [2, 2], scope='pool3')

net = slim.repeat(net, 3, slim.conv2d, 512, [3, 3], scope='conv4')

net = slim.max_pool2d(net, [2, 2], scope='pool4')

net = slim.repeat(net, 3, slim.conv2d, 512, [3, 3], scope='conv5')

net = slim.max_pool2d(net, [2, 2], scope='pool5')

# Use conv2d instead of fully_connected layers.

net = slim.conv2d(net, 4096, [7, 7], padding=fc_conv_padding, scope='fc6')

net = slim.dropout(net, dropout_keep_prob, is_training=is_training,

scope='dropout6')

net = slim.conv2d(net, 4096, [1, 1], scope='fc7')

# Convert end_points_collection into a end_point dict.

end_points = slim.utils.convert_collection_to_dict(end_points_collection)

if global_pool:

net = tf.reduce_mean(net, [1, 2], keep_dims=True, name='global_pool')

end_points['global_pool'] = net

if num_classes:

net = slim.dropout(net, dropout_keep_prob, is_training=is_training,

scope='dropout7')

net = slim.conv2d(net, num_classes, [1, 1],

activation_fn=None,

normalizer_fn=None,

scope='fc8')

if spatial_squeeze:

net = tf.squeeze(net, [1, 2], name='fc8/squeezed')

end_points[sc.name + '/fc8'] = net

return net, end_points

vgg_16.default_image_size = 224

with slim.arg_scope([slim.conv2d, slim.fully_connected],

activation_fn=tf.nn.relu,

weights_regularizer=slim.l2_regularizer(weight_decay),

biases_initializer=tf.zeros_initializer()):

with slim.arg_scope([slim.conv2d], padding='SAME') as arg_sc:

return arg_sc

Usage:

with slim.arg_scope(vgg.vgg_arg_scope()):

outputs, end_points = vgg.vgg_16(inputs)