Tutorial Contents

0x00 Overview

- Writing the Dockerfile

- Preparing to verify Hive

- Verifying the Hive installation

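0x01 Writing the Dockerfile

1. Write the Dockerfile

For convenience, I made a copy of the hbase_sny_all directory and named it hive_sny_all.

a. Hive installation steps

Reference article: D007 复制粘贴玩大数据之安装与配置Hive (installing and configuring Hive manually)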

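| Manual installation | Dockerfile installation |
|---|---|
| 1. Place the installation package in the container | 1. Add the installation package (extracted automatically) |
| 2. Extract and configure Hive | 2. Add environment variables |
| 3. Add environment variables | 3. Add configuration files (including environment variables) |
| 4. Start and verify | 4. Start Hive |

- The installation content is the same either way. The table simply reorganizes it according to the steps I wrote.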

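2. Key points when writing the Dockerfile

Compared with the reference file in section "0x01 3. a. Dockerfile参考文件" of D006 复制粘贴玩大数据之Dockerfile安装HBase集群, the differences are as follows.

Steps:

a. Add the installation package (the ADD instruction automatically extracts a local tar archive)

#Add Hive

ADD ./apache-hive-2.3.3-bin.tar.gz /usr/local/

b. Add environment variables (HIVE_HOME, PATH)

#Hive environment variable

ENV HIVE_HOME /usr/local/apache-hive-2.3.3-bin

#Add the following entry to the value of the existing ENV PATH line

$HIVE_HOME/bin:

c. Add the configuration file (append "&& \" to what was previously the last line of the RUN instruction, so that the command continues)

&& \

mv /tmp/hive-env.sh $HIVE_HOME/conf/hive-env.sh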
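The three changes above are easy to miss when editing a long Dockerfile by hand. The following is a minimal sketch of a check that could be run against the edited file. The function name `check_hive_dockerfile` is hypothetical, and the patterns assume the Dockerfile uses the same instruction style as the fragments shown above.

```shell
#!/bin/sh
# Hypothetical helper: confirm a Dockerfile contains the Hive-specific additions.
# Runs in a subshell, so "exit 1" only ends the function, not the caller.
check_hive_dockerfile() (
    dockerfile="$1"
    # a. The Hive tarball is added (ADD extracts local tar archives automatically).
    grep -q '^ADD .*apache-hive-.*\.tar\.gz' "$dockerfile" \
        || { echo "missing ADD for the Hive package"; exit 1; }
    # b. HIVE_HOME is defined and its bin directory appears on the ENV PATH line.
    grep -q '^ENV HIVE_HOME ' "$dockerfile" \
        || { echo "missing ENV HIVE_HOME"; exit 1; }
    grep -q '^ENV PATH .*\$HIVE_HOME/bin' "$dockerfile" \
        || { echo "missing \$HIVE_HOME/bin in ENV PATH"; exit 1; }
    # c. hive-env.sh is moved into the Hive conf directory.
    grep -q 'hive-env\.sh \$HIVE_HOME/conf/' "$dockerfile" \
        || { echo "missing mv of hive-env.sh"; exit 1; }
    echo "ok"
)
```

Calling `check_hive_dockerfile ./Dockerfile` prints `ok` when all four patterns are found, and otherwise names the first missing piece.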

3. Complete Dockerfile for reference

a. Install hadoop, spark, zookeeper, hbase, and hive

FROM ubuntu

MAINTAINER shaonaiyi [email protected]

ENV BUILD_ON 2019-03-11

RUN apt-get update -qqy

RUN apt-get -qqy install vim wget net-tools iputils-ping openssh-server

#Add the JDK
ADD ./jdk-8u161-linux-x64.tar.gz /usr/local/

#Add hadoop
ADD ./hadoop-2.7.5.tar.gz /usr/local/

#Add scala
ADD ./scala-2.11.8.tgz /usr/local/

#Add spark
ADD ./spark-2.2.0-bin-hadoop2.7.tgz /usr/local/

#Add zookeeper
ADD ./zookeeper-3.4.10.tar.gz /usr/local/

#Add HBase
ADD ./hbase-1.2.6-bin.tar.gz /usr/local/

#Add Hive
ADD ./apache-hive-2.3.3-bin.tar.gz /usr/local/

ENV CHECKPOINT 2019-03-11

#JAVA_HOME environment variable
ENV JAVA_HOME /usr/local/jdk1.8.0_161

#hadoop environment variable
ENV HADOOP_HOME /usr/local/hadoop-2.7.5

#scala environment variable
ENV SCALA_HOME /usr/local/scala-2.11.8

#spark environment variable
ENV SPARK_HOME /usr/local/spark-2.2.0-bin-hadoop2.7

#zk environment variable
ENV ZK_HOME /usr/local/zookeeper-3.4.10

#HBase environment variable
ENV HBASE_HOME /usr/local/hbase-1.2.6

#Hive environment variable
ENV HIVE_HOME /usr/local/apache-hive-2.3.3-bin

#Add the variables above to the system PATH (this must stay on a single line)
ENV PATH $HIVE_HOME/bin:$HBASE_HOME/bin:$ZK_HOME/bin:$SCALA_HOME/bin:$SPARK_HOME/bin:$HADOOP_HOME/bin:$JAVA_HOME/bin:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar:$PATH

RUN ssh-keygen -t rsa -f ~/.ssh/id_rsa -P '' && \
cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys && \
chmod 600 ~/.ssh/authorized_keys

#Copy the configuration files to /tmp
COPY config /tmp

#Move the configuration files to their final locations
RUN mv /tmp/ssh_config ~/.ssh/config && \
mv /tmp/profile /etc/profile && \
mv /tmp/masters $SPARK_HOME/conf/masters && \
cp /tmp/slaves $SPARK_HOME/conf/ && \
mv /tmp/spark-defaults.conf $SPARK_HOME/conf/spark-defaults.conf && \
mv /tmp/spark-env.sh $SPARK_HOME/conf/spark-env.sh && \
mv /tmp/hadoop-env.sh $HADOOP_HOME/etc/hadoop/hadoop-env.sh && \
mv /tmp/hdfs-site.xml $HADOOP_HOME/etc/hadoop/hdfs-site.xml && \
mv /tmp/core-site.xml $HADOOP_HOME/etc/hadoop/core-site.xml && \
mv /tmp/yarn-site.xml $HADOOP_HOME/etc/hadoop/yarn-site.xml && \
mv /tmp/mapred-site.xml $HADOOP_HOME/etc/hadoop/mapred-site.xml && \
mv /tmp/master $HADOOP_HOME/etc/hadoop/master && \
mv /tmp/slaves $HADOOP_HOME/etc/hadoop/slaves && \
mv /tmp/start-hadoop.sh ~/start-hadoop.sh && \
mv /tmp/init_zk.sh ~/init_zk.sh && \
mkdir -p /usr/local/hadoop2.7/dfs/data && \
mkdir -p /usr/local/hadoop2.7/dfs/name && \
mkdir -p /usr/local/zookeeper-3.4.10/datadir && \
mkdir -p /usr/local/zookeeper-3.4.10/log && \
mv /tmp/zoo.cfg $ZK_HOME/conf/zoo.cfg && \
mv /tmp/hbase-env.sh $HBASE_HOME/conf/hbase-env.sh && \
mv /tmp/hbase-site.xml $HBASE_HOME/conf/hbase-site.xml && \
mv /tmp/regionservers $HBASE_HOME/conf/regionservers && \
mv /tmp/hive-env.sh $HIVE_HOME/conf/hive-env.sh

RUN echo $JAVA_HOME

#Set the working directory
WORKDIR /root

#Start the sshd service
RUN /etc/init.d/ssh start

#Set the permissions of start-hadoop.sh to 700
RUN chmod 700 start-hadoop.sh

#Set the permissions of init_zk.sh to 700
RUN chmod 700 init_zk.sh

#Change the root password
RUN echo "root:shaonaiyi" | chpasswd

CMD ["/bin/bash"]

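0x02 Preparing to verify Hive

1. Environment and resources

a. Install Docker

See the "0x01 Docker的安装" section of D001.5 Docker入门(超级详细基础篇).

b. Prepare the Hive installation package and place it in the same directory as the Dockerfile

c. Prepare the Hive configuration file (placed in the config directory)

cd /home/shaonaiyi/docker_bigdata/hive_sny_all/config

Configuration file 1: vi hive-env.sh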

# Licensed to the Apache Software Foundation (ASF) under one

# or more contributor license agreements. See the NOTICE file

# distributed with this work for additional information

# regarding copyright ownership. The ASF licenses this file

# to you under the Apache License, Version 2.0 (the

# "License"); you may not use this file except in compliance

# with the License. You may obtain a copy of the License at

#

# http://www.apache.org/licenses/LICENSE-2.0

#

# Unless required by applicable law or agreed to in writing, software

# distributed under the License is distributed on an "AS IS" BASIS,

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

# Set Hive and Hadoop environment variables here. These variables can be used

# to control the execution of Hive. It should be used by admins to configure

# the Hive installation (so that users do not have to set environment variables

# or set command line parameters to get correct behavior).

#

# The hive service being invoked (CLI etc.) is available via the environment

# variable SERVICE

export HADOOP_HOME=/usr/local/hadoop-2.7.5

# Hive Client memory usage can be an issue if a large number of clients

# are running at the same time. The flags below have been useful in

# reducing memory usage:

#

# if [ "$SERVICE" = "cli" ]; then

# if [ -z "$DEBUG" ]; then

# export HADOOP_OPTS="$HADOOP_OPTS -XX:NewRatio=12 -Xms10m -XX:MaxHeapFreeRatio=40 -XX:MinHeapFreeRatio=15 -XX:+UseParNewGC -XX:-UseGCOverheadLimit"

# else

# export HADOOP_OPTS="$HADOOP_OPTS -XX:NewRatio=12 -Xms10m -XX:MaxHeapFreeRatio=40 -XX:MinHeapFreeRatio=15 -XX:-UseGCOverheadLimit"

# fi

# fi

# The heap size of the jvm stared by hive shell script can be controlled via:

#

# export HADOOP_HEAPSIZE=1024

#

# Larger heap size may be required when running queries over large number of files or partitions.

# By default hive shell scripts use a heap size of 256 (MB). Larger heap size would also be

# appropriate for hive server.

# Set HADOOP_HOME to point to a specific hadoop install directory

# HADOOP_HOME=${bin}/../../hadoop

# Hive Configuration Directory can be controlled by:

# export HIVE_CONF_DIR=

# Folder containing extra libraries required for hive compilation/execution can be controlled by:

# export HIVE_AUX_JARS_PATH=

d. Update the environment variable configuration file (placed in the config directory)

Configuration file 2: vi profile

Add the following:

export HIVE_HOME=/usr/local/apache-hive-2.3.3-bin

export PATH=$PATH:$HIVE_HOME/bin
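If the profile file is edited more than once, the two export lines can end up duplicated. As a rough sketch, the append could be made idempotent with a small helper. The function name `add_hive_to_profile` is hypothetical, and the helper assumes the file is the `profile` in the config directory described above.

```shell
#!/bin/sh
# Hypothetical helper: append the Hive exports to a profile file only once.
add_hive_to_profile() (
    profile="$1"
    # Skip the append when HIVE_HOME is already exported in the file.
    if ! grep -q '^export HIVE_HOME=' "$profile" 2>/dev/null; then
        # The quoted 'EOF' keeps $PATH and $HIVE_HOME literal in the output.
        cat >> "$profile" <<'EOF'
export HIVE_HOME=/usr/local/apache-hive-2.3.3-bin
export PATH=$PATH:$HIVE_HOME/bin
EOF
    fi
)
```

Running `add_hive_to_profile ./profile` twice leaves a single copy of each line in the file.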

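0x03 Verifying the Hive installation

1. Modify the container creation script

a. Edit start_containers.sh (change the image name to shaonaiyi/hive)

I changed the three occurrences of shaonaiyi/hbase in the script to shaonaiyi/hive.

PS: You could of course create a new network with new IPs. To save effort, I reused the existing network and changed only the IPs.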
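The image name can also be replaced with sed instead of by hand. This is only a sketch: the function name `retag_image` is hypothetical, and because the contents of start_containers.sh are not shown here, it assumes nothing beyond the image name appearing literally in the script.

```shell
#!/bin/sh
# Hypothetical helper: swap the image name in a container start script.
retag_image() (
    script="$1"
    # -i.bak edits in place and keeps the original as <script>.bak
    # (this form is accepted by both GNU and BSD sed).
    sed -i.bak 's#shaonaiyi/hbase#shaonaiyi/hive#g' "$script"
    # Print how many lines now reference the new image.
    grep -c 'shaonaiyi/hive' "$script"
)
```

For the script described above, `retag_image config/start_containers.sh` would be expected to report the three changed lines. The IP addresses still need to be edited separately.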

2. Build the image

a. Remove the previous HBase cluster containers to save resources (skip this step if they have already been removed)

cd /home/shaonaiyi/docker_bigdata/hbase_sny_all/config/

chmod 700 stop_containers.sh

./stop_containers.sh

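b. Build an image with hadoop, spark, zookeeper, hbase, and hive installed (if the earlier shaonaiyi/hbase image has not been deleted, this build will be much faster, since Docker can reuse the layers it already built)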

cd /home/shaonaiyi/docker_bigdata/hive_sny_all

docker build -t shaonaiyi/hive .
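ADD and COPY fail the build when a source file is missing, so it can be worth checking the build context before running the build command above. The sketch below covers only the Hive-related files from this tutorial; the function name `check_build_context` is hypothetical, and the other tarballs listed in the Dockerfile would need to be added to the list in the same way.

```shell
#!/bin/sh
# Hypothetical helper: check that the Hive-related build inputs are in place.
check_build_context() (
    ctx="$1"
    missing=0
    for f in Dockerfile apache-hive-2.3.3-bin.tar.gz config/hive-env.sh config/profile; do
        if [ ! -f "$ctx/$f" ]; then
            echo "missing: $f"
            missing=1
        fi
    done
    if [ "$missing" -eq 0 ]; then
        echo "build context looks complete"
    fi
    # Non-zero exit status when at least one file is absent.
    exit "$missing"
)
```

A possible use is `check_build_context /home/shaonaiyi/docker_bigdata/hive_sny_all && docker build -t shaonaiyi/hive .`, so that the build only starts when the check passes.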

3. Create the containers

a. Create the containers (grant execute permission to start_containers.sh first if it lacks it):

config/start_containers.sh

b. Enter the master container

sh ~/master.sh

4. Start Hive

a. Make sure HDFS is running. If it is not, start it:

start-hadoop.sh

b. Initialize Hive

cd /usr/local/apache-hive-2.3.3-bin

./bin/schematool -dbType derby -initSchema

c. Start Hive

hive

d. List the tables and functions in Hive:

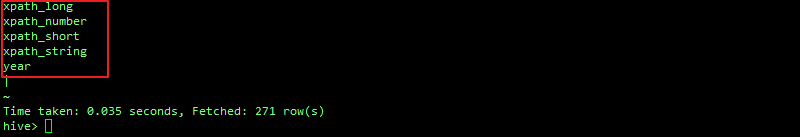

show tables;

show functions;

e. If no errors are reported, the installation succeeded. Exit:

exit;
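The same check can be run without opening the interactive shell, because `hive -e` executes the quoted statements and then exits. The wrapper below is a sketch: the function name `verify_hive` and its optional argument (a replacement for the `hive` command, useful for dry runs) are hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: run the verification queries non-interactively.
verify_hive() (
    # Command to invoke; defaults to the hive launcher found on PATH.
    hive_cmd="${1:-hive}"
    # -e runs the given HiveQL statements and exits.
    if "$hive_cmd" -e 'show tables; show functions;' >/dev/null 2>&1; then
        echo "Hive OK"
    else
        echo "Hive check failed"
        exit 1
    fi
)
```

With the embedded Derby metastore used in this tutorial, it is safest to call `verify_hive` from the same directory in which the schema was initialized, since Derby resolves its database directory relative to the current directory.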

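0xFF Summary

- The installation is simple once you know the steps. If anything is unclear, see D007 复制粘贴玩大数据之安装与配置Hive.

- For common Dockerfile instructions, see D004.1 Dockerfile例子详解及常用指令.

- You do not need to run the Hive initialization command again on subsequent starts.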
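The last point can be turned into a guard so that a start script never re-runs the initialization by accident. This sketch assumes the default embedded Derby setup, in which the metastore is created as a `metastore_db` directory inside the directory where schematool was run. The function name `init_hive_once` and its two optional arguments are hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: initialize the Derby metastore only if it does not exist yet.
init_hive_once() (
    hive_home="${1:-/usr/local/apache-hive-2.3.3-bin}"
    # Second argument lets a different schematool command be supplied.
    schematool="${2:-$hive_home/bin/schematool}"
    cd "$hive_home" || exit 1
    # Assumption: embedded Derby keeps its data in ./metastore_db.
    if [ -d metastore_db ]; then
        echo "metastore_db already exists, skipping init"
    else
        "$schematool" -dbType derby -initSchema
    fi
)
```

Called with no arguments inside the master container, it would run the same schematool command as step 4.b the first time and only print the skip message afterwards.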

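About the author: 邵奈一 (Shao Naiyi)

University big data lecturer, university market analyst, and column editor

WeChat official account, Weibo, CSDN: 邵奈一

Every lesson in this series is original work by the author, 邵奈一. Please credit the source when reposting.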