Jupyter Notebook

k近邻算法

import numpy as np

from matplotlib import pyplot as plt

import math

X

X_train = np.array([[1,1.2],[3,3.3],[5,2.2],[7,5.7],[9,8.4]])

X

X_train

array([[1. , 1.2],

[3. , 3.3],

[5. , 2.2],

[7. , 5.7],

[9. , 8.4]])

Y

Y_train = np.array([0,0,0,1,1])

Y

Y_train

array([0, 0, 0, 1, 1])

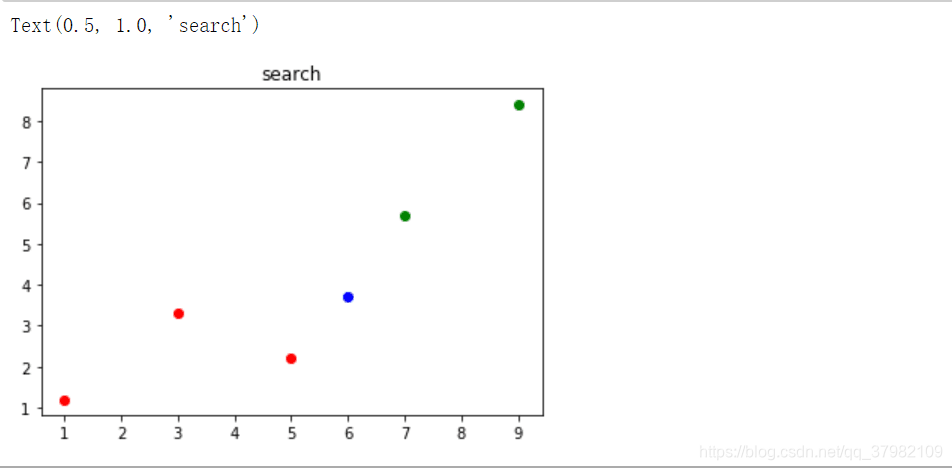

plt.scatter(x_train[y_train == 0,0],x_train[y_train == 0,1],color=“r”,label=“normal”)

plt.scatter(x_train[y_train == 1,0],x_train[y_train == 1,1],color=“g”,label=“bad”)

plt.title(“search”)

Text(0.5, 1.0, ‘search’)

#x是我们要测试的值在下图用蓝色表示

x = np.array([6,3.7])

b

plt.scatter(x_train[y_train == 0,0],x_train[y_train == 0,1],color=“r”,label=“normal”)

plt.scatter(x_train[y_train == 1,0],x_train[y_train == 1,1],color=“g”,label=“bad”)

plt.scatter(x[0],x[1],color=“b”)

plt.title(“search”)

Text(0.5, 1.0, ‘search’)

KNN,求x与其他点的距离,并保存进distant中去

distant = []

for x_train in X_train:

d = math.sqrt(np.sum((x - x_train)**2))

distant.append(d)

distant

[5.5901699437494745,

3.026549190084311,

1.8027756377319946,

2.23606797749979,

5.57584074378026]

对所获取到的距离进行下标排序

np.argsort(distant)

np.argsort(distant)

array([2, 3, 1, 4, 0], dtype=int64)

nearst = np.argsort(distant)

获取到排序号的点坐标,进行相应Y_train中坐标的值,才是我们想要的

k = 3

k = 3

k =3 说明与三个数进行比较,看离谁近,则进行投票

topk_y = [Y_train[i] for i in nearst[:k]]

topk_y

[0, 1, 0]

此时我们得出了0,1,0说明 0胜出,预测到的结果应该是0

from collections import Counter

Counter(topk_y)

Counter({0: 2, 1: 1})

计数完进行投票

votes = Counter(topk_y)

votes.most_common(1)

[(0, 2)]

votes.most_common(1)[0][0]

votes.most_common(1)[0][0]

0

把值存在predict中来

predict =

predict = votes.most_common(1)[0][0]

predict

0

至此,简单的K临近算法已经差不多了呀