Copyright notice: this is an original article by the blog author; please credit the source when reposting. https://blog.csdn.net/u012319493/article/details/87125745

Loading the dataset

from keras.datasets import mnist

import numpy as np

(X_train, Y_train), (X_test, Y_test) = mnist.load_data()

print(np.shape(X_train), np.shape(X_test))

(60000, 28, 28) (10000, 28, 28)

Data preprocessing

# Flatten each 28x28 image so the 3-D tensor (samples, 28, 28) becomes a 2-D array (samples, 784)

X_train = X_train.reshape(60000, 28*28)

X_test = X_test.reshape(10000, 28*28)

print(np.shape(X_train), np.shape(X_test))

(60000, 784) (10000, 784)
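The hard-coded sample counts can be avoided by letting NumPy infer one of the dimensions. A minimal sketch, using a small synthetic array in place of the MNIST data (the name `images` and its size are made up for illustration):

```python
import numpy as np

# Hypothetical stand-in for X_train: 5 "images" of 28x28 uint8 pixels
images = np.zeros((5, 28, 28), dtype=np.uint8)

# Keep the sample axis; -1 lets NumPy infer the feature axis (28 * 28 = 784)
flat = images.reshape(images.shape[0], -1)

print(flat.shape)  # (5, 784)
```

Written this way, the same line works for both the training and the test array.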

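# Cast to float32 first: the raw pixels are uint8, and an in-place division on an integer array raises a TypeError. float32 is also the type GPUs use to accelerate training.

X_train = X_train.astype("float32")

X_test = X_test.astype("float32")

# Scale pixel values from [0, 255] to [0, 1], which can speed up training

X_train /= 255

X_test /= 255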
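One detail matters here: the pixel arrays returned by `mnist.load_data()` are uint8, and NumPy rejects an in-place true division on an integer array, so the cast to float32 needs to come before `/= 255`. A small sketch on synthetic data (the array `pixels` is a made-up stand-in for the real images):

```python
import numpy as np

# Hypothetical stand-in for raw MNIST pixels (uint8, values 0..255)
pixels = np.array([[0, 128, 255]], dtype=np.uint8)

try:
    pixels /= 255  # in-place true division on an integer array
    inplace_ok = True
except TypeError:
    # NumPy cannot cast the floating-point result back into uint8
    inplace_ok = False

# Cast first, then scale
scaled = pixels.astype("float32") / 255

print(inplace_ok)
print(scaled.dtype, scaled.min(), scaled.max())
```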

# 1-of-K (one-hot) encoding, e.g. for classes [0, 1, 2]: y=0 -> (1, 0, 0), y=1 -> (0, 1, 0), y=2 -> (0, 0, 1)

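# to_categorical is importable directly from keras.utils; the np_utils module is not available in newer Keras releases
from keras.utils import to_categorical

num_classes = 10

Y_train = to_categorical(Y_train, num_classes)

Y_test = to_categorical(Y_test, num_classes)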

print(Y_train)

[[0. 0. 0. … 0. 0. 0.]

[1. 0. 0. … 0. 0. 0.]

[0. 0. 0. … 0. 0. 0.]

…

[0. 0. 0. … 0. 0. 0.]

[0. 0. 0. … 0. 0. 0.]

[0. 0. 0. … 0. 1. 0.]]
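For intuition, the 1-of-K encoding can be reproduced with plain NumPy. This is only an illustrative sketch, assuming integer labels in the range 0 to K-1; the helper `one_hot` is hypothetical and not part of Keras:

```python
import numpy as np

def one_hot(labels, num_classes):
    """Return a (len(labels), num_classes) float32 one-hot matrix."""
    labels = np.asarray(labels, dtype=int)
    # Row i of the identity matrix is the one-hot vector for class i
    return np.eye(num_classes, dtype="float32")[labels]

encoded = one_hot([0, 1, 2], num_classes=3)
print(encoded)

# argmax along the class axis recovers the integer labels
decoded = encoded.argmax(axis=1)
print(decoded)  # [0 1 2]
```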

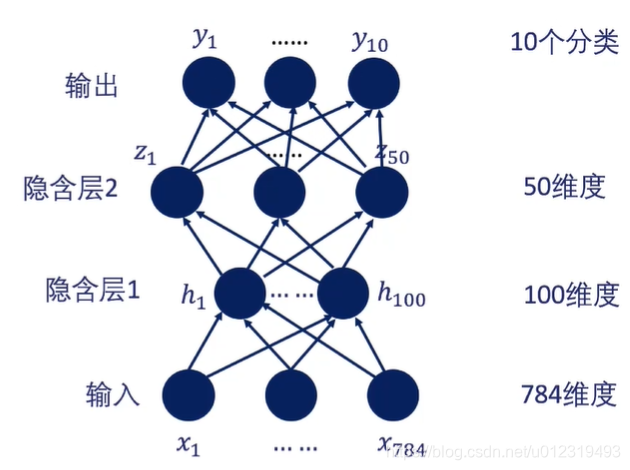

Building the deep neural network

The network is designed as follows:

# Define the deep learning network with Keras

from keras.models import Sequential

from keras.layers import Dense

from keras.optimizers import SGD

model = Sequential()

# First hidden layer: 100 sigmoid units; input_shape declares the 784-feature input

model.add(Dense(100, activation='sigmoid', input_shape=(784,)))

# Second hidden layer: 100 ReLU units

model.add(Dense(100, activation='relu'))

# Output layer: one softmax unit per class

model.add(Dense(num_classes, activation='softmax'))
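As a sanity check on the architecture, the number of trainable parameters can be worked out by hand: a fully connected layer has one weight per input-unit pair plus one bias per unit. A minimal sketch (the helper `dense_params` is a hypothetical name used only here):

```python
def dense_params(n_inputs, n_units):
    """Weights (n_inputs * n_units) plus one bias per unit."""
    return n_inputs * n_units + n_units

layer_sizes = [784, 100, 100, 10]  # input, two hidden layers, output
per_layer = [dense_params(a, b) for a, b in zip(layer_sizes, layer_sizes[1:])]

print(per_layer)       # [78500, 10100, 1010]
print(sum(per_layer))  # 89610
```

These figures should agree with what `model.summary()` reports for the three `Dense` layers.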

# Loss (objective) function, optimization algorithm, and evaluation metric (e.g. accuracy, AUC)

model.compile(loss='categorical_crossentropy', optimizer=SGD(), metrics=['accuracy'])
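To make the loss concrete, here is a NumPy sketch of a softmax output followed by categorical cross-entropy. It illustrates the formulas only and is not Keras's actual implementation; the input scores and the clipping constant `eps` are assumptions chosen for the example:

```python
import numpy as np

def softmax(logits):
    """Row-wise softmax, shifted by the row max for numerical stability."""
    shifted = logits - logits.max(axis=1, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=1, keepdims=True)

def categorical_crossentropy(y_true, y_prob, eps=1e-7):
    """Mean over samples of -sum_k y_true[k] * log(y_prob[k])."""
    y_prob = np.clip(y_prob, eps, 1.0)  # avoid log(0)
    return float(-(y_true * np.log(y_prob)).sum(axis=1).mean())

logits = np.array([[2.0, 1.0, 0.1], [0.0, 0.0, 0.0]])  # made-up scores
y_true = np.array([[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]])  # one-hot targets

probs = softmax(logits)
loss = categorical_crossentropy(y_true, probs)

print(probs.sum(axis=1))  # each row sums to 1
print(loss)
```

Because the targets are one-hot, only the predicted probability of the true class contributes to each sample's loss.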

# Train the model; batch_size is a tunable hyperparameter (e.g. 20, 30, 50, ...)

model.fit(X_train, Y_train, batch_size=50, epochs=10, verbose=1, validation_data=(X_test, Y_test))
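With `batch_size=50`, each epoch over the 60000 training samples consists of 1200 gradient updates. The sketch below shows how such mini-batches can be cut from the data; it ignores the shuffling that `fit` performs by default, and `minibatch_slices` is a hypothetical helper, not a Keras function:

```python
import math

def minibatch_slices(n_samples, batch_size):
    """Yield (start, stop) index pairs covering n_samples in order."""
    for start in range(0, n_samples, batch_size):
        yield start, min(start + batch_size, n_samples)

n_samples, batch_size, epochs = 60000, 50, 10
steps_per_epoch = math.ceil(n_samples / batch_size)

print(steps_per_epoch)           # 1200
print(steps_per_epoch * epochs)  # 12000
print(list(minibatch_slices(120, 50)))  # [(0, 50), (50, 100), (100, 120)]
```

A smaller batch size means more, noisier updates per epoch; a larger one means fewer, smoother updates.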

score = model.evaluate(X_test, Y_test, verbose=1)

print("Test loss: ", score[0]) # value of the loss (objective) function on the test set

print("Test accuracy: ", score[1]) # classification accuracy on the test set

Results:

Train on 60000 samples, validate on 10000 samples

Epoch 1/10

60000/60000 [==============================] - 5s 81us/step - loss: 0.7790 - acc: 0.7981 - val_loss: 0.4146 - val_acc: 0.8900

Epoch 2/10

60000/60000 [==============================] - 3s 52us/step - loss: 0.3786 - acc: 0.8926 - val_loss: 0.3383 - val_acc: 0.9064

Epoch 3/10

60000/60000 [==============================] - 3s 54us/step - loss: 0.3267 - acc: 0.9070 - val_loss: 0.3010 - val_acc: 0.9156

Epoch 4/10

60000/60000 [==============================] - 3s 52us/step - loss: 0.3021 - acc: 0.9120 - val_loss: 0.2824 - val_acc: 0.9176

Epoch 5/10

60000/60000 [==============================] - 3s 53us/step - loss: 0.2856 - acc: 0.9167 - val_loss: 0.2696 - val_acc: 0.9197

Epoch 6/10

60000/60000 [==============================] - 3s 52us/step - loss: 0.2687 - acc: 0.9206 - val_loss: 0.2563 - val_acc: 0.9250

Epoch 7/10

60000/60000 [==============================] - 3s 52us/step - loss: 0.2571 - acc: 0.9241 - val_loss: 0.2679 - val_acc: 0.9238

Epoch 8/10

60000/60000 [==============================] - 3s 52us/step - loss: 0.2458 - acc: 0.9269 - val_loss: 0.2433 - val_acc: 0.9270

Epoch 9/10

60000/60000 [==============================] - 3s 54us/step - loss: 0.2472 - acc: 0.9266 - val_loss: 0.2378 - val_acc: 0.9279

Epoch 10/10

60000/60000 [==============================] - 3s 53us/step - loss: 0.2280 - acc: 0.9322 - val_loss: 0.2294 - val_acc: 0.9320

10000/10000 [==============================] - 0s 32us/step

Test loss: 0.22936600434184073

Test accuracy: 0.932
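The reported accuracy is the fraction of test images whose most probable predicted class matches the label, so 0.932 on 10000 images corresponds to 9320 correct predictions. A minimal sketch of that computation, using made-up probabilities in place of what `model.predict(X_test)` would return:

```python
import numpy as np

def accuracy(y_true_onehot, y_prob):
    """Fraction of rows whose arg-max class matches the one-hot target."""
    hits = y_true_onehot.argmax(axis=1) == y_prob.argmax(axis=1)
    return float(hits.mean())

# Made-up targets and predicted probabilities: 4 samples, 3 classes
y_true = np.array([[1, 0, 0], [0, 1, 0], [0, 0, 1], [0, 1, 0]])
y_prob = np.array([[0.7, 0.2, 0.1],
                   [0.1, 0.8, 0.1],
                   [0.3, 0.4, 0.3],   # wrong: predicts class 1, target is 2
                   [0.2, 0.5, 0.3]])

print(accuracy(y_true, y_prob))  # 0.75
```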