Copyright notice: this is an original article by the blog author and may not be reproduced without the author's permission. https://blog.csdn.net/u012292754/article/details/86481601

1 Word count

    package beifeng.wc;

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.Path;
    import org.apache.hadoop.io.IntWritable;
    import org.apache.hadoop.io.LongWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Job;
    import org.apache.hadoop.mapreduce.Mapper;
    import org.apache.hadoop.mapreduce.Reducer;
    import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
    import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

    import java.io.IOException;
    import java.util.StringTokenizer;

    public class WordCount {

        public static void main(String[] args) throws InterruptedException, IOException, ClassNotFoundException {
            int status = new WordCount().run(args);
            System.exit(status);
        }

        // Mapper: emits (word, 1) for every whitespace-separated token of an input line.
        public static class WordCountMapper extends Mapper<LongWritable, Text, Text, IntWritable> {

            // Reused for every record instead of allocating new objects per token.
            private Text mapOutputKey = new Text();
            private final static IntWritable mapOutputValue = new IntWritable(1);

            @Override
            public void map(LongWritable key, Text value, Context context) throws IOException, InterruptedException {
                String lineValue = value.toString();
                StringTokenizer stringTokenizer = new StringTokenizer(lineValue);
                while (stringTokenizer.hasMoreTokens()) {
                    String wordValue = stringTokenizer.nextToken();
                    mapOutputKey.set(wordValue);
                    context.write(mapOutputKey, mapOutputValue);
                }
            }
        }

        // Reducer: sums the counts received for each word.
        public static class WordCountReducer extends Reducer<Text, IntWritable, Text, IntWritable> {

            private IntWritable outputValue = new IntWritable();

            @Override
            public void reduce(Text key, Iterable<IntWritable> values, Context context) throws IOException, InterruptedException {
                int sum = 0;
                for (IntWritable value : values) {
                    sum += value.get();
                }
                outputValue.set(sum);
                context.write(key, outputValue);
            }
        }

        // Driver: configures the job and submits it; args[0] is the input path, args[1] the output path.
        public int run(String[] args) throws IOException, ClassNotFoundException, InterruptedException {
            Configuration conf = new Configuration();
            Job job = Job.getInstance(conf, this.getClass().getSimpleName());
            job.setJarByClass(this.getClass());

            // Input
            Path inPath = new Path(args[0]);
            FileInputFormat.addInputPath(job, inPath);

            // Map
            job.setMapperClass(WordCountMapper.class);
            job.setMapOutputKeyClass(Text.class);
            job.setMapOutputValueClass(IntWritable.class);

            // Reduce
            job.setReducerClass(WordCountReducer.class);
            job.setOutputKeyClass(Text.class);
            job.setOutputValueClass(IntWritable.class);

            // Output (the directory must not exist yet)
            Path outPath = new Path(args[1]);
            FileOutputFormat.setOutputPath(job, outPath);

            // Submit and wait; true prints progress to the console.
            boolean isSuccess = job.waitForCompletion(true);
            return isSuccess ? 0 : 1;
        }
    }
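To make the data flow of the job above easier to follow, here is a minimal sketch in plain Java, with no Hadoop dependency, that mimics the three steps on in-memory lines: the map step emits a 1 per token, the shuffle groups the values by word, and the reduce step sums each group. The class name `LocalWordCountSketch` and the sample lines are assumptions made for illustration; this is not how Hadoop executes the job internally.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.StringTokenizer;
import java.util.TreeMap;

public class LocalWordCountSketch {

    // Map + shuffle: emit a 1 for every token and group the 1s by word (sorted by key).
    public static Map<String, List<Integer>> mapAndShuffle(List<String> lines) {
        Map<String, List<Integer>> groups = new TreeMap<>();
        for (String line : lines) {
            StringTokenizer tokenizer = new StringTokenizer(line);
            while (tokenizer.hasMoreTokens()) {
                groups.computeIfAbsent(tokenizer.nextToken(), k -> new ArrayList<>()).add(1);
            }
        }
        return groups;
    }

    // Reduce: sum all values that were grouped under one key.
    public static int reduce(List<Integer> values) {
        int sum = 0;
        for (int value : values) {
            sum += value;
        }
        return sum;
    }

    // Runs the three steps end to end and returns word -> count.
    public static Map<String, Integer> wordCount(List<String> lines) {
        Map<String, Integer> result = new TreeMap<>();
        for (Map.Entry<String, List<Integer>> group : mapAndShuffle(lines).entrySet()) {
            result.put(group.getKey(), reduce(group.getValue()));
        }
        return result;
    }

    public static void main(String[] args) {
        // Hypothetical input lines, not the contents of words.txt.
        List<String> lines = Arrays.asList("hadoop spark hadoop", "hive spark hadoop");
        System.out.println(wordCount(lines)); // prints {hadoop=3, hive=1, spark=2}
    }
}
```

The `TreeMap` stands in for the sorted grouping that the framework performs between the map and reduce phases.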

- Package the project with Maven, upload the jar to the cluster, then run it:

    hadoop jar /home/hadoop/testhadoop-1.0.jar beifeng.wc.WordCount hdfs://node1:8020/words.txt hdfs://node1:8020/wcout

    19/01/14 19:18:59 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
    19/01/14 19:19:00 INFO client.RMProxy: Connecting to ResourceManager at node1/192.168.30.131:8032
    19/01/14 19:19:00 WARN mapreduce.JobResourceUploader: Hadoop command-line option parsing not performed. Implement the Tool interface and execute your application with ToolRunner to remedy this.
    19/01/14 19:19:01 INFO input.FileInputFormat: Total input paths to process : 1
    19/01/14 19:19:01 INFO mapreduce.JobSubmitter: number of splits:1
    19/01/14 19:19:01 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1547456322468_0001
    19/01/14 19:19:02 INFO impl.YarnClientImpl: Submitted application application_1547456322468_0001
    19/01/14 19:19:02 INFO mapreduce.Job: The url to track the job: http://node1:8088/proxy/application_1547456322468_0001/
    19/01/14 19:19:02 INFO mapreduce.Job: Running job: job_1547456322468_0001
    19/01/14 19:19:10 INFO mapreduce.Job: Job job_1547456322468_0001 running in uber mode : false
    19/01/14 19:19:10 INFO mapreduce.Job: map 0% reduce 0%
    19/01/14 19:19:16 INFO mapreduce.Job: map 100% reduce 0%
    19/01/14 19:19:21 INFO mapreduce.Job: map 100% reduce 100%
    19/01/14 19:19:21 INFO mapreduce.Job: Job job_1547456322468_0001 completed successfully
    19/01/14 19:19:21 INFO mapreduce.Job: Counters: 49
        File System Counters
            FILE: Number of bytes read=271
            FILE: Number of bytes written=223315
            FILE: Number of read operations=0
            FILE: Number of large read operations=0
            FILE: Number of write operations=0
            HDFS: Number of bytes read=223
            HDFS: Number of bytes written=51
            HDFS: Number of read operations=6
            HDFS: Number of large read operations=0
            HDFS: Number of write operations=2
        Job Counters
            Launched map tasks=1
            Launched reduce tasks=1
            Data-local map tasks=1
            Total time spent by all maps in occupied slots (ms)=3484
            Total time spent by all reduces in occupied slots (ms)=2077
            Total time spent by all map tasks (ms)=3484
            Total time spent by all reduce tasks (ms)=2077
            Total vcore-seconds taken by all map tasks=3484
            Total vcore-seconds taken by all reduce tasks=2077
            Total megabyte-seconds taken by all map tasks=3567616
            Total megabyte-seconds taken by all reduce tasks=2126848
        Map-Reduce Framework
            Map input records=4
            Map output records=23
            Map output bytes=219
            Map output materialized bytes=271
            Input split bytes=92
            Combine input records=0
            Combine output records=0
            Reduce input groups=7
            Reduce shuffle bytes=271
            Reduce input records=23
            Reduce output records=7
            Spilled Records=46
            Shuffled Maps =1
            Failed Shuffles=0
            Merged Map outputs=1
            GC time elapsed (ms)=486
            CPU time spent (ms)=1670
            Physical memory (bytes) snapshot=487391232
            Virtual memory (bytes) snapshot=5599584256
            Total committed heap usage (bytes)=468189184
        Shuffle Errors
            BAD_ID=0
            CONNECTION=0
            IO_ERROR=0
            WRONG_LENGTH=0
            WRONG_MAP=0
            WRONG_REDUCE=0
        File Input Format Counters
            Bytes Read=131
        File Output Format Counters
            Bytes Written=51
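The counters show `Combine input records=0`, and `Reduce input records` equals `Map output records` (23), so every `(word, 1)` pair went through the shuffle unmerged. Because summation is associative and commutative, the reducer could probably also serve as a combiner, for example through `job.setCombinerClass(WordCountReducer.class)`; that is an optional change not made in this article. The plain-Java sketch below only models the effect on record counts for one map task. The class name `CombinerEffectSketch` and the sample split are assumptions, and the model is simplified: in Hadoop the combiner may run zero, one, or several times per map task.

```java
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.StringTokenizer;
import java.util.TreeMap;

public class CombinerEffectSketch {

    // Without a combiner, every (word, 1) pair emitted by the map task is shuffled.
    public static int recordsWithoutCombiner(List<String> split) {
        int records = 0;
        for (String line : split) {
            records += new StringTokenizer(line).countTokens();
        }
        return records;
    }

    // With a combiner (simplified model), the pairs are pre-summed per word on the
    // map side, so one partial sum per distinct word is shuffled instead.
    public static Map<String, Integer> combine(List<String> split) {
        Map<String, Integer> partialSums = new TreeMap<>();
        for (String line : split) {
            StringTokenizer tokenizer = new StringTokenizer(line);
            while (tokenizer.hasMoreTokens()) {
                partialSums.merge(tokenizer.nextToken(), 1, Integer::sum);
            }
        }
        return partialSums;
    }

    public static void main(String[] args) {
        // Hypothetical contents of a single input split.
        List<String> split = Arrays.asList("hadoop spark hadoop", "hive spark hadoop");
        System.out.println(recordsWithoutCombiner(split)); // prints 6
        System.out.println(combine(split).size());         // prints 3
    }
}
```

For an input as small as the one in the log the saving is negligible; the effect matters when a map task emits many repeated keys.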

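2 Improved WordCount

The log above warns that command-line option parsing was not performed. This version implements the Tool interface and launches the job through ToolRunner, as the warning suggests.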

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.conf.Configured;
    import org.apache.hadoop.fs.Path;
    import org.apache.hadoop.io.IntWritable;
    import org.apache.hadoop.io.LongWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Job;
    import org.apache.hadoop.mapreduce.Mapper;
    import org.apache.hadoop.mapreduce.Reducer;
    import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
    import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;
    import org.apache.hadoop.util.Tool;
    import org.apache.hadoop.util.ToolRunner;

    import java.io.IOException;
    import java.util.StringTokenizer;

    public class WordCount extends Configured implements Tool {

        public static void main(String[] args) throws Exception {
            Configuration conf = new Configuration();
            // ToolRunner parses the generic Hadoop options into conf, then calls run()
            // with the remaining arguments.
            int status = ToolRunner.run(conf, new WordCount(), args);
            System.exit(status);
        }

        // Mapper: emits (word, 1) for every whitespace-separated token of an input line.
        public static class WordCountMapper extends Mapper<LongWritable, Text, Text, IntWritable> {

            private Text mapOutputKey = new Text();
            private final static IntWritable mapOutputValue = new IntWritable(1);

            @Override
            public void map(LongWritable key, Text value, Context context) throws IOException, InterruptedException {
                String lineValue = value.toString();
                StringTokenizer stringTokenizer = new StringTokenizer(lineValue);
                while (stringTokenizer.hasMoreTokens()) {
                    String wordValue = stringTokenizer.nextToken();
                    mapOutputKey.set(wordValue);
                    context.write(mapOutputKey, mapOutputValue);
                }
            }
        }

        // Reducer: sums the counts received for each word.
        public static class WordCountReducer extends Reducer<Text, IntWritable, Text, IntWritable> {

            private IntWritable outputValue = new IntWritable();

            @Override
            public void reduce(Text key, Iterable<IntWritable> values, Context context) throws IOException, InterruptedException {
                int sum = 0;
                for (IntWritable value : values) {
                    sum += value.get();
                }
                outputValue.set(sum);
                context.write(key, outputValue);
            }
        }

        @Override
        public int run(String[] args) throws IOException, ClassNotFoundException, InterruptedException {
            // Use the configuration prepared by ToolRunner instead of creating a new one.
            Configuration conf = getConf();
            Job job = Job.getInstance(conf, this.getClass().getSimpleName());
            job.setJarByClass(this.getClass());

            // Input
            Path inPath = new Path(args[0]);
            FileInputFormat.addInputPath(job, inPath);

            // Map
            job.setMapperClass(WordCountMapper.class);
            job.setMapOutputKeyClass(Text.class);
            job.setMapOutputValueClass(IntWritable.class);

            // Reduce
            job.setReducerClass(WordCountReducer.class);
            job.setOutputKeyClass(Text.class);
            job.setOutputValueClass(IntWritable.class);

            // Output
            Path outPath = new Path(args[1]);
            FileOutputFormat.setOutputPath(job, outPath);

            boolean isSuccess = job.waitForCompletion(true);
            return isSuccess ? 0 : 1;
        }
    }

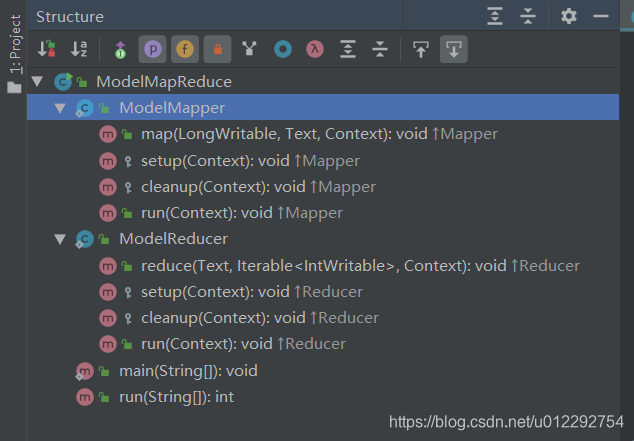
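What `ToolRunner` adds is the handling of generic options such as `-D property=value`: they are applied to the `Configuration` before `run(String[] args)` is called, and only the remaining arguments reach the application. The sketch below imitates that separation in plain Java for the `-D` option only. It is a simplified stand-in written for illustration, not Hadoop's actual parser, and the class name `GenericOptionsSketch` and its fields are hypothetical.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

public class GenericOptionsSketch {

    // Properties collected from -D options (stands in for Hadoop's Configuration).
    public final Map<String, String> properties = new LinkedHashMap<>();
    // Arguments left over for the application's own run(String[] args).
    public final List<String> remainingArgs = new ArrayList<>();

    public GenericOptionsSketch(String[] args) {
        for (int i = 0; i < args.length; i++) {
            String arg = args[i];
            String definition = null;
            if (arg.equals("-D") && i + 1 < args.length) {
                definition = args[++i];          // form: -D key=value
            } else if (arg.startsWith("-D") && arg.length() > 2) {
                definition = arg.substring(2);   // form: -Dkey=value
            }
            if (definition == null) {
                remainingArgs.add(arg);          // not a -D option: pass through
                continue;
            }
            int eq = definition.indexOf('=');
            if (eq > 0) {
                properties.put(definition.substring(0, eq), definition.substring(eq + 1));
            }
            // Definitions without '=' are ignored in this sketch.
        }
    }

    public static void main(String[] args) {
        String[] commandLine = {"-D", "mapreduce.job.reduces=2", "/words.txt", "/wcout"};
        GenericOptionsSketch parsed = new GenericOptionsSketch(commandLine);
        System.out.println(parsed.properties);    // prints {mapreduce.job.reduces=2}
        System.out.println(parsed.remainingArgs); // prints [/words.txt, /wcout]
    }
}
```

With the `Tool` version of the job, placing `-D mapreduce.job.reduces=2` between the class name and the input path on the `hadoop jar` command line should therefore set the number of reduce tasks without any code change, while `args[0]` and `args[1]` in `run` remain the input and output paths.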

3 MapReduce programming template

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.conf.Configured;
    import org.apache.hadoop.fs.Path;
    import org.apache.hadoop.io.IntWritable;
    import org.apache.hadoop.io.LongWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Job;
    import org.apache.hadoop.mapreduce.Mapper;
    import org.apache.hadoop.mapreduce.Reducer;
    import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
    import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;
    import org.apache.hadoop.util.Tool;
    import org.apache.hadoop.util.ToolRunner;

    import java.io.IOException;

    public class ModelMapReduce extends Configured implements Tool {

        public static void main(String[] args) throws Exception {
            Configuration conf = new Configuration();
            // Run this template class (the original mistakenly instantiated WordCount here).
            int status = ToolRunner.run(conf, new ModelMapReduce(), args);
            System.exit(status);
        }

        // Adjust the four type parameters to the job's input and output key/value types.
        public static class ModelMapper extends Mapper<LongWritable, Text, Text, IntWritable> {

            @Override
            public void map(LongWritable key, Text value, Context context) throws IOException, InterruptedException {
                // TODO: per-record map logic
            }

            @Override
            protected void setup(Context context) throws IOException, InterruptedException {
                // Called once before the first map() call of the task.
            }

            @Override
            protected void cleanup(Context context) throws IOException, InterruptedException {
                // Called once after the last map() call of the task.
            }

            @Override
            public void run(Context context) throws IOException, InterruptedException {
                // An empty body would skip setup(), map() and cleanup() entirely;
                // delegate to the default lifecycle unless it is replaced on purpose.
                super.run(context);
            }
        }

        public static class ModelReducer extends Reducer<Text, IntWritable, Text, IntWritable> {

            @Override
            public void reduce(Text key, Iterable<IntWritable> values, Context context) throws IOException, InterruptedException {
                // TODO: per-key reduce logic
            }

            @Override
            protected void setup(Context context) throws IOException, InterruptedException {
                // Called once before the first reduce() call of the task.
            }

            @Override
            protected void cleanup(Context context) throws IOException, InterruptedException {
                // Called once after the last reduce() call of the task.
            }

            @Override
            public void run(Context context) throws IOException, InterruptedException {
                // Same as in the mapper: keep the default lifecycle.
                super.run(context);
            }
        }

        @Override
        public int run(String[] args) throws IOException, ClassNotFoundException, InterruptedException {
            Configuration conf = getConf();
            Job job = Job.getInstance(conf, this.getClass().getSimpleName());
            job.setJarByClass(this.getClass());

            // Input
            Path inPath = new Path(args[0]);
            FileInputFormat.addInputPath(job, inPath);

            // Map
            job.setMapperClass(ModelMapper.class);
            job.setMapOutputKeyClass(Text.class);
            job.setMapOutputValueClass(IntWritable.class);

            // Reduce
            job.setReducerClass(ModelReducer.class);
            job.setOutputKeyClass(Text.class);
            job.setOutputValueClass(IntWritable.class);

            // Output
            Path outPath = new Path(args[1]);
            FileOutputFormat.setOutputPath(job, outPath);

            boolean isSuccess = job.waitForCompletion(true);
            return isSuccess ? 0 : 1;
        }
    }
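The four overridable methods in the template are related: Hadoop's `Mapper.run` calls `setup` once, then `map` for each input record, then `cleanup` once, and `Reducer.run` does the same with `reduce`. An override of `run` that neither performs these calls nor delegates to `super.run` means `map` is never invoked. The plain-Java sketch below illustrates this template-method relationship. `SketchMapper` and `EmptyRunMapper` are hypothetical stand-ins with simplified signatures, not the Hadoop classes.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

public class LifecycleSketch {

    // Simplified stand-in for a mapper: run() drives setup(), map() and cleanup().
    public static class SketchMapper {
        public final List<String> calls = new ArrayList<>();

        protected void setup() { calls.add("setup"); }

        protected void map(String record) { calls.add("map:" + record); }

        protected void cleanup() { calls.add("cleanup"); }

        public void run(List<String> records) {
            setup();
            try {
                for (String record : records) {
                    map(record);
                }
            } finally {
                cleanup(); // runs even if map() throws
            }
        }
    }

    // Overriding run() with an empty body disables the whole lifecycle.
    public static class EmptyRunMapper extends SketchMapper {
        @Override
        public void run(List<String> records) {
            // intentionally empty
        }
    }

    public static void main(String[] args) {
        List<String> records = Arrays.asList("line1", "line2");

        SketchMapper normal = new SketchMapper();
        normal.run(records);
        System.out.println(normal.calls); // prints [setup, map:line1, map:line2, cleanup]

        SketchMapper broken = new EmptyRunMapper();
        broken.run(records);
        System.out.println(broken.calls); // prints []
    }
}
```

In most jobs only `map` and `reduce` need to be overridden. `setup` and `cleanup` are useful for per-task resources, and `run` is rarely overridden at all.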