This post records my own process of learning PyTorch. It focuses on LeNet-5, a classic CNN, trained here on the MNIST dataset.

import os
import torch
import torch.nn as nn
import torch.utils.data as Data
import torchvision
from torch.autograd import Variable  # legacy wrapper: since PyTorch 0.4 a Variable is just a Tensor

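# MNIST consists of 28x28 single-channel images: 60,000 for training and 10,000 for testing

# Hyper Parameters
EPOCH = 5  # train for 5 epochs in total; train longer if resources allow
BATCH_SIZE = 100  # mini-batch training, 100 samples per batch
LR = 0.001  # learning rate
DOWNLOAD_MNIST = False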

if not(os.path.exists('./mnist/')) or not os.listdir('./mnist/'):
    # the mnist dir does not exist or is empty
    DOWNLOAD_MNIST = True

# Download the data
train_data = torchvision.datasets.MNIST(
    root='./mnist/',
    train=True,  # True selects the training set
    # ToTensor converts a PIL.Image or numpy.ndarray of shape (H x W x C) with pixel values in [0, 255]
    # into a torch.FloatTensor of shape (C x H x W) with values in [0.0, 1.0]; grayscale images have one channel
    transform=torchvision.transforms.ToTensor(),
    download=DOWNLOAD_MNIST,
)

test_data = torchvision.datasets.MNIST(
    root='./mnist/',
    train=False,  # False selects the test set
    transform=torchvision.transforms.ToTensor(),
    download=DOWNLOAD_MNIST,
)
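To make the `ToTensor` comment concrete, here is a minimal pure-Python sketch of the same layout and scaling change on a nested list. The helper `to_chw_float` is hypothetical and only mimics the documented behavior for 8-bit input; it is not torchvision's implementation.

```python
def to_chw_float(image_hwc):
    """Reorder an (H x W x C) nested list to (C x H x W) and scale 0..255 to 0.0..1.0."""
    height = len(image_hwc)
    width = len(image_hwc[0])
    channels = len(image_hwc[0][0])
    return [
        [[image_hwc[y][x][c] / 255.0 for x in range(width)] for y in range(height)]
        for c in range(channels)
    ]


# A tiny 2x3 single-channel "image" (H=2, W=3, C=1), purely illustrative.
tiny = [
    [[0], [128], [255]],
    [[64], [32], [16]],
]
chw = to_chw_float(tiny)
print(len(chw), len(chw[0]), len(chw[0][0]))  # 1 2 3
print(chw[0][0][0], chw[0][0][2])             # 0.0 1.0
```

An MNIST image goes through the same change: a 28x28 grayscale picture becomes a tensor of shape (1, 28, 28).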

# Wrap the datasets in DataLoaders for batching
train_loader = Data.DataLoader(dataset=train_data, batch_size=BATCH_SIZE, shuffle=True)  # shuffle controls whether the sample order is randomized
test_loader = Data.DataLoader(dataset=test_data, batch_size=BATCH_SIZE, shuffle=False)  # evaluation does not need shuffling
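As a quick sanity check on the loaders, the number of batches follows directly from the dataset size and `BATCH_SIZE`. The sketch below is plain arithmetic; the helper `batches_per_epoch` is a hypothetical name, and it assumes the `DataLoader` default of keeping a final partial batch.

```python
import math


def batches_per_epoch(num_samples, batch_size, drop_last=False):
    """Number of batches a loader yields in one pass over the data."""
    if drop_last:
        return num_samples // batch_size
    return math.ceil(num_samples / batch_size)


train_steps = batches_per_epoch(60000, 100)  # training set
test_steps = batches_per_epoch(10000, 100)   # test set
total_updates = 5 * train_steps              # EPOCH = 5
print(train_steps, test_steps, total_updates)  # 600 100 3000
```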

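# The classic LeNet-5 has seven layers; the variant here uses two conv blocks followed by two fully connected layers
class LetNet5(nn.Module):
    def __init__(self):
        super(LetNet5, self).__init__()
        self.conv1 = nn.Sequential(  # input shape (1, 28, 28)
            nn.Conv2d(  # convolution
                in_channels=1,  # input channels: 1 for grayscale images, 3 for RGB
                out_channels=16,  # output channels (number of feature maps)
                kernel_size=5,  # 5x5 convolution kernel
                stride=1,  # stride
                padding=2,  # padding of 2 keeps the 28x28 spatial size
            ),
            nn.ReLU(),  # activation
            nn.MaxPool2d(kernel_size=2),  # pooling, output shape (16, 14, 14)
        )
        self.conv2 = nn.Sequential(  # input shape (16, 14, 14)
            nn.Conv2d(  # convolution
                in_channels=16,  # input channels: the 16 feature maps from conv1
                out_channels=32,  # output channels
                kernel_size=5,  # 5x5 convolution kernel
                stride=1,  # stride
                padding=2,
            ),
            nn.ReLU(),
            nn.MaxPool2d(2),  # output shape (32, 7, 7)
        )
        self.connect = nn.Linear(32 * 7 * 7, 50)  # fully connected layer 1
        self.out = nn.Linear(50, 10)  # 10 output classes

    def forward(self, x):
        x = self.conv1(x)
        x = self.conv2(x)
        x = x.view(x.size(0), -1)  # flatten to (batch_size, 32 * 7 * 7)
        tem = self.connect(x)
        output = self.out(tem)
        return output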
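The `32 * 7 * 7` input size of the first fully connected layer can be checked by tracing the spatial size through each layer. The sketch below uses the standard output-size formula for convolution and pooling; the helper names `conv_out`, `pool_out`, `conv_params` and `linear_params` are hypothetical, and the numbers are taken from the layer arguments above.

```python
def conv_out(size, kernel, stride=1, padding=0):
    """Output width/height of a convolution along one spatial dimension."""
    return (size + 2 * padding - kernel) // stride + 1


def pool_out(size, kernel, stride=None):
    """Output width/height of max pooling; stride defaults to the kernel size."""
    stride = kernel if stride is None else stride
    return (size - kernel) // stride + 1


def conv_params(in_ch, out_ch, kernel):
    """Weights plus biases of a square-kernel convolution."""
    return in_ch * out_ch * kernel * kernel + out_ch


def linear_params(in_features, out_features):
    """Weights plus biases of a fully connected layer."""
    return in_features * out_features + out_features


size = 28                                         # MNIST input is 28x28
size = conv_out(size, kernel=5, stride=1, padding=2)  # conv1 -> 28
size = pool_out(size, kernel=2)                   # pool1 -> 14
size = conv_out(size, kernel=5, stride=1, padding=2)  # conv2 -> 14
size = pool_out(size, kernel=2)                   # pool2 -> 7
flat_features = 32 * size * size                  # 32 channels * 7 * 7
print(size, flat_features)                        # 7 1568

total_params = (
    conv_params(1, 16, 5)
    + conv_params(16, 32, 5)
    + linear_params(flat_features, 50)
    + linear_params(50, 10)
)
print(total_params)                               # 92208
```

Most of the parameters sit in the first fully connected layer, which is typical for small CNNs of this shape.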

letnet5 = LetNet5()
optimizer = torch.optim.Adam(letnet5.parameters(), lr=LR)  # Adam optimizer; SGD and others would also work
loss_func = nn.CrossEntropyLoss()  # loss function for classification
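`nn.CrossEntropyLoss` takes the raw scores from the last linear layer (no softmax in the model) together with integer class labels, and by default averages the per-sample losses over the batch. A minimal pure-Python sketch of that computation follows; the function names are hypothetical and the code is an illustration of the formula, not PyTorch's implementation.

```python
import math


def cross_entropy(logits, target):
    """Negative log-softmax probability of the target class for one sample."""
    peak = max(logits)  # subtract the max for numerical stability
    log_sum_exp = peak + math.log(sum(math.exp(z - peak) for z in logits))
    return log_sum_exp - logits[target]


def batch_cross_entropy(batch_logits, targets):
    """Mean of the per-sample losses over a batch."""
    losses = [cross_entropy(row, t) for row, t in zip(batch_logits, targets)]
    return sum(losses) / len(losses)


# With 10 equally scored classes the loss is ln(10), the value to expect
# from an untrained classifier that has no preference among the digits.
uniform_loss = cross_entropy([0.0] * 10, target=3)
print(round(uniform_loss, 4))  # 2.3026

# A confident correct prediction gives a much smaller loss than a wrong one.
good = cross_entropy([5.0, 0.0, 0.0], target=0)
bad = cross_entropy([5.0, 0.0, 0.0], target=1)
print(good < bad)  # True
```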

for epoch in range(EPOCH):
    for step, data in enumerate(train_loader):  # each batch holds inputs and labels
        inputs, labels = data  # tensors can be passed to the model directly, no Variable wrapper needed
        outputs = letnet5(inputs)
        loss = loss_func(outputs, labels)  # compute the loss
        optimizer.zero_grad()  # clear the gradients left over from the previous step
        loss.backward()  # backpropagation
        optimizer.step()  # apply the parameter update
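What `optimizer.step()` does for each parameter can be sketched for a single scalar. The code below follows the standard Adam update rule with the default hyperparameters of `torch.optim.Adam` (`betas=(0.9, 0.999)`, `eps=1e-8`) and the `LR = 0.001` used here; the function `adam_step` is a hypothetical illustration, not the library's code.

```python
import math


def adam_step(param, grad, m, v, t, lr=0.001, beta1=0.9, beta2=0.999, eps=1e-8):
    """One Adam update for a scalar parameter; t is the 1-based step count."""
    m = beta1 * m + (1 - beta1) * grad           # running mean of gradients
    v = beta2 * v + (1 - beta2) * grad * grad    # running mean of squared gradients
    m_hat = m / (1 - beta1 ** t)                 # bias correction
    v_hat = v / (1 - beta2 ** t)
    param = param - lr * m_hat / (math.sqrt(v_hat) + eps)
    return param, m, v


# On the very first step the update is roughly lr * sign(grad),
# regardless of the gradient's magnitude.
param, m, v = adam_step(param=1.0, grad=0.5, m=0.0, v=0.0, t=1)
print(round(param, 6))  # 0.999
```

This is one reason Adam is less sensitive to gradient scale than plain SGD: the step size is normalized by the running estimate of the gradient magnitude.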

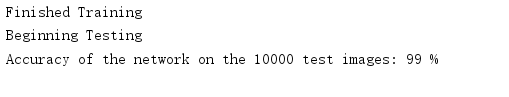

print("Finished Training")

print("Beginning Testing")

correct = 0
total = 0
with torch.no_grad():  # gradients are not needed during evaluation
    for data in test_loader:
        images, labels = data
        outputs = letnet5(images)
        predicted = torch.max(outputs, 1)[1]  # index of the highest score = predicted class
        total += labels.size(0)
        correct += (predicted == labels).sum().item()

print('Accuracy of the network on the 10000 test images: %d %%' % (100 * correct / total))
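The accuracy computation in the test loop boils down to taking the index of the largest score in each row and comparing it with the label. A minimal pure-Python sketch on made-up scores (the values and the helper names `argmax` and `accuracy_percent` are illustrative assumptions):

```python
def argmax(scores):
    """Index of the largest score, i.e. the predicted class."""
    return max(range(len(scores)), key=lambda i: scores[i])


def accuracy_percent(batch_scores, labels):
    """Percentage of rows whose predicted class matches the label."""
    correct = sum(1 for row, y in zip(batch_scores, labels) if argmax(row) == y)
    return 100.0 * correct / len(labels)


# Four made-up samples with three classes each.
scores = [
    [0.1, 2.0, -1.0],  # predicts class 1
    [1.5, 0.2, 0.3],   # predicts class 0
    [0.0, 0.1, 0.9],   # predicts class 2
    [0.3, 0.2, 0.1],   # predicts class 0, label is 1
]
labels = [1, 0, 2, 1]
print(accuracy_percent(scores, labels))  # 75.0
```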