Copyright notice: please credit the source when reposting. https://blog.csdn.net/Xin_101/article/details/85540140

0 Environment

- Ubuntu 18.04

- openssh-server

- Hadoop 3.0.2

- JDK 1.8.0_191

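1 SSH configuration

1.1 Install openssh-server

sudo apt-get install openssh-server

1.2 Configure SSH login

# Enter the SSH directory (create it first if it does not exist)

mkdir -p ~/.ssh && cd ~/.ssh

# Generate an RSA private/public key pair

ssh-keygen -t rsa

# Authorize the public key for login

cat ./id_rsa.pub >> ./authorized_keys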
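The key setup above can also be done without prompts and made safe to re-run. The following is a minimal sketch, not the only way to do it: `setup_ssh_key` is a hypothetical helper name, the empty passphrase is an assumption suited to a local test machine, and the `chmod` calls are an addition because sshd with its default `StrictModes` setting may reject keys whose files are too permissive.

```shell
#!/usr/bin/env bash
# Hypothetical helper: prepare passwordless SSH login for the current user.
# The directory argument defaults to ~/.ssh.
setup_ssh_key() {
    local ssh_dir="${1:-$HOME/.ssh}"
    mkdir -p "$ssh_dir" && chmod 700 "$ssh_dir"

    # Generate a key pair only if none exists; -N "" sets an empty passphrase.
    if [ ! -f "$ssh_dir/id_rsa" ]; then
        ssh-keygen -q -t rsa -N "" -f "$ssh_dir/id_rsa"
    fi

    # Append the public key only if it is not already authorized.
    touch "$ssh_dir/authorized_keys"
    if ! grep -qxF -- "$(cat "$ssh_dir/id_rsa.pub")" "$ssh_dir/authorized_keys"; then
        cat "$ssh_dir/id_rsa.pub" >> "$ssh_dir/authorized_keys"
    fi

    # Restrict permissions so sshd accepts the file.
    chmod 600 "$ssh_dir/authorized_keys"
}
```

After calling `setup_ssh_key`, running `ssh localhost` should log in without asking for a password, which is what the Hadoop start scripts rely on.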

2 Install Hadoop

2.1 Download Hadoop

Hadoop: mirror address

Follow the link: HTTP

http:mirror

Choose a version

Hadoop: alternate address

2.2 Extract to the target directory

tar -zxvf hadoop-3.0.2.tar.gz -C /xindaqi/hadoop
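Note that `tar -C` fails if the target directory does not exist yet. A small sketch that creates it first is shown below; `extract_hadoop` is a hypothetical helper name, and the example paths are the ones from the command above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: create the target directory, then extract the archive into it.
extract_hadoop() {
    local archive="$1" target="$2"
    mkdir -p "$target"
    tar -zxf "$archive" -C "$target"
}

# Example (paths taken from the step above):
#   extract_hadoop hadoop-3.0.2.tar.gz /xindaqi/hadoop
```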

2.3 Configure the Hadoop files

2.3.1 core-site.xml

<configuration>

<property>

<name>hadoop.tmp.dir</name>

<value>file:/home/xdq/xinPrj/hadoop/hadoop-3.0.2/tmp</value>

<description>A base for other temporary directories.</description>

</property>

</configuration>

2.3.2 hadoop-env.sh

export JAVA_HOME=/usr/java/jdk1.8.0_191
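If the JDK lives somewhere other than the path above, the value for JAVA_HOME can usually be derived from the resolved location of the `java` binary. This is a sketch under that assumption; `java_home_from_bin` is a hypothetical helper name, and it only strips the trailing `/bin/java` (and a `/jre` level if present).

```shell
#!/usr/bin/env bash
# Hypothetical helper: turn the full path of the java binary into a JAVA_HOME value.
java_home_from_bin() {
    local java_bin="$1"
    local home="${java_bin%/bin/java}"   # drop the trailing /bin/java
    home="${home%/jre}"                  # drop a trailing /jre if the binary sits in jre/bin
    printf '%s\n' "$home"
}

# Example: resolve symlinks first, then derive the directory.
#   java_home_from_bin "$(readlink -f "$(command -v java)")"
```

The printed directory is what would go after `export JAVA_HOME=` in hadoop-env.sh.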

2.3.3 hdfs-site.xml

<configuration>

<property>

<name>dfs.replication</name>

<value>1</value>

</property>

<property>

<name>dfs.permissions.enabled</name>

<value>false</value>

</property>

<property>

<name>dfs.namenode.name.dir</name>

<value>file:/home/xdq/xinPrj/hadoop/hadoop-3.0.2/tmp/dfs/name</value>

</property>

<property>

<name>dfs.datanode.data.dir</name>

<value>file:/home/xdq/xinPrj/hadoop/hadoop-3.0.2/tmp/dfs/data</value>

</property>

<property>

<name>dfs.namenode.rpc-address</name>

<value>localhost:8080</value>

</property>

<property>

<name>dfs.namenode.secondary.http-address</name>

<value>localhost:9001</value>

</property>

<property>

<name>dfs.webhdfs.enabled</name>

<value>true</value>

</property>

</configuration>
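A misspelled property name is silently ignored, so it can help to ask Hadoop which values it actually resolves. The sketch below uses `hdfs getconf -confKey` for that; `show_hdfs_conf` is a hypothetical helper name, and it assumes the `hdfs` command from hadoop-3.0.2/bin is on PATH.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print key=value for each configuration key as Hadoop resolves it.
show_hdfs_conf() {
    local key
    for key in "$@"; do
        printf '%s=%s\n' "$key" "$(hdfs getconf -confKey "$key")"
    done
}

# Example:
#   show_hdfs_conf hadoop.tmp.dir dfs.replication dfs.namenode.name.dir dfs.datanode.data.dir
```

If a printed value is still the built-in default rather than the one written in the XML files, the property name or the file location is probably wrong.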

2.3.4 log4j.properties

log4j.logger.org.apache.hadoop.util.NativeCodeLoader=ERROR

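2.3.5 yarn-site.xml

Note: mapreduce.framework.name is a MapReduce property and is conventionally set in mapred-site.xml.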

<configuration>

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

</configuration>

2.3.6 Create folders

Create the storage folders under hadoop-3.0.2, with this directory structure:

-- hadoop-3.0.2
   |
   `-- tmp
       `-- dfs
           |-- data
           `-- name
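The folder layout above can be created in one step. The sketch below does that; `make_hdfs_dirs` is a hypothetical helper name and `HADOOP_HOME` is an assumed variable pointing at the hadoop-3.0.2 directory. The commented `hdfs namenode -format` line is an addition not covered in the steps above: a fresh NameNode directory normally has to be formatted once before HDFS is started for the first time.

```shell
#!/usr/bin/env bash
# Hypothetical helper: create the NameNode and DataNode storage directories.
make_hdfs_dirs() {
    local hadoop_home="$1"
    mkdir -p "$hadoop_home/tmp/dfs/name" "$hadoop_home/tmp/dfs/data"
}

# Example (HADOOP_HOME is an assumed variable):
#   make_hdfs_dirs "$HADOOP_HOME"
#   "$HADOOP_HOME/bin/hdfs" namenode -format   # first start only; reformatting erases HDFS metadata
```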

3 Testing

The start and stop scripts for each Hadoop component are in the sbin directory, which has the following structure:

-- hadoop-3.0.2
   |-- sbin
   |   |-- FederationStateStore
   |   |   |-- MySQL
   |   |   `-- SQLServer
   |   |-- distribute-exclude.sh
   |   |-- hadoop-daemon.sh
   |   |-- hadoop-daemons.sh
   |   |-- httpfs.sh
   |   |-- kms.sh
   |   |-- mr-jobhistory-daemon.sh
   |   |-- refresh-namenodes.sh
   |   |-- start-all.cmd
   |   |-- start-all.sh
   |   |-- start-balancer.sh
   |   |-- start-dfs.cmd
   |   |-- start-dfs.sh
   |   |-- start-secure-dns.sh
   |   |-- start-yarn.cmd
   |   |-- start-yarn.sh
   |   |-- stop-all.cmd
   |   |-- stop-all.sh
   |   |-- stop-balancer.sh
   |   |-- stop-dfs.cmd
   |   |-- stop-dfs.sh
   |   |-- stop-secure-dns.sh
   |   |-- stop-yarn.cmd
   |   |-- stop-yarn.sh
   |   |-- workers.sh
   |   |-- yarn-daemon.sh
   |   `-- yarn-daemons.sh

3.1 Start and stop HDFS

3.1.1 Start

start-dfs.sh

3.1.2 Stop

stop-dfs.sh

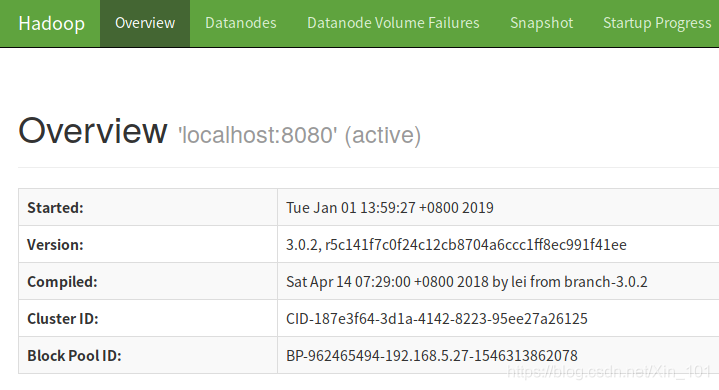
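One way to confirm that start-dfs.sh actually brought the daemons up is to inspect the output of `jps`, which ships with the JDK. The following sketch reports any expected daemon that is missing; `missing_daemons` is a hypothetical helper name, and the three daemon names in the example are the ones a single-node HDFS normally runs.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read jps output on stdin and print each expected daemon that is absent.
missing_daemons() {
    local listing name
    listing="$(cat)"
    for name in "$@"; do
        # jps prints "<pid> <MainClass>" per line; compare the class name as a whole field
        # so that NameNode does not match SecondaryNameNode.
        printf '%s\n' "$listing" \
            | awk -v n="$name" '$2 == n { found = 1 } END { exit !found }' \
            || printf '%s\n' "$name"
    done
}

# Example:
#   jps | missing_daemons NameNode DataNode SecondaryNameNode
```

Empty output means every listed daemon was found; otherwise the logs directory under hadoop-3.0.2 is a reasonable place to look next.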

3.1.3 Web test

localhost:9870

Hadoop file system
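The same check can be made from the terminal instead of a browser. This is a small sketch that assumes `curl` is installed; `web_ui_up` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed if the given URL answers over HTTP, fail otherwise.
web_ui_up() {
    # -s silent, -f treat HTTP errors as failure, -o discard the body,
    # --max-time bounds how long to wait.
    curl -sf -o /dev/null --max-time 5 "$1"
}

# Example:
#   web_ui_up http://localhost:9870 && echo "NameNode web UI is reachable"
```

The helper takes any URL, so it can be pointed at other Hadoop web interfaces as well.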

3.2 Start and stop YARN

3.2.1 Start

start-yarn.sh

3.2.2 Stop

stop-yarn.sh

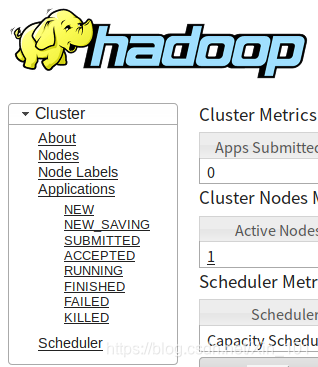

3.2.3 Web test

localhost:8088

Hadoop resource management system

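4 Summary

- In Hadoop 3.x and later, the HDFS web UI port is 9870;

- In Hadoop 2.x, the HDFS web UI port is 50070;

- In both Hadoop 2.x and 3.x, the YARN web UI port is 8088;

- Compared with deploying Hadoop on a Mac, the Ubuntu deployment went smoothly, apart from the change to port 9870;

- (Good online resources matter a lot; finding the right ones saves many detours! Ha ha!)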
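The port differences listed above can be captured in a tiny lookup, which is handy in scripts that must work against both major versions. This is only a sketch of the defaults named in the summary; `namenode_ui_port` is a hypothetical helper name, and a cluster that overrides the HTTP address in its configuration will not match it.

```shell
#!/usr/bin/env bash
# Hypothetical helper: default HDFS web UI port for a Hadoop version string such as 3.0.2.
namenode_ui_port() {
    local major="${1%%.*}"   # keep only the part before the first dot
    if [ "$major" -ge 3 ]; then
        echo 9870
    else
        echo 50070
    fi
}

# The YARN web UI default is 8088 for both 2.x and 3.x, so it needs no lookup.
# Example:
#   namenode_ui_port 3.0.2
```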

For deploying Hadoop on a Mac, see: Deploying a Hadoop environment on Mac

[References]

[1] https://blog.csdn.net/weixin_42001089/article/details/81865101

[2] https://blog.csdn.net/Xin_101/article/details/85225604