前提:配置JDK1.8环境,并配置相应的环境变量,JAVA_HOME

一.Hadoop的安装

1.1 下载Hadoop (2.6.0) http://hadoop.apache.org/releases.html

1.1.1 下载对应版本的winutils(https://github.com/steveloughran/winutils)并将其bin目录下的文件,全部复制到hadoop的安装目录的bin文件下,进行替换。

1.2 解压hadoop-2.6.0.tar.gz到指定目录,并配置相应的环境变量。

1.2.1 新建HADOOP_HOME环境变量,并将其添加到path目录(;%HADOOP_HOME%\bin)

1.2.2 打开cmd窗口,输入hadoop version 命令进行验证,环境变量是否正常

1.3 对Hadoop进行配置:(无同名配置文件,可通过其同名template文件复制,再进行编辑)

1.3.1 编辑core-site.xml文件:(在hadoop的安装目录下创建workplace文件夹,在workplace下创建tmp和name文件夹)

<configuration> <property> <name>hadoop.tmp.dir</name> <value>/E:/software/hadoop-2.6.0/workplace/tmp</value> </property> <property> <name>dfs.name.dir</name> <value>/E:/software/hadoop-2.6.0/workplace/name</value> </property> <property> <name>fs.default.name</name> <value>hdfs://127.0.0.1:9000</value> </property> <property> <name>hadoop.proxyuser.gl.hosts</name> <value>*</value> </property> <property> <name>hadoop.proxyuser.gl.groups</name> <value>*</value> </property> </configuration>

1.3.4 编辑hdfs-site.xml文件

<configuration> <!-- 这个参数设置为1,因为是单机版hadoop --> <property> <name>dfs.replication</name> <value>1</value> </property> <property> <name>dfs.namenode.name.dir</name> <value>file:/hadoop/data/dfs/namenode</value> </property> <property> <name>dfs.datanode.data.dir</name> <value>file:/hadoop/data/dfs/datanode</value> </property> </configuration>

1.3.5 编辑mapred-site.xml

<configuration> <property> <name>mapreduce.framework.name</name> <value>yarn</value> </property> <property> <name>mapred.job.tracker</name> <value>127.0.0.1:9001</value> </property> </configuration>

1.3.5 编辑yarn-site.xml文件

<configuration> <property> <name>yarn.nodemanager.aux-services</name> <value>mapreduce_shuffle</value> </property> <property> <name>yarn.nodemanager.aux-services.mapreduce.shuffle.class</name> <value>org.apache.hadoop.mapred.ShuffleHandler</value> </property> </configuration>

1.4 初始化并启动Hadoop

1.4.1 在cmd窗口输入: hadoop namenode –format(或者hdfs namenode -format) 命令,对节点进行初始化

1.4.2 进入Hadoop安装目录下的sbin:(E:\software\hadoop-2.6.0\sbin),点击运行 start-all.bat 批处理文件,启动hadoop

1.4.3 验证hadoop是否成功启动:新建cmd窗口,输入:jps 命令,查看所有运行的服务,如果NameNode、NodeManager、DataNode、ResourceManager服务都存在,则说明启动成功。

1.5 文件传输测试

1.5.1 创建输入目录,新建cmd窗口,输入如下命令:(hdfs://localhost:9000 该路径为core-site.xml文件中配置的 fs.default.name 路径)

hadoop fs -mkdir hdfs://localhost:9000/user/

hadoop fs -mkdir hdfs://localhost:9000/user/wcinput

1.5.2 上传数据到指定目录:在cmd窗口输入如下命令:

hadoop fs -put E:\temp\MM.txt hdfs://localhost:9000/user/wcinput

hadoop fs -put E:\temp\react文档.txt hdfs://localhost:9000/user/wcinput

1.5.3 查看文件是否上传成功,在cmd窗口输入如下命令:

hadoop fs -ls hdfs://localhost:9000/user/wcinput

1.5.4 前台页面显示情况:

1.5.4 在前台查看hadoop的运行情况:(资源管理界面:http://localhost:8088/)

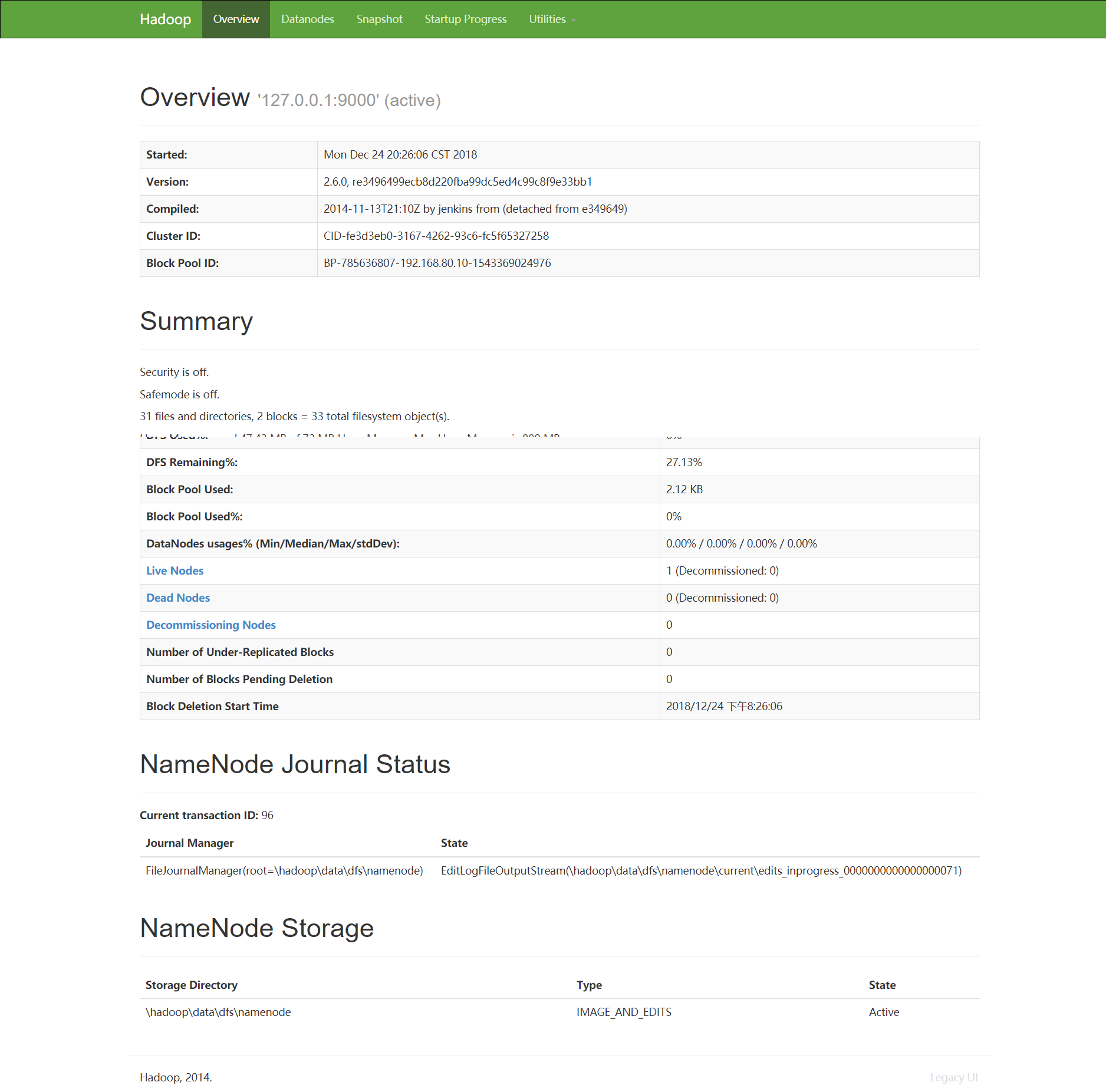

1.5.5 节点管理界面(http://localhost:50070/)

1.5.5 节点管理界面(http://localhost:50070/)

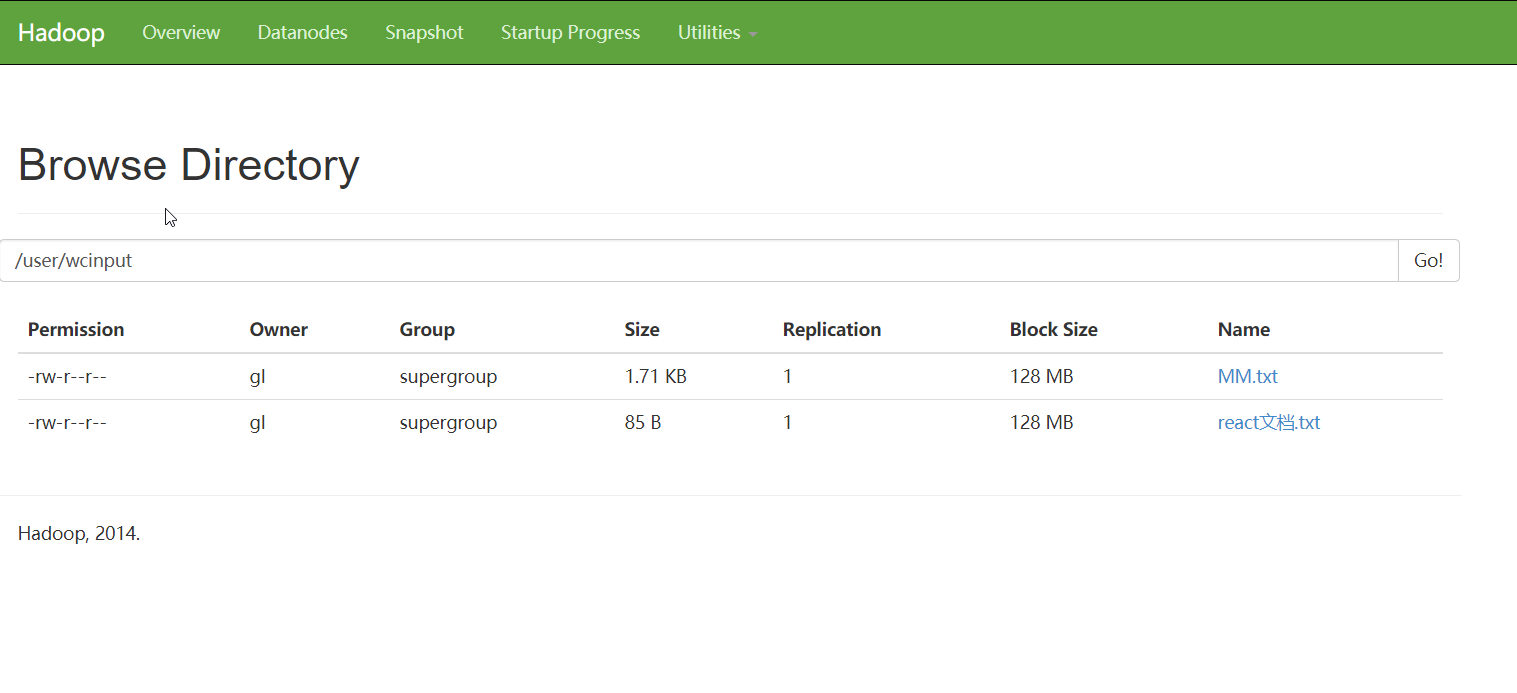

1.5.6 通过前台查看Hadoop的文件系统:(点击进入Utilities下拉菜单,再点击Browse the file system,依次进入user和wcinput,即可查看到上传的文件列表)如下图:

二. 安装并配置Hive

2.1 下载Hive 地址:http://mirror.bit.edu.cn/apache/hive/

2.2 将apache-hive-2.2.0-bin.tar.gz解压到指定的安装目录,并配置环境变量

2.2.1 新建HIVE_HOME环境变量,并将其添加到path目录(;%HIVE_HOME%\bin)

2.2.2 打开cmd窗口,输入hive version 命令进行验证环境变量是否正常

2.3 配置hive-site.xml文件(不解释,可以直接看配置的相关描述)

<property> <name>hive.metastore.warehouse.dir</name> <value>/user/hive/warehouse</value> <description>location of default database for the warehouse</description> </property> <property> <name>hive.exec.scratchdir</name> <value>/tmp/hive</value> <description>HDFS root scratch dir for Hive jobs which gets created with write all (733) permission. For each connecting user, an HDFS scratch dir: ${hive.exec.scratchdir}/<username> is created, with ${hive.scratch.dir.permission}.</description> </property> <property> <name>hive.exec.local.scratchdir</name> <value>E:/software/apache-hive-2.2.0-bin/scratch_dir</value> <description>Local scratch space for Hive jobs</description> </property> <property> <name>hive.downloaded.resources.dir</name> <value>E:/software/apache-hive-2.2.0-bin/resources_dir/${hive.session.id}_resources</value> <description>Temporary local directory for added resources in the remote file system.</description> </property> <property> <name>hive.querylog.location</name> <value>E:/software/apache-hive-2.2.0-bin/querylog_dir</value> <description>Location of Hive run time structured log file</description> </property> <property> <name>hive.server2.logging.operation.log.location</name> <value>E:/software/apache-hive-2.2.0-bin/operation_dir</value> <description>Top level directory where operation logs are stored if logging functionality is enabled</description> </property> <property> <name>javax.jdo.option.ConnectionURL</name> <value>jdbc:mysql://127.0.0.1:3306/hive?createDatabaseIfNotExist=true</value> <description> JDBC connect string for a JDBC metastore. To use SSL to encrypt/authenticate the connection, provide database-specific SSL flag in the connection URL. For example, jdbc:postgresql://myhost/db?ssl=true for postgres database. </description> </property> <property> <name>javax.jdo.option.ConnectionDriverName</name> <value>com.mysql.jdbc.Driver</value> <description>Driver class name for a JDBC metastore</description> </property> <property> <name>javax.jdo.option.ConnectionUserName</name> <value>root</value> <description>Username to use against metastore database</description> </property> <property> <name>javax.jdo.option.ConnectionPassword</name> <value>123</value> <description>password to use against metastore database</description> </property> <property> <name>hive.metastore.schema.verification</name> <value>false</value> <description> Enforce metastore schema version consistency. True: Verify that version information stored in is compatible with one from Hive jars. Also disable automatic schema migration attempt. Users are required to manually migrate schema after Hive upgrade which ensures proper metastore schema migration. (Default) False: Warn if the version information stored in metastore doesn't match with one from in Hive jars. </description> </property> <!--配置用户名和密码--> <property> <name>hive.jdbc_passwd.auth.zhangsan</name> <value>123</value> </property>

2.4 在安装目录下创建配置文件中相应的文件夹:

E:\software\apache-hive-2.2.0-bin\scratch_dir

E:\software\apache-hive-2.2.0-bin\resources_dir

E:\software\apache-hive-2.2.0-bin\querylog_dir

E:\software\apache-hive-2.2.0-bin\operation_dir

2.5 对Hive元数据库进行初始化:(将mysql-connector-java-*.jar拷贝到安装目录lib下)

进入安装目录:apache-hive-2.2.0-bin/bin/,在新建cmd窗口执行如下命令(MySql数据库中会产生相应的用户和数据表)

hive --service schematool

(程序会自动进入***apache-hive-2.2.0-bin\scripts\metastore\upgrade\mysql\文件中读取对应版本的sql文件)

2.6 启动Hive ,新建cmd窗口,并输入以下命令:

启动metastore : hive --service metastore

启动hiveserver2:hive --service hiveserver2

(HiveServer2(HS2)是服务器接口,使远程客户端执行对hive的查询和检索结果(更详细的介绍这里)。目前基于Thrift RPC的实现,是HiveServer的改进版本,并支持多客户端并发和身份验证。

它旨在为JDBC和ODBC等开放API客户端提供更好的支持。)

2.7 验证:

新建cmd窗口,并输入以下 hive 命令,进入hive库交互操作界面:

hive> create table test_table(id INT, name string);

hive> create table student(id int,name string,gender int,address string);

hive> show tables;

student

test_table

2 rows selected (1.391 seconds)

三. 通过Kettle 6.0 连接Hadoop2.6.0 + Hive 2.2.0 大数据环境进行数据迁移

(待续)