Storm, Kafka and ZooKeeper cluster

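Storm is built for stream processing and handles a steady, continuous inflow of data very well. In practice, however, most data does not arrive at an even rate: the volume rises and falls over time. Batch processing is clearly a poor fit for this, but feeding such bursts straight into Storm for real-time computation can congest it and may even bring the servers down. In this situation Kafka is a very good choice of message queue: it absorbs the uneven inflow and turns it into a steady stream of messages that Storm can consume, which is what makes stable stream processing possible.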

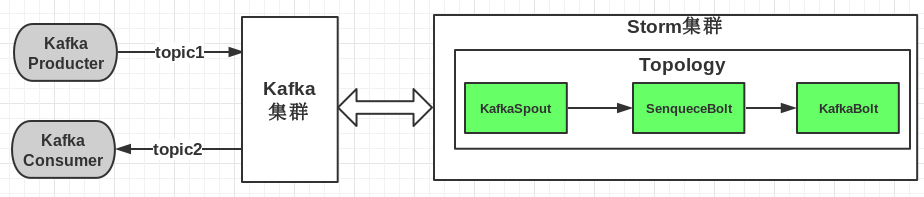

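Combining Storm with Kafka essentially chains together the computation models discussed earlier: data first enters Kafka from producers, Storm then consumes it as the consumer, and the processed results are output or saved to files, databases, distributed storage and so on, as shown in the diagram above.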

This image is taken from the blog post at http://www.cnblogs.com/tovin/p/3974417.html; many thanks to its author.

I. Preparation before installation:

(1) Prepare three machines running CentOS 7 (used below as 192.168.164.133, 192.168.164.134 and 192.168.164.135, with the hostnames hadoop1, hadoop2 and hadoop3).

(2) JDK: jdk-8u191-linux-x64.tar.gz, available from the Oracle website: wget https://download.oracle.com/otn-pub/java/jdk/8u191-b12/2787e4a523244c269598db4e85c51e0c/jdk-8u191-linux-x64.tar.gz

(3) ZooKeeper: zookeeper-3.4.13  wget http://archive.apache.org/dist/zookeeper/zookeeper-3.4.13/zookeeper-3.4.13.tar.gz

(4) Kafka: kafka_2.11-2.0.0  wget http://mirrors.hust.edu.cn/apache/kafka/2.0.0/kafka_2.11-2.0.0.tgz

(5) Storm: apache-storm-1.2.2.tar.gz  wget http://www.apache.org/dist/storm/apache-storm-1.2.2/apache-storm-1.2.2.tar.gz

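(6) Extract the archives (the paths below assume everything lives under /usr/local/java) and configure the environment variables: vi /etc/profile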

# JAVA_HOME

export JAVA_HOME=/usr/local/java/jdk1.8.0_191

export CLASSPATH=.:$JAVA_HOME/jre/lib/rt.jar:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar

export ZOOKEEPER_HOME=/usr/local/java/zookeeper-3.4.13

export PATH=$PATH:$ZOOKEEPER_HOME/bin/:$JAVA_HOME/bin

#KAFKA_HOME

export KAFKA_HOME=/usr/local/java/kafka_2.11-2.0.0

export PATH=$PATH:$KAFKA_HOME/bin

# STORM_HOME

export STORM_HOME=/usr/local/java/apache-storm-1.2.2

export PATH=.:${JAVA_HOME}/bin:${ZOOKEEPER_HOME}/bin:${STORM_HOME}/bin:$PATH

Reload the profile so the variables take effect in the current shell: source /etc/profile
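Before moving on, it is worth confirming that each variable points at a real installation. A minimal POSIX shell sketch follows; check_home and check_cluster_env are hypothetical helper names, and the only assumption is that each *_HOME directory contains a bin/ subdirectory, as the JDK, ZooKeeper, Kafka and Storm archives do.

```sh
# Hypothetical helper: check one NAME/VALUE pair taken from /etc/profile.
check_home() {
  if [ -z "$2" ]; then
    echo "$1 is not set" >&2
    return 1
  fi
  if [ ! -d "$2/bin" ]; then
    echo "$1=$2 has no bin/ directory" >&2
    return 1
  fi
}

# Hypothetical helper: check all four *_HOME variables and report every
# problem instead of stopping at the first one.
check_cluster_env() {
  local rc=0
  check_home JAVA_HOME "${JAVA_HOME:-}" || rc=1
  check_home ZOOKEEPER_HOME "${ZOOKEEPER_HOME:-}" || rc=1
  check_home KAFKA_HOME "${KAFKA_HOME:-}" || rc=1
  check_home STORM_HOME "${STORM_HOME:-}" || rc=1
  return "$rc"
}
```

Run it in the same shell after reloading the profile: source /etc/profile && check_cluster_env && echo "environment OK".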

II. ZooKeeper cluster installation (required on all three machines)

(1)tar -zxvf zookeeper-3.4.13.tar.gz

(2) cd /usr/local/java/zookeeper-3.4.13/conf to enter the conf directory of the extracted ZooKeeper

(3) cp zoo_sample.cfg zoo.cfg to copy the sample file to zoo.cfg

(4) Configure zoo.cfg:

# The number of milliseconds of each tick

tickTime=2000

# The number of ticks that the initial

# synchronization phase can take

initLimit=10

# The number of ticks that can pass between

# sending a request and getting an acknowledgement

syncLimit=5

# the directory where the snapshot is stored.

# do not use /tmp for storage, /tmp here is just

# example sakes.

dataDir=/usr/local/java/zookeeper-3.4.13/dateDir

dataLogDir=/usr/local/java/zookeeper-3.4.13/logs

# the port at which the clients will connect

clientPort=2181

# the maximum number of client connections.

# increase this if you need to handle more clients

#maxClientCnxns=60

#

# Be sure to read the maintenance section of the

# administrator guide before turning on autopurge.

#

# http://zookeeper.apache.org/doc/current/zookeeperAdmin.html#sc_maintenance

#

# The number of snapshots to retain in dataDir

#autopurge.snapRetainCount=3

# Purge task interval in hours

# Set to "0" to disable auto purge feature

#autopurge.purgeInterval=1

server.1 = 0.0.0.0:2888:3888

server.2 = 192.168.164.134:2888:3888

server.3 = 192.168.164.135:2888:3888

(5) Create the data directory: mkdir -p /usr/local/java/zookeeper-3.4.13/dateDir

(6) Create the transaction log directory: mkdir -p /usr/local/java/zookeeper-3.4.13/logs

(7) Write this machine's id: echo "1" > /usr/local/java/zookeeper-3.4.13/dateDir/myid

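(8) Copy the zookeeper-3.4.13 directory to the other two machines, entering the password when prompted: scp -r zookeeper-3.4.13 root@<other-machine-IP>:/usr/local/java/

(9) On the machines for server.2 and server.3, change /usr/local/java/zookeeper-3.4.13/dateDir/myid to 2 and 3 respectively. Because zoo.cfg was copied from the first machine, also change server.1 on those two machines from 0.0.0.0 to 192.168.164.133; a 0.0.0.0 entry is only valid for a machine's own server.N line.

When the VMs copy files to each other for the first time, connect once to each new IP so its host key is accepted, then enter the password:

ssh -o StrictHostKeyChecking=no root@<other-machine-IP>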

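(10) Start ZooKeeper on all three machines with ./bin/zkServer.sh start (from the ZooKeeper directory). Errors are logged while the other nodes are still down; once all three have started, check the status from the bin directory:

./zkServer.sh status

Note: this shows the state of the ZooKeeper cluster. Mode: follower or Mode: leader means the node is working, and exactly one of the three nodes should report leader.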

[root@hadoop bin]# ./zkServer.sh status

ZooKeeper JMX enabled by default

Using config: /usr/local/java/zookeeper-3.4.13/bin/../conf/zoo.cfg

Mode: follower

[root@hadoop bin]#

[root@hadoop bin]# ./zkServer.sh status

ZooKeeper JMX enabled by default

Using config: /usr/local/java/zookeeper-3.4.13/bin/../conf/zoo.cfg

Mode: leader

[root@hadoop bin]#

[root@hadoop bin]# ./zkServer.sh status

ZooKeeper JMX enabled by default

Using config: /usr/local/java/zookeeper-3.4.13/bin/../conf/zoo.cfg

Mode: follower

[root@hadoop bin]#
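Steps (5) to (9) differ between machines only in the node id, so they can be scripted. The sketch below assumes node N is the Nth IP passed in and, as in the original zoo.cfg, lets each node's own entry bind to 0.0.0.0 while every other entry uses the peer's real IP. zk_server_lines, zk_init_node and zk_mode are hypothetical helpers, and the dateDir name is kept as configured above.

```sh
# Hypothetical helper: print the server.N lines of zoo.cfg for node $1.
# The remaining arguments are the node IPs in myid order (node N = Nth IP);
# the node's own entry binds to 0.0.0.0, as in the original zoo.cfg.
zk_server_lines() {
  local self="$1" id=0 ip host
  shift
  for ip in "$@"; do
    id=$((id + 1))
    host="$ip"
    if [ "$id" -eq "$self" ]; then
      host=0.0.0.0
    fi
    echo "server.${id}=${host}:2888:3888"
  done
}

# Hypothetical helper: steps (5)-(7) for ZooKeeper home $1 and node id $2.
zk_init_node() {
  local zk_home="$1" id="$2"
  mkdir -p "$zk_home/dateDir" "$zk_home/logs"
  echo "$id" > "$zk_home/dateDir/myid"   # straight quotes: myid holds only the number
}

# Hypothetical helper: read `zkServer.sh status` output on stdin, print the mode.
zk_mode() {
  sed -n 's/^Mode: *//p'
}
```

On machine 2, for example, run zk_init_node /usr/local/java/zookeeper-3.4.13 2 and replace the three server.N lines in zoo.cfg with the output of zk_server_lines 2 192.168.164.133 192.168.164.134 192.168.164.135. After startup, ./bin/zkServer.sh status 2>&1 | zk_mode should print leader on exactly one machine and follower on the other two.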

III. Kafka cluster installation (required on all three machines)

(1)tar -zxvf kafka_2.11-2.0.0.tgz

(2) cd /usr/local/java/kafka_2.11-2.0.0/config to enter the extracted config directory

(3) vi server.properties to edit the configuration

(4)server.properties

# Licensed to the Apache Software Foundation (ASF) under one or more

# contributor license agreements. See the NOTICE file distributed with

# this work for additional information regarding copyright ownership.

# The ASF licenses this file to You under the Apache License, Version 2.0

# (the "License"); you may not use this file except in compliance with

# the License. You may obtain a copy of the License at

#

# http://www.apache.org/licenses/LICENSE-2.0

#

# Unless required by applicable law or agreed to in writing, software

# distributed under the License is distributed on an "AS IS" BASIS,

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

# see kafka.server.KafkaConfig for additional details and defaults

############################# Server Basics #############################

# The id of the broker. This must be set to a unique integer for each broker.

broker.id=1

############################# Socket Server Settings #############################

# The address the socket server listens on. It will get the value returned from

# java.net.InetAddress.getCanonicalHostName() if not configured.

# FORMAT:

# listeners = listener_name://host_name:port

# EXAMPLE:

# listeners = PLAINTEXT://your.host.name:9092

listeners=PLAINTEXT://:9092

# Hostname and port the broker will advertise to producers and consumers. If not set,

# it uses the value for "listeners" if configured. Otherwise, it will use the value

# returned from java.net.InetAddress.getCanonicalHostName().

#advertised.listeners=PLAINTEXT://your.host.name:9092

# Maps listener names to security protocols, the default is for them to be the same. See the config documentation for more details

#listener.security.protocol.map=PLAINTEXT:PLAINTEXT,SSL:SSL,SASL_PLAINTEXT:SASL_PLAINTEXT,SASL_SSL:SASL_SSL

# The number of threads that the server uses for receiving requests from the network and sending responses to the network

num.network.threads=3

# The number of threads that the server uses for processing requests, which may include disk I/O

num.io.threads=8

# The send buffer (SO_SNDBUF) used by the socket server

socket.send.buffer.bytes=102400

# The receive buffer (SO_RCVBUF) used by the socket server

socket.receive.buffer.bytes=102400

# The maximum size of a request that the socket server will accept (protection against OOM)

socket.request.max.bytes=104857600

############################# Log Basics #############################

# A comma separated list of directories under which to store log files

log.dirs=/usr/local/java/kafka_2.11-2.0.0/logs

# The default number of log partitions per topic. More partitions allow greater

# parallelism for consumption, but this will also result in more files across

# the brokers.

num.partitions=1

# The number of threads per data directory to be used for log recovery at startup and flushing at shutdown.

# This value is recommended to be increased for installations with data dirs located in RAID array.

num.recovery.threads.per.data.dir=1

############################# Internal Topic Settings #############################

# The replication factor for the group metadata internal topics "__consumer_offsets" and "__transaction_state"

# For anything other than development testing, a value greater than 1 is recommended for to ensure availability such as 3.

offsets.topic.replication.factor=1

transaction.state.log.replication.factor=1

transaction.state.log.min.isr=1

############################# Log Flush Policy #############################

# Messages are immediately written to the filesystem but by default we only fsync() to sync

# the OS cache lazily. The following configurations control the flush of data to disk.

# There are a few important trade-offs here:

# 1. Durability: Unflushed data may be lost if you are not using replication.

# 2. Latency: Very large flush intervals may lead to latency spikes when the flush does occur as there will be a lot of data to flush.

# 3. Throughput: The flush is generally the most expensive operation, and a small flush interval may lead to excessive seeks.

# The settings below allow one to configure the flush policy to flush data after a period of time or

# every N messages (or both). This can be done globally and overridden on a per-topic basis.

# The number of messages to accept before forcing a flush of data to disk

#log.flush.interval.messages=10000

# The maximum amount of time a message can sit in a log before we force a flush

#log.flush.interval.ms=1000

############################# Log Retention Policy #############################

# The following configurations control the disposal of log segments. The policy can

# be set to delete segments after a period of time, or after a given size has accumulated.

# A segment will be deleted whenever *either* of these criteria are met. Deletion always happens

# from the end of the log.

# The minimum age of a log file to be eligible for deletion due to age

log.retention.hours=168

# A size-based retention policy for logs. Segments are pruned from the log unless the remaining

# segments drop below log.retention.bytes. Functions independently of log.retention.hours.

#log.retention.bytes=1073741824

# The maximum size of a log segment file. When this size is reached a new log segment will be created.

log.segment.bytes=1073741824

# The interval at which log segments are checked to see if they can be deleted according

# to the retention policies

log.retention.check.interval.ms=300000

############################# Zookeeper #############################

# Zookeeper connection string (see zookeeper docs for details).

# This is a comma separated host:port pairs, each corresponding to a zk

# server. e.g. "127.0.0.1:3000,127.0.0.1:3001,127.0.0.1:3002".

# You can also append an optional chroot string to the urls to specify the

# root directory for all kafka znodes.

zookeeper.connect=hadoop1:2181,hadoop2:2181,hadoop3:2181/kafka

# Timeout in ms for connecting to zookeeper

zookeeper.connection.timeout.ms=6000

############################# Group Coordinator Settings #############################

# The following configuration specifies the time, in milliseconds, that the GroupCoordinator will delay the initial consumer rebalance.

# The rebalance will be further delayed by the value of group.initial.rebalance.delay.ms as new members join the group, up to a maximum of max.poll.interval.ms.

# The default value for this is 3 seconds.

# We override this to 0 here as it makes for a better out-of-the-box experience for development and testing.

# However, in production environments the default value of 3 seconds is more suitable as this will help to avoid unnecessary, and potentially expensive, rebalances during application startup.

group.initial.rebalance.delay.ms=0

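(5) Create the log directory: mkdir -p /usr/local/java/kafka_2.11-2.0.0/logs

(6) Copy the kafka_2.11-2.0.0 directory to the other two machines, entering the password when prompted: scp -r kafka_2.11-2.0.0 root@<other-machine-IP>:/usr/local/java/

(7) On the other two machines, change broker.id in server.properties to broker.id=2 and broker.id=3 respectively; every broker needs a unique id.

ssh -o StrictHostKeyChecking=no root@<other-machine-IP>

(8) Start Kafka on each of the three machines from the Kafka directory (ZooKeeper must already be running):

[root@hadoop java]# cd kafka_2.11-2.0.0

[root@hadoop kafka_2.11-2.0.0]# ./bin/kafka-server-start.sh -daemon ./config/server.properties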
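Step (7) and a first end-to-end check can be scripted as well. This is a hedged sketch for Kafka 2.0: kafka_set_broker_id and kafka_smoke_test are hypothetical names, the topic name smoke-test is illustrative, sed -i assumes GNU sed (as shipped with CentOS 7), and the kafka-*.sh scripts are expected on PATH through KAFKA_HOME/bin. In Kafka 2.0, kafka-topics.sh still talks to ZooKeeper directly, so its --zookeeper string has to include the /kafka chroot from zookeeper.connect.

```sh
# Hypothetical helper: step (7), give a copied server.properties a unique
# broker.id. Uses GNU sed -i (as on CentOS 7).
kafka_set_broker_id() {
  local props="$1" id="$2"
  sed -i "s/^broker\.id=.*/broker.id=${id}/" "$props"
}

# Hypothetical smoke test for Kafka 2.0 (scripts on PATH via KAFKA_HOME/bin):
# create a replicated topic through the /kafka chroot, produce one message,
# then read it back. The topic name "smoke-test" is illustrative.
kafka_smoke_test() {
  local zk="hadoop1:2181,hadoop2:2181,hadoop3:2181/kafka"
  local brokers="hadoop1:9092,hadoop2:9092,hadoop3:9092"
  kafka-topics.sh --create --zookeeper "$zk" \
    --replication-factor 3 --partitions 3 --topic smoke-test
  echo "hello" | kafka-console-producer.sh --broker-list "$brokers" --topic smoke-test
  kafka-console-consumer.sh --bootstrap-server "$brokers" --topic smoke-test \
    --from-beginning --max-messages 1
}
```

On machine 2, for instance: kafka_set_broker_id /usr/local/java/kafka_2.11-2.0.0/config/server.properties 2. Once all three brokers are up, kafka_smoke_test should echo hello back from the consumer.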

IV. Storm cluster installation (required on all three machines)

(1)tar -zxvf apache-storm-1.2.2.tar.gz

(2) cd /usr/local/java/apache-storm-1.2.2/conf to enter the extracted conf directory

(3) vi storm.yaml to edit the configuration

(4)storm.yaml

# Licensed to the Apache Software Foundation (ASF) under one

# or more contributor license agreements. See the NOTICE file

# distributed with this work for additional information

# regarding copyright ownership. The ASF licenses this file

# to you under the Apache License, Version 2.0 (the

# "License"); you may not use this file except in compliance

# with the License. You may obtain a copy of the License at

#

# http://www.apache.org/licenses/LICENSE-2.0

#

# Unless required by applicable law or agreed to in writing, software

# distributed under the License is distributed on an "AS IS" BASIS,

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

########### These MUST be filled in for a storm configuration

storm.zookeeper.servers:

- "hadoop1"

- "hadoop2"

- "hadoop3"

storm.zookeeper.port: 2181

nimbus.seeds: ["hadoop1"]

storm.local.dir: "/usr/local/java/apache-storm-1.2.2/logs"

supervisor.slots.ports:

- 6700

- 6701

- 6702

- 6703

# nimbus.seeds: ["host1", "host2", "host3"]

#

#

# ##### These may optionally be filled in:

#

## List of custom serializations

# topology.kryo.register:

# - org.mycompany.MyType

# - org.mycompany.MyType2: org.mycompany.MyType2Serializer

#

## List of custom kryo decorators

# topology.kryo.decorators:

# - org.mycompany.MyDecorator

#

## Locations of the drpc servers

# drpc.servers:

# - "server1"

# - "server2"

## Metrics Consumers

## max.retain.metric.tuples

## - task queue will be unbounded when max.retain.metric.tuples is equal or less than 0.

## whitelist / blacklist

## - when none of configuration for metric filter are specified, it'll be treated as 'pass all'.

## - you need to specify either whitelist or blacklist, or none of them. You can't specify both of them.

## - you can specify multiple whitelist / blacklist with regular expression

## expandMapType: expand metric with map type as value to multiple metrics

## - set to true when you would like to apply filter to expanded metrics

## - default value is false which is backward compatible value

## metricNameSeparator: separator between origin metric name and key of entry from map

## - only effective when expandMapType is set to true

# topology.metrics.consumer.register:

# - class: "org.apache.storm.metric.LoggingMetricsConsumer"

# max.retain.metric.tuples: 100

# parallelism.hint: 1

# - class: "org.mycompany.MyMetricsConsumer"

# max.retain.metric.tuples: 100

# whitelist:

# - "execute.*"

# - "^__complete-latency$"

# parallelism.hint: 1

# argument:

# - endpoint: "metrics-collector.mycompany.org"

# expandMapType: true

# metricNameSeparator: "."

## Cluster Metrics Consumers

# storm.cluster.metrics.consumer.register:

# - class: "org.apache.storm.metric.LoggingClusterMetricsConsumer"

# - class: "org.mycompany.MyMetricsConsumer"

# argument:

# - endpoint: "metrics-collector.mycompany.org"

#

# storm.cluster.metrics.consumer.publish.interval.secs: 60

# Event Logger

# topology.event.logger.register:

# - class: "org.apache.storm.metric.FileBasedEventLogger"

# - class: "org.mycompany.MyEventLogger"

# arguments:

# endpoint: "event-logger.mycompany.org"

# Metrics v2 configuration (optional)

#storm.metrics.reporters:

# # Graphite Reporter

# - class: "org.apache.storm.metrics2.reporters.GraphiteStormReporter"

# daemons:

# - "supervisor"

# - "nimbus"

# - "worker"

# report.period: 60

# report.period.units: "SECONDS"

# graphite.host: "localhost"

# graphite.port: 2003

#

# # Console Reporter

# - class: "org.apache.storm.metrics2.reporters.ConsoleStormReporter"

# daemons:

# - "worker"

# report.period: 10

# report.period.units: "SECONDS"

# filter:

# class: "org.apache.storm.metrics2.filters.RegexFilter"

# expression: ".*my_component.*emitted.*"

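(5) Create the local directory: mkdir -p /usr/local/java/apache-storm-1.2.2/logs

(6) Copy the apache-storm-1.2.2 directory to the other two machines, entering the password when prompted: scp -r apache-storm-1.2.2 root@<other-machine-IP>:/usr/local/java/

(7) Start Storm

# Start on 192.168.164.133 (hadoop1, the host listed in nimbus.seeds)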

[root@hadoop apache-storm-1.2.2]# cd bin/

[root@hadoop bin]# ./storm nimbus >/dev/null 2>&1 &

[root@hadoop apache-storm-1.2.2]# cd bin/

[root@hadoop bin]# ./storm ui &

Start the supervisors on the other two machines:

# Start on 192.168.164.134 and 192.168.164.135

[root@hadoop apache-storm-1.2.2]# cd bin/

[root@hadoop bin]# ./storm supervisor >/dev/null 2>&1 &

(8) Open the Storm UI at http://192.168.164.133:8080/
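Besides the web page, the Storm UI serves a REST API; according to the Storm 1.x UI REST API documentation, /api/v1/cluster/summary reports fields such as supervisors and slotsTotal (verify against your version). With two supervisors and four supervisor.slots.ports each, one would expect 2 and 8. The sketch below pulls a numeric field out of that JSON without jq; storm_summary_field is a hypothetical helper, and its grep/sed match is a crude assumption about the JSON layout, so prefer jq if it is installed.

```sh
# Hypothetical helper: read Storm UI JSON on stdin and print the first
# numeric value of field $1. A crude grep/sed match, not a real JSON parser.
storm_summary_field() {
  grep -oE "\"$1\"[[:space:]]*:[[:space:]]*[0-9]+" | head -n 1 | sed -E 's/.*:[[:space:]]*//'
}
```

Usage: curl -s http://192.168.164.133:8080/api/v1/cluster/summary | storm_summary_field supervisors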

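V. Notes for CentOS 7 virtual machines

(1) After editing /etc/hosts, restart networking: service network restart. The entries below are from hadoop1's own file: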

127.0.0.1 hadoop1

192.168.164.134 hadoop2

192.168.164.135 hadoop3
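On hadoop2 and hadoop3, hadoop1 has to resolve to 192.168.164.133, because those machines reach ZooKeeper, Kafka and Nimbus on hadoop1 by that name (storm.yaml, zookeeper.connect); using the LAN IPs on all three machines keeps the files identical. The sketch below appends a mapping only when the hostname is not yet present. ensure_host is a hypothetical helper, and it assumes the usual "IP name [aliases]" line format.

```sh
# Hypothetical helper: append "IP NAME" to a hosts-format file unless NAME
# already appears on a non-comment line. Needs root when FILE is /etc/hosts.
ensure_host() {
  local file="$1" ip="$2" name="$3"
  if ! awk -v n="$name" '
      /^[ \t]*#/ { next }    # ignore commented-out entries
      { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
      END { exit !found }' "$file"; then
    echo "$ip $name" >> "$file"
  fi
}
```

For example, ensure_host /etc/hosts 192.168.164.134 hadoop2 on each machine. It never rewrites an existing mapping, so a 127.0.0.1 hadoop1 line has to be changed by hand.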

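(2) Firewall configuration

1. Check the firewalld state with systemctl status firewalld; here it showed dead, meaning the firewall was not running.

2. Start it with systemctl start firewalld; no output means it started successfully.

3. Check again with systemctl status firewalld; running means the firewall is now active.

4. systemctl stop firewalld stops the firewall again, if you prefer to run without one; the firewall-cmd commands below only work while firewalld is running.

5. Open the following ports: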

firewall-cmd --zone=public --add-port=2888/tcp --permanent

firewall-cmd --zone=public --add-port=3888/tcp --permanent

firewall-cmd --zone=public --add-port=2181/tcp --permanent

firewall-cmd --zone=public --add-port=8080/tcp --permanent

firewall-cmd --zone=public --add-port=9092/tcp --permanent

6. firewall-cmd --reload reloads the firewall so the permanent rules take effect

7. firewall-cmd --list-all lists the open ports

8. sudo iptables -F flushes all iptables rules on the VM
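The port list above covers ZooKeeper (2181, 2888, 3888), Kafka (9092) and the Storm UI (8080), but not the ports Storm's daemons use among themselves. As an assumption based on Storm's defaults, Nimbus listens on 6627 (nimbus.thrift.port) and workers listen on the supervisor.slots.ports from storm.yaml (6700-6703), so supervisors and workers on other machines may not be able to connect while those stay closed. The sketch below only prints the commands so they can be reviewed first; CLUSTER_PORTS and firewall_commands are hypothetical names.

```sh
# Ports from the steps above (ZooKeeper 2181/2888/3888, Kafka 9092,
# Storm UI 8080) plus two assumptions: 6627 is Storm's default
# nimbus.thrift.port, 6700-6703 are the supervisor.slots.ports in storm.yaml.
CLUSTER_PORTS="2181 2888 3888 9092 8080 6627 6700-6703"

# Print (not run) one firewall-cmd line per port, followed by a reload.
firewall_commands() {
  local p
  for p in $CLUSTER_PORTS; do    # word splitting on spaces is intended
    echo "firewall-cmd --zone=public --add-port=${p}/tcp --permanent"
  done
  echo "firewall-cmd --reload"
}
```

Apply it on each machine while firewalld is running, e.g. firewall_commands | sudo sh, then confirm with firewall-cmd --list-all.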

(3) Installing the telnet command (the commands below use yum, i.e. CentOS)

yum list telnet* lists the telnet-related packages

yum install telnet-server installs the telnet server

yum install telnet.* installs the telnet client
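In a setup like this, telnet is typically used to check that a port such as 2181 or 9092 is reachable from another machine. If installing it is not an option, bash can do the same check on its own. A minimal sketch, assuming bash and coreutils timeout are available; port_open and check_cluster_ports are hypothetical helpers, and the host and port lists simply mirror the setup above.

```sh
# Hypothetical helper: succeed if HOST:PORT accepts a TCP connection.
# Uses bash's /dev/tcp redirection (so bash must be installed) and
# coreutils timeout, instead of an interactive telnet session.
port_open() {
  timeout 3 bash -c ": < /dev/tcp/$1/$2" 2>/dev/null
}

# Hypothetical example: report the ZooKeeper and Kafka ports on all three nodes.
check_cluster_ports() {
  local h p
  for h in hadoop1 hadoop2 hadoop3; do
    for p in 2181 9092; do
      if port_open "$h" "$p"; then echo "$h:$p open"; else echo "$h:$p closed"; fi
    done
  done
}
```

A closed port shows up as "closed" after at most three seconds, whereas telnet would wait for its own connection timeout.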