Copyright notice: this is an original article by the blogger and may not be reproduced without the blogger's permission. https://blog.csdn.net/u012292754/article/details/84777691

1 Introduction to Flume

1.1 Design goals

- Reliability
- Scalability
- Manageability

1.2 Similar products

- Flume: Cloudera/Apache, Java
- Scribe: Facebook, C/C++ (no longer maintained)
- Chukwa: Yahoo/Apache, Java
- Fluentd: Ruby
- Logstash: the "L" in the ELK stack (Elasticsearch, Logstash, Kibana)

1.3 History of Flume

- Cloudera, 0.9.2: Flume-OG (the original generation)
- Apache, FLUME-728: Flume-NG (the next-generation rewrite)

1.4 Event

An Event is the basic unit of data that Flume transports:

Event = optional headers + byte array (the body)
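To make the header/body split concrete, here is a minimal Python model of an event. It is only an illustration: in Flume itself an event is the Java interface org.apache.flume.Event (getHeaders() returns a Map<String, String>, getBody() a byte[]), and the Event class and from_line helper below are hypothetical names chosen for this sketch.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Event:
    """Illustrative Flume-style event: an opaque byte body plus optional string headers."""

    body: bytes
    headers: dict[str, str] = field(default_factory=dict)  # optional, may stay empty


def from_line(line: str, **headers: str) -> Event:
    """Hypothetical helper: wrap one text line as an event body, attaching any headers given."""
    return Event(body=line.encode("utf-8"), headers=dict(headers))


if __name__ == "__main__":
    evt = from_line("hello flume", host="node1")
    print(evt.headers, evt.body)  # {'host': 'node1'} b'hello flume'
```

Flume does not interpret the body; the headers are where routing or metadata such as a hostname or timestamp can be attached.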

1.5 Flume architecture and core components

- Source: collects data
- Channel: aggregates (buffers) the collected data
- Sink: outputs the data to its destination
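The three roles can be sketched as a toy pipeline in Python. This is a simplification under stated assumptions, not Flume's implementation: MemoryChannel is a hypothetical bounded queue whose capacity loosely plays the role of the memory channel's capacity property, and the sink drains it in batches of at most batch events, loosely like transactionCapacity. Real Flume runs sources and sinks concurrently and wraps every put and take in a channel transaction.

```python
from __future__ import annotations

from collections import deque


class MemoryChannel:
    """Toy bounded buffer standing in for a memory channel (hypothetical, not Flume code)."""

    def __init__(self, capacity: int) -> None:
        self.capacity = capacity
        self.queue: deque[bytes] = deque()

    def put(self, event: bytes) -> bool:
        """Accept an event unless the channel is full; a real source would back off and retry."""
        if len(self.queue) >= self.capacity:
            return False
        self.queue.append(event)
        return True

    def take(self, max_batch: int) -> list[bytes]:
        """Remove up to max_batch events, oldest first."""
        batch = []
        while self.queue and len(batch) < max_batch:
            batch.append(self.queue.popleft())
        return batch


def run(lines: list[str], capacity: int = 3, batch: int = 2) -> tuple[list[list[bytes]], list[str]]:
    """Source puts every line, then the sink drains the channel batch by batch."""
    channel = MemoryChannel(capacity)
    rejected = [line for line in lines if not channel.put(line.encode("utf-8"))]  # source side
    delivered = []
    while True:  # sink side
        chunk = channel.take(batch)
        if not chunk:
            break
        delivered.append(chunk)
    return delivered, rejected
```

For example, run(["a", "b", "c", "d"]) returns ([[b'a', b'b'], [b'c']], ['d']): in this sequential sketch the fourth event is refused because the channel already holds three events, which is the situation a channel's capacity setting guards against.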

2 Deploying Flume

2.1 Configure the JDK

2.2 Download Flume

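Download flume-ng-1.6.0-cdh5.7.0.tar.gz from http://archive-primary.cloudera.com/cdh5/cdh/5/ into /home/hadoop, then extract it into /home/hadoop/apps: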

    [hadoop@node1 ~]$ ll
    total 66760
    drwxrwxr-x. 15 hadoop hadoop 4096 Nov 1 08:52 apps
    drwxrwxr-x. 4 hadoop hadoop 30 Oct 25 21:59 elasticsearchData
    -rw-r--r--. 1 hadoop hadoop 67321333 Dec 3 19:37 flume-ng-1.6.0-cdh5.7.0.tar.gz
    drwxrwxr-x. 4 hadoop hadoop 28 Sep 14 19:02 hbase
    drwxrwxr-x. 4 hadoop hadoop 32 Sep 14 14:44 hdfsdir
    drwxrwxrwx. 3 hadoop hadoop 26 Oct 30 16:53 hdp2.6-cdh5.7-data
    drwxrwxrwx. 3 hadoop hadoop 18 Oct 24 21:45 kafkaData
    drwxrwxr-x. 5 hadoop hadoop 133 Oct 23 14:40 metastore_db
    -rw-r--r--. 1 hadoop hadoop 999635 Aug 29 2017 mysql-connector-java-5.1.44-bin.jar
    drwxr-xr-x. 30 hadoop hadoop 4096 Dec 2 18:40 spark-2.2.0
    drwxrwxr-x. 3 hadoop hadoop 63 Oct 24 21:21 zookeeperData
    -rw-rw-r--. 1 hadoop hadoop 26108 Oct 25 17:54 zookeeper.out
    [hadoop@node1 ~]$ pwd
    /home/hadoop
    [hadoop@node1 ~]$ tar -zxvf flume-ng-1.6.0-cdh5.7.0.tar.gz -C /home/hadoop/apps

2.3 Add Flume to the environment variables

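Edit /etc/profile (this needs root privileges) and append:

    export FLUME_HOME=/home/hadoop/apps/apache-flume-1.6.0-cdh5.7.0-bin
    export PATH=$PATH:$FLUME_HOME/bin

Then reload it with source /etc/profile (or log in again) so that flume-ng is on the PATH.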

2.4 Configure flume-env.sh

    [hadoop@node1 ~]$ cd $FLUME_HOME
    [hadoop@node1 apache-flume-1.6.0-cdh5.7.0-bin]$ cd conf
    [hadoop@node1 conf]$ ll
    total 16
    -rw-r--r--. 1 hadoop hadoop 1661 Mar 24 2016 flume-conf.properties.template
    -rw-r--r--. 1 hadoop hadoop 1110 Mar 24 2016 flume-env.ps1.template
    -rw-r--r--. 1 hadoop hadoop 1214 Mar 24 2016 flume-env.sh.template
    -rw-r--r--. 1 hadoop hadoop 3107 Mar 24 2016 log4j.properties
    [hadoop@node1 conf]$ cp flume-env.sh.template flume-env.sh
    [hadoop@node1 conf]$ ll
    total 20
    -rw-r--r--. 1 hadoop hadoop 1661 Mar 24 2016 flume-conf.properties.template
    -rw-r--r--. 1 hadoop hadoop 1110 Mar 24 2016 flume-env.ps1.template
    -rw-r--r--. 1 hadoop hadoop 1214 Dec 3 19:48 flume-env.sh
    -rw-r--r--. 1 hadoop hadoop 1214 Mar 24 2016 flume-env.sh.template
    -rw-r--r--. 1 hadoop hadoop 3107 Mar 24 2016 log4j.properties
    [hadoop@node1 conf]$

Then edit flume-env.sh and set JAVA_HOME in it to the JDK configured in step 2.1.

3 Flume examples

3.1 Collect data from a network port and print it to the console

- Write the configuration file, based on the example in the Flume User Guide: https://flume.apache.org/FlumeUserGuide.html

    [hadoop@node1 conf]$ pwd
    /home/hadoop/apps/apache-flume-1.6.0-cdh5.7.0-bin/conf
    [hadoop@node1 conf]$ mkdir myconf
    [hadoop@node1 conf]$ cd myconf/
    [hadoop@node1 myconf]$ vim logger.conf

Contents of logger.conf:

    # example.conf: A single-node Flume configuration

    # Name the components on this agent
    a1.sources = r1
    a1.sinks = k1
    a1.channels = c1

    # Describe/configure the source
    a1.sources.r1.type = netcat
    a1.sources.r1.bind = node1
    a1.sources.r1.port = 44444

    # Describe the sink
    a1.sinks.k1.type = logger

    # Use a channel which buffers events in memory
    a1.channels.c1.type = memory
    a1.channels.c1.capacity = 1000
    a1.channels.c1.transactionCapacity = 100

    # Bind the source and sink to the channel
    a1.sources.r1.channels = c1
    a1.sinks.k1.channel = c1
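The wiring rules in logger.conf (a source lists its channels under the plural key channels, a sink names exactly one channel under the singular key channel, and every name must be declared under a1.sources, a1.sinks or a1.channels) can be checked mechanically. The sketch below uses hypothetical helpers, not a Flume tool, and its parser only handles simple key = value lines rather than the full Java properties syntax.

```python
from __future__ import annotations

# The wiring-related lines of logger.conf, copied from the file above.
LOGGER_CONF = """
# Name the components on this agent
a1.sources = r1
a1.sinks = k1
a1.channels = c1
a1.sources.r1.type = netcat
a1.sinks.k1.type = logger
a1.channels.c1.type = memory
# Bind the source and sink to the channel
a1.sources.r1.channels = c1
a1.sinks.k1.channel = c1
"""


def parse_properties(text: str) -> dict[str, str]:
    """Parse simple 'key = value' lines; blank lines and '#' comments are skipped."""
    props: dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        key, sep, value = line.partition("=")
        if sep:
            props[key.strip()] = value.strip()
    return props


def check_agent(props: dict[str, str], agent: str) -> list[str]:
    """Return wiring problems for `agent`; an empty list means the bindings look consistent."""
    declared = {kind: props.get(f"{agent}.{kind}", "").split() for kind in ("sources", "sinks", "channels")}
    channels = set(declared["channels"])
    problems: list[str] = []
    for src in declared["sources"]:
        # A source may feed several channels, listed space-separated under ".channels".
        bound = props.get(f"{agent}.sources.{src}.channels", "").split()
        if not bound:
            problems.append(f"source {src} is not bound to any channel")
        problems += [f"source {src} uses undeclared channel {ch}" for ch in bound if ch not in channels]
    for sink in declared["sinks"]:
        # A sink drains exactly one channel, named under the singular ".channel".
        ch = props.get(f"{agent}.sinks.{sink}.channel")
        if ch is None:
            problems.append(f"sink {sink} is not bound to a channel")
        elif ch not in channels:
            problems.append(f"sink {sink} uses undeclared channel {ch}")
    return problems


if __name__ == "__main__":
    print(check_agent(parse_properties(LOGGER_CONF), "a1"))  # []
```

The agent name passed to check_agent plays the same role as --name on the flume-ng command line: only keys with that prefix belong to the agent.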

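- Start the agent. --name must match the prefix used in the configuration file (a1), --conf is the Flume configuration directory, --conf-file is the agent's configuration file, and -Dflume.root.logger=INFO,console prints the log, including the events written by the logger sink, to the console:

    flume-ng agent --name a1 --conf $FLUME_HOME/conf --conf-file $FLUME_HOME/conf/myconf/logger.conf -Dflume.root.logger=INFO,console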

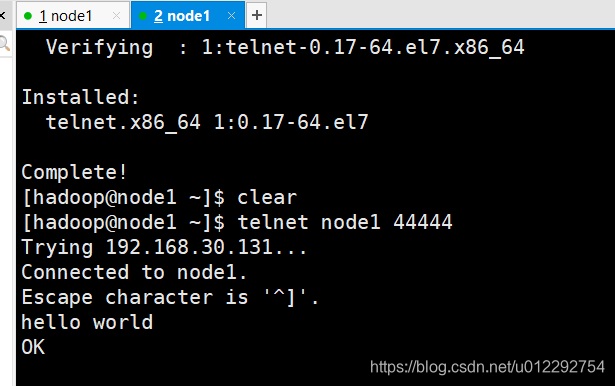

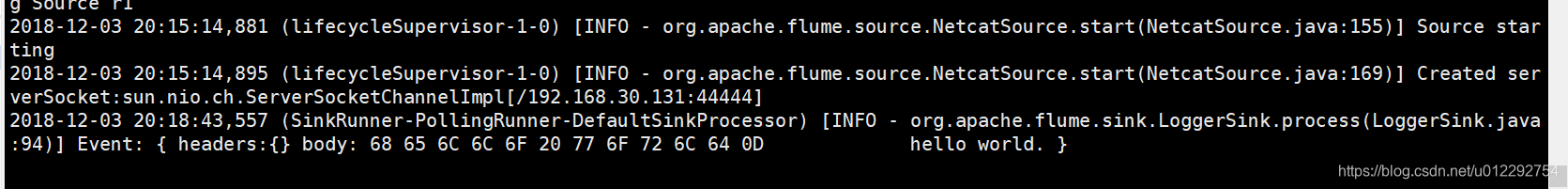

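- Test: connect to port 44444 on node1 (for example with telnet node1 44444) and type a few lines; each line becomes an event and is printed by the logger sink in the agent's console.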

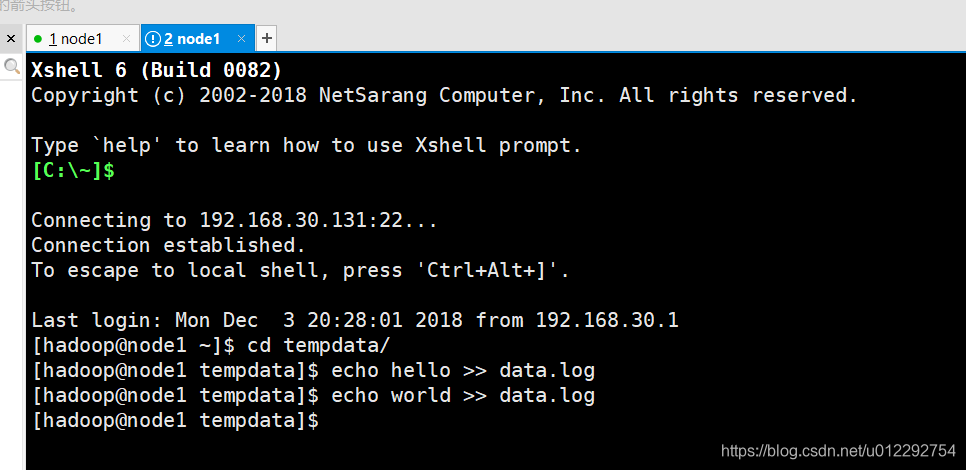
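The test can also be scripted rather than typed by hand. The sketch below assumes the netcat source's default behaviour of acknowledging every received line with "OK" (its ack-every-event setting); send_lines is a hypothetical helper, and node1:44444 comes from logger.conf.

```python
from __future__ import annotations

import socket


def send_lines(host: str, port: int, lines: list[str], timeout: float = 5.0) -> list[str]:
    """Send newline-terminated lines to a netcat-style source and collect one reply per line.

    Assumes the source answers each received line with a single acknowledgement line.
    """
    replies = []
    with socket.create_connection((host, port), timeout=timeout) as sock:
        with sock.makefile("r", encoding="utf-8", newline="\n") as reader:
            for line in lines:
                # The netcat source turns each newline-terminated line into one event.
                sock.sendall((line + "\n").encode("utf-8"))
                # Wait for the acknowledgement before sending the next line.
                replies.append(reader.readline().rstrip("\n"))
    return replies
```

Against the agent above, send_lines("node1", 44444, ["hello", "flume"]) should return ["OK", "OK"] while the two lines appear as events in the agent's console.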

3.2 Monitor a file and print newly appended data to the console in real time

- Agent selection: exec source + memory channel + logger sink
- Create the configuration file:

    [hadoop@node1 myconf]$ vim exec-memory-logger.conf

Contents of exec-memory-logger.conf:

    # example.conf: A single-node Flume configuration

    # Name the components on this agent
    a1.sources = r1
    a1.sinks = k1
    a1.channels = c1

    # Describe/configure the source
    a1.sources.r1.type = exec
    a1.sources.r1.command = tail -F /home/hadoop/tempdata/data.log
    a1.sources.r1.shell = /bin/sh -c

    # Describe the sink
    a1.sinks.k1.type = logger

    # Use a channel which buffers events in memory
    a1.channels.c1.type = memory
    a1.channels.c1.capacity = 1000
    a1.channels.c1.transactionCapacity = 100

    # Bind the source and sink to the channel
    a1.sources.r1.channels = c1
    a1.sinks.k1.channel = c1

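- Start the agent:

    flume-ng agent --name a1 --conf $FLUME_HOME/conf --conf-file $FLUME_HOME/conf/myconf/exec-memory-logger.conf -Dflume.root.logger=INFO,console

Then append lines to /home/hadoop/tempdata/data.log (for example echo hello >> /home/hadoop/tempdata/data.log); each new line shows up in the console as an event.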

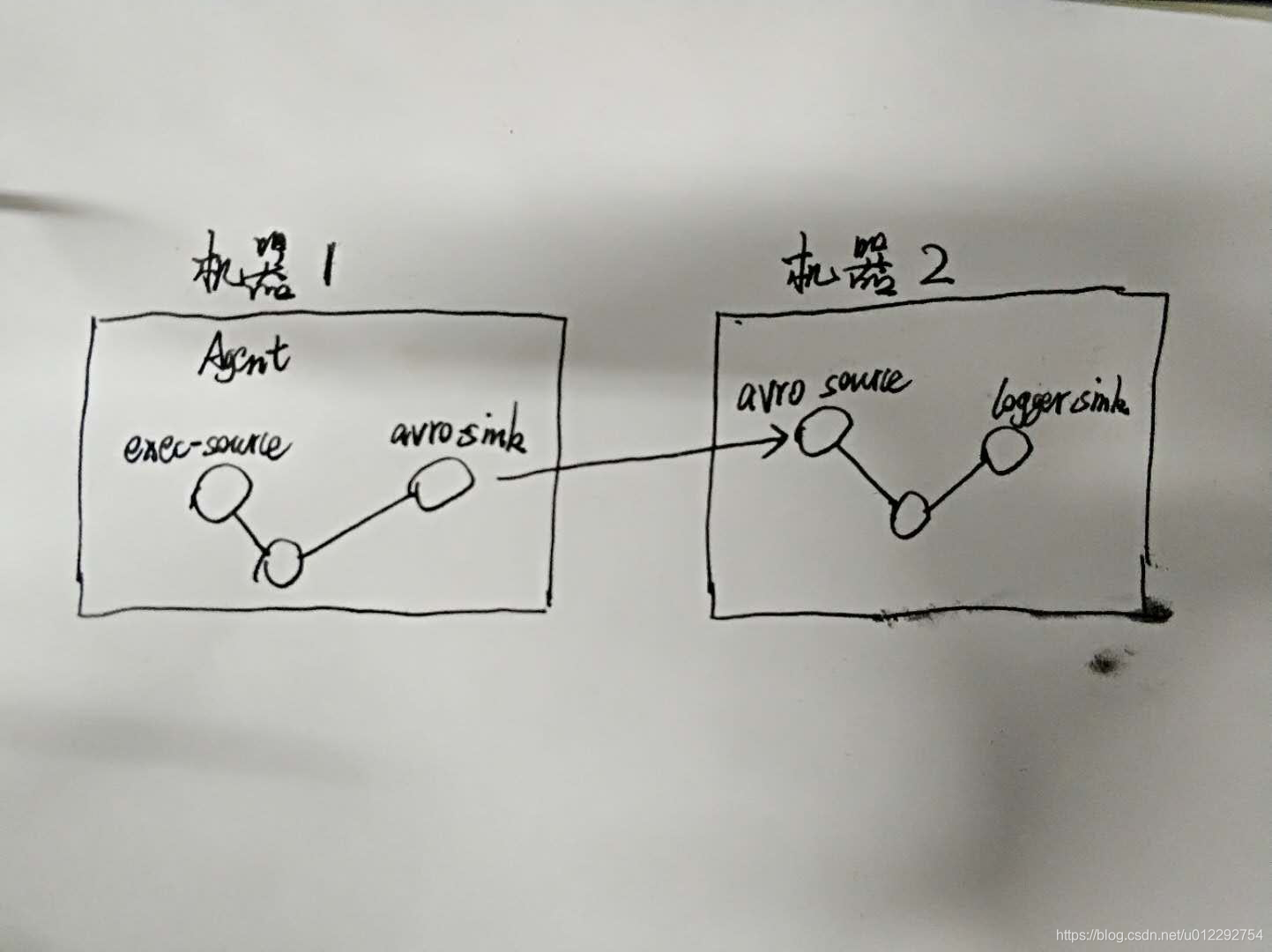
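What the exec source receives from tail -F is only data appended after the current end of the file, and tail -F keeps following the file by name if it is rotated. A rough Python analogue of that bookkeeping is sketched below; read_new_lines is a hypothetical helper that remembers a byte offset, returns only complete new lines, and starts over from the beginning if the file becomes shorter. One real difference worth knowing: tail -F first prints the last 10 lines already in the file, so those lines are sent again every time the agent starts.

```python
from __future__ import annotations

import os


def read_new_lines(path: str, offset: int) -> tuple[list[str], int]:
    """Return complete lines appended to `path` since byte `offset`, plus the new offset."""
    if not os.path.exists(path):
        return [], 0  # file not there (yet), like tail -F waiting for it to appear
    if os.path.getsize(path) < offset:
        offset = 0  # file was truncated or replaced: read it from the start
    with open(path, "rb") as f:
        f.seek(offset)
        data = f.read()
    # Only consume up to the last newline; a trailing partial line waits for the next call.
    end = data.rfind(b"\n") + 1
    lines = [chunk.decode("utf-8") for chunk in data[:end].splitlines()]
    return lines, offset + end
```

Calling it in a loop with the returned offset gives a polling version of following the file; each returned line corresponds to one event the exec source would create.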

3.3 Collect logs from server A to server B in real time

3.3.1 Component selection

- First agent (server A): exec source + memory channel + avro sink
- Second agent (server B): avro source + memory channel + logger sink

3.3.2 Configuration files

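exec-memory-avro.conf (the agent on server A; in this test both agents run on node1, so its avro sink sends to node1:44444, where the other agent's avro source listens):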

    # Name the components on this agent
    exec-memory-avro.sources = exec-source
    exec-memory-avro.sinks = avro-sink
    exec-memory-avro.channels = memory-channel

    # Describe/configure the source
    exec-memory-avro.sources.exec-source.type = exec
    exec-memory-avro.sources.exec-source.command = tail -F /home/hadoop/tempdata/data.log
    exec-memory-avro.sources.exec-source.shell = /bin/sh -c

    # Describe the sink
    exec-memory-avro.sinks.avro-sink.type = avro
    exec-memory-avro.sinks.avro-sink.hostname = node1
    exec-memory-avro.sinks.avro-sink.port = 44444

    # Use a channel which buffers events in memory
    exec-memory-avro.channels.memory-channel.type = memory

    # Bind the source and sink to the channel
    exec-memory-avro.sources.exec-source.channels = memory-channel
    exec-memory-avro.sinks.avro-sink.channel = memory-channel

avro-memory-logger.conf (the agent on server B):

    # Name the components on this agent
    avro-memory-logger.sources = avro-source
    avro-memory-logger.sinks = logger-sink
    avro-memory-logger.channels = memory-channel

    # Describe/configure the source
    avro-memory-logger.sources.avro-source.type = avro
    avro-memory-logger.sources.avro-source.bind = node1
    avro-memory-logger.sources.avro-source.port = 44444

    # Describe the sink
    avro-memory-logger.sinks.logger-sink.type = logger

    # Use a channel which buffers events in memory
    avro-memory-logger.channels.memory-channel.type = memory

    # Bind the source and sink to the channel
    avro-memory-logger.sources.avro-source.channels = memory-channel
    avro-memory-logger.sinks.logger-sink.channel = memory-channel
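The two files only work together because the avro sink of exec-memory-avro points at the host and port on which the avro source of avro-memory-logger listens (node1:44444). A hypothetical checker for that pairing is sketched below; it compares the strings literally, so a source bound to 0.0.0.0, or to an IP address that resolves to the sink's hostname, would need extra handling that the sketch omits.

```python
from __future__ import annotations

# The relevant lines of exec-memory-avro.conf and avro-memory-logger.conf.
SENDER_CONF = """
exec-memory-avro.sinks.avro-sink.type = avro
exec-memory-avro.sinks.avro-sink.hostname = node1
exec-memory-avro.sinks.avro-sink.port = 44444
"""

RECEIVER_CONF = """
avro-memory-logger.sources.avro-source.type = avro
avro-memory-logger.sources.avro-source.bind = node1
avro-memory-logger.sources.avro-source.port = 44444
"""


def parse_properties(text: str) -> dict[str, str]:
    """Parse simple 'key = value' lines, skipping blanks and '#' comments."""
    props: dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if line and not line.startswith("#") and "=" in line:
            key, _, value = line.partition("=")
            props[key.strip()] = value.strip()
    return props


def avro_link_ok(sender: dict[str, str], sink_prefix: str,
                 receiver: dict[str, str], source_prefix: str) -> bool:
    """True if the avro sink's hostname/port literally match the avro source's bind/port."""
    return (
        sender.get(f"{sink_prefix}.type") == "avro"
        and receiver.get(f"{source_prefix}.type") == "avro"
        and sender.get(f"{sink_prefix}.hostname") == receiver.get(f"{source_prefix}.bind")
        and sender.get(f"{sink_prefix}.port") == receiver.get(f"{source_prefix}.port")
    )


if __name__ == "__main__":
    ok = avro_link_ok(parse_properties(SENDER_CONF), "exec-memory-avro.sinks.avro-sink",
                      parse_properties(RECEIVER_CONF), "avro-memory-logger.sources.avro-source")
    print(ok)  # True
```

For a real A to B deployment, the sink's hostname would be server B's address while the source on B binds locally, which is exactly where the literal comparison stops being enough.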

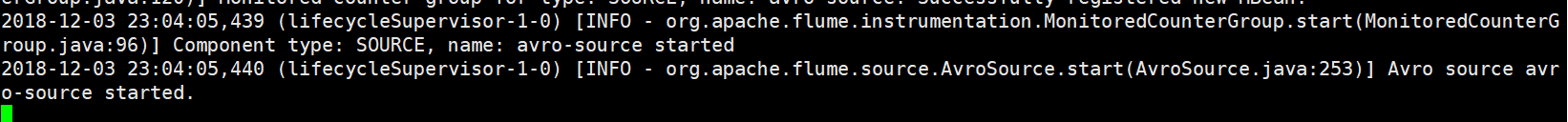

3.3.3 Start the agents

Start avro-memory-logger first, since its avro source is the side that listens on node1:44444:

    flume-ng agent --name avro-memory-logger --conf $FLUME_HOME/conf --conf-file $FLUME_HOME/conf/myconf/avro-memory-logger.conf -Dflume.root.logger=INFO,console

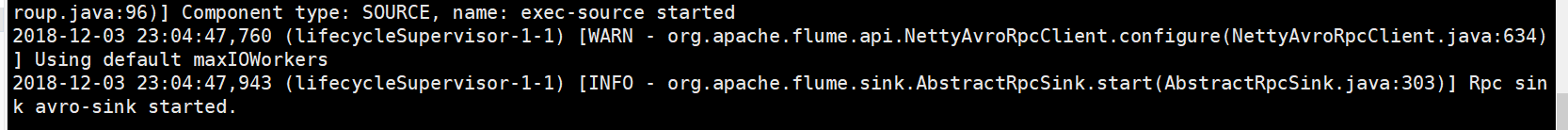

Then start exec-memory-avro, whose avro sink connects to that port:

    flume-ng agent --name exec-memory-avro --conf $FLUME_HOME/conf --conf-file $FLUME_HOME/conf/myconf/exec-memory-avro.conf -Dflume.root.logger=INFO,console

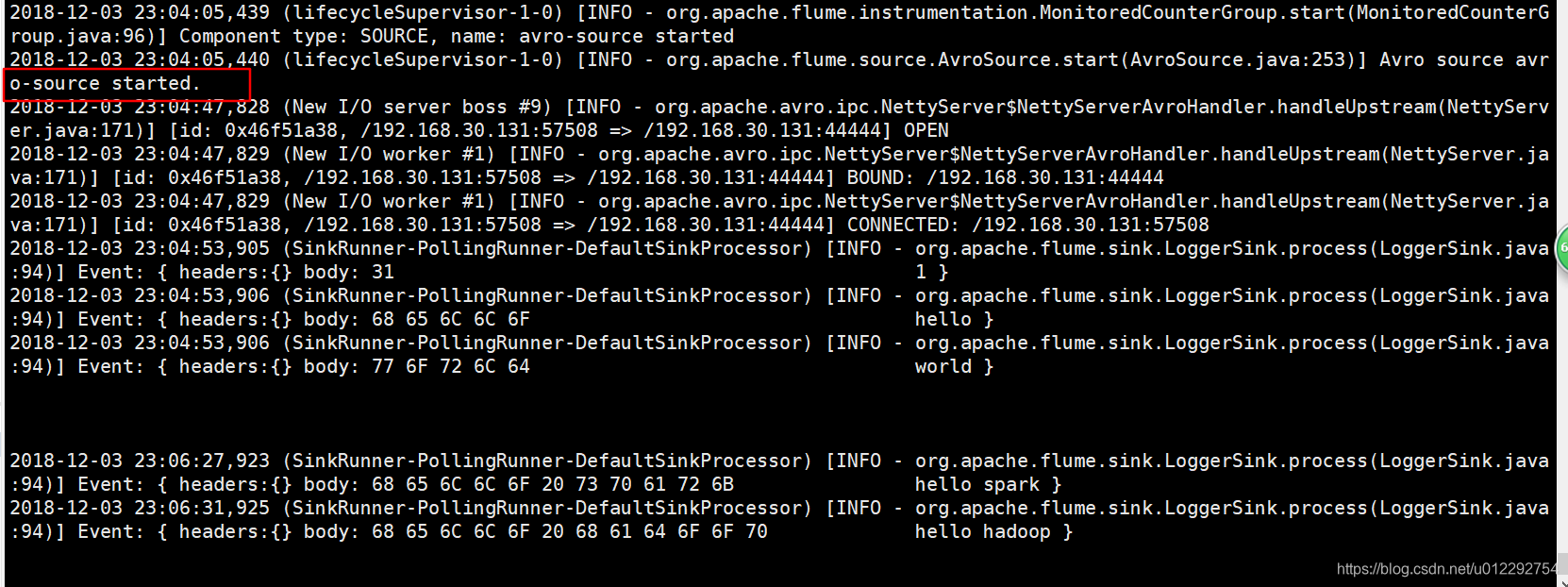
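The start order matters because avro-memory-logger's avro source is the server side: exec-memory-avro's avro sink connects to node1:44444, so the downstream agent should already be listening. When both agents are launched from a script, a small readiness check such as the hypothetical wait_for_port below can replace starting them by hand. It only tests that a TCP connection succeeds, not that Flume is fully initialised, and the short probe connection may show up in the avro source's log.

```python
import socket
import time


def wait_for_port(host: str, port: int, timeout: float = 30.0, interval: float = 0.5) -> bool:
    """Poll until a TCP connection to host:port succeeds; give up after `timeout` seconds."""
    deadline = time.monotonic() + timeout
    while True:
        try:
            with socket.create_connection((host, port), timeout=interval):
                return True  # something is listening; the probe socket is closed immediately
        except OSError:
            if time.monotonic() >= deadline:
                return False
            time.sleep(interval)
```

A launcher would start avro-memory-logger, call wait_for_port("node1", 44444), and only then start exec-memory-avro.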

3.3.4 Result