vv

package com.liming.flux;

import java.util.UUID;

import backtype.storm.Config;

import backtype.storm.LocalCluster;

import backtype.storm.generated.StormTopology;

import backtype.storm.spout.SchemeAsMultiScheme;

import backtype.storm.topology.TopologyBuilder;

import backtype.storm.utils.Utils;

import storm.kafka.BrokerHosts;

import storm.kafka.KafkaSpout;

import storm.kafka.SpoutConfig;

import storm.kafka.StringScheme;

import storm.kafka.ZkHosts;

public class FluxTopology {

public static void main(String[] args) {

// ID of the spout; must be unique

String KAFKA_SPOUT_ID = "flux_spout";

// Kafka topic to consume

String CONSUME_TOPIC = "flux_topic";

// ZooKeeper address to connect to

String ZK_HOSTS = "192.168.239.129:2181";

// Configure the connection parameters

BrokerHosts hosts = new ZkHosts(ZK_HOSTS);

SpoutConfig spoutConfig = new SpoutConfig(hosts, CONSUME_TOPIC, "/" + CONSUME_TOPIC, UUID.randomUUID().toString());

spoutConfig.scheme = new SchemeAsMultiScheme(new StringScheme());

// Read data from Kafka and emit it

KafkaSpout kafkaSpout = new KafkaSpout(spoutConfig);

// Create the TopologyBuilder instance

TopologyBuilder builder = new TopologyBuilder();

builder.setSpout(KAFKA_SPOUT_ID, kafkaSpout);

// Clean the data

builder.setBolt("ClearBolt", new ClearBolt()).shuffleGrouping(KAFKA_SPOUT_ID);

// Compute PV

builder.setBolt("PvBolt", new PvBolt()).shuffleGrouping("ClearBolt");

// Compute UV

builder.setBolt("UvBolt", new UvBolt()).shuffleGrouping("PvBolt");

// Compute VV

builder.setBolt("VvBolt", new VvBolt()).shuffleGrouping("UvBolt");

builder.setBolt("PrintBolt", new PrintBolt()).shuffleGrouping("VvBolt");

builder.setBolt("ToHbaseBolt", new ToHbaseBolt()).shuffleGrouping("VvBolt");

StormTopology topology = builder.createTopology();

// Submit the topology to the cluster

Config conf = new Config();

LocalCluster cluster = new LocalCluster();

cluster.submitTopology("MyTopology", conf, topology);

// Let the topology run for 1000 seconds (Utils.sleep takes milliseconds), then kill it and shut down the cluster

Utils.sleep(1000 * 1000);

cluster.killTopology("MyTopology");

cluster.shutdown();

}

}

package com.liming.flux;

import java.util.List;

import java.util.Map;

import com.liming.flux.dao.HBaseDao;

import com.liming.flux.domain.FluxInfo;

import backtype.storm.task.OutputCollector;

import backtype.storm.task.TopologyContext;

import backtype.storm.topology.OutputFieldsDeclarer;

import backtype.storm.topology.base.BaseRichBolt;

import backtype.storm.tuple.Fields;

import backtype.storm.tuple.Tuple;

import backtype.storm.tuple.Values;

public class VvBolt extends BaseRichBolt {

OutputCollector collector;

@Override

public void prepare(Map map, TopologyContext topology, OutputCollector collector) {

this.collector = collector;

}

@Override

public void execute(Tuple input) {

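// Copy the values so the input tuple itself is never mutated

List<Object> values = new java.util.ArrayList<Object>(input.getValues());

// If this ss_id has not appeared in any other record today, emit 1; otherwise emit 0

List<FluxInfo> list = HBaseDao.queryData("^"+input.getStringByField("time")+"_[^_]*_"+input.getStringByField("ss_id")+"_.*$");

values.add(list.size() == 0 ? 1 : 0);

collector.emit(new Values(values.toArray()));

// BaseRichBolt does not ack automatically, so acknowledge the input explicitly

collector.ack(input);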

}

@Override

public void declareOutputFields(OutputFieldsDeclarer declarer) {

declarer.declare(new Fields("time","uv_id","ss_id","ss_time","urlname","cip","pv","uv","vv"));

}

}
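To see what the expression built in VvBolt actually matches, the following minimal sketch runs the same pattern against a few row keys in plain Java. The row-key layout `time_uvid_ssid_cip_suffix` is an assumption inferred from the expressions used in this article, and the sample keys, the class name `VvRowKeyRegexSketch`, and its helper methods are hypothetical; HBaseDao itself is not shown here.

```java
import java.util.Arrays;
import java.util.List;
import java.util.regex.Pattern;

public class VvRowKeyRegexSketch {

    // Builds the same expression that VvBolt passes to HBaseDao.queryData.
    static String vvRegex(String time, String ssId) {
        return "^" + time + "_[^_]*_" + ssId + "_.*$";
    }

    // Counts the row keys that match; VvBolt emits vv = 1 only when this count is 0.
    static int countMatches(List<String> rowKeys, String regex) {
        Pattern p = Pattern.compile(regex);
        int n = 0;
        for (String key : rowKeys) {
            if (p.matcher(key).matches()) {
                n++;
            }
        }
        return n;
    }

    public static void main(String[] args) {
        // Hypothetical row keys in the assumed layout time_uvid_ssid_cip_suffix.
        List<String> stored = Arrays.asList(
                "2024-01-01_u1_s1_10.0.0.1_0001",
                "2024-01-01_u2_s2_10.0.0.2_0002");
        // Session s1 already has a record today, so vv would be 0.
        System.out.println(countMatches(stored, vvRegex("2024-01-01", "s1")));
        // Session s3 has no record yet, so vv would be 1.
        System.out.println(countMatches(stored, vvRegex("2024-01-01", "s3")));
    }
}
```

The `[^_]*` segment skips exactly one underscore-delimited field (the uv_id), which is why the pattern only works if no field value contains an underscore.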

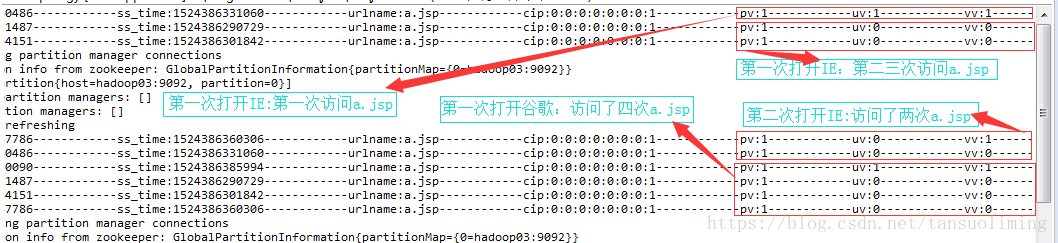

The test results are as follows:

newip

package com.liming.flux;

import java.util.UUID;

import backtype.storm.Config;

import backtype.storm.LocalCluster;

import backtype.storm.generated.StormTopology;

import backtype.storm.spout.SchemeAsMultiScheme;

import backtype.storm.topology.TopologyBuilder;

import backtype.storm.utils.Utils;

import storm.kafka.BrokerHosts;

import storm.kafka.KafkaSpout;

import storm.kafka.SpoutConfig;

import storm.kafka.StringScheme;

import storm.kafka.ZkHosts;

public class FluxTopology {

public static void main(String[] args) {

// ID of the spout; must be unique

String KAFKA_SPOUT_ID = "flux_spout";

// Kafka topic to consume

String CONSUME_TOPIC = "flux_topic";

// ZooKeeper address to connect to

String ZK_HOSTS = "192.168.239.129:2181";

// Configure the connection parameters

BrokerHosts hosts = new ZkHosts(ZK_HOSTS);

SpoutConfig spoutConfig = new SpoutConfig(hosts, CONSUME_TOPIC, "/" + CONSUME_TOPIC, UUID.randomUUID().toString());

spoutConfig.scheme = new SchemeAsMultiScheme(new StringScheme());

// Read data from Kafka and emit it

KafkaSpout kafkaSpout = new KafkaSpout(spoutConfig);

// Create the TopologyBuilder instance

TopologyBuilder builder = new TopologyBuilder();

builder.setSpout(KAFKA_SPOUT_ID, kafkaSpout);

// Clean the data

builder.setBolt("ClearBolt", new ClearBolt()).shuffleGrouping(KAFKA_SPOUT_ID);

// Compute PV

builder.setBolt("PvBolt", new PvBolt()).shuffleGrouping("ClearBolt");

// Compute UV

builder.setBolt("UvBolt", new UvBolt()).shuffleGrouping("PvBolt");

// Compute VV

builder.setBolt("VvBolt", new VvBolt()).shuffleGrouping("UvBolt");

// Compute newip

builder.setBolt("NewipBolt", new NewipBolt()).shuffleGrouping("VvBolt");

builder.setBolt("PrintBolt", new PrintBolt()).shuffleGrouping("NewipBolt");

builder.setBolt("ToHbaseBolt", new ToHbaseBolt()).shuffleGrouping("NewipBolt");

StormTopology topology = builder.createTopology();

// Submit the topology to the cluster

Config conf = new Config();

LocalCluster cluster = new LocalCluster();

cluster.submitTopology("MyTopology", conf, topology);

// Let the topology run for 1000 seconds (Utils.sleep takes milliseconds), then kill it and shut down the cluster

Utils.sleep(1000 * 1000);

cluster.killTopology("MyTopology");

cluster.shutdown();

}

}

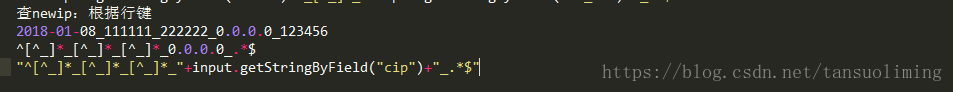

The intermediate data is looked up as follows:

package com.liming.flux;

import java.util.List;

import java.util.Map;

import com.liming.flux.dao.HBaseDao;

import com.liming.flux.domain.FluxInfo;

import backtype.storm.task.OutputCollector;

import backtype.storm.task.TopologyContext;

import backtype.storm.topology.OutputFieldsDeclarer;

import backtype.storm.topology.base.BaseRichBolt;

import backtype.storm.tuple.Fields;

import backtype.storm.tuple.Tuple;

import backtype.storm.tuple.Values;

public class NewipBolt extends BaseRichBolt {

private OutputCollector collector;

@Override

public void prepare(Map map, TopologyContext context, OutputCollector collector) {

this.collector = collector;

}

@Override

public void execute(Tuple input) {

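// Copy the values so the input tuple itself is never mutated

List<Object> values = new java.util.ArrayList<Object>(input.getValues());

// If this cip has not appeared in any earlier record, emit 1; otherwise emit 0

List<FluxInfo> list = HBaseDao.queryData("^[^_]*_[^_]*_[^_]*_"+input.getStringByField("cip")+"_.*$");

values.add(list.size() == 0 ? 1 : 0);

collector.emit(new Values(values.toArray()));

// BaseRichBolt does not ack automatically, so acknowledge the input explicitly

collector.ack(input);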

}

@Override

public void declareOutputFields(OutputFieldsDeclarer declarer) {

declarer.declare(new Fields("time","uv_id","ss_id","ss_time","urlname","cip","pv","uv","vv","newip"));

}

}
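One detail of the expression in NewipBolt is worth checking: the client IP is concatenated into the pattern verbatim, so each `.` in it matches any character. The sketch below shows the effect in plain Java and one possible remedy, `Pattern.quote`. It assumes that `HBaseDao.queryData` (not shown in this article) evaluates the expression with Java regex semantics; the sample row key and the class `NewipRegexSketch` are hypothetical.

```java
import java.util.regex.Pattern;

public class NewipRegexSketch {

    // Same shape as the expression in NewipBolt, with the IP inserted verbatim.
    static String rawRegex(String cip) {
        return "^[^_]*_[^_]*_[^_]*_" + cip + "_.*$";
    }

    // Variant that wraps the IP in \Q...\E so that "." only matches a literal dot.
    static String quotedRegex(String cip) {
        return "^[^_]*_[^_]*_[^_]*_" + Pattern.quote(cip) + "_.*$";
    }

    public static void main(String[] args) {
        // Hypothetical row key stored for a different client, 1.213.4 instead of 1.2.3.4.
        String otherClient = "2024-01-01_u1_s1_1.213.4_0001";
        // true: the unescaped dots in "1.2.3.4" match '.', '1' and '.'
        System.out.println(otherClient.matches(rawRegex("1.2.3.4")));
        // false: the quoted IP must appear literally
        System.out.println(otherClient.matches(quotedRegex("1.2.3.4")));
    }
}
```

With the raw expression, a first visit from 1.2.3.4 could be reported as newip = 0 because a record from another address already matches.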

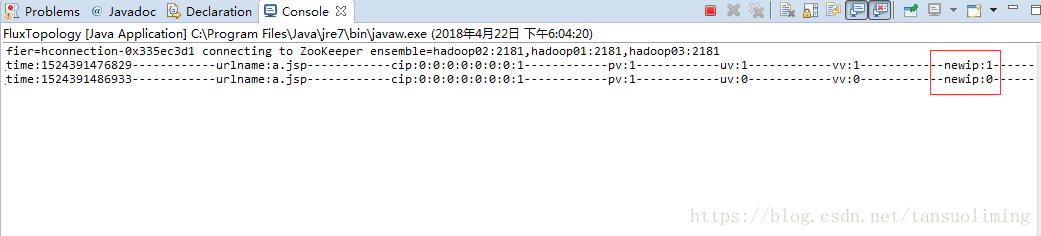

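The test results are as follows: on the first visit newip is 1, and on the second visit newip is 0, because both visits come from the same IP.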
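The 1-then-0 behaviour is a "first seen" flag: a value yields 1 the first time it appears in the history and 0 afterwards. A minimal in-memory sketch of that rule follows. The `HashSet` only stands in for the HBase history, and the class `FirstSeenSketch` and the sample IPs are hypothetical. In the real topology the result also depends on the earlier record having been written to HBase before the next tuple is queried.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.HashSet;
import java.util.List;
import java.util.Set;

public class FirstSeenSketch {

    // For each key in arrival order, returns 1 if the key has not appeared before, else 0.
    static List<Integer> firstSeenFlags(List<String> keys) {
        Set<String> seen = new HashSet<String>(); // stands in for the stored history
        List<Integer> flags = new ArrayList<Integer>();
        for (String key : keys) {
            // Set.add returns true only when the element was not already present.
            flags.add(seen.add(key) ? 1 : 0);
        }
        return flags;
    }

    public static void main(String[] args) {
        List<String> ips = Arrays.asList("10.0.0.1", "10.0.0.1", "10.0.0.2");
        System.out.println(firstSeenFlags(ips)); // prints [1, 0, 1]
    }
}
```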

newcust

package com.liming.flux;

import java.util.UUID;

import backtype.storm.Config;

import backtype.storm.LocalCluster;

import backtype.storm.generated.StormTopology;

import backtype.storm.spout.SchemeAsMultiScheme;

import backtype.storm.topology.TopologyBuilder;

import backtype.storm.utils.Utils;

import storm.kafka.BrokerHosts;

import storm.kafka.KafkaSpout;

import storm.kafka.SpoutConfig;

import storm.kafka.StringScheme;

import storm.kafka.ZkHosts;

public class FluxTopology {

public static void main(String[] args) {

// ID of the spout; must be unique

String KAFKA_SPOUT_ID = "flux_spout";

// Kafka topic to consume

String CONSUME_TOPIC = "flux_topic";

// ZooKeeper address to connect to

String ZK_HOSTS = "192.168.239.129:2181";

// Configure the connection parameters

BrokerHosts hosts = new ZkHosts(ZK_HOSTS);

SpoutConfig spoutConfig = new SpoutConfig(hosts, CONSUME_TOPIC, "/" + CONSUME_TOPIC, UUID.randomUUID().toString());

spoutConfig.scheme = new SchemeAsMultiScheme(new StringScheme());

// Read data from Kafka and emit it

KafkaSpout kafkaSpout = new KafkaSpout(spoutConfig);

// Create the TopologyBuilder instance

TopologyBuilder builder = new TopologyBuilder();

builder.setSpout(KAFKA_SPOUT_ID, kafkaSpout);

// Clean the data

builder.setBolt("ClearBolt", new ClearBolt()).shuffleGrouping(KAFKA_SPOUT_ID);

// Compute PV

builder.setBolt("PvBolt", new PvBolt()).shuffleGrouping("ClearBolt");

// Compute UV

builder.setBolt("UvBolt", new UvBolt()).shuffleGrouping("PvBolt");

// Compute VV

builder.setBolt("VvBolt", new VvBolt()).shuffleGrouping("UvBolt");

// Compute newip

builder.setBolt("NewipBolt", new NewipBolt()).shuffleGrouping("VvBolt");

// Compute newcust

builder.setBolt("NewcustBolt", new NewcustBolt()).shuffleGrouping("NewipBolt");

builder.setBolt("PrintBolt", new PrintBolt()).shuffleGrouping("NewcustBolt");

builder.setBolt("ToHbaseBolt", new ToHbaseBolt()).shuffleGrouping("NewcustBolt");

StormTopology topology = builder.createTopology();

// Submit the topology to the cluster

Config conf = new Config();

LocalCluster cluster = new LocalCluster();

cluster.submitTopology("MyTopology", conf, topology);

// Let the topology run for 1000 seconds (Utils.sleep takes milliseconds), then kill it and shut down the cluster

Utils.sleep(1000 * 1000);

cluster.killTopology("MyTopology");

cluster.shutdown();

}

}

The intermediate data is looked up as follows:

package com.liming.flux;

import java.util.List;

import java.util.Map;

import com.liming.flux.dao.HBaseDao;

import com.liming.flux.domain.FluxInfo;

import backtype.storm.task.OutputCollector;

import backtype.storm.task.TopologyContext;

import backtype.storm.topology.OutputFieldsDeclarer;

import backtype.storm.topology.base.BaseRichBolt;

import backtype.storm.tuple.Fields;

import backtype.storm.tuple.Tuple;

import backtype.storm.tuple.Values;

public class NewcustBolt extends BaseRichBolt {

private OutputCollector collector;

@Override

public void prepare(Map arg0, TopologyContext arg1, OutputCollector collector) {

this.collector = collector;

}

@Override

public void execute(Tuple input) {

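// Copy the values so the input tuple itself is never mutated

List<Object> values = new java.util.ArrayList<Object>(input.getValues());

// If this uv_id has never appeared before, the value is 1; otherwise it is 0

List<FluxInfo> list = HBaseDao.queryData("^[^_]*_"+input.getStringByField("uv_id")+"_.*$");

values.add(list.size()==0?1:0);

collector.emit(new Values(values.toArray()));

// BaseRichBolt does not ack automatically, so acknowledge the input explicitly

collector.ack(input);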

}

@Override

public void declareOutputFields(OutputFieldsDeclarer declarer) {

declarer.declare(new Fields("time","uv_id","ss_id","ss_time","urlname","cip","pv","uv","vv","newip","newcust"));

}

}

The test results are as follows:

Persisting the data to MySQL

1. Set up MySQL

Create the database: create database flux;

Select it: use flux;

Create the table: create table flux(id int primary key auto_increment,time date,pv int,uv int,vv int,newip int,newcust int);

Verify that the table was created: show tables;

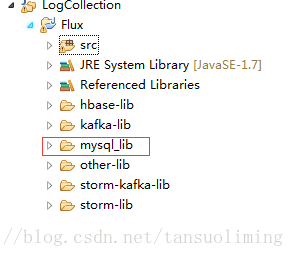

2. Import the required JARs

Resource: fef3


3. Write the code

package com.liming.flux;

import java.util.UUID;

import backtype.storm.Config;

import backtype.storm.LocalCluster;

import backtype.storm.generated.StormTopology;

import backtype.storm.spout.SchemeAsMultiScheme;

import backtype.storm.topology.TopologyBuilder;

import backtype.storm.utils.Utils;

import storm.kafka.BrokerHosts;

import storm.kafka.KafkaSpout;

import storm.kafka.SpoutConfig;

import storm.kafka.StringScheme;

import storm.kafka.ZkHosts;

public class FluxTopology {

public static void main(String[] args) {

// ID of the spout; must be unique

String KAFKA_SPOUT_ID = "flux_spout";

// Kafka topic to consume

String CONSUME_TOPIC = "flux_topic";

// ZooKeeper address to connect to

String ZK_HOSTS = "192.168.239.129:2181";

// Configure the connection parameters

BrokerHosts hosts = new ZkHosts(ZK_HOSTS);

SpoutConfig spoutConfig = new SpoutConfig(hosts, CONSUME_TOPIC, "/" + CONSUME_TOPIC, UUID.randomUUID().toString());

spoutConfig.scheme = new SchemeAsMultiScheme(new StringScheme());

// Read data from Kafka and emit it

KafkaSpout kafkaSpout = new KafkaSpout(spoutConfig);

// Create the TopologyBuilder instance

TopologyBuilder builder = new TopologyBuilder();

builder.setSpout(KAFKA_SPOUT_ID, kafkaSpout);

// Clean the data

builder.setBolt("ClearBolt", new ClearBolt()).shuffleGrouping(KAFKA_SPOUT_ID);

// Compute PV

builder.setBolt("PvBolt", new PvBolt()).shuffleGrouping("ClearBolt");

// Compute UV

builder.setBolt("UvBolt", new UvBolt()).shuffleGrouping("PvBolt");

// Compute VV

builder.setBolt("VvBolt", new VvBolt()).shuffleGrouping("UvBolt");

// Compute newip

builder.setBolt("NewipBolt", new NewipBolt()).shuffleGrouping("VvBolt");

// Compute newcust

builder.setBolt("NewcustBolt", new NewcustBolt()).shuffleGrouping("NewipBolt");

// Persist to MySQL

builder.setBolt("ToMysqlBolt", new ToMysqlBolt()).shuffleGrouping("NewcustBolt");

builder.setBolt("PrintBolt", new PrintBolt()).shuffleGrouping("NewcustBolt");

builder.setBolt("ToHbaseBolt", new ToHbaseBolt()).shuffleGrouping("NewcustBolt");

StormTopology topology = builder.createTopology();

// Submit the topology to the cluster

Config conf = new Config();

LocalCluster cluster = new LocalCluster();

cluster.submitTopology("MyTopology", conf, topology);

// Let the topology run for 1000 seconds (Utils.sleep takes milliseconds), then kill it and shut down the cluster

Utils.sleep(1000 * 1000);

cluster.killTopology("MyTopology");

cluster.shutdown();

}

}

package com.liming.flux;

import java.util.Date;

import java.util.Map;

import com.liming.flux.dao.MysqlDao;

import com.liming.flux.domain.ResultInfo;

import com.liming.flux.utils.FluxUtils;

import backtype.storm.task.OutputCollector;

import backtype.storm.task.TopologyContext;

import backtype.storm.topology.OutputFieldsDeclarer;

import backtype.storm.topology.base.BaseRichBolt;

import backtype.storm.tuple.Tuple;

public class ToMysqlBolt extends BaseRichBolt {

private OutputCollector collector;

@Override

public void prepare(Map stormConf, TopologyContext context, OutputCollector collector) {

// Keep the collector so that execute() can ack each tuple

this.collector = collector;

}

@Override

public void execute(Tuple input) {

ResultInfo ri = new ResultInfo();

String time = input.getStringByField("time");

Date date = FluxUtils.parseDateStr(time);

ri.setTime(new java.sql.Date(date.getTime()));

ri.setPv(input.getIntegerByField("pv"));

ri.setUv(input.getIntegerByField("uv"));

ri.setVv(input.getIntegerByField("vv"));

ri.setNewip(input.getIntegerByField("newip"));

ri.setNewcust(input.getIntegerByField("newcust"));

MysqlDao.insert(ri);

// Acknowledge the tuple once the row has been written

collector.ack(input);

}

@Override

public void declareOutputFields(OutputFieldsDeclarer declarer) {

}

}

MySQL access class (DAO)

package com.liming.flux.dao;

import java.sql.Connection;

import java.sql.DriverManager;

import java.sql.PreparedStatement;

import java.sql.SQLException;

import com.liming.flux.domain.ResultInfo;

public class MysqlDao {

private MysqlDao() {

}

public static void insert(ResultInfo ri){

try {

Class.forName("com.mysql.jdbc.Driver");

} catch (ClassNotFoundException e) {

throw new RuntimeException(e);

}

// try-with-resources closes ps and conn even when the insert or a close() call fails

try (Connection conn = DriverManager.getConnection("jdbc:mysql://hadoop01:3306/flux","root","root");

PreparedStatement ps = conn.prepareStatement("insert into flux values (null,?,?,?,?,?,?)")) {

ps.setDate(1, ri.getTime());

ps.setInt(2, ri.getPv());

ps.setInt(3, ri.getUv());

ps.setInt(4, ri.getVv());

ps.setInt(5, ri.getNewip());

ps.setInt(6, ri.getNewcust());

ps.executeUpdate();

} catch (SQLException e) {

throw new RuntimeException(e);

}

}

}

Entity class

package com.liming.flux.domain;

import java.sql.Date;

public class ResultInfo {

private Date time;

private int pv;

private int uv;

private int vv;

private int newip;

private int newcust;

public ResultInfo() {

}

public ResultInfo(Date time, int pv, int uv, int vv, int newip, int newcust) {

super();

this.time = time;

this.pv = pv;

this.uv = uv;

this.vv = vv;

this.newip = newip;

this.newcust = newcust;

}

public Date getTime() {

return time;

}

public void setTime(Date time) {

this.time = time;

}

public int getPv() {

return pv;

}

public void setPv(int pv) {

this.pv = pv;

}

public int getUv() {

return uv;

}

public void setUv(int uv) {

this.uv = uv;

}

public int getVv() {

return vv;

}

public void setVv(int vv) {

this.vv = vv;

}

public int getNewip() {

return newip;

}

public void setNewip(int newip) {

this.newip = newip;

}

public int getNewcust() {

return newcust;

}

public void setNewcust(int newcust) {

this.newcust = newcust;

}

}

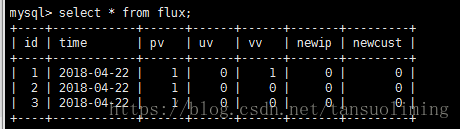

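Test: check whether MySQL received the correct data

Data collected when the same user visits three times, each time in a newly opened browser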
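Because each row in the flux table describes a single record, daily totals can be obtained by summing the indicator columns per day. The sketch below does this in memory. It assumes that pv is 1 on every row (PvBolt is not shown in this article) and that the other columns are 0/1 flags; the rows are hypothetical illustration values, not the data from the screenshot, and the class `DailyTotalsSketch` is not part of the project.

```java
import java.util.Arrays;
import java.util.LinkedHashMap;
import java.util.Map;

public class DailyTotalsSketch {

    // Sums pv, uv, vv, newip and newcust per day.
    // Each row is {time, pv, uv, vv, newip, newcust} as strings, mirroring the flux table columns.
    static Map<String, int[]> totalsByDay(String[][] rows) {
        Map<String, int[]> totals = new LinkedHashMap<String, int[]>();
        for (String[] row : rows) {
            int[] sum = totals.get(row[0]);
            if (sum == null) {
                sum = new int[5];
                totals.put(row[0], sum);
            }
            for (int i = 0; i < 5; i++) {
                sum[i] += Integer.parseInt(row[i + 1]);
            }
        }
        return totals;
    }

    public static void main(String[] args) {
        // Hypothetical rows: one known user, three new sessions, one IP.
        String[][] rows = {
                {"2024-01-01", "1", "1", "1", "1", "1"},
                {"2024-01-01", "1", "0", "1", "0", "0"},
                {"2024-01-01", "1", "0", "1", "0", "0"},
        };
        int[] day = totalsByDay(rows).get("2024-01-01");
        System.out.println(Arrays.toString(day)); // prints [3, 1, 3, 1, 1]
    }
}
```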