模块开发----统计分析

每一种统计指标都可以跟各维度表进行钻取。

-

分组条件判别技巧

如果需求中出现

每xxx 各xxx 按xxx, 很大可能就是分组的字段条件 -

设置智能本地模式 :

set hive.exec.mode.local.auto=true;

1. 流量分析

1.1 多维度统计pv总量

-

需求 : 计算该处理批次(一天)中各小时pvs

处理数据所在的表 : ods_weblog_detail

分组条件 : 时间维度 (day hour)

表中天是分区字段 , 可以不通过group by即可过滤出所需要的天

-- 查询到的就是一天中各小时的pvs , 自己编写的查询sql

select

t.month,t.day,t.hour,count(*) as pvs

from ods_weblog_detail t

where t.datestr='20130918'

group by t.month,t.day,t.hour;

------------------------------------------------

-- 将查询到的结果保存到hdfs上

-- 第一种方式:直接在ods_weblog_detail单表上进行查询

-- 计算该处理批次(一天)中的各小时pvs

-- 首先创建一个表用于存储查询得到的数据

drop table dw_pvs_everyhour_oneday;

create table dw_pvs_everyhour_oneday(month string,day string,hour string,pvs bigint) partitioned by(datestr string);

-- 将查询的结果存储到新建的表中

insert into table dw_pvs_everyhour_oneday partition(datestr='20130918')

select a.month as month,a.day as day,a.hour as hour,count(*) as pvs from ods_weblog_detail a

where a.datestr='20130918' group by a.month,a.day,a.hour;

- 需求 : 计算每天的pvs

方式一 : 在上一个基础上sum每个小时就构成了一天

Insert into table dw_pvs_everyday

Select sum(pvs) as pvs,month,day from dw_pvs_everyhour_oneday group by month,day having day='18';

方式二 : 只能查询出一天的pvs量

select

count(*) as pvs

from ods_weblog_detail t

where t.datestr='20130918';

方式三 : 可以按天和月进行分组

select

t.month,t.day,count(*) as pvs

from ods_weblog_detail t

where t.datestr='20130918'

group by t.month,t.day;

---------------------------------------------

-- 将查询的结果保存到hdfs上

-- 直接在ods_weblog_detail单表上进行查询

--计算每天的pvs

drop table dw_pvs_everyday;

create table dw_pvs_everyday(pvs bigint,month string,day string);

insert into table dw_pvs_everyday

select count(*) as pvs,a.month as month,a.day as day from ods_weblog_detail a

group by a.month,a.day;

---------------------------------------

方式四 :跟时间的维度表进行join

--维度 : 月

drop table dw_pvs_everymonth;

create table dw_pvs_everymonth (pvs bigint,month string);

insert into table dw_pvs_everymonth

select count(*) as pvs,a.month from (select distinct month from t_dim_time) a

join ods_weblog_detail b on a.month=b.month group by a.month;

--维度 : 日

计算每天pvs

select count(*) as pvs,a.month as month,a.day as day from (select distinct month, day from t_dim_time) a

join ods_weblog_detail b

on a.month=b.month and a.day=b.day

group by a.month,a.day;

--维度 : 小时

计算每小时pvs

select count(*) as pvs,a.month as month,a.day as day,a.hour as hour from (select distinct month, day ,hour from t_dim_time) a

join ods_weblog_detail b

on a.month=b.month and a.day=b.day and a.hour=b.hour

group by a.month,a.day,a.hour;

执行最终无结果 原因是:宽表中hour字段的提取有误

substring(time_local,11,3) as hour

变成substring(time_local,12,2) as hour

-

需求 : 统计每小时各来访url产生的pvs

表 : ods_weblog_detail

分组字段 : 时间(hour) url(http_referer)

select

t.http_referer,t.ref_host,t.month,t.day,t.hour,count(*) as pvs

from ods_weblog_detail t

where t.datestr='20130918'

group by t.http_referer,t.ref_host,t.month,t.day,t.hour limit 10;

能执行 没考虑无意义数据

select

t.http_referer,t.ref_host,t.month,t.day,t.hour,count(*) as pvs

from ods_weblog_detail t

where t.datestr='20130918'

group by t.http_referer,t.ref_host,t.month,t.day,t.hour

having t.ref_host is not null limit 10;

如果再根据pvs数量倒序排序

select

t.http_referer,t.ref_host,t.month,t.day,t.hour,count(*) as pvs

from ods_weblog_detail t

where t.datestr='20130918'

group by t.http_referer,t.ref_host,t.month,t.day,t.hour

having t.ref_host is not null

order by pvs desc limit 10;

--统计每小时各来访url产生的pv量,查询结果存入:("dw_pvs_referer_everyhour" )

--并且将数据保存到hdfs

drop table dw_pvs_referer_everyhour;

create table dw_pvs_referer_everyhour(referer_url string,referer_host string,month string,day string,hour string,pv_referer_cnt bigint) partitioned by(datestr string);

insert into table dw_pvs_referer_everyhour partition(datestr='20130918')

select http_referer,ref_host,month,day,hour,count(1) as pv_referer_cnt

from ods_weblog_detail

group by http_referer,ref_host,month,day,hour

having ref_host is not null

order by hour asc,day asc,month asc,pv_referer_cnt desc;

-

需求:统计每小时各来访host的产生的pv数并排序

表 : ods_weblog_detail

分组 : 时间(hour) host

方式一:在上一个基础之上 根据host分组 sum每个pvs

方式二:去除无意义数据

select

t.ref_host,t.hour,count(1) as pvs

from ods_weblog_detail t

group by t.ref_host,t.hour

having t.ref_host is not null

order by pvs desc limit 10;

--统计每小时各来访host的产生的pv数并排序

--并且将数据保存到hdfs

drop table dw_pvs_refererhost_everyhour;

create table dw_pvs_refererhost_everyhour(ref_host string,month string,day string,hour string,ref_host_cnts bigint) partitioned by(datestr string);

insert into table dw_pvs_refererhost_everyhour partition(datestr='20130918')

select ref_host,month,day,hour,count(1) as ref_host_cnts

from ods_weblog_detail

group by ref_host,month,day,hour

having ref_host is not null

order by hour asc,day asc,month asc,ref_host_cnts desc;

扩展了解:User Agent 也简称 UA。它是一个特殊字符串头,是一种向访问网站提供所使用的浏览器类型及版本、操作系统及版本、浏览器内核、等信息的标识。

https://blog.csdn.net/laozhaokun/article/details/40621605

- 按终端维度(了解)

下列查询参考即可

select distinct(http_user_agent) from ods_weblog_detail where http_user_agent like '%Chrome%' limit 200;

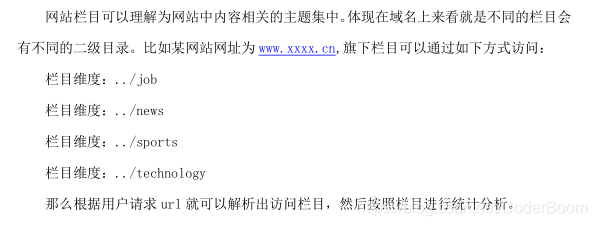

- 按栏目维度(了解)

- 按 按 referer 维度(了解)

-- 统计每小时各来访 url 产生的 pv 量 drop table dw_pvs_referer_everyhour; create table dw_pvs_referer_everyhour(referer_url string,referer_host string,month string,day string,hour string,pv_referer_cnt bigint) partitioned by(datestr string); insert into table dw_pvs_referer_everyhour partition(datestr='20130918') select http_referer,ref_host,month,day,hour,count(1) as pv_referer_cnt from ods_weblog_detail group by http_referer,ref_host,month,day,hour having ref_host is not null order by hour asc,day asc,month asc,pv_referer_cnt desc; -- 统计每小时各来访 host 的产生的 pv 数并排序 drop table dw_pvs_refererhost_everyhour; create table dw_pvs_refererhost_everyhour(ref_host string,month string,day string,hour string,ref_host_cnts bigint) partitioned by(datestr string); insert into table dw_pvs_refererhost_everyhour partition(datestr='20130918') select ref_host,month,day,hour,count(1) as ref_host_cnts from ods_weblog_detail group by ref_host,month,day,hour having ref_host is not null order by hour asc,day asc,month asc,ref_host_cnts desc;

注:还可以按来源地域维度、访客终端维度等计算

总结多维分析步骤:

- 理清需求的意义 包括指标的含义

- 确定数据在哪 表(可能是已有的表 可能需求子查询先得出这个表)

- 确定分组条件 字段(

每 按 各) - 得出度量值(max min count sum avg topN)

-

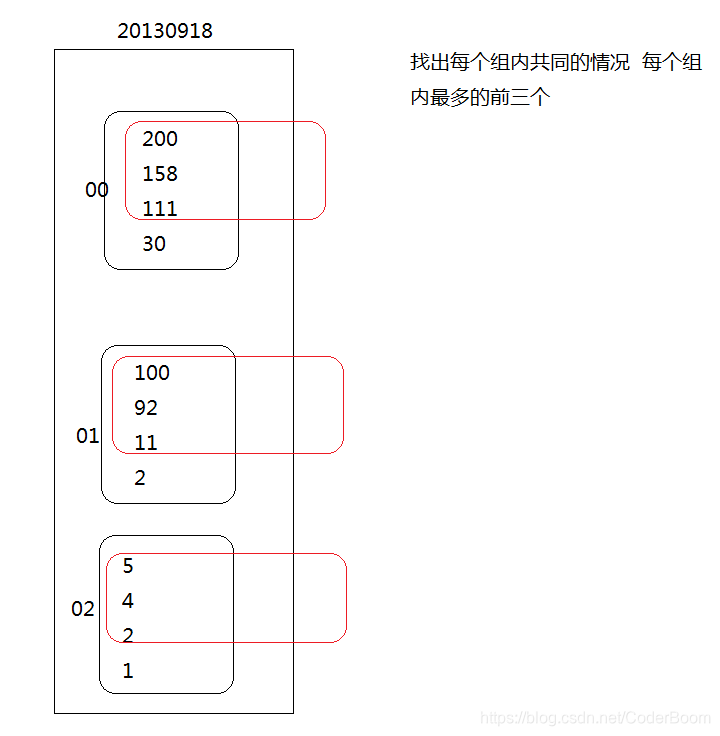

需求:按照时间维度,统计一天内各小时产生最多pvs的来源(host)topN(分组Top)

分组字段 : 时间(hour) 来源(host) pvs

表数据 : dw_pvs_refererhost_everyhour

度量:top3

知识点 : TOPN ( 分组 TOP)

row_number()函数

语法:row_number() over (partition by xxx order by xxx) rank,rank 为分组的别名,相当于新增一个字段为 rank。

row_number ,rank ,dense_ran

示例数据:

1 a 10

2 a 12

3 b 13

4 b 12

5 a 14

6 a 15

7 a 13

8 b 11

9 a 16

10 b 17

11 a 14

sql语句:

select id,

name,

sal,

rank()over(partition by name order by sal desc ) rp,

dense_rank() over(partition by name order by sal desc ) drp,

row_number()over(partition by name order by sal desc) rmp

from f_test

结果展示

10 b 17 1 1 1

3 b 13 2 2 2

4 b 12 3 3 3

8 b 11 4 4 4

9 a 16 1 1 1

6 a 15 2 2 2

11 a 14 3 3 3

5 a 14 3 3 4

7 a 13 5 4 5

2 a 12 6 5 6

1 a 10 7 6 7

详情请见下图

row over()考虑了数据的重复性 , 挤占坑位

语法 : row() over(partition by xxx order by xxx) as rank

dense_rank over()考虑了数据的重复性 , 不挤占坑位

语法 : dense_rank() over(partition by xxx order by xxx) as rank

row_number_over()不考虑数据的重复性

语法 : row_number() over(partition by xxx order by xxx) as rank

一般找出topN我们都采用dense_rank over()

- 统计pv总量最大的来源TOPN

--需求:按照时间维度,统计一天内各小时产生最多pvs的来源topN

select ref_host,ref_host_cnts,concat(month,day,hour),

row_number() over (partition by concat(month,day,hour) order by ref_host_cnts desc) od

from dw_pvs_refererhost_everyhour;

--row_number函数

select ref_host,ref_host_cnts,concat(month,day,hour),

row_number() over (partition by concat(month,day,hour) order by ref_host_cnts desc) as od

from dw_pvs_refererhost_everyhour;

--综上可以得出

--将数据保存到hdfs中

drop table dw_pvs_refhost_topn_everyhour;

create table dw_pvs_refhost_topn_everyhour(

hour string,

toporder string,

ref_host string,

ref_host_cnts string

)partitioned by(datestr string);

insert into table dw_pvs_refhost_topn_everyhour partition(datestr='20130918')

select t.hour,t.od,t.ref_host,t.ref_host_cnts from

(select ref_host,ref_host_cnts,concat(month,day,hour) as hour,

row_number() over (partition by concat(month,day,hour) order by ref_host_cnts desc) as od

from dw_pvs_refererhost_everyhour) t where od<=3;

tips:

concat : concat()函数用于将多个字符串连接成一个字符串

concat(str1,str2,…)

返回结果为连接参数产生的字符串。如有任何一个参数为NULL ,则返回值为 NULL。

contcat_ws(separator,str1,str2,…)

contcat_ws() 代表 CONCAT With Separator ,是CONCAT()的特殊形式。第一个参数是其它参数的分隔符。分隔符的位置放在要连接的两个字符串之间。分隔符可以是一个字符串,也可以是其它参数。注意:如果分隔符为 NULL,则结果为 NULL。函数会忽略任何分隔符参数后的 NULL 值。

如连接后以逗号分隔

和MySQL中concat函数不同的是, concat_ws函数在执行的时候,不会因为NULL值而返回NULL

-

需求:统计今日所有来访者平均请求的页面数。

-

总的请求页面数/总人数

表 : ods_weblog_detail

--首先计算每个人产生的请求页面数 select t.remote_addr,count(1) as pvs from ods_weblog_detail t where t.datestr='20130918' group by t.remote_addr; --再算平均每个人的请求页面数 select sum(a.pvs)/count(a.t.remote_addr) from (select t.remote_addr,count(1) as pvs from ods_weblog_detail t where t.datestr='20130918' group by t.remote_addr) a; 报错: Invalid column reference 't' 在嵌套子查询中 不能多级嵌套引用 可以采用别名的方式引用 --正确写法 select sum(a.pvs)/count(a.ip) from (select t.remote_addr as ip,count(1) as pvs from ods_weblog_detail t where t.datestr='20130918' group by t.remote_addr) a; ---------------------------------------------------- --需求描述:统计今日所有来访者平均请求的页面数。 --总页面请求数/去重总人数 --将数据保存到hdfs上 drop table dw_avgpv_user_everyday; create table dw_avgpv_user_everyday( day string, avgpv string); insert into table dw_avgpv_user_everyday select '20130918',sum(b.pvs)/count(b.remote_addr) from (select remote_addr,count(1) as pvs from ods_weblog_detail where datestr='20130918' group by remote_addr) b; -------------------- --或者如下形式也行 select allen.avgpvs from (select sum(a.pvs)/count(a.ip) as avgpvs from (select t.remote_addr as ip,count(*) as pvs from ods_weblog_detail t where t.datestr='20130918' group by t.remote_addr) a) allen -

2. 受访分析(从页面的角度分析)

-

需求: 统计每日最热门的页面 top10

表:ods_weblog_detail

分组:天(分区字段 where)页面(request)

方式一 : row_number() over (partition by xxx order by xx) as step因为此处的分组字段天恰巧也是分区字段 就不需要通过groupby 过滤

select t.request,count(*) as pages from ods_weblog_detail t where t.datestr='20130918' group by t.request order by pages desc limit 10; --------------------------------------- --热门页面统计 --统计每日最热门的页面top10 --并且将数据保存到hdfs中 drop table dw_hotpages_everyday; create table dw_hotpages_everyday(day string,url string,pvs string); insert into table dw_hotpages_everyday select '20130918',a.request,a.request_counts from (select request as request,count(request) as request_counts from ods_weblog_detail where datestr='20130918' group by request having request is not null) a order by a.request_counts desc limit 10;

3. 访客分析

3.1 独立访客

- 需求 : 按照时间维度比如小时来统计独立访客及其产生的 pv。

--时间维度:时

--将查询到的数据保存到hdfs中

drop table dw_user_dstc_ip_h;

create table dw_user_dstc_ip_h(

remote_addr string,

pvs bigint,

hour string);

insert into table dw_user_dstc_ip_h

select remote_addr,count(1) as pvs,concat(month,day,hour) as hour

from ods_weblog_detail

Where datestr='20130918'

group by concat(month,day,hour),remote_addr;

--在上述基础之上,可以继续分析,比如每小时独立访客总数

select count(1) as dstc_ip_cnts,hour from dw_user_dstc_ip_h group by hour;

--时间维度:日

select remote_addr,count(1) as counts,concat(month,day) as day

from ods_weblog_detail

Where datestr='20130918'

group by concat(month,day),remote_addr;

--时间维度: 月

select remote_addr,count(1) as counts,month

from ods_weblog_detail

group by month,remote_addr;

tips : concat可以将三个字段作为一个来划分

-

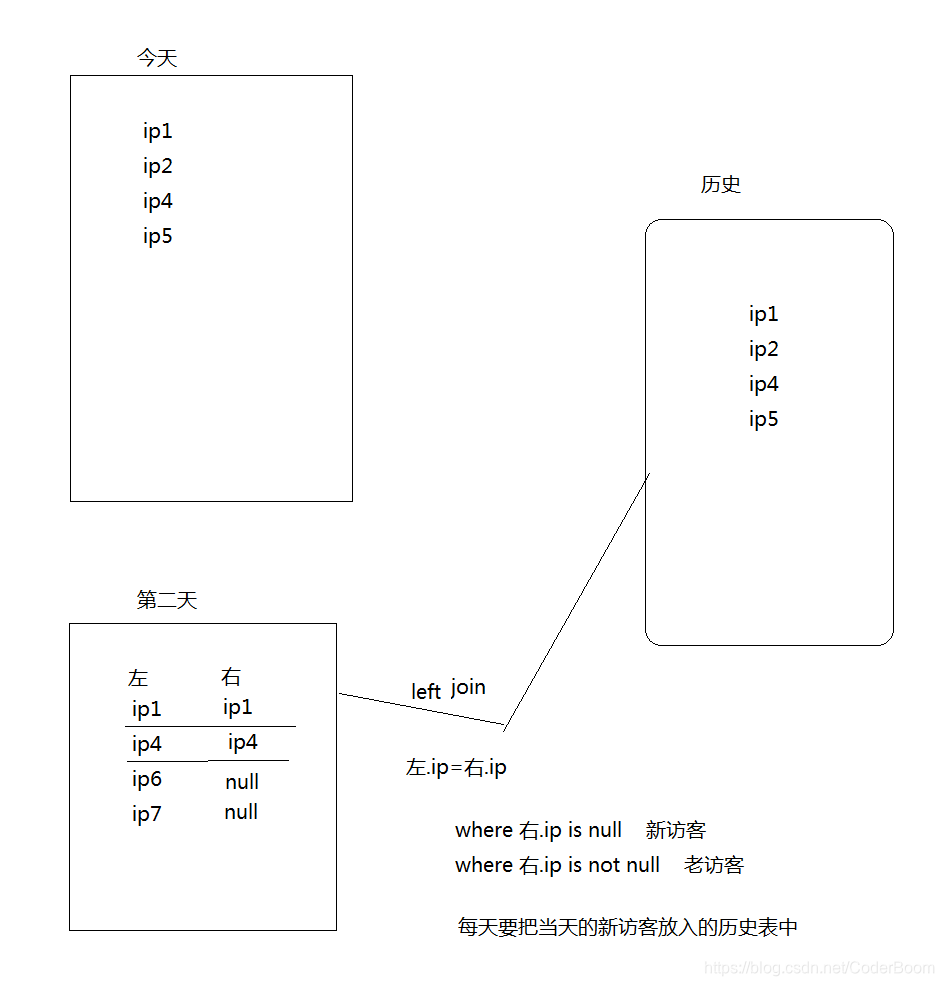

需求:每日新访客

思路见画图

--获取的是新访客

select

今天.ip

from 今天 left join 历史 on 今天.ip=历史.ip

where 历史.ip is null;

今天 : (如何获取今天所有的访客) 也就是今天的独立访客(UV)

select

distinct t.remote_addr

from ods_weblog_detail t

where t.datestr='20130918';

历史:dw_user_dsct_history

--代入公式:

--新访客

select

today.ip

from(select

distinct t.remote_addr

from ods_weblog_detail t

where t.datestr='20130918') today left join dw_user_dsct_history history on today.ip=history.ip

where history.ip is null;

--老访客

select

today.ip

from (select

distinct t.remote_addr as ip

from ods_weblog_detail t

where t.datestr='20130918') today left join dw_user_dsct_history history on today.ip=history.ip

where history.ip is not null;

------------------------------------------------

--历日去重访客累积表

drop table dw_user_dsct_history;

create table dw_user_dsct_history(

day string,

ip string

)

partitioned by(datestr string);

--每日新用户追加到累计表

insert into table dw_user_dsct_history partition(datestr='20130918')

select day,ip from dw_user_new_d where datestr='20130918';

--每日新访客表

--并且将数据保存到hdfs中

drop table dw_user_new_d;

create table dw_user_new_d (

day string,

ip string

)

partitioned by(datestr string);

--每日新用户插入新访客表

insert into table dw_user_new_d partition(datestr='20130918')

select tmp.day as day,tmp.today_addr as new_ip from

(

select today.day as day,today.remote_addr as today_addr,old.ip as old_addr

from

(select distinct remote_addr as remote_addr,"20130918" as day from ods_weblog_detail where datestr="20130918") today

left outer join

dw_user_dsct_history old

on today.remote_addr=old.ip

) tmp

where tmp.old_addr is null;

--验证:

select count(distinct remote_addr) from ods_weblog_detail;

select count(1) from dw_user_dsct_history where datestr='20130918';

select count(1) from dw_user_new_d where datestr='20130918';

注:还可以按来源地域维度、访客终端维度等计算

4. 访客Visit分析(点击流模型)

4.1 回头/单次访客统计

-

需求:查询今日所有回头访客及其访问次数(session)。

表 : ods_click_stream_visit

--查询每天访客访问次数

select

t.remote_addr,count(t.session) as visits

from ods_click_stream_visit t

where t.datestr='20130918'

group by t.remote_addr;

--单次访客

select

*

from (select t.remote_addr,count(t.session) as visits

from ods_click_stream_visit t

where t.datestr='20130918'

group by t.remote_addr) a

where a.visits=1;

--回头访客

select

*

from (select t.remote_addr,count(t.session) as visits

from ods_click_stream_visit t

where t.datestr='20130918'

group by t.remote_addr) a

where a.visits>1;

或者 :

--回头访客

select

t.remote_addr,count(t.session) as visits

from ods_click_stream_visit t

where t.datestr='20130918'

group by t.remote_addr

having visits >1;

--单次访客

select

t.remote_addr,count(t.session) as visits

from ods_click_stream_visit t

where t.datestr='20130918'

group by t.remote_addr

having visits =1;

----------------------------------------------------

-- 回头/单次访客统计

-- 并将查询的数据保存到hdfs上

drop table dw_user_returning;

create table dw_user_returning(

day string,

remote_addr string,

acc_cnt string)

partitioned by (datestr string);

insert overwrite table dw_user_returning partition(datestr='20130918')

select tmp.day,tmp.remote_addr,tmp.acc_cnt

from

(select '20130918' as day,remote_addr,count(session) as acc_cnt from ods_click_stream_visit group by remote_addr) tmp

where tmp.acc_cnt>1;

-

需求:人均访问频次

总的session个数/人==人均会话数

总的pv数/人==人均页面访问次数

如果存在需求上的模糊点 需要进行沟通。

表:ods_click_stream_visit

--人均会话数

select

count(t.session)/count(distinct t.remote_addr)

from ods_click_stream_visit t

where t.datestr='20130918';

--人均页面访问次数

select

sum(t.pagevisits)/count(distinct t.remote_addr)

from ods_click_stream_visit t

where t.datestr='20130918';

5. 关键路径转化率分析 (漏斗模型)

-

级联求和思路

如果发现需求中的指标计算需要当前的数据跟之前的数据有关,解题方向就是自己join自己。

只有当自己和自己join的时候 当前的状态就会跟之前的状态出现在一行中。

测试 :

create table t_salary_detail(username string,month string,salary int)

row format delimited fields terminated by ',';

load data local inpath '/root/hivedata/t_salary_detail.dat' into table t_salary_detail;

A,2015-01,5

A,2015-01,15

B,2015-01,5

A,2015-01,8

B,2015-01,25

A,2015-01,5

A,2015-02,4

A,2015-02,6

B,2015-02,10

B,2015-02,5

A,2015-03,7

A,2015-03,9

B,2015-03,11

B,2015-03,6

select * from t_salary_detail;

+--------------------------+-----------------------+------------------------+--+

| t_salary_detail.username | t_salary_detail.month | t_salary_detail.salary |

+--------------------------+-----------------------+------------------------+--+

| A | 2015-01 | 5 |

| A | 2015-01 | 15 |

| B | 2015-01 | 5 |

| A | 2015-01 | 8 |

| B | 2015-01 | 25 |

| A | 2015-01 | 5 |

| A | 2015-02 | 4 |

| A | 2015-02 | 6 |

| B | 2015-02 | 10 |

| B | 2015-02 | 5 |

| A | 2015-03 | 7 |

| A | 2015-03 | 9 |

| B | 2015-03 | 11 |

| B | 2015-03 | 6 |

+--------------------------+-----------------------+------------------------+--+

1、第一步,先求个用户的月总金额 , 有上述

select username,month,sum(salary) as salary from t_salary_detail group by username,month;

+-----------+----------+---------+-

| username | month | salary | total(累加)

+-----------+----------+---------+-

| A | 2015-01 | 33 | 33

| A | 2015-02 | 10 | 43

| A | 2015-03 | 16 | 59

| B | 2015-01 | 33 | 33

| B | 2015-02 | 15 | 48

| B | 2015-03 | 17 | 65

+-----------+----------+---------+--+

2、第二步,将月总金额表 自己连接 自己连接

由于我们需要将需求中的数据整理到一行 , 因此我们使用自己join自己

select A.*,B.* FROM

(select username,month,sum(salary) as salary from t_salary_detail group by username,month) A

inner join

(select username,month,sum(salary) as salary from t_salary_detail group by username,month) B

on

A.username=B.username

where B.month <= A.month;

+-------------+----------+-----------+-------------+----------+-----------+--+

| a.username | a.month | a.salary | b.username | b.month | b.salary |

+-------------+----------+-----------+-------------+----------+-----------+--+

| A | 2015-01 | 33 | A | 2015-01 | 33 |

| A | 2015-02 | 10 | A | 2015-01 | 33 |

| A | 2015-02 | 10 | A | 2015-02 | 10 |

| A | 2015-03 | 16 | A | 2015-01 | 33 |

| A | 2015-03 | 16 | A | 2015-02 | 10 |

| A | 2015-03 | 16 | A | 2015-03 | 16 |

| B | 2015-01 | 30 | B | 2015-01 | 30 |

| B | 2015-02 | 15 | B | 2015-01 | 30 |

| B | 2015-02 | 15 | B | 2015-02 | 15 |

| B | 2015-03 | 17 | B | 2015-01 | 30 |

| B | 2015-03 | 17 | B | 2015-02 | 15 |

| B | 2015-03 | 17 | B | 2015-03 | 17 |

+-------------+----------+-----------+-------------+----------+-----------+--+

3、第三步,从上一步的结果中

进行分组查询,分组的字段是a.username a.month

求月累计值: 将b.month <= a.month的所有b.salary求和即可

select A.username,A.month,max(A.salary) as salary,sum(B.salary) as accumulate

from

(select username,month,sum(salary) as salary from t_salary_detail group by username,month) A

inner join

(select username,month,sum(salary) as salary from t_salary_detail group by username,month) B

on

A.username=B.username

where B.month <= A.month

group by A.username,A.month

order by A.username,A.month;

+-------------+----------+---------+-------------+--+

| a.username | a.month | salary | accumulate |

+-------------+----------+---------+-------------+--+

| A | 2015-01 | 33 | 33 |

| A | 2015-02 | 10 | 43 |

| A | 2015-03 | 16 | 59 |

| B | 2015-01 | 30 | 30 |

| B | 2015-02 | 15 | 45 |

| B | 2015-03 | 17 | 62 |

+-------------+----------+---------+-------------+--+

-

需求 : 在一条指定的业务流程中,各个步骤的完成人数及相对上一个步骤的百分比。

tips: union操作符合并两个或多个 SELECT 语句的结果。

load data local inpath '/root/hivedata/click-part-r-00000' overwrite into table ods_click_pageviews partition(datestr='20130920');

----------------------------------------------------------

---1、查询每一个步骤的总访问人数

UNION将多个SELECT语句的结果集合并为一个独立的结果集

create table dw_oute_numbs as

select 'step1' as step,count(distinct remote_addr) as numbs from ods_click_pageviews where datestr='20130920' and request like '/item%'

union

select 'step2' as step,count(distinct remote_addr) as numbs from ods_click_pageviews where datestr='20130920' and request like '/category%'

union

select 'step3' as step,count(distinct remote_addr) as numbs from ods_click_pageviews where datestr='20130920' and request like '/order%'

union

select 'step4' as step,count(distinct remote_addr) as numbs from ods_click_pageviews where datestr='20130920' and request like '/index%';

select * from dw_oute_numbs;

+---------------------+----------------------+--+

| dw_oute_numbs.step | dw_oute_numbs.numbs |

+---------------------+----------------------+--+

| step1 | 1029 |

| step2 | 1029 |

| step3 | 1028 |

| step4 | 1018 |

+---------------------+----------------------+--+

----------------------------------------------------------------------------

--2、查询每一步骤相对于路径起点人数的比例

--级联查询,自己跟自己join

select rn.step as rnstep,rn.numbs as rnnumbs,rr.step as rrstep,rr.numbs as rrnumbs from dw_oute_numbs rn

inner join

dw_oute_numbs rr;

自join后结果如下图所示:

+---------+----------+---------+----------+--+

| rnstep | rnnumbs | rrstep | rrnumbs |

+---------+----------+---------+----------+--+

| step1 | 1029 | step1 | 1029 |

| step2 | 1029 | step1 | 1029 |

| step3 | 1028 | step1 | 1029 |

| step4 | 1018 | step1 | 1029 |

| step1 | 1029 | step2 | 1029 |

| step2 | 1029 | step2 | 1029 |

| step3 | 1028 | step2 | 1029 |

| step4 | 1018 | step2 | 1029 |

| step1 | 1029 | step3 | 1028 |

| step2 | 1029 | step3 | 1028 |

| step3 | 1028 | step3 | 1028 |

| step4 | 1018 | step3 | 1028 |

| step1 | 1029 | step4 | 1018 |

| step2 | 1029 | step4 | 1018 |

| step3 | 1028 | step4 | 1018 |

| step4 | 1018 | step4 | 1018 |

+---------+----------+---------+----------+--+

--每一步的人数/第一步的人数==每一步相对起点人数比例

select tmp.rnstep,tmp.rnnumbs/tmp.rrnumbs as ratio

from

(

select rn.step as rnstep,rn.numbs as rnnumbs,rr.step as rrstep,rr.numbs as rrnumbs from dw_oute_numbs rn

inner join

dw_oute_numbs rr) tmp

where tmp.rrstep='step1';

tmp

+---------+----------+---------+----------+--+

| rnstep | rnnumbs | rrstep | rrnumbs |

+---------+----------+---------+----------+--+

| step1 | 1029 | step1 | 1029 |

| step2 | 1029 | step1 | 1029 |

| step3 | 1028 | step1 | 1029 |

| step4 | 1018 | step1 | 1029 |

--------------------------------------------------------------------------------

--3、查询每一步骤相对于上一步骤的漏出率

--首先通过自join表过滤出每一步跟上一步的记录

select rn.step as rnstep,rn.numbs as rnnumbs,rr.step as rrstep,rr.numbs as rrnumbs from dw_oute_numbs rn

inner join

dw_oute_numbs rr

where cast(substr(rn.step,5,1) as int)=cast(substr(rr.step,5,1) as int)-1;

注意:cast为Hive内置函数 类型转换

select cast(1 as float); --1.0

select cast('2016-05-22' as date); --2016-05-22

| step1 | 1029 | step2 | 1029 |

| step2 | 1029 | step3 | 1028 |

| step3 | 1028 | step4 | 1018 |

+---------+----------+---------+----------+--+

| rnstep | rnnumbs | rrstep | rrnumbs |

+---------+----------+---------+----------+--+

| step1 | 1029 | step2 | 1029 |

| step2 | 1029 | step3 | 1028 |

| step3 | 1028 | step4 | 1018 |

+---------+----------+---------+----------+--+

--然后就可以非常简单的计算出每一步相对上一步的漏出率

select tmp.rrstep as step,tmp.rrnumbs/tmp.rnnumbs as leakage_rate

from

(

select rn.step as rnstep,rn.numbs as rnnumbs,rr.step as rrstep,rr.numbs as rrnumbs from dw_oute_numbs rn

inner join

dw_oute_numbs rr) tmp

where cast(substr(tmp.rnstep,5,1) as int)=cast(substr(tmp.rrstep,5,1) as int)-1;

-----------------------------------------------------------------------------------

--4、汇总以上两种指标

select abs.step,abs.numbs,abs.rate as abs_ratio,rel.rate as leakage_rate

from

(

select tmp.rnstep as step,tmp.rnnumbs as numbs,tmp.rnnumbs/tmp.rrnumbs as rate

from

(

select rn.step as rnstep,rn.numbs as rnnumbs,rr.step as rrstep,rr.numbs as rrnumbs from dw_oute_numbs rn

inner join

dw_oute_numbs rr) tmp

where tmp.rrstep='step1'

) abs

left outer join

(

select tmp.rrstep as step,tmp.rrnumbs/tmp.rnnumbs as rate

from

(

select rn.step as rnstep,rn.numbs as rnnumbs,rr.step as rrstep,rr.numbs as rrnumbs from dw_oute_numbs rn

inner join

dw_oute_numbs rr) tmp

where cast(substr(tmp.rnstep,5,1) as int)=cast(substr(tmp.rrstep,5,1) as int)-1

) rel

on abs.step=rel.step;