openAI GPT(Generative Pre-trained Transformer)词向量模型,2018年在《Improving Language Understanding by Generative Pre-Training》这篇论文中被提出,下面从几个方面来介绍。

1.产生场景

将训练好的词向量应用到特定任务中有两个主要挑战:

(1)it is unclear what type of optimization objectives are most effective at learning text representations that are useful for transfer

(2)there is no consensus on the most effective way to transfer these learned representations to the target task

而openAI GPT模型使用了一种半监督方式,它结合了无监督的预训练(pre-training)和有监督的微调(fine-tuning),旨在学习一种通用的表示方式,它转移到各种类型的NLP任务中都可以做很少的改变。

2.特征

是一种fine-tuning方法,在fine-tuning期间利用task-aware input Transformations来实现有效传输,同时对模型架构进行最少的更改。

3.训练

语料库:BooksCorpus (800M words)

employ a two-stage training procedure. First, we use a language modeling objective on the unlabeled data to learn the initial parameters of a neural network model. Subsequently, we adapt these parameters to a target task using the corresponding supervised objective.

(1)Unsupervised pre-training

use a multi-layer Transformer decoder for the language model。This model applies a multi-headed self-attention operation over the input context tokens followed by position-wise feedforward layers to produce an output distribution over target tokens

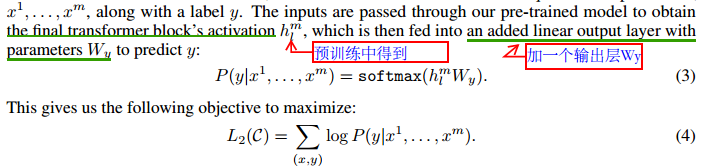

(2)Supervised fine-tuning

首先求目标函数

将语言模型作为微调(fine-tuning)的辅助目标有助于学习NLP任务,所以我们最后可以加权重组合起来,得到最新的目标函数

![]()

注意:

4.评估

对四种类型的NLP任务(12个task)进行了评估 ,其中有的dataset来自GLUE benchmark,achieves new state-of-the-art results in 9 out of the 12 datasets

(1)natural language inference(textual entailment)

dataset:MNLI、QNLI、RTE(来自GLUE benchmark)和SciTail、SNLI

(2)question answering and commonsense reasoning

dataset:Story Cloze Test和RACE dataset(consisting of English passages with associated questions from middle and high school exams)

(3)semantic similarity(predicting whether two sentences are semantically equivalent or not)

dataset:MRPC、QQP、STS-B(来自GLUE benchmark)

(4)text classification

dataset:CoLA、SST-2(来自GLUE benchmark)