Spark SQL Project Coding (Scala)

I. Create a New Project

II. Add the Dependencies to the pom File

<?xml version="1.0" encoding="UTF-8"?>
<project xmlns="http://maven.apache.org/POM/4.0.0"
         xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
         xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">
  <modelVersion>4.0.0</modelVersion>

  <groupId>com.simon.spark</groupId>
  <artifactId>spark-first</artifactId>
  <version>1.0-SNAPSHOT</version>

  <properties>
    <maven.compiler.source>1.7</maven.compiler.source>
    <maven.compiler.target>1.7</maven.compiler.target>
    <encoding>UTF-8</encoding>
    <scala.version>2.11.8</scala.version>
    <scala.compat.version>2.11</scala.compat.version>
  </properties>

  <dependencies>
    <dependency>
      <groupId>org.scala-lang</groupId>
      <artifactId>scala-library</artifactId>
      <version>${scala.version}</version>
    </dependency>
    <dependency>
      <groupId>org.apache.spark</groupId>
      <artifactId>spark-core_2.11</artifactId>
      <version>2.1.1</version>
    </dependency>
    <dependency>
      <groupId>org.apache.spark</groupId>
      <artifactId>spark-sql_2.11</artifactId>
      <version>2.1.1</version>
    </dependency>
    <dependency>
      <groupId>org.apache.spark</groupId>
      <artifactId>spark-streaming_2.11</artifactId>
      <version>2.1.1</version>
    </dependency>
    <dependency>
      <groupId>org.apache.hadoop</groupId>
      <artifactId>hadoop-client</artifactId>
      <version>2.6.4</version>
    </dependency>
  </dependencies>

  <build>
    <sourceDirectory>src/main/scala</sourceDirectory>
    <testSourceDirectory>src/test/scala</testSourceDirectory>
    <plugins>
      <plugin>
        <groupId>net.alchim31.maven</groupId>
        <artifactId>scala-maven-plugin</artifactId>
        <version>3.2.0</version>
        <executions>
          <execution>
            <goals>
              <goal>compile</goal>
              <goal>testCompile</goal>
            </goals>
            <configuration>
              <args>
                <!--<arg>-make:transitive</arg>-->
                <arg>-dependencyfile</arg>
                <arg>${project.build.directory}/.scala_dependencies</arg>
              </args>
            </configuration>
          </execution>
        </executions>
      </plugin>
      <plugin>
        <groupId>org.apache.maven.plugins</groupId>
        <artifactId>maven-surefire-plugin</artifactId>
        <version>2.18.1</version>
        <configuration>
          <useFile>false</useFile>
          <disableXmlReport>true</disableXmlReport>
          <includes>
            <include>**/*Test.*</include>
            <include>**/*Suite.*</include>
          </includes>
        </configuration>
      </plugin>
      <plugin>
        <groupId>org.apache.maven.plugins</groupId>
        <artifactId>maven-shade-plugin</artifactId>
        <version>2.3</version>
        <executions>
          <execution>
            <phase>package</phase>
            <goals>
              <goal>shade</goal>
            </goals>
            <configuration>
              <filters>
                <filter>
                  <artifact>*:*</artifact>
                  <excludes>
                    <exclude>META-INF/*.SF</exclude>
                    <exclude>META-INF/*.DSA</exclude>
                    <exclude>META-INF/*.RSA</exclude>
                  </excludes>
                </filter>
              </filters>
              <transformers>
                <transformer implementation="org.apache.maven.plugins.shade.resource.ManifestResourceTransformer">
                  <mainClass>com.simon.spark.SparkSqlFirst</mainClass>
                </transformer>
              </transformers>
            </configuration>
          </execution>
        </executions>
      </plugin>
    </plugins>
  </build>
</project>

III. Read a Text File (Local Run)

1. Local Scala class

package com.simon.spark

import org.apache.spark.sql.types.{IntegerType, StringType, StructField, StructType}
import org.apache.spark.sql.{Row, SparkSession}

/**
 * Created by Simon on 2017/11/24.
 */
object SparkSqlFirst {

  def main(args: Array[String]): Unit = {
    /*
     * SparkSession: a new entry point for Spark.
     * SparkSession is a concept introduced in Spark 2.0. It gives users a single, unified entry point.
     * In earlier versions of Spark, SparkContext was the main entry point:
     * because RDD was the main API, RDDs were created and manipulated through SparkContext.
     * Every other API needed its own context.
     * For example, Streaming needed StreamingContext,
     * SQL used SQLContext,
     * and Hive used HiveContext.
     * As the Dataset and DataFrame APIs became the standard APIs, they needed an entry point of their own.
     * Spark 2.0 therefore introduced SparkSession as the entry point for the Dataset and DataFrame APIs.
     * SparkSession wraps SparkConf, SparkContext, and SQLContext. For backward compatibility,
     * SQLContext and HiveContext are still available.
     */

    // Create the SparkSession
    val spark = SparkSession.builder().appName("SparkSqlFirst").master("local").getOrCreate()

    // Read the local data file as an RDD of lines (this is an RDD[String], not yet a DataFrame)
    val lineRDD = spark.sparkContext.textFile("F:\\hadoopsourcetest\\sparksqlperson.txt")

    // Map each space-separated line to a Row(id, name, age)
    val rowRDD = lineRDD.map(x => x.split(" ")).map(p => Row(p(0).toInt, p(1).trim, p(2).toInt))

    // Specify the schema of each field directly with StructType
    val schema = StructType(
      List(
        StructField("id", IntegerType, true),
        StructField("name", StringType, true),
        StructField("age", IntegerType, true)
      )
    )

    // Apply the schema to the Row RDD
    val rowDF = spark.createDataFrame(rowRDD, schema)

    // Create a temporary view, replacing it if it already exists
    rowDF.createOrReplaceTempView("sparkPerson")

    val result = spark.sql("select * from sparkPerson")

    // show() prints the table itself and returns Unit, so it is not wrapped in println
    result.show()

    spark.stop()
  }
}
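To try the schema step without the data file, the same technique can be applied to a few in-memory lines. This is a minimal sketch rather than part of the original project: the object name `SchemaSketch`, the helper `toDataFrame`, and the two sample lines are made up for illustration, and the space-separated "id name age" layout is assumed from the class above.

```scala
import org.apache.spark.sql.types.{IntegerType, StringType, StructField, StructType}
import org.apache.spark.sql.{DataFrame, Row, SparkSession}

object SchemaSketch {

  // Same three-column schema as in the class above.
  val schema: StructType = StructType(List(
    StructField("id", IntegerType, nullable = true),
    StructField("name", StringType, nullable = true),
    StructField("age", IntegerType, nullable = true)
  ))

  // Turn space-separated lines into a DataFrame with the schema above.
  def toDataFrame(spark: SparkSession, lines: Seq[String]): DataFrame = {
    val rows = lines.map(_.split(" ")).map(p => Row(p(0).toInt, p(1).trim, p(2).toInt))
    spark.createDataFrame(spark.sparkContext.parallelize(rows), schema)
  }

  def main(args: Array[String]): Unit = {
    val spark = SparkSession.builder().appName("SchemaSketch").master("local").getOrCreate()

    // Made-up sample lines standing in for sparksqlperson.txt.
    val df = toDataFrame(spark, Seq("1 Tom 20", "2 Amy 17"))

    df.printSchema()                                  // column names and types
    df.filter(df("age") >= 18).select("name").show()  // DataFrame API instead of SQL

    spark.stop()
  }
}
```

Calling `printSchema()` is a quick way to confirm that the column names and types match what the StructType declared before writing any SQL against the view.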

2. Run the program

Output:

Error message:

The error is caused by insufficient memory and does not affect the query result.

IV. Read a Text File (Run from a jar)

1. Write the Scala class

package com.simon.spark

import org.apache.spark.sql.types.{IntegerType, StringType, StructField, StructType}
import org.apache.spark.sql.{Row, SparkSession}

/**
 * Created by Simon on 2017/11/24.
 */
object SparkSqlFirst {

  def main(args: Array[String]): Unit = {
    /*
     * SparkSession: a new entry point for Spark.
     * SparkSession is a concept introduced in Spark 2.0. It gives users a single, unified entry point.
     * In earlier versions of Spark, SparkContext was the main entry point:
     * because RDD was the main API, RDDs were created and manipulated through SparkContext.
     * Every other API needed its own context.
     * For example, Streaming needed StreamingContext,
     * SQL used SQLContext,
     * and Hive used HiveContext.
     * As the Dataset and DataFrame APIs became the standard APIs, they needed an entry point of their own.
     * Spark 2.0 therefore introduced SparkSession as the entry point for the Dataset and DataFrame APIs.
     * SparkSession wraps SparkConf, SparkContext, and SQLContext. For backward compatibility,
     * SQLContext and HiveContext are still available.
     */

    // Create the SparkSession
    val spark = SparkSession.builder().appName("SparkSqlFirst").master("local").getOrCreate()

    // Read the data file as an RDD of lines (this is an RDD[String], not yet a DataFrame).
    // The path must exist on the machine that runs the job.
    val lineRDD = spark.sparkContext.textFile("F:\\hadoopsourcetest\\sparksqlperson.txt")

    // Map each space-separated line to a Row(id, name, age)
    val rowRDD = lineRDD.map(x => x.split(" ")).map(p => Row(p(0).toInt, p(1).trim, p(2).toInt))

    // Specify the schema of each field directly with StructType
    val schema = StructType(
      List(
        StructField("id", IntegerType, true),
        StructField("name", StringType, true),
        StructField("age", IntegerType, true)
      )
    )

    // Apply the schema to the Row RDD
    val rowDF = spark.createDataFrame(rowRDD, schema)

    // Create a temporary view, replacing it if it already exists
    rowDF.createOrReplaceTempView("sparkPerson")

    val result = spark.sql("select * from sparkPerson")

    // show() prints the table itself and returns Unit, so it is not wrapped in println
    result.show()

    spark.stop()
  }
}
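The class above hard-codes both the master and a Windows path. When the job is launched through spark-submit, one possible variation (not part of the original project) is to take the input path from the first command-line argument and leave the choice of master to spark-submit. The object name `SparkSqlArgsSketch`, the helper `inputPath`, and the fallback file name are hypothetical.

```scala
import org.apache.spark.sql.SparkSession

object SparkSqlArgsSketch {

  // Return the first argument as the input path, or the fallback if none was given.
  def inputPath(args: Array[String], fallback: String): String =
    args.headOption.getOrElse(fallback)

  def main(args: Array[String]): Unit = {
    // No .master(...) here: spark-submit supplies it (via --master or its default).
    val spark = SparkSession.builder().appName("SparkSqlArgsSketch").getOrCreate()

    // Hypothetical fallback file name, used only when no argument is passed.
    val path = inputPath(args, "sparksqlperson.txt")

    val lines = spark.sparkContext.textFile(path)
    println(s"Read ${lines.count()} lines from $path")

    spark.stop()
  }
}
```

Arguments placed after the jar path on the spark-submit command line are passed to `main` as `args`, so the same jar can be pointed at different files without rebuilding.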

2. Package the project

3. Upload the jar

4. Run spark-submit

Command:

./spark-submit \
  --class com.simon.spark.SparkSqlFirst \
  /usr/simonsource/spark-sql-first-1.0-SNAPSHOT.jar

5. Result:

V. Practice Spark SQL API Operations

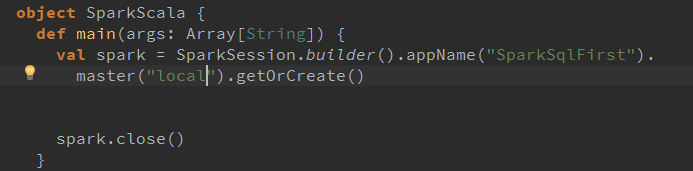

1. Define the main method

def main(args: Array[String]): Unit = {
  val spark = SparkSession.builder().appName("SparkSqlFirst").master("local").getOrCreate()
  spark.close()
}

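2. Read the file and convert it to Student objects

1) Read the file to create an RDD

2) Parse the RDD to create Student objects

This step requires a case class.

Import spark.implicits._ inside the method.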
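The two steps above can be sketched as follows. This is a minimal sketch, not the author's original code: the `Student` fields (id, name, age), the whitespace-separated file layout, and the names `StudentLoader`, `parseStudent`, and `loadStudents` are assumptions modeled on the earlier example.

```scala
import org.apache.spark.sql.{DataFrame, SparkSession}

// Assumed record layout: "id name age", separated by whitespace.
// The case class is defined at the top level (not inside a method)
// so that Spark can derive an encoder for it.
case class Student(id: Int, name: String, age: Int)

object StudentLoader {

  // Parse one line into a Student; returns None for malformed lines.
  def parseStudent(line: String): Option[Student] =
    line.trim.split("\\s+") match {
      case Array(id, name, age) =>
        scala.util.Try(Student(id.toInt, name, age.toInt)).toOption
      case _ => None
    }

  // Read the file as an RDD of lines, parse each line, and convert to a DataFrame.
  def loadStudents(spark: SparkSession, path: String): DataFrame = {
    import spark.implicits._ // brings toDF() into scope for RDD[Student]
    spark.sparkContext
      .textFile(path)
      .flatMap(line => parseStudent(line).toList) // silently drops malformed lines
      .toDF()
  }
}
```

Compared with the StructType approach used earlier, the case class lets Spark infer the column names and types by reflection, at the cost of fixing the schema at compile time.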

3) Register the Student objects as a temporary table
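Registering and querying might look like the sketch below. The view name `student`, the age filter, the object name `TempViewSketch`, and the in-memory sample rows are illustrative assumptions, not taken from the original project.

```scala
import org.apache.spark.sql.{DataFrame, SparkSession}

object TempViewSketch {

  // Register the DataFrame as a temporary view, then query it with SQL.
  def adults(spark: SparkSession, students: DataFrame): DataFrame = {
    students.createOrReplaceTempView("student")
    spark.sql("SELECT name, age FROM student WHERE age >= 18")
  }

  def main(args: Array[String]): Unit = {
    val spark = SparkSession.builder().appName("TempViewSketch").master("local").getOrCreate()
    import spark.implicits._ // needed for toDF on a local Seq

    // Made-up sample data standing in for the parsed Student objects.
    val students = Seq((1, "Tom", 20), (2, "Amy", 17)).toDF("id", "name", "age")

    adults(spark, students).show()
    spark.stop()
  }
}
```

A temporary view is scoped to the SparkSession that created it, so it disappears when the session is stopped.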

4) Call it from the main function and run

5) Result