Spark_SQL项目编码(Java)

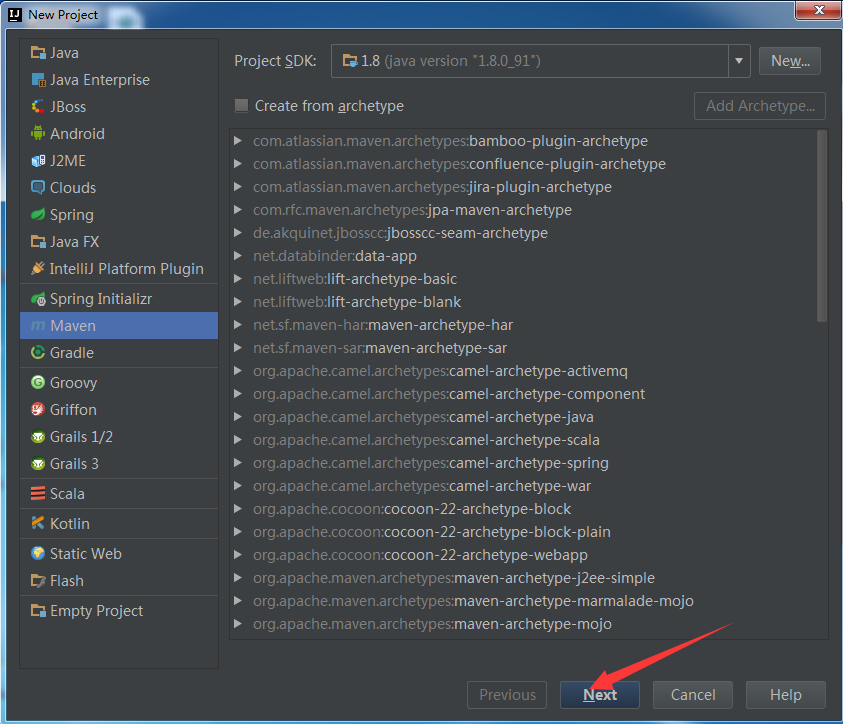

一、 新建项目maven项目

二、 导入pom文件

<?xml version="1.0" encoding="UTF-8"?>

<project xmlns="http://maven.apache.org/POM/4.0.0"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">

<modelVersion>4.0.0</modelVersion>

<groupId>com.simon.spark</groupId>

<artifactId>spark-sql-java</artifactId>

<version>1.0-SNAPSHOT</version>

<properties>

<maven.compiler.source>1.8</maven.compiler.source>

<maven.compiler.target>1.8</maven.compiler.target>

<encoding>UTF-8</encoding>

</properties>

<dependencies>

<dependency>

<groupId>org.apache.spark</groupId>

<artifactId>spark-core_2.11</artifactId>

<version>2.1.1</version>

</dependency>

<dependency>

<groupId>org.apache.spark</groupId>

<artifactId>spark-sql_2.11</artifactId>

<version>2.1.1</version>

</dependency>

<dependency>

<groupId>org.apache.spark</groupId>

<artifactId>spark-streaming_2.11</artifactId>

<version>2.1.1</version>

</dependency>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-client</artifactId>

<version>2.6.4</version>

</dependency>

</dependencies>

</project>

三、 新建Java类

package com.simon.spark;

import org.apache.spark.api.java.JavaRDD;

import org.apache.spark.api.java.JavaSparkContext;

import org.apache.spark.api.java.function.Function;

import org.apache.spark.sql.Dataset;

import org.apache.spark.sql.Row;

import org.apache.spark.sql.RowFactory;

import org.apache.spark.sql.SparkSession;

import org.apache.spark.sql.types.DataTypes;

import org.apache.spark.sql.types.StructField;

import org.apache.spark.sql.types.StructType;

import java.util.ArrayList;

import java.util.List;

/**

* Created by Simon on 2017/11/25.

*/

public class SparkSqlJavaFirst {

public static void main(String[] args) {

//初始化SparkContext

/* SparkConf conf = new SparkConf();

conf.setAppName("SparkSqlJavaFirst").setMaster("local");

JavaSparkContext sc = new JavaSparkContext(conf);*/

SparkSession spark = SparkSession.builder().appName("SparkSqlJavaFirst").master("local").getOrCreate();

JavaSparkContext sc = new JavaSparkContext(spark.sparkContext());

JavaRDD<String> lines = sc.textFile("F:\\hadoopsourcetest\\sparksqlperson.txt");

JavaRDD<Row> coms = lines.map(new Function<String,Row>()

{

@Override

public Row call(String line) throws Exception {

String[] splited = line.split(" ");

return RowFactory.create(Integer.parseInt(splited[0]),splited[1].trim(),Integer.parseInt(splited[2]));

}

});

List<StructField> structFieldList = new ArrayList<StructField>();

structFieldList.add(DataTypes.createStructField("ID", DataTypes.IntegerType,true));

structFieldList.add(DataTypes.createStructField("NAME", DataTypes.StringType,true));

structFieldList.add(DataTypes.createStructField("AGE", DataTypes.IntegerType,true));

//构建表的StructType

StructType structType = DataTypes.createStructType(structFieldList);

//将表转换成DataFrame

Dataset<Row> dataFrame = spark.createDataFrame(coms, structType);

//创建临时表

dataFrame.createOrReplaceTempView("person");

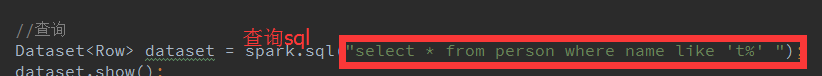

//查询

Dataset<Row> dataset = spark.sql("select * from person where name like 't%' ");

dataset.show();

sc.close();

}

}

四、 运行

五、 修改master和hdfs文件

六、 打包上传

七、 上传

八、 运行

./spark-submit \ --class com.simon.spark.SparkSqlJavaFirst \ /usr/simonsource/spark-sql-java-1.0-SNAPSHOT.jar

九、 运行结果

SparkSQL API参考(本文参考):

http://blog.csdn.net/slq1023/article/details/51100777