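My understanding: a bottleneck uses 1x1 convolutions to change the number of channels substantially. In residual networks, the purpose is to reduce the number of trainable parameters so that the network can be made deeper.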

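In deep learning one often hears the terms Bottleneck Layer, Bottleneck Features, or Bottleneck Block. The idea is easy to grasp: the input and output dimensions differ greatly, like a bottleneck that is narrow at one end and wide at the other. However, hardly anyone can point to an authoritative source for the term. This article traces Bottleneck Layer, Bottleneck Features, and Bottleneck Block back to their origins to give a fuller picture of them.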

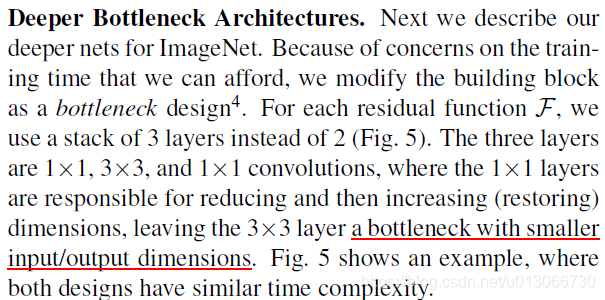

First, consider how a survey article on the efficient processing of deep neural networks describes the Bottleneck Building Block: "In order to reduce the number of weights, 1x1 filters are applied as a "bottleneck" to reduce the number of channels for each filter". The 1x1 filters in this sentence originate from "Network In Network"; they can change the output dimension (channels), either raising or reducing it. Next, let us look at how ResNet describes the Bottleneck Building Block:

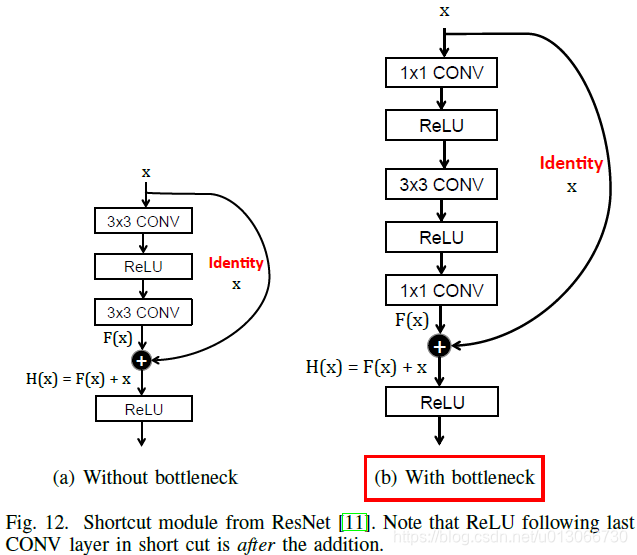
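Both descriptions rely on a 1x1 filter changing the number of channels while leaving the spatial size untouched. The following is a minimal sketch of that idea in plain Python, not any library's implementation: the helper `conv1x1`, the toy input `x`, and the weights `w` are all hypothetical names and values chosen for illustration.

```python
def conv1x1(feature_map, weights):
    """Apply a 1x1 convolution (no bias, stride 1).

    feature_map: nested list of shape [C_in][H][W]
    weights:     nested list of shape [C_out][C_in]
    returns:     nested list of shape [C_out][H][W]
    """
    c_in = len(feature_map)
    height = len(feature_map[0])
    width = len(feature_map[0][0])
    output = []
    for row in weights:  # one row of weights per output channel
        assert len(row) == c_in
        # Each output pixel is a weighted sum over the input channels at the same position.
        channel = [
            [sum(row[c] * feature_map[c][y][x] for c in range(c_in))
             for x in range(width)]
            for y in range(height)
        ]
        output.append(channel)
    return output


# Hypothetical toy input: 4 channels, 2x2 spatial size; channel c is filled with c + 1.
x = [[[float(c + 1)] * 2 for _ in range(2)] for c in range(4)]
# Hypothetical weights that reduce 4 channels to 2 by averaging pairs of channels.
w = [[0.5, 0.5, 0.0, 0.0],
     [0.0, 0.0, 0.5, 0.5]]
y = conv1x1(x, w)
print(len(x), "->", len(y), "channels; spatial size stays", len(y[0]), "x", len(y[0][0]))
```

Using a weight matrix with more rows than columns would raise the channel count instead, which is the "elevation" case mentioned above.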

The corresponding figure is shown below:

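As can be seen, in the right-hand figure the 1x1 filters change the dimension (channels): in the ResNet bottleneck design the first 1x1 layer reduces it and the last 1x1 layer raises it again, so the channel count inside the block differs greatly from the count at its input and output. Continuing, as shown in the figure below: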
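As a rough check of why this design saves parameters, the weights can simply be counted. The sketch below assumes the channel sizes of the bottleneck example in the ResNet paper (256 channels outside the block, 64 inside) and compares it with a hypothetical block of two 3x3 convolutions kept at 256 channels. That comparison block, the helper `conv_weights`, and the decision to ignore biases and batch normalization are illustrative assumptions, not something stated in the text above.

```python
def conv_weights(kernel_size, c_in, c_out):
    """Number of weights in a k x k convolution, ignoring biases."""
    return kernel_size * kernel_size * c_in * c_out


wide, narrow = 256, 64  # assumed channel counts outside / inside the bottleneck

# Bottleneck block: 1x1 reduce -> 3x3 at the narrow width -> 1x1 restore.
bottleneck = (conv_weights(1, wide, narrow)
              + conv_weights(3, narrow, narrow)
              + conv_weights(1, narrow, wide))

# Hypothetical comparison: two 3x3 convolutions kept at the wide channel count.
plain = 2 * conv_weights(3, wide, wide)

print("bottleneck block weights:", bottleneck)
print("plain block weights:", plain)
print("ratio: %.1f" % (plain / bottleneck))
```

Under these assumptions the bottleneck block has 69,632 weights against 1,179,648 for the two wide 3x3 layers, roughly a 17-fold reduction, which is what makes stacking many more blocks affordable.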

Another paper, "Improved Bottleneck Features Using Pretrained Deep Neural Networks", gives a brief description of bottleneck features: "Bottleneck features are generated from a multi-layer perceptron in which one of the internal layers has a small number of hidden units, relative to the size of the other layers."
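The quoted definition can be illustrated with a small multi-layer perceptron whose narrow internal layer supplies the features. This is only a sketch under stated assumptions: the layer sizes, the tanh activation, the random untrained weights, and the helper names `make_layer`, `dense`, and `bottleneck_features` are hypothetical and are not taken from the paper.

```python
import math
import random


def make_layer(n_in, n_out, rng):
    """Random weight matrix [n_out][n_in] and zero bias (untrained, illustration only)."""
    weights = [[rng.uniform(-0.1, 0.1) for _ in range(n_in)] for _ in range(n_out)]
    bias = [0.0] * n_out
    return weights, bias


def dense(x, layer):
    """Fully connected layer followed by tanh (the activation is an assumption)."""
    weights, bias = layer
    return [math.tanh(sum(w * v for w, v in zip(row, x)) + b)
            for row, b in zip(weights, bias)]


def bottleneck_features(x, layers, bottleneck_index):
    """Run x through the layers and return the activations of the bottleneck layer."""
    for i, layer in enumerate(layers):
        x = dense(x, layer)
        if i == bottleneck_index:
            return x
    raise ValueError("bottleneck_index is out of range")


rng = random.Random(0)
sizes = [40, 128, 8, 128, 10]  # hypothetical: input, hidden, bottleneck, hidden, output
layers = [make_layer(a, b, rng) for a, b in zip(sizes, sizes[1:])]
bottleneck_index = 1  # the layer mapping 128 -> 8 units

frame = [rng.uniform(-1.0, 1.0) for _ in range(sizes[0])]  # hypothetical input vector
features = bottleneck_features(frame, layers, bottleneck_index)
print(len(frame), "->", len(features))
```

In practice the network would first be trained on some task, and the layers after the bottleneck would then be discarded when extracting features; the sketch skips training entirely and only shows where the features are read out.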

Reposted from: https://blog.csdn.net/u011501388/article/details/80389164