Using bottleneck features from a pretrained network

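Networks trained on large datasets generally have very good feature-extraction ability. Take VGG16 as an example: it extracts image features with its convolutional layers and then classifies them with the fully connected layers that follow. Here we use the convolutional layers of VGG16 to extract features from our own image dataset, so that a classifier trained on those features performs better.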

Running the following code:

from keras.applications.vgg16 import VGG16

from keras.utils import plot_model

model = VGG16(include_top=True, weights='imagenet')

model.summary()

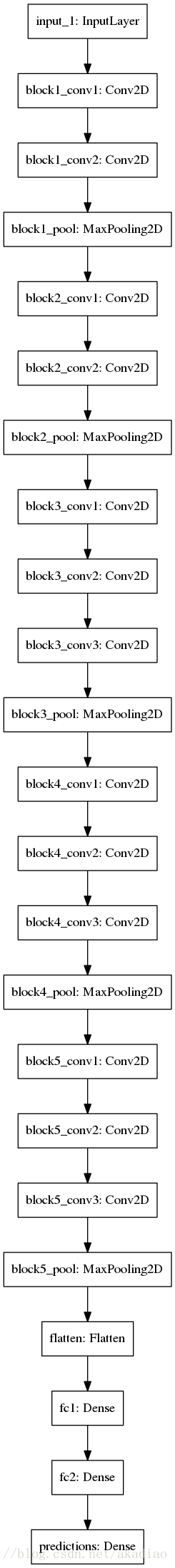

plot_model(model, to_file='VGG16.png')

The VGG16 network structure is:

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

input_1 (InputLayer) (None, 224, 224, 3) 0

_________________________________________________________________

block1_conv1 (Conv2D) (None, 224, 224, 64) 1792

_________________________________________________________________

block1_conv2 (Conv2D) (None, 224, 224, 64) 36928

_________________________________________________________________

block1_pool (MaxPooling2D) (None, 112, 112, 64) 0

_________________________________________________________________

block2_conv1 (Conv2D) (None, 112, 112, 128) 73856

_________________________________________________________________

block2_conv2 (Conv2D) (None, 112, 112, 128) 147584

_________________________________________________________________

block2_pool (MaxPooling2D) (None, 56, 56, 128) 0

_________________________________________________________________

block3_conv1 (Conv2D) (None, 56, 56, 256) 295168

_________________________________________________________________

block3_conv2 (Conv2D) (None, 56, 56, 256) 590080

_________________________________________________________________

block3_conv3 (Conv2D) (None, 56, 56, 256) 590080

_________________________________________________________________

block3_pool (MaxPooling2D) (None, 28, 28, 256) 0

_________________________________________________________________

block4_conv1 (Conv2D) (None, 28, 28, 512) 1180160

_________________________________________________________________

block4_conv2 (Conv2D) (None, 28, 28, 512) 2359808

_________________________________________________________________

block4_conv3 (Conv2D) (None, 28, 28, 512) 2359808

_________________________________________________________________

block4_pool (MaxPooling2D) (None, 14, 14, 512) 0

_________________________________________________________________

block5_conv1 (Conv2D) (None, 14, 14, 512) 2359808

_________________________________________________________________

block5_conv2 (Conv2D) (None, 14, 14, 512) 2359808

_________________________________________________________________

block5_conv3 (Conv2D) (None, 14, 14, 512) 2359808

_________________________________________________________________

block5_pool (MaxPooling2D) (None, 7, 7, 512) 0

_________________________________________________________________

flatten (Flatten) (None, 25088) 0

_________________________________________________________________

fc1 (Dense) (None, 4096) 102764544

_________________________________________________________________

fc2 (Dense) (None, 4096) 16781312

_________________________________________________________________

predictions (Dense) (None, 1000) 4097000

=================================================================

Total params: 138,357,544

Trainable params: 138,357,544

Non-trainable params: 0

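_________________________________________________________________

When we only need the convolutional part of VGG16, we drop the fully connected layers on top, that is, we replace include_top=True with include_top=False in the call VGG16(include_top=True, weights='imagenet').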
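The parameter counts in the summary above can be checked by hand. The sketch below is only an illustration (the helper names `conv2d_params` and `dense_params` are made up for this example): it assumes 3x3 kernels with one bias per output channel, and it also shows how many parameters remain once the fully connected layers are dropped.

```python
def conv2d_params(kernel, in_ch, out_ch):
    """Weights plus one bias per output channel for a square-kernel Conv2D."""
    return kernel * kernel * in_ch * out_ch + out_ch


def dense_params(in_units, out_units):
    """Weights plus one bias per output unit for a Dense layer."""
    return in_units * out_units + out_units


# (input channels, output channels) of the 13 convolutional layers, block1 to block5
conv_channels = [
    (3, 64), (64, 64),
    (64, 128), (128, 128),
    (128, 256), (256, 256), (256, 256),
    (256, 512), (512, 512), (512, 512),
    (512, 512), (512, 512), (512, 512),
]

# Parameters of the convolutional base (what include_top=False keeps)
conv_total = sum(conv2d_params(3, i, o) for i, o in conv_channels)

# Parameters of fc1, fc2 and predictions (what include_top=False drops)
fc_total = (dense_params(7 * 7 * 512, 4096)
            + dense_params(4096, 4096)
            + dense_params(4096, 1000))

print(conv_total)             # convolutional base only
print(conv_total + fc_total)  # full network, compare with "Total params"
```

Most of the parameters sit in the fully connected layers, so the convolutional base alone is much smaller than the full model.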

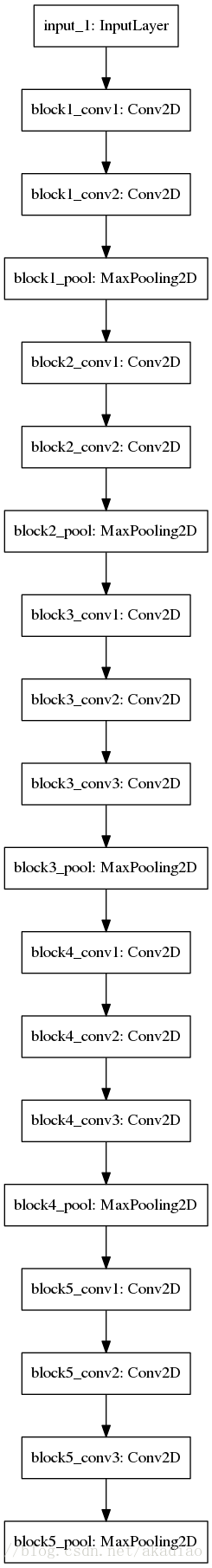

At this point the network keeps only its convolutional layers, as shown in the figure above.

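We then use .flow_from_directory() to generate batches from the image files, run them through the convolutional part of the network to obtain the bottleneck features of the training and validation sets, and store those features in numpy arrays.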
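The spatial size of the bottleneck features depends on the input size. The sketch below assumes that only the five 2x2 max-pooling layers change the height and width (each halves them, rounding down) and that the last block has 512 channels, as in the summary above. The function name `vgg16_bottleneck_shape` is hypothetical.

```python
def vgg16_bottleneck_shape(height, width, n_pools=5, channels=512):
    """Output shape of the VGG16 convolutional base for one image."""
    for _ in range(n_pools):
        # A 2x2 max pool with stride 2 halves each side, rounding down
        height, width = height // 2, width // 2
    return (height, width, channels)


shape_150 = vgg16_bottleneck_shape(150, 150)  # input size used by the script in this article
shape_224 = vgg16_bottleneck_shape(224, 224)  # default VGG16 input size
print(shape_150, shape_224)
```

For 224x224 inputs this reproduces the (7, 7, 512) output of block5_pool in the summary; for 150x150 inputs it gives the (4, 4, 512) per-image shape of the saved features.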

The dataset used here is very small: each folder holds only 30 images. The directory layout is:

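train\0\

train\1\

validation\0\

validation\1\

When generating the bottleneck features for the training and validation sets, steps was set to 200 and 80 respectively, so the resulting bottleneck features have shapes (6000, 4, 4, 512) and (2400, 4, 4, 512). Each set has 60 images and the batch size is 32, so one pass over a set takes two steps (batches of 32 and 28 images), an average of 30 images per step: 200*30=6000 and 80*30=2400.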
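The sample counts above follow from how the directory iterator walks the data. The simulation below uses numpy only and does not call Keras. It assumes an unshuffled iterator that lists the files class by class, emits a short final batch at the end of each pass, and then starts over. `simulate_class_order` is a hypothetical helper written for this illustration.

```python
import numpy as np


def simulate_class_order(n_per_class, n_classes, batch_size, steps):
    """Class index of every sample drawn in `steps` batches from an
    unshuffled, endlessly cycling iterator (assumed behaviour)."""
    # Files are listed class by class: all of class 0, then all of class 1, ...
    classes = np.repeat(np.arange(n_classes), n_per_class)
    n = len(classes)
    drawn, start = [], 0
    for _ in range(steps):
        end = min(start + batch_size, n)
        drawn.append(classes[start:end])
        start = 0 if end >= n else end  # restart after the last (short) batch
    return np.concatenate(drawn)


train_order = simulate_class_order(30, 2, batch_size=32, steps=200)
val_order = simulate_class_order(30, 2, batch_size=32, steps=80)
print(len(train_order), len(val_order))
print(train_order[:60])
```

Under these assumptions the features come out as repeating blocks of 30 class-0 samples followed by 30 class-1 samples, so any label array has to follow the same order.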

The shape of the saved bottleneck features can be checked with:

import numpy as np

test = np.load('bottleneck_features_train.npy')

print(test.shape)

The bottleneck features for the training and validation sets are generated as follows:

#!/usr/bin/python

# coding:utf8

from keras.preprocessing.image import ImageDataGenerator

import numpy as np

from keras.applications.vgg16 import VGG16

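# include_top: whether to keep the 3 fully connected layers at the top of the network

# weights='imagenet' loads the weights pretrained on ImageNet

model = VGG16(include_top=False, weights='imagenet')

# Load the pretrained weights from a local file (redundant with weights='imagenet' above; the .h5 file must be in the working directory)

model.load_weights('vgg16_weights_tf_dim_ordering_tf_kernels_notop.h5')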

datagen = ImageDataGenerator(rescale=1./255)

# Training image generator: takes a directory path and yields batches of preprocessed (here: rescaled) images

train_generator = datagen.flow_from_directory('train',

                                              target_size=(150, 150),

                                              batch_size=32,

                                              class_mode=None,

                                              shuffle=False)

# Validation image generator

validation_generator = datagen.flow_from_directory('validation',

                                                   target_size=(150, 150),

                                                   batch_size=32,

                                                   class_mode=None,

                                                   shuffle=False)

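# Compute the bottleneck features

# predict_generator runs the model on batches drawn from a generator; the generator should yield the same kind of data that predict_on_batch accepts

# steps is the number of batches to draw from the generator

bottleneck_features_train = model.predict_generator(train_generator, steps=200)

print(bottleneck_features_train)

# Save the features as a numpy array (passing the file name lets numpy open the file in binary mode)

np.save('bottleneck_features_train.npy', bottleneck_features_train)

bottleneck_features_validation = model.predict_generator(validation_generator, steps=80)

# Validation set: 80 steps of 30 images on average give 2400 feature maps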

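np.save('bottleneck_features_validation.npy', bottleneck_features_validation)

Next we train our fully connected network. The bottleneck features of the training and validation sets contain 200*30=6000 and 80*30=2400 samples respectively. Because the generators are not shuffled and cycle through the 60 images of each set repeatedly, the features arrive as alternating blocks of 30 class-0 and 30 class-1 samples, so the corresponding labels are set to:

train_labels = np.array(([0]*30 + [1]*30) * 100)

validation_labels = np.array(([0]*30 + [1]*30) * 40)

We then load the saved data and start training the fully connected network: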


#!/usr/bin/python

# coding:utf8

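# Train the fully connected classifier on the saved bottleneck features. (Fine-tuning, by contrast, starts from a pretrained network and retrains a small part of its weights on the new dataset.)

from keras.models import Sequential

from keras.layers import Dropout, Flatten, Dense

import numpy as np

train_data = np.load('bottleneck_features_train.npy')

# The features come in repeating blocks of 30 class-0 samples followed by 30 class-1 samples

train_labels = np.array(([0]*30 + [1]*30) * 100)

print(train_labels)

validation_data = np.load('bottleneck_features_validation.npy')

validation_labels = np.array(([0]*30 + [1]*30) * 40)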

model = Sequential()

model.add(Flatten(input_shape=train_data.shape[1:]))

model.add(Dense(256, activation='relu'))

model.add(Dropout(0.5))

model.add(Dense(1, activation='sigmoid'))

model.compile(optimizer='rmsprop', loss='binary_crossentropy', metrics=['accuracy'])

model.fit(train_data, train_labels, epochs=5, batch_size=32, validation_data=(validation_data,validation_labels))

model.save_weights('bottleneck_fc_model.h5')
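To make clear what the small classifier above computes at prediction time, here is a numpy-only sketch of its forward pass. It is an illustration under stated assumptions, not the Keras implementation: the weights are random placeholders rather than the trained values in bottleneck_fc_model.h5, dropout is left out because it is inactive at inference, and `head_forward` is a hypothetical name.

```python
import numpy as np


def head_forward(features, w1, b1, w2, b2):
    """Flatten -> Dense(256, relu) -> Dense(1, sigmoid), inference only."""
    x = features.reshape(len(features), -1)    # (N, 4, 4, 512) -> (N, 8192)
    hidden = np.maximum(x @ w1 + b1, 0.0)      # ReLU activation
    logits = hidden @ w2 + b2
    return 1.0 / (1.0 + np.exp(-logits))       # probability of class 1


rng = np.random.default_rng(0)
features = rng.random((3, 4, 4, 512))          # stand-in for 3 bottleneck feature maps

# Placeholder weights with the same shapes as the layers defined above
w1 = rng.normal(scale=0.01, size=(4 * 4 * 512, 256))
b1 = np.zeros(256)
w2 = rng.normal(scale=0.01, size=(256, 1))
b2 = np.zeros(1)

probs = head_forward(features, w1, b1, w2, b2)
n_params = w1.size + b1.size + w2.size + b2.size
print(probs.shape, n_params)
```

The head has about 2.1 million trainable parameters, far fewer than the fully connected layers of the original VGG16, which is part of why it trains quickly on precomputed features.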