版权声明:本文为博主原创文章,未经博主允许不得转载。 https://blog.csdn.net/wangxingfan316/article/details/79696297

1标识化处理

何为标识化处理?实际上就是一个将原生字符串分割成一系列有意义的分词,其复杂性根据不同NLP应用而异,目标语言的复杂性也占了很大部分,例如中文的标识化是要比英文要复杂。

word_tokenize()是一种通用的,面向所有语料库的标识化方法,基本能应付绝大多数。

reges_tokenize()基于正则表达式,自定义程度更高。

#!/user/bin/env python

#-*- coding:utf-8 -*-

import re

import operator

import nltk

string = "Thanks to a hands-on guide introducing programming fundamentals alongside topics in computational linguistics. plus comprehensive API documentation. NLTK is suitable for linguists ."

w = re.split('\W+',string)#匹配所有非单词性字符

word = nltk.word_tokenize(string)#分析单词包括标点符号

sentence = nltk.sent_tokenize(string)#分词句子,只能按照英文.分割

w2 = nltk.tokenize.regexp_tokenize(string,'\w+')#正则去匹配所有字符数字

w3 = nltk.tokenize.regexp_tokenize(string,'\d+')#匹配所有数字

print(w)

print(word)

print(sentence)

print(w2)

print(w3)结果为:

['Thanks', 'to', 'a', 'hands', 'on', 'guide', 'introducing', 'programming', 'fundamentals', 'alongside', 'topics', 'in', 'computational', 'linguistics', 'plus', 'comprehensive', 'API', 'documentation', 'NLTK', 'is', 'suitable', 'for', 'linguists', '']

['Thanks', 'to', 'a', 'hands-on', 'guide', 'introducing', 'programming', 'fundamentals', 'alongside', 'topics', 'in', 'computational', 'linguistics', '.', 'plus', 'comprehensive', 'API', 'documentation', '.', 'NLTK', 'is', 'suitable', 'for', 'linguists', '.']

['Thanks to a hands-on guide introducing programming fundamentals alongside topics in computational linguistics.', 'plus comprehensive API documentation.', 'NLTK is suitable for linguists .']

['Thanks', 'to', 'a', 'hands', 'on', 'guide', 'introducing', 'programming', 'fundamentals', 'alongside', 'topics', 'in', 'computational', 'linguistics', 'plus', 'comprehensive', 'API', 'documentation', 'NLTK', 'is', 'suitable', 'for', 'linguists']

[]

['Thanks', 'to', 'a', 'hands', '-', 'on', 'guide', 'introducing', 'programming', 'fundamentals', 'alongside', 'topics', 'in', 'computational', 'linguistics', '.', 'plus', 'comprehensive', 'API', 'documentation', '.', 'NLTK', 'is', 'suitable', 'for', 'linguists', '.']2词干提取

通常会使用词干提取忽略语法上的变化,将其归结为相同的词根,词干提取法较为简单,对于复杂的NLP任务,就必须用到词形还原(lemmatization),Porter词干提取器一般回答道70%以上的精准度。基本的已经够用了。

from nltk.stem import PorterStemmer

pst = PorterStemmer()

pst.stem('looking')

#Out[4]: 'look'3词形还原

词形还原是一种更条理化的方法,它涵盖了词根所有的方法和变化形式,词形还原操作会利用上下文语境和词性来确定单词的变化形式,并运用不同的标准化规则,根据词性来获取相关的词根(lemma)。

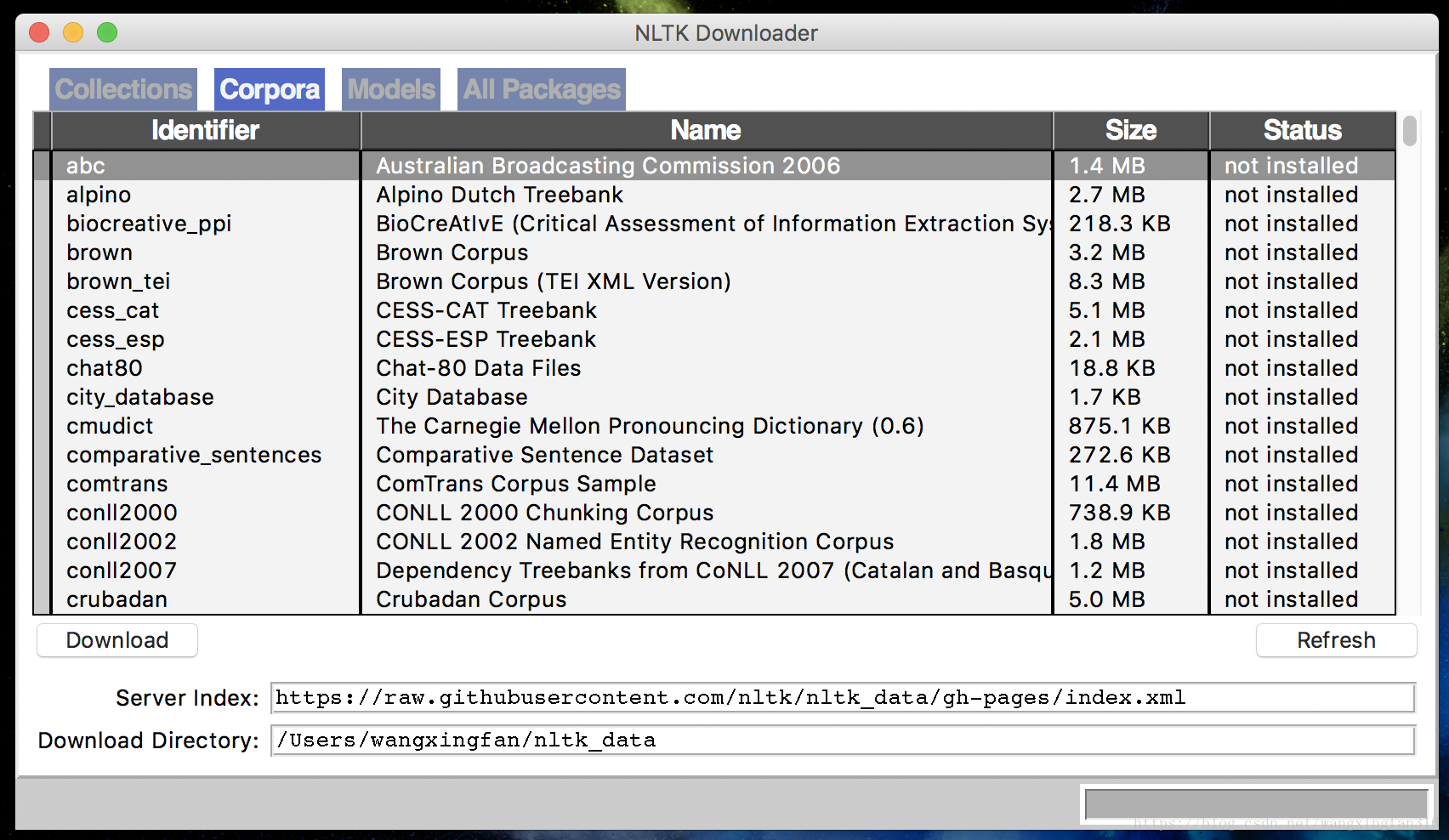

首先下载wordnet语意字典

import nltk

nltk.download()弹出一个窗口,选择wordnet的两个库下载即可。

在Mac执行出错窗口卡顿,另一种解决办法直接从网站下载:

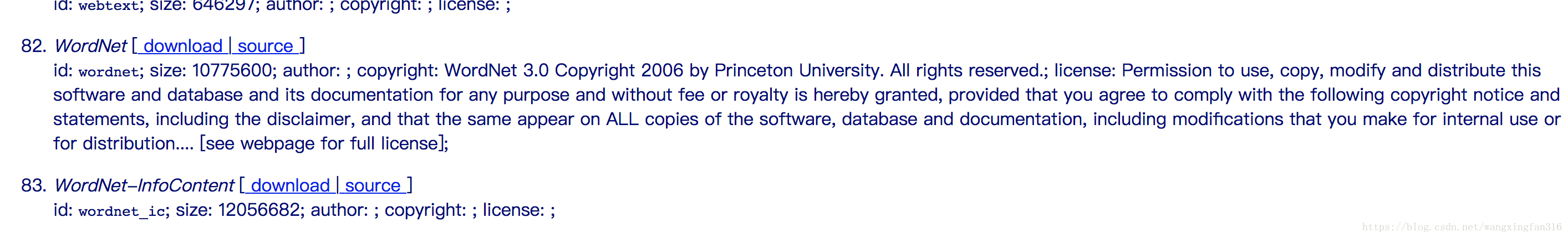

http://www.nltk.org/nltk_data/

选择:

下载后放到文件目录即可。

4停用词

停用词的移除是不同NLP应用中的常用预处理步骤,简单的理解就是停用语料库中所有文档中都会出现的单词。

from nltk.corpus import stopwords

stoplist = stopwords.words('english')#选择语言

text = "This is just a test"

cleanlist = [word for word in text.split() if word not in stoplist]#移除停用词

cleanlist

Out[13]: ['This', 'test']

5 edit_distance算法拼写纠错

举例说明,此算法主要是计算两个词之间要经过【至少】多少次转换才能转变为另一个词。

例如从rain到shine要经过三个步骤:"rain" -> "sain" -> "shin" -> "shine"

from nltk.metrics import edit_distance

edit_distance('he','she')

Out[18]: 1

edit_distance('rain','shine')

Out[19]: 3

edit_distance('a','b')

Out[20]: 1

edit_distance('like','love')

Out[21]: 2

官方文档如下:

def edit_distance(s1, s2, substitution_cost=1, transpositions=False):

"""

Calculate the Levenshtein edit-distance between two strings.

The edit distance is the number of characters that need to be

substituted, inserted, or deleted, to transform s1 into s2. For

example, transforming "rain" to "shine" requires three steps,

consisting of two substitutions and one insertion:

"rain" -> "sain" -> "shin" -> "shine". These operations could have

been done in other orders, but at least three steps are needed.

Allows specifying the cost of substitution edits (e.g., "a" -> "b"),

because sometimes it makes sense to assign greater penalties to substitutions.

This also optionally allows transposition edits (e.g., "ab" -> "ba"),

though this is disabled by default.

:param s1, s2: The strings to be analysed

:param transpositions: Whether to allow transposition edits

:type s1: str

:type s2: str

:type substitution_cost: int

:type transpositions: bool

:rtype int

"""

# set up a 2-D array

len1 = len(s1)

len2 = len(s2)

lev = _edit_dist_init(len1 + 1, len2 + 1)

# iterate over the array

for i in range(len1):

for j in range(len2):

_edit_dist_step(lev, i + 1, j + 1, s1, s2,

substitution_cost=substitution_cost, transpositions=transpositions)

return lev[len1][len2]