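First, all hail the great Kaiming He. When I first learned that FCN could do semantic segmentation, I did wonder whether it could be combined with Faster R-CNN. It was only a passing thought, though; I never worked it through and probably couldn't have. Reading Mask R-CNN made everything click, and all I could do was admire it. This post only covers how to use it; I'll write about how it works later.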

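I won't go over the required development environment, since it is listed in the code repository. If you only want to train on your own dataset, you don't need to download the COCO tools, which are rather painful to install on Windows.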

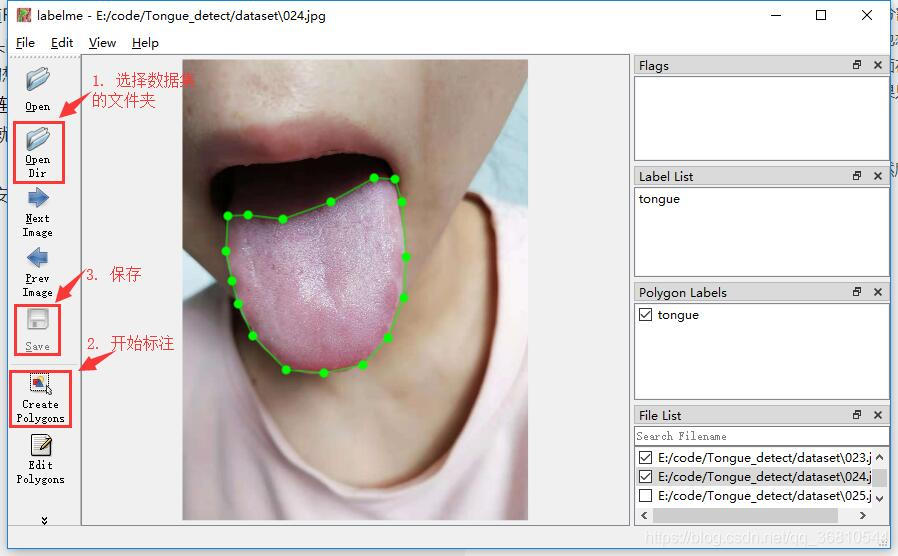

I. Dataset Annotation

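Use labelme to annotate the images. Install PyQt5 first, and then labelme installs without trouble. During installation you may hit an error like "XXX is not valid utf-8 encoding". This is a problem with Chinese-locale Windows: go to the place the error points to and change the encoding from "utf-8" to "gb2312". (I forgot to take a screenshot; if it still fails, search for the error message.) Once installed, just launch labelme.exe. Open the folder containing the dataset and annotate the images by hand, one by one. Pay attention to the labels when saving: if an image contains several objects of the same class, label them label_1, label_2, and so on. For example, two cats become cat_1 and cat_2. Each of my images contains only one object, so I didn't have to bother with this.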
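When class IDs are built later, the instance suffix in names like cat_1 and cat_2 has to be stripped off again. Below is a minimal sketch of that step. The function name `split_instance_label` is hypothetical. It assumes the suffix is always an underscore followed by digits, and it treats labels without a suffix (such as `tongue`) as instance 1.

```python
import re

# Matches "<class>_<number>", e.g. "cat_2"; the class part may itself contain underscores.
_INSTANCE_RE = re.compile(r"^(?P<cls>.+)_(?P<idx>\d+)$")


def split_instance_label(label):
    """Split a labelme instance label like 'cat_2' into ('cat', 2).

    Labels without a numeric suffix (e.g. 'tongue') are treated as instance 1.
    """
    m = _INSTANCE_RE.match(label)
    if m is None:
        return label, 1
    return m.group("cls"), int(m.group("idx"))
```

For example, `split_instance_label("red_car_3")` gives `("red_car", 3)`. The greedy class group keeps every underscore except the last one.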

II. Data Processing

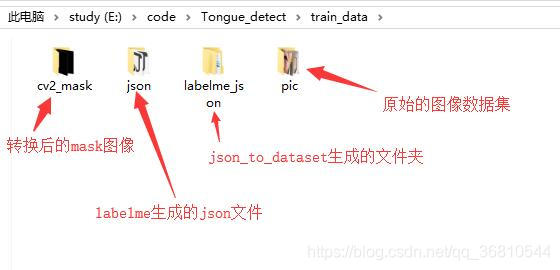

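labelme saves every annotation as an xxx.json file, and each one has to be converted with labelme_json_to_dataset.exe. In cmd, change to the folder containing labelme_json_to_dataset.exe and run the command below, where E:\code\Tongue_detect\dataset is the folder holding the json files: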

```bat
REM Run directly in cmd; inside a .bat file, write %%i instead of %i
for /r E:\code\Tongue_detect\dataset %i in (*.json) do labelme_json_to_dataset %i
```
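If you would rather script the conversion than use the cmd loop above (for example, to see which files failed), the same batch job can be sketched in Python. This assumes `labelme_json_to_dataset` is on PATH. The only argument passed is the json file path, exactly as in the cmd command. `build_commands` and `run_all` are hypothetical helper names.

```python
import subprocess
from pathlib import Path


def build_commands(dataset_dir):
    """Return one labelme_json_to_dataset command per *.json file, searched recursively."""
    json_files = sorted(Path(dataset_dir).rglob("*.json"))
    return [["labelme_json_to_dataset", str(p)] for p in json_files]


def run_all(commands):
    """Run each command in turn and return the last argument of every command that failed."""
    failed = []
    for cmd in commands:
        result = subprocess.run(cmd)
        if result.returncode != 0:
            failed.append(cmd[-1])
    return failed
```

Usage: `print(run_all(build_commands(r"E:\code\Tongue_detect\dataset")))` prints the json files that need another look.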

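This creates a folder named after each json file (e.g. 001_json) that contains the mask file we need, label.png. However, label.png uses 16-bit pixels, while cv2 works with 8-bit pixels, so it has to be converted. Note: the converted image looks completely black. This is because each pixel stores only a label value (0 for background, 1, 2, ... for the objects), which is almost black on a 0-255 scale. To see the result, change "img = Image.fromarray(np.uint8(np.array(img)))" to "img = Image.fromarray(np.uint8(np.array(img)) * 20)". That output does not meet Mask R-CNN's requirements, though. Use it only to check the result, and run the real conversion without the multiplier!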

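```python
import os

import numpy as np
from PIL import Image


def img_16to8():
    src_dir = r'E:\code\Tongue_detect\train_data\labelme_json'
    dest_dir = r'E:\code\Tongue_detect\train_data\cv2_mask'
    os.makedirs(dest_dir, exist_ok=True)  # make sure the output folder exists

    for child_dir in os.listdir(src_dir):
        # Output folders are named like "001_json"; the mask is saved as "001.png"
        new_name = child_dir.split('_')[0] + '.png'
        old_mask = os.path.join(src_dir, child_dir, 'label.png')
        if not os.path.isfile(old_mask):
            continue  # skip anything that is not a converted labelme folder

        img = Image.open(old_mask)
        # Keep the raw label values (0 = background, 1..n = objects), stored as 8-bit
        img = Image.fromarray(np.uint8(np.array(img)))

        new_mask = os.path.join(dest_dir, new_name)
        img.save(new_mask)
```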
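Because the converted masks look black, it helps to check them by their pixel values instead. You can also write the x20 version to a separate preview file rather than editing the conversion code. A minimal sketch follows; `check_mask` and `save_preview` are hypothetical helpers. The clip to 255 is an added assumption: it stops masks with 13 or more objects from wrapping around in 8 bits.

```python
import numpy as np
from PIL import Image


def check_mask(path):
    """Return the sorted distinct pixel values of a mask image.

    A usable Mask R-CNN mask should give [0, 1, ..., n]:
    0 is background and 1..n are the annotated objects.
    """
    values = np.unique(np.array(Image.open(path)))
    return [int(v) for v in values]


def save_preview(mask_path, preview_path, scale=20):
    """Save a brightened copy for viewing only; do not train on it."""
    arr = np.array(Image.open(mask_path)).astype(np.uint16) * scale
    Image.fromarray(np.clip(arr, 0, 255).astype(np.uint8)).save(preview_path)
```

For a one-object image, `check_mask` on a file in cv2_mask should return `[0, 1]`.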

Finally, organize the resulting files consistently so that the later scripts can find them. The final folder layout is as follows:
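The screenshot of that layout is not included here. Judging from the paths tongue.py reads, each image `pic/<name>.<ext>` needs a `cv2_mask/<name>.png` and a `labelme_json/<name>_json/` folder containing `info.yaml` and `img.png`. The hypothetical `find_missing` helper below checks this before training, so a missing file fails early rather than in the middle of an epoch. It derives `<name>` the same way `load_shapes` does.

```python
import os


def find_missing(dataset_root):
    """List every file tongue.py expects under dataset_root but cannot find.

    Layout assumed (taken from the paths used in tongue.py):
        pic/<name>.<ext>
        cv2_mask/<name>.png
        labelme_json/<name>_json/info.yaml
        labelme_json/<name>_json/img.png
    """
    missing = []
    for img_name in sorted(os.listdir(os.path.join(dataset_root, "pic"))):
        stem = img_name.split(".")[0]  # same rule as load_shapes
        json_dir = os.path.join(dataset_root, "labelme_json", stem + "_json")
        expected = [
            os.path.join(dataset_root, "cv2_mask", stem + ".png"),
            os.path.join(json_dir, "info.yaml"),
            os.path.join(json_dir, "img.png"),
        ]
        missing.extend(p for p in expected if not os.path.isfile(p))
    return missing
```

An empty list from `find_missing(r"E:\code\Tongue_detect\train_data")` means every image has its mask and yaml file.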

III. Modifying Mask R-CNN

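In the Mask R-CNN root directory, create two folders, "models" and "logs". models holds the COCO-pretrained model, which can be downloaded here, and logs holds the models produced by training. In the samples folder, create a "tongue" folder and put tongue.py in it. The code sets init_with = "last"; for the first training run, change it to init_with = "coco". The code is largely based on https://blog.csdn.net/disiwei1012/article/details/79928679, with minor changes.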
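Instead of editing `init_with` by hand after the first run, you could pick it based on whether logs already holds a checkpoint. This is a hypothetical helper, not part of Mask R-CNN. It only assumes that trained weights are saved as `.h5` files somewhere under logs, which is where `model_dir=MODEL_DIR` sends them. Be aware that in recent Matterport versions `model.find_last()` looks only in the newest run folder. A run that crashed before saving any checkpoint can therefore still make "last" fail.

```python
import os


def choose_init(log_dir):
    """Return "last" if any .h5 checkpoint exists under log_dir, else "coco"."""
    # os.walk yields nothing for a missing folder, so a fresh setup falls through to "coco"
    for _root, _dirs, files in os.walk(log_dir):
        if any(f.endswith(".h5") for f in files):
            return "last"
    return "coco"
```

In `train_model` this would replace the hard-coded value: `init_with = choose_init(MODEL_DIR)`.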

```python
import os
import sys

import cv2
import matplotlib.pyplot as plt
import numpy as np
import yaml
from PIL import Image

# Root directory of the project
ROOT_DIR = os.path.abspath("../../")
sys.path.append(ROOT_DIR)  # To find local version of the library

from mrcnn.config import Config
from mrcnn import utils
from mrcnn import model as modellib

# Directory to save logs and trained models
MODEL_DIR = os.path.join(ROOT_DIR, "logs")

# Local path to trained weights file
COCO_MODEL_PATH = os.path.join(ROOT_DIR, "models", "mask_rcnn_coco.h5")

# Download COCO trained weights from Releases if needed
if not os.path.exists(COCO_MODEL_PATH):
    utils.download_trained_weights(COCO_MODEL_PATH)


class ShapesConfig(Config):
    """Training configuration for the tongue dataset.

    Derives from the base Config class and overrides values specific
    to this dataset (the class name is kept from the shapes sample).
    """
    # Give the configuration a recognizable name
    NAME = "shapes"

    # Train on 1 GPU with 4 images per GPU: batch size = GPU_COUNT * IMAGES_PER_GPU = 4
    GPU_COUNT = 1
    IMAGES_PER_GPU = 4

    # Number of classes (including background)
    NUM_CLASSES = 1 + 1  # background + tongue

    # Use small images for faster training. The limits on the small side
    # and the large side together determine the image shape.
    IMAGE_MIN_DIM = 256
    IMAGE_MAX_DIM = 256

    # Anchor side lengths in pixels: 48, 96, 192, 384, 768 (6x the shapes sample)
    RPN_ANCHOR_SCALES = (8 * 6, 16 * 6, 32 * 6, 64 * 6, 128 * 6)

    # Reduce training ROIs per image because the images are small and have
    # few objects. Aim to allow ROI sampling to pick 33% positive ROIs.
    TRAIN_ROIS_PER_IMAGE = 32

    # Use a small epoch since the data is simple
    STEPS_PER_EPOCH = 100

    # Use small validation steps since the epoch is small
    VALIDATION_STEPS = 5


class DrugDataset(utils.Dataset):
    # Number of instances (objects) in the image = largest label value in the mask
    def get_obj_index(self, image):
        return int(np.max(np.array(image)))

    # Parse the info.yaml written by labelme_json_to_dataset to get the
    # instance label of each mask layer
    def from_yaml_get_class(self, image_id):
        info = self.image_info[image_id]
        with open(info['yaml_path']) as f:
            temp = yaml.safe_load(f)  # plain yaml.load() needs a Loader in newer PyYAML
        labels = temp['label_names']
        del labels[0]  # drop the background entry
        return labels

    # Rewritten draw_mask: layer `index` is 1 where the pixel value equals index + 1
    def draw_mask(self, num_obj, mask, image, image_id):
        info = self.image_info[image_id]
        for index in range(num_obj):
            for i in range(info['width']):
                for j in range(info['height']):
                    at_pixel = image.getpixel((i, j))
                    if at_pixel == index + 1:
                        mask[j, i, index] = 1
        return mask

    # Rewritten load_shapes: registers our own class and adds
    # path, mask_path and yaml_path to self.image_info
    def load_shapes(self, count, img_floder, mask_floder, imglist, dataset_root_path):
        """Register the first `count` images of imglist with their mask and yaml paths."""
        # Add classes
        self.add_class("shapes", 1, "tongue")
        for i in range(count):
            filestr = imglist[i].split(".")[0]
            mask_path = mask_floder + "/" + filestr + ".png"
            json_dir = dataset_root_path + "/labelme_json/" + filestr + "_json"
            yaml_path = json_dir + "/info.yaml"
            # img.png is read only to get the image width and height
            cv_img = cv2.imread(json_dir + "/img.png")
            if cv_img is None:
                raise FileNotFoundError(json_dir + "/img.png")
            self.add_image("shapes", image_id=i, path=img_floder + "/" + imglist[i],
                           width=cv_img.shape[1], height=cv_img.shape[0],
                           mask_path=mask_path, yaml_path=yaml_path)

    # Rewritten load_mask
    def load_mask(self, image_id):
        """Generate instance masks for the given image ID."""
        print("image_id", image_id)
        info = self.image_info[image_id]
        img = Image.open(info['mask_path'])
        num_obj = self.get_obj_index(img)
        mask = np.zeros([info['height'], info['width'], num_obj], dtype=np.uint8)
        mask = self.draw_mask(num_obj, mask, img, image_id)
        # Handle occlusions: later layers take precedence where masks overlap
        occlusion = np.logical_not(mask[:, :, -1]).astype(np.uint8)
        for i in range(num_obj - 2, -1, -1):
            mask[:, :, i] = mask[:, :, i] * occlusion
            occlusion = np.logical_and(occlusion, np.logical_not(mask[:, :, i]))
        labels = self.from_yaml_get_class(image_id)
        labels_form = []
        for label in labels:
            if label.find("tongue") != -1:
                labels_form.append("tongue")
        class_ids = np.array([self.class_names.index(s) for s in labels_form])
        return mask, class_ids.astype(np.int32)


def get_ax(rows=1, cols=1, size=8):
    """Return a Matplotlib Axes array to be used in
    all visualizations in the notebook. Provide a
    central point to control graph sizes.

    Change the default size attribute to control the size
    of rendered images
    """
    _, ax = plt.subplots(rows, cols, figsize=(size * cols, size * rows))
    return ax


def train_model():
    # Basic settings
    dataset_root_path = r"E:\code\Tongue_detect\train_data"
    img_floder = os.path.join(dataset_root_path, "pic")
    mask_floder = os.path.join(dataset_root_path, "cv2_mask")
    imglist = os.listdir(img_floder)
    count = len(imglist)

    # Prepare the training and validation datasets
    dataset_train = DrugDataset()
    dataset_train.load_shapes(count, img_floder, mask_floder, imglist, dataset_root_path)
    dataset_train.prepare()

    # Note: the validation set is the first 7 images, which are also in the training set
    dataset_val = DrugDataset()
    dataset_val.load_shapes(7, img_floder, mask_floder, imglist, dataset_root_path)
    dataset_val.prepare()

    # Create the model in training mode
    config = ShapesConfig()
    config.display()
    model = modellib.MaskRCNN(mode="training", config=config, model_dir=MODEL_DIR)

    # Which weights to start with?
    # Use "coco" for the first run; once a trained model exists, switch to "last"
    init_with = "last"  # imagenet, coco, or last

    if init_with == "imagenet":
        model.load_weights(model.get_imagenet_weights(), by_name=True)
    elif init_with == "coco":
        # Load weights trained on MS COCO, but skip layers that
        # are different due to the different number of classes
        model.load_weights(COCO_MODEL_PATH, by_name=True,
                           exclude=["mrcnn_class_logits", "mrcnn_bbox_fc",
                                    "mrcnn_bbox", "mrcnn_mask"])
    elif init_with == "last":
        # Load the last model you trained and continue training
        checkpoint_file = model.find_last()
        model.load_weights(checkpoint_file, by_name=True)

    # Train the head branches.
    # Passing layers="heads" freezes all layers except the head layers.
    model.train(dataset_train, dataset_val,
                learning_rate=config.LEARNING_RATE,
                epochs=10,
                layers='heads')

    # Fine-tune all layers. `epochs` is the cumulative target epoch,
    # so this call continues from epoch 10 up to epoch 30.
    model.train(dataset_train, dataset_val,
                learning_rate=config.LEARNING_RATE / 10,
                epochs=30,
                layers="all")


class TongueConfig(ShapesConfig):
    # Inference runs on one image at a time
    GPU_COUNT = 1
    IMAGES_PER_GPU = 1


def predict():
    import skimage.io
    from mrcnn import visualize

    # Create the model in inference mode
    config = TongueConfig()
    config.display()
    model = modellib.MaskRCNN(mode="inference", config=config, model_dir=MODEL_DIR)

    # Load the most recently trained weights
    model_path = model.find_last()
    assert model_path != "", "Provide path to trained weights"
    print("Loading weights from ", model_path)
    model.load_weights(model_path, by_name=True)

    class_names = ['BG', 'tongue']

    # Image to test
    image_path = r'E:\code\Tongue_detect\temp\038.jpg'
    image = skimage.io.imread(image_path)

    # Run detection
    results = model.detect([image], verbose=1)

    # Visualize results
    r = results[0]
    visualize.display_instances(image, r['rois'], r['masks'], r['class_ids'],
                                class_names, r['scores'])


if __name__ == "__main__":
    train_model()
    # predict()  # switch to this after training
```
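`load_mask` and `draw_mask` turn the single-channel mask image into the [height, width, num_obj] stack Mask R-CNN expects: layer k is 1 wherever the pixel value equals k + 1. The triple loop with `getpixel` is slow, and the same stack can be built in one step with NumPy broadcasting. This is a standalone sketch that does not depend on the mrcnn package; `label_to_masks` is a hypothetical name. Each pixel of a label image holds a single value, so these layers never overlap, and the occlusion pass in `load_mask` leaves them unchanged.

```python
import numpy as np


def label_to_masks(label):
    """Convert a 2-D label image (0 = background, 1..n = instances)
    into an [H, W, n] boolean mask stack, one layer per instance."""
    label = np.asarray(label)
    num_obj = int(label.max())
    ids = np.arange(1, num_obj + 1)
    # Broadcasting [H, W, 1] against [n] compares every pixel with 1..n at once:
    # layer k is True where label == k + 1.
    return label[:, :, np.newaxis] == ids
```

Inside `load_mask`, the `draw_mask` call could then become `mask = label_to_masks(np.array(img)).astype(np.uint8)`. This assumes the mask image has the same size as the `height`/`width` stored in `image_info`.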

Then change line 26 of mrcnn/model.py (the `import utils` line) to:

```python
from mrcnn import utils
# import utils
```

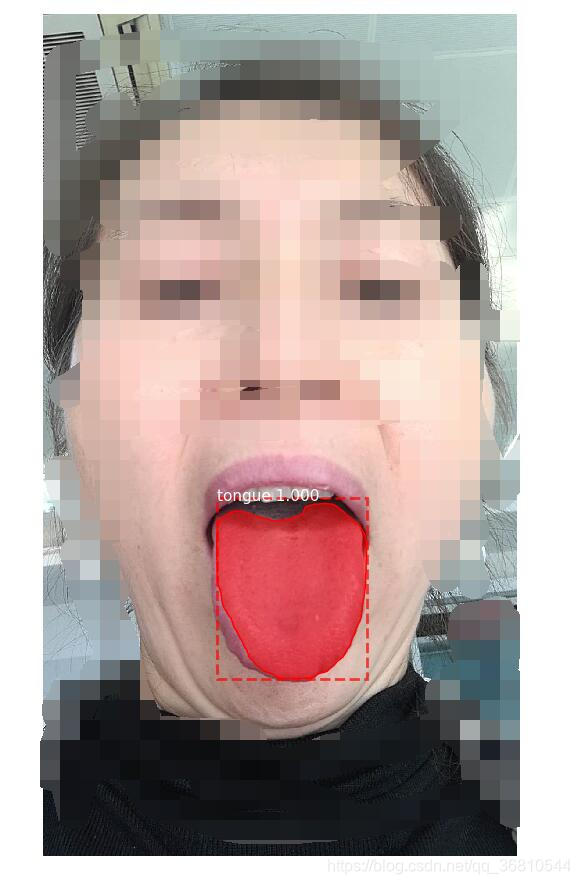

After another 20 epochs of training, the total loss dropped to 0.0524, and the test image looks like this:

If the image inside the bounding box is used as the input to the next step, this result is already good enough.
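For that next step, the detections in `r = results[0]` from `predict()` can be cut out directly. In Matterport's Mask R-CNN, `r['rois']` holds one box per detection as (y1, x1, y2, x2) in pixels, and `r['masks']` is an [H, W, N] boolean array. The sketch below uses only NumPy. `crop_detections` and its `apply_mask` option, which blanks out pixels outside the predicted mask, are hypothetical and not part of the library.

```python
import numpy as np


def crop_detections(image, rois, masks=None, apply_mask=False):
    """Return one cropped copy of `image` per detected box.

    image: [H, W, C] array; rois: [N, 4] boxes as (y1, x1, y2, x2);
    masks: optional [H, W, N] boolean array from the same detection.
    """
    crops = []
    for i, (y1, x1, y2, x2) in enumerate(np.asarray(rois, dtype=int)):
        crop = image[y1:y2, x1:x2].copy()  # copy so the original image is untouched
        if apply_mask and masks is not None:
            # Zero every pixel of the crop that lies outside instance i's mask
            crop[~masks[y1:y2, x1:x2, i]] = 0
        crops.append(crop)
    return crops
```

Usage: `tongue_crops = crop_detections(image, r['rois'], r['masks'], apply_mask=True)`. Leave `apply_mask=False` to keep the whole rectangle, which is what the sentence above describes.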