Copyright notice: This is an original article by the blogger and may not be reproduced without the blogger's permission. https://blog.csdn.net/byhook/article/details/83582269

Contents

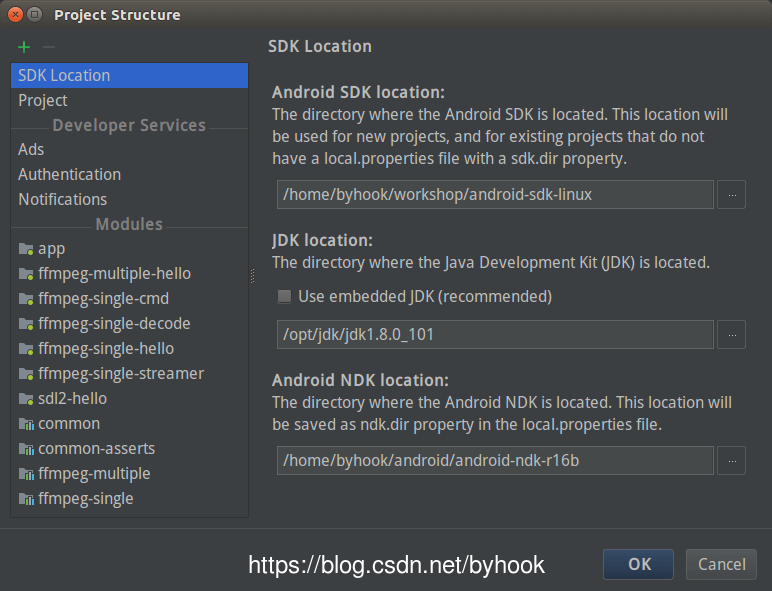

Environment setup

Operating system: Ubuntu 16.04

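Note: the ffmpeg libraries were compiled with android-ndk-r10e; compiling them with a newer NDK version fails with errors.

The Android Studio project, which builds with CMake, uses android-ndk-r16b.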

Create a new project named ffmpeg-single-hello

- Configure build.gradle as follows:

android {
    ......
    defaultConfig {
        ......
        externalNativeBuild {
            cmake {
                cppFlags ""
            }
        }
        ndk {
            abiFilters "armeabi-v7a"
        }
    }
    sourceSets {
        main {
            // Directory containing the prebuilt native libraries
            jniLibs.srcDirs = ['libs']
        }
    }
    ......
    externalNativeBuild {
        cmake {
            path "CMakeLists.txt"
        }
    }
}

- Create a CMakeLists.txt file with the following configuration:

cmake_minimum_required(VERSION 3.4.1)

add_library(ffmpeg-decode
            SHARED
            src/main/cpp/ffmpeg_decode.c)

find_library(log-lib
             log)

# Get the parent directory
get_filename_component(PARENT_DIR ${CMAKE_SOURCE_DIR} PATH)
set(LIBRARY_DIR ${PARENT_DIR}/ffmpeg-single)

set(CMAKE_CXX_FLAGS "${CMAKE_CXX_FLAGS} -std=gnu++11")
set(CMAKE_VERBOSE_MAKEFILE on)

add_library(ffmpeg-single
            SHARED
            IMPORTED)

set_target_properties(ffmpeg-single
                      PROPERTIES IMPORTED_LOCATION
                      ${LIBRARY_DIR}/libs/${ANDROID_ABI}/libffmpeg.so
                      )

# Header files
include_directories(${LIBRARY_DIR}/libs/${ANDROID_ABI}/include)

target_link_libraries(ffmpeg-decode ffmpeg-single ${log-lib})

- Create the class FFmpegDecode.java

package com.onzhou.ffmpeg.decode;

/**
 * @author: andy
 * @date: 2018-10-30
 * @description:
 */
public class FFmpegDecode {

    static {
        System.loadLibrary("ffmpeg");
        System.loadLibrary("ffmpeg-decode");
    }

    public native int decode(String input, String output);

}
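The native method declared above is bound to its C implementation by name: the JNI runtime looks for a symbol made of the prefix `Java_`, the fully qualified class name, and the method name. The sketch below shows a simplified version of that mapping. The helper name `jni_symbol_name` is hypothetical, and only two rules are handled ('.' becomes '_', and a literal '_' becomes "_1"); the other escapes in the JNI specification are left out.

```c
#include <stddef.h>
#include <string.h>

/*
 * Build the JNI symbol name for a native method (simplified sketch).
 * Handles only '.' -> '_' and '_' -> "_1"; the remaining escapes from the
 * JNI specification (Unicode characters, ';', '[') are not handled.
 * Returns 0 on success, -1 if the output buffer is too small.
 */
static int jni_symbol_name(const char *class_name, const char *method,
                           char *out, size_t out_size) {
    const char *parts[2] = {class_name, method};
    const char *prefix = "Java_";
    size_t prefix_len = strlen(prefix);
    size_t pos;

    if (out_size < prefix_len + 1) return -1;
    memcpy(out, prefix, prefix_len);
    pos = prefix_len;

    for (int p = 0; p < 2; p++) {
        if (p == 1) {                 /* separator between class and method */
            if (pos + 1 >= out_size) return -1;
            out[pos++] = '_';
        }
        for (const char *c = parts[p]; *c != '\0'; c++) {
            if (*c == '_') {          /* a literal underscore is escaped as "_1" */
                if (pos + 2 >= out_size) return -1;
                out[pos++] = '_';
                out[pos++] = '1';
            } else {
                if (pos + 1 >= out_size) return -1;
                out[pos++] = (*c == '.') ? '_' : *c;
            }
        }
    }
    out[pos] = '\0';
    return 0;
}
```

Called with `"com.onzhou.ffmpeg.decode.FFmpegDecode"` and `"decode"`, the helper produces the function name used in ffmpeg_decode.c. If the package or class name changes, the C function has to be renamed to match, otherwise the call fails at runtime with an UnsatisfiedLinkError.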

- In the src/main/cpp directory, create the source file ffmpeg_decode.c

#include <stdarg.h>
#include <stdio.h>
#include <string.h>
#include <time.h>
#include "libavcodec/avcodec.h"
#include "libavformat/avformat.h"
#include "libswscale/swscale.h"
#include "libavutil/imgutils.h"
#include "libavutil/log.h"

#ifdef ANDROID
#include <jni.h>
#include <android/log.h>
#define LOGE(format, ...) __android_log_print(ANDROID_LOG_ERROR, "(>_<)", format, ##__VA_ARGS__)
#define LOGI(format, ...) __android_log_print(ANDROID_LOG_INFO, "(^_^)", format, ##__VA_ARGS__)
#else
#define LOGE(format, ...) printf("(>_<) " format "\n", ##__VA_ARGS__)
#define LOGI(format, ...) printf("(^_^) " format "\n", ##__VA_ARGS__)
#endif

// Log callback: append FFmpeg log messages to a file in the app's external files directory
void log_callback(void *ptr, int level, const char *fmt, va_list vl) {
    FILE *fp = fopen("/storage/emulated/0/Android/data/com.onzhou.ffmpeg.decode/files/av_log.txt",
                     "a+");
    if (fp) {
        vfprintf(fp, fmt, vl);
        fflush(fp);
        fclose(fp);
    }
}
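The callback above reopens and closes the log file for every message, which is simple but costs an open/close per log line. As a hedged alternative, the sketch below keeps one file handle open and reuses it. All names here (`g_log_fp`, `log_file_open`, `log_file_vwrite`, `log_file_write`, `log_file_close`) are hypothetical and not part of the original project, and there is no locking, so this only holds if messages arrive from a single thread.

```c
#include <stdarg.h>
#include <stdio.h>

static FILE *g_log_fp = NULL;   /* opened once and reused for every message */

/* Open the log file in append mode; returns 0 on success, -1 on failure. */
static int log_file_open(const char *path) {
    if (g_log_fp == NULL) {
        g_log_fp = fopen(path, "a");
    }
    return g_log_fp != NULL ? 0 : -1;
}

/* Same shape as the body of log_callback: write one formatted message. */
static void log_file_vwrite(const char *fmt, va_list vl) {
    if (g_log_fp == NULL) return;
    vfprintf(g_log_fp, fmt, vl);
    fflush(g_log_fp);
}

/* Convenience wrapper for callers that do not already hold a va_list. */
static void log_file_write(const char *fmt, ...) {
    va_list vl;
    va_start(vl, fmt);
    log_file_vwrite(fmt, vl);
    va_end(vl);
}

/* Close the handle once decoding has finished. */
static void log_file_close(void) {
    if (g_log_fp != NULL) {
        fclose(g_log_fp);
        g_log_fp = NULL;
    }
}
```

In this arrangement the log callback would only forward its `fmt` and `vl` arguments to `log_file_vwrite`, with `log_file_open` called before decoding starts and `log_file_close` after it ends.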

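JNIEXPORT jint JNICALL Java_com_onzhou_ffmpeg_decode_FFmpegDecode_decode
        (JNIEnv *env, jobject obj, jstring input_jstr, jstring output_jstr) {
    AVFormatContext *pFormatCtx;
    int i, videoindex;
    AVCodecContext *pCodecCtx;
    AVCodec *pCodec;
    AVFrame *pFrame, *pFrameYUV;
    uint8_t *out_buffer;
    AVPacket *packet;
    int y_size;
    int ret, got_picture;
    struct SwsContext *img_convert_ctx;
    FILE *fp_yuv;
    int frame_cnt;
    clock_t time_start, time_finish;
    double time_duration = 0.0;

    char input_str[500] = {0};
    char output_str[500] = {0};
    char info[1000] = {0};

    // Copy the Java strings into local buffers, then release the JNI copies
    const char *input_utf = (*env)->GetStringUTFChars(env, input_jstr, NULL);
    const char *output_utf = (*env)->GetStringUTFChars(env, output_jstr, NULL);
    if (input_utf == NULL || output_utf == NULL) {
        if (input_utf != NULL) (*env)->ReleaseStringUTFChars(env, input_jstr, input_utf);
        if (output_utf != NULL) (*env)->ReleaseStringUTFChars(env, output_jstr, output_utf);
        return -1;
    }
    snprintf(input_str, sizeof(input_str), "%s", input_utf);
    snprintf(output_str, sizeof(output_str), "%s", output_utf);
    (*env)->ReleaseStringUTFChars(env, input_jstr, input_utf);
    (*env)->ReleaseStringUTFChars(env, output_jstr, output_utf);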

    // Install the log callback
    av_log_set_callback(log_callback);

    av_register_all();
    avformat_network_init();
    pFormatCtx = avformat_alloc_context();

    // On failure, avformat_open_input frees the context itself
    if (avformat_open_input(&pFormatCtx, input_str, NULL, NULL) != 0) {
        LOGE("Couldn't open input stream.\n");
        return -1;
    }
    if (avformat_find_stream_info(pFormatCtx, NULL) < 0) {
        LOGE("Couldn't find stream information.\n");
        avformat_close_input(&pFormatCtx);
        return -1;
    }

    // Pick the first video stream
    videoindex = -1;
    for (i = 0; i < (int) pFormatCtx->nb_streams; i++) {
        if (pFormatCtx->streams[i]->codec->codec_type == AVMEDIA_TYPE_VIDEO) {
            videoindex = i;
            break;
        }
    }
    if (videoindex == -1) {
        LOGE("Couldn't find a video stream.\n");
        avformat_close_input(&pFormatCtx);
        return -1;
    }

    pCodecCtx = pFormatCtx->streams[videoindex]->codec;
    pCodec = avcodec_find_decoder(pCodecCtx->codec_id);
    if (pCodec == NULL) {
        LOGE("Couldn't find Codec.\n");
        avformat_close_input(&pFormatCtx);
        return -1;
    }
    if (avcodec_open2(pCodecCtx, pCodec, NULL) < 0) {
        LOGE("Couldn't open codec.\n");
        avformat_close_input(&pFormatCtx);
        return -1;
    }

    pFrame = av_frame_alloc();
    pFrameYUV = av_frame_alloc();
    out_buffer = (unsigned char *) av_malloc(
            av_image_get_buffer_size(AV_PIX_FMT_YUV420P, pCodecCtx->width, pCodecCtx->height, 1));
    av_image_fill_arrays(pFrameYUV->data, pFrameYUV->linesize, out_buffer,
                         AV_PIX_FMT_YUV420P, pCodecCtx->width, pCodecCtx->height, 1);
    packet = (AVPacket *) av_malloc(sizeof(AVPacket));

    img_convert_ctx = sws_getContext(pCodecCtx->width, pCodecCtx->height, pCodecCtx->pix_fmt,
                                     pCodecCtx->width, pCodecCtx->height, AV_PIX_FMT_YUV420P,
                                     SWS_BICUBIC, NULL, NULL, NULL);

    // Build the summary with bounded appends; passing info as both the
    // destination and a "%s" argument of sprintf is undefined behavior
    snprintf(info, sizeof(info), "[Input ]%s\n", input_str);
    snprintf(info + strlen(info), sizeof(info) - strlen(info), "[Output ]%s\n", output_str);
    snprintf(info + strlen(info), sizeof(info) - strlen(info), "[Format ]%s\n",
             pFormatCtx->iformat->name);
    snprintf(info + strlen(info), sizeof(info) - strlen(info), "[Codec ]%s\n",
             pCodecCtx->codec->name);
    snprintf(info + strlen(info), sizeof(info) - strlen(info), "[Resolution]%dx%d\n",
             pCodecCtx->width, pCodecCtx->height);

    fp_yuv = fopen(output_str, "wb+");
    if (fp_yuv == NULL) {
        LOGE("Cannot open output file.\n");
        return -1;
    }

    frame_cnt = 0;
    time_start = clock();

    while (av_read_frame(pFormatCtx, packet) >= 0) {
        if (packet->stream_index == videoindex) {
            ret = avcodec_decode_video2(pCodecCtx, pFrame, &got_picture, packet);
            if (ret < 0) {
                LOGE("Decode Error.\n");
                return -1;
            }
            if (got_picture) {
                sws_scale(img_convert_ctx, (const uint8_t *const *) pFrame->data, pFrame->linesize,
                          0, pCodecCtx->height,
                          pFrameYUV->data, pFrameYUV->linesize);

                y_size = pCodecCtx->width * pCodecCtx->height;
                fwrite(pFrameYUV->data[0], 1, y_size, fp_yuv);     //Y
                fwrite(pFrameYUV->data[1], 1, y_size / 4, fp_yuv); //U
                fwrite(pFrameYUV->data[2], 1, y_size / 4, fp_yuv); //V
                //Output info
                char pictype_str[10] = {0};
                switch (pFrame->pict_type) {
                    case AV_PICTURE_TYPE_I:
                        sprintf(pictype_str, "I");
                        break;
                    case AV_PICTURE_TYPE_P:
                        sprintf(pictype_str, "P");
                        break;
                    case AV_PICTURE_TYPE_B:
                        sprintf(pictype_str, "B");
                        break;
                    default:
                        sprintf(pictype_str, "Other");
                        break;
                }
                LOGI("Frame Index: %5d. Type:%s", frame_cnt, pictype_str);
                frame_cnt++;
            }
        }
        av_free_packet(packet);
    }

    // Flush the decoder: drain the frames still buffered in the codec
    // by feeding it an empty packet until no more pictures come out
    packet->data = NULL;
    packet->size = 0;
    while (1) {
        ret = avcodec_decode_video2(pCodecCtx, pFrame, &got_picture, packet);
        if (ret < 0)
            break;
        if (!got_picture)
            break;
        sws_scale(img_convert_ctx, (const uint8_t *const *) pFrame->data, pFrame->linesize, 0,
                  pCodecCtx->height,
                  pFrameYUV->data, pFrameYUV->linesize);
        int y_size = pCodecCtx->width * pCodecCtx->height;
        fwrite(pFrameYUV->data[0], 1, y_size, fp_yuv);     //Y
        fwrite(pFrameYUV->data[1], 1, y_size / 4, fp_yuv); //U
        fwrite(pFrameYUV->data[2], 1, y_size / 4, fp_yuv); //V
        //Output info
        char pictype_str[10] = {0};
        switch (pFrame->pict_type) {
            case AV_PICTURE_TYPE_I:
                sprintf(pictype_str, "I");
                break;
            case AV_PICTURE_TYPE_P:
                sprintf(pictype_str, "P");
                break;
            case AV_PICTURE_TYPE_B:
                sprintf(pictype_str, "B");
                break;
            default:
                sprintf(pictype_str, "Other");
                break;
        }
        LOGI("Frame Index: %5d. Type:%s", frame_cnt, pictype_str);
        frame_cnt++;
    }

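    time_finish = clock();
    // clock() returns CPU time in ticks; convert it to milliseconds
    time_duration = (double) (time_finish - time_start) * 1000.0 / CLOCKS_PER_SEC;
    snprintf(info + strlen(info), sizeof(info) - strlen(info), "[Time ]%fms\n", time_duration);
    snprintf(info + strlen(info), sizeof(info) - strlen(info), "[Count ]%d\n", frame_cnt);
    // Print the summary, which was otherwise built but never used
    LOGI("%s", info);

    sws_freeContext(img_convert_ctx);
    fclose(fp_yuv);
    av_free(packet);
    av_free(out_buffer);
    av_frame_free(&pFrameYUV);
    av_frame_free(&pFrame);
    avcodec_close(pCodecCtx);
    avformat_close_input(&pFormatCtx);

    return 0;
}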
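The `info` summary in the function above is built by appending formatted fragments to a fixed-size buffer. A minimal sketch of a bounded append helper for that pattern follows. The name `append_fmt` is hypothetical and not part of the original code, and it assumes the buffer is already NUL-terminated and at least one byte long.

```c
#include <stdarg.h>
#include <stdio.h>
#include <string.h>

/*
 * Append formatted text to a NUL-terminated buffer without overflowing it.
 * Returns the resulting string length; the new text is truncated if it
 * does not fit into the remaining space.
 */
static size_t append_fmt(char *buf, size_t buf_size, const char *fmt, ...) {
    size_t used = strlen(buf);
    if (used + 1 >= buf_size) {
        return used;                  /* no room left for more text */
    }
    va_list args;
    va_start(args, fmt);
    vsnprintf(buf + used, buf_size - used, fmt, args);
    va_end(args);
    return strlen(buf);
}
```

With such a helper, each summary line becomes a single call such as `append_fmt(info, sizeof(info), "[Count ]%d\n", frame_cnt);`, and the buffer is never passed as one of its own format arguments.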

// Click handler in the Activity: run the decode on a background thread
public void onDecodeClick(View view) {
    if (fFmpegDecode == null) {
        fFmpegDecode = new FFmpegDecode();
    }
    btnDecode.setEnabled(false);
    final File fileDir = getExternalFilesDir(null);
    Schedulers.newThread().scheduleDirect(new Runnable() {
        @Override
        public void run() {
            fFmpegDecode.decode(fileDir.getAbsolutePath() + "/input.mp4", fileDir.getAbsolutePath() + "/output.yuv");
        }
    });
}

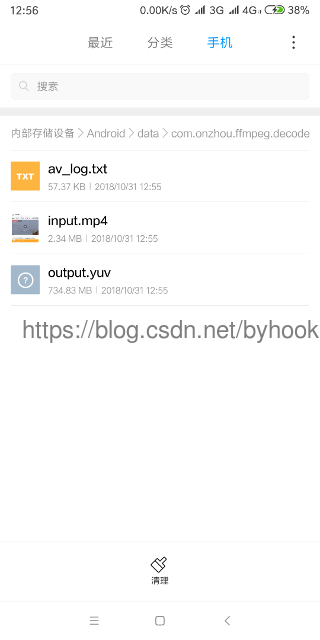

- Build, package, and run the app; it outputs the following information:

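The output YUV file is very large, since raw YUV420P data is uncompressed: each frame takes width × height × 3/2 bytes.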
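To see why the file grows so quickly, the size can be estimated from the same plane sizes the decoder writes: one full-size Y plane plus quarter-size U and V planes per frame. The sketch below does that arithmetic. The function names are hypothetical, and the resolution and frame count in the note after it are example values, not measurements from the original project.

```c
#include <stdint.h>

/* Bytes in one YUV420P frame: a full-size Y plane plus quarter-size U and V planes. */
static uint64_t yuv420p_frame_bytes(uint32_t width, uint32_t height) {
    uint64_t y_size = (uint64_t) width * height;
    return y_size + y_size / 4 + y_size / 4;
}

/* Estimated size of the raw output file for a given number of decoded frames. */
static uint64_t yuv420p_file_bytes(uint32_t width, uint32_t height, uint64_t frames) {
    return yuv420p_frame_bytes(width, height) * frames;
}
```

For example, a 1280x720 frame takes 1,382,400 bytes, so a 10-second clip at 25 fps (250 frames) comes to 345,600,000 bytes, roughly 345.6 MB.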

Project repository:

https://github.com/byhook/ffmpeg4android

Reference (Lei Xiaohua's article):

https://blog.csdn.net/leixiaohua1020/article/details/47010637