1、jieba分词原理(0.39版)

- 基于词典,对句子进行词图扫描,生成所有成词情况所构成的有向无环图(

Directed Acyclic Graph) - 根据DAG,反向计算最大概率路径

- 根据路径获取最大概率的分词序列

import jieba

sentence = '中心小学放假'

print(jieba.get_DAG(sentence))

{0: [0, 1, 3], 1: [1], 2: [2, 3], 3: [3], 4: [4, 5], 5: [5]}

0: [0, 1, 3] 是词在句中位置 0~0、0~1、0~3,表示中、中心、中心小学

import jieba

sentence = '中心小学放假'

DAG = jieba.get_DAG(sentence)

route = {}

jieba.calc(sentence, DAG, route)

print(route)

{6: (0, 0), 5: (-9.4, 5), 4: (-12.6, 5), 3: (-20.8, 3), 2: (-22.5, 3), 1: (-30.8, 1), 0: (-29.5, 3)}

- 根据DGA反向(

从6~0)计算最大概率

概率<1,log(概率)<0,取对数防止下溢,乘法运算转为加法

2、源码展示(部分)

- 对句子进行词图扫描,生成DAG

def get_DAG(self, sentence):

self.check_initialized()

DAG = {}

N = len(sentence)

for k in xrange(N):

tmplist = []

i = k

frag = sentence[k]

while i < N and frag in self.FREQ:

if self.FREQ[frag]:

tmplist.append(i)

i += 1

frag = sentence[k:i + 1]

if not tmplist:

tmplist.append(k)

DAG[k] = tmplist

return DAG

- 根据DAG,反向计算最大概率路径

def calc(self, sentence, DAG, route):

N = len(sentence)

route[N] = (0, 0)

logtotal = log(self.total)

for idx in xrange(N - 1, -1, -1):

route[idx] = max((log(self.FREQ.get(sentence[idx:x + 1]) or 1) -

logtotal + route[x + 1][0], x) for x in DAG[idx])

- 根据路径获取最大概率的分词序列

def __cut_DAG_NO_HMM(self, sentence):

DAG = self.get_DAG(sentence)

route = {}

self.calc(sentence, DAG, route)

x = 0

N = len(sentence)

buf = ''

while x < N:

y = route[x][1] + 1

l_word = sentence[x:y]

if re_eng.match(l_word) and len(l_word) == 1:

buf += l_word

x = y

else:

if buf:

yield buf

buf = ''

yield l_word

x = y

3、图论知识补充

3.1、图的表示方法

%matplotlib inline

import networkx as nx

# 创建图

G = nx.DiGraph()

# 添加边

G.add_edges_from([(0, 1), (0, 2), (1, 2), (2, 3)])

# 绘图

nx.draw(G, with_labels=True, font_size=36, node_size=1500, width=4, node_color='lightgreen')

- 矩阵

class G:

def __init__(self, nodes):

self.matrix = [[0] * nodes for _ in range(nodes)]

def add_edge(self, start, end, value=1):

self.matrix[start][end] = value

g = G(4)

g.add_edge(0, 1)

g.add_edge(0, 2)

g.add_edge(1, 2)

g.add_edge(2, 3)

print(g.matrix)

- 字典

class G:

def __init__(self):

self.dt = dict()

def add_edge(self, start, end, value=1):

self.dt[start] = self.dt.get(start, dict())

self.dt[start][end] = value

g = G()

g.add_edge(0, 1)

g.add_edge(0, 2)

g.add_edge(1, 2)

g.add_edge(2, 3)

print(g.dt)

3.2、词图扫描句子生成DAG

- 获取DAG

def get_dag(sentence, corpus, size=4):

length = len(sentence)

dag = dict()

for head in range(length):

tail = head + size

if tail > length:

tail = length

dag.update({head: []})

for middle in range(head + 1, tail + 1):

word = sentence[head: middle]

if word in corpus:

dag[head].append(middle - 1)

return dag

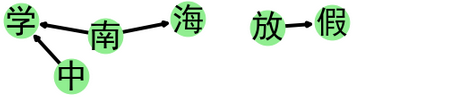

corpus = {'南海中学', '南海', '中学', '放假', '南', '海', '中', '学', '放', '假'}

sentence = '南海中学放假'

DAG = get_dag(sentence, corpus)

print(DAG)

{0: [0, 1, 3], 1: [1], 2: [2, 3], 3: [3], 4: [4, 5], 5: [5]}

- 可视化

%matplotlib inline

import networkx as nx, matplotlib.pyplot as mp

mp.rcParams['font.sans-serif']=['SimHei'] # 显示中文

G = nx.DiGraph() # 创建图

for k in DAG:

for i in DAG[k]:

G.add_edge(sentence[k], sentence[i])

nx.draw(G, with_labels=True, font_size=36, node_size=1500, width=4, node_color='lightgreen')

4、仿照jieba改写的分词算法

import os, re, pandas as pd

from math import log

from time import time

# 基础目录

BASE_PATH = os.path.dirname(__file__)

# 生成绝对路径

_get_abs_path = lambda path: os.path.normpath(os.path.join(BASE_PATH, path))

# 通用词库

JIEBA_DICT = _get_abs_path('jieba_dict.txt') # jieba词典

def txt2df2dt(filename=JIEBA_DICT, sep=' '):

df = pd.read_table(filename, sep, header=None)

return dict(df[[0, 1]].values)

class Cutter:

re_eng = re.compile('[a-zA-Z0-9_\-]+')

re_num = re.compile('[0-9.\-+%/~]+')

def __init__(self, dt=None, max_len=0):

self.t = time()

self.dt = dt or txt2df2dt()

self.total = sum(list(self.dt.values()))

# 词最大长度,默认等于词典最长词

if not max_len:

for k in self.dt.keys():

if len(k) > max_len:

max_len = len(k)

self.max_len = max_len

def __del__(self):

t = time() - self.t

print('分词耗时:%.2f秒' % t) if t < 60 else print('分词耗时:%.2f分钟' % (t/60))

def _get_DAG(self, sentence):

length = len(sentence)

dt = dict()

for head in range(length):

tail = head + self.max_len

if tail > length:

tail = length

dt.update({head: [head]})

for middle in range(head + 2, tail + 1):

word = sentence[head: middle]

# ------------- 词典 + 正则 ------------- #

if word in self.dt:

dt[head].append(middle - 1)

elif self.re_eng.fullmatch(word):

dt[head].append(middle - 1)

elif self.re_num.fullmatch(word):

dt[head].append(middle - 1)

return dt

def _calculate(self, sentence):

DAG = self._get_DAG(sentence)

route = {}

N = len(sentence)

route[N] = (0, 0)

logtotal = log(self.total)

for idx in range(N - 1, -1, -1):

route[idx] = max(

(log(self.dt.get(sentence[idx:x + 1], 1)) - logtotal + route[x + 1][0], x)

for x in DAG[idx])

return route

def cut(self, sentence):

route = self._calculate(sentence)

x = 0

N = len(sentence)

buf = ''

while x < N:

y = route[x][1] + 1

l_word = sentence[x:y]

if re.compile('[a-zA-Z0-9]').match(l_word) and len(l_word) == 1:

buf += l_word

x = y

else:

if buf:

yield buf

buf = ''

yield l_word

x = y

if buf:

yield buf

def lcut(self, sentence):

return list(self.cut(sentence))

def add_word(self, word, freq=0):

new_freq = freq or 1

original_freq = self.dt.get(word, 0)

self.dt[word] = new_freq

self.total = self.total - original_freq + new_freq

def del_word(self, word):

original_freq = self.dt.get(word)

if original_freq:

del self.dt[word]

self.total -= original_freq

cut = lambda sentence: Cutter().cut(sentence)

lcut = lambda sentence: Cutter().lcut(sentence)