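1. Installing CentOS 7 and Basic Configuration

Most of the installation uses the default settings. Only two screens need attention, storage and software selection: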

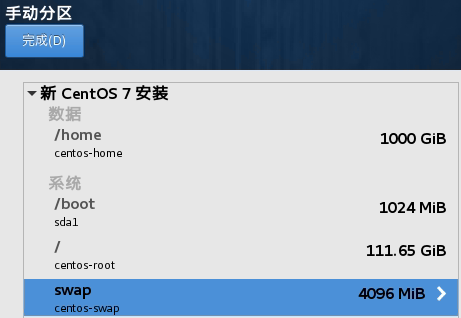

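1.1 Storage Configuration

Installation Destination --> I will configure partitioning --> Done --> choose LVM as the partitioning scheme --> add the four mount points below --> Done --> Accept Changes. Specifically:

- /boot: usually 1 GB, device type Standard Partition, file system ext3;

- swap: usually 4 GB, device type LVM, file system swap;

- / (root): about 100 GB, device type LVM, file system ext3;

- /home: all the remaining (largest) space, device type LVM, file system ext3.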

1.2 Software Selection

The default Minimal Install is sufficient.

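1.3 Network Configuration

- Controller node: ens44f0 address 10.47.181.26, gateway 10.47.181.1, DNS 10.30.1.9; ens44f1 is not enabled for now;

- Compute node: ens44f0 address 10.47.181.27, gateway 10.47.181.1, DNS 10.30.1.9; ens44f1 is not enabled for now;

- Also set the hostname of the controller node to controller and that of the compute node to compute;

- To configure the IP address manually later: [root@controller /]# vi /etc/sysconfig/network-scripts/ifcfg-ens44f0. (Important: ONBOOT must be set to yes and BOOTPROTO must be changed from dhcp to none or static; apart from that, only IPADDR0=10.47.181.26, PREFIX0=24, GATEWAY0=10.47.181.1 and DNS1=10.30.1.9 need to be set.) After changing the configuration, restart the network with: [root@controller /]# service network restart;

- To change the hostname manually, edit: [root@controller /]# vi /etc/hostname. To display the current hostname: [root@controller /]# hostname;

- Edit the hosts file: [root@controller /]# vi /etc/hosts and add the following entries for the controller and compute nodes used in this walkthrough: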

10.47.181.26 controller

10.47.181.27 compute

- Set the root password to root.
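The manual network and hosts-file edits in this section can also be scripted. The sketch below assumes the installer-generated ifcfg-ens44f0 already exists and only sets the keys named above; the helper names (set_kv, configure_static_ip, add_hosts_entries) are hypothetical, and the gateway and DNS values are the ones used in this walkthrough.

```shell
#!/bin/sh
# Hypothetical helpers for the manual steps in section 1.3.

# set_kv FILE KEY VALUE: set KEY=VALUE in a shell-style config file,
# replacing an existing KEY= line or appending one if it is missing.
set_kv() {
    file=$1 key=$2 value=$3
    if grep -q "^${key}=" "$file"; then
        sed -i "s|^${key}=.*|${key}=${value}|" "$file"
    else
        printf '%s=%s\n' "$key" "$value" >> "$file"
    fi
}

# configure_static_ip FILE IP: apply the ifcfg settings listed above,
# keeping every other key the installer wrote (UUID, DEVICE, ...).
configure_static_ip() {
    f=$1 ip=$2
    set_kv "$f" ONBOOT yes
    set_kv "$f" BOOTPROTO none
    set_kv "$f" IPADDR0 "$ip"
    set_kv "$f" PREFIX0 24
    set_kv "$f" GATEWAY0 10.47.181.1
    set_kv "$f" DNS1 10.30.1.9
}

# add_hosts_entries FILE: append the controller/compute entries unless
# a line for that hostname is already present (safe to run twice).
add_hosts_entries() {
    f=$1
    grep -q '[[:space:]]controller$' "$f" || echo '10.47.181.26 controller' >> "$f"
    grep -q '[[:space:]]compute$' "$f" || echo '10.47.181.27 compute' >> "$f"
}
```

On the controller this would be used as `configure_static_ip /etc/sysconfig/network-scripts/ifcfg-ens44f0 10.47.181.26`, `add_hosts_entries /etc/hosts` and `hostnamectl set-hostname controller` (hostnamectl writes /etc/hostname), followed by `service network restart`; on the compute node use 10.47.181.27 and the hostname compute.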

1.4 Disable the Firewall and SELinux

(Run on both the controller and compute nodes)

[root@controller /]# systemctl stop firewalld

[root@controller /]# systemctl disable firewalld

[root@controller /]# setenforce 0

[root@controller /]# sed -i 's/=enforcing/=disabled/' /etc/selinux/config
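As a quick check, `getenforce` should print Permissive after `setenforce 0`, and the boot-time setting should read disabled after the sed edit. The hypothetical helper below (selinux_mode is an illustrative name) prints the SELINUX= value from the config file so the second part can be verified without opening an editor.

```shell
#!/bin/sh
# selinux_mode [FILE]: print the SELINUX= value from an SELinux config file.
# Defaults to /etc/selinux/config, the file edited by the sed command above.
# SELINUXTYPE= lines are not printed because the pattern requires "="
# immediately after "SELINUX".
selinux_mode() {
    sed -n 's/^SELINUX=//p' "${1:-/etc/selinux/config}"
}
```

Running `getenforce; selinux_mode` on each node should print Permissive and then disabled (after a reboot, getenforce reports Disabled).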

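1.5 Configure the yum Repositories

(Use the same configuration on both the controller and compute nodes)

- First back up the existing *.repo files;

- Create a new file: [root@controller /]# vi /etc/yum.repos.d/zte-mirror.repo with the following content: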

[base]

name=CentOS-$releasever - Base

baseurl=http://mirrors.zte.com.cn/centos/7/os/$basearch/

gpgcheck=1

enabled=1

gpgkey=http://mirrors.zte.com.cn/centos/RPM-GPG-KEY-CentOS-7

[epel]

name=CentOS-$releasever - Epel

baseurl=http://mirrors.zte.com.cn/epel/7/$basearch/

gpgcheck=0

enabled=1

[extras]

name=CentOS-$releasever - Extras

baseurl=http://mirrors.zte.com.cn/centos/7/extras/$basearch/

gpgcheck=0

enabled=1

[updates]

name=CentOS-$releasever - Updates

baseurl=http://mirrors.zte.com.cn/centos/7/updates/$basearch/

gpgcheck=0

enabled=1

[openstack-rocky]

name=CentOS-$releasever - Rocky

baseurl=http://mirrors.zte.com.cn/centos/7/cloud/x86_64/openstack-rocky/

gpgcheck=0

enabled=1

- After saving, run the following in order:

[root@controller /]# yum clean all

[root@controller /]# yum makecache

[root@controller /]# yum update

[root@controller /]# reboot

(After the reboot, the deleted *.repo files came back once. If that happens, delete them again, keeping only zte-mirror.repo, and rerun yum clean all and yum makecache.)
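The backup step can be done by moving the stock files aside instead of deleting them: yum reads only the *.repo files directly inside /etc/yum.repos.d, so a backup subdirectory is ignored. The helper below is a hypothetical sketch (backup_repos and the backup/ directory name are assumptions); it can simply be rerun if the stock .repo files reappear after yum update and the reboot, as noted above.

```shell
#!/bin/sh
# backup_repos [DIR] [KEEP]: move every *.repo file in DIR except KEEP into
# DIR/backup, so that yum only reads KEEP. Rerunnable: files that reappear
# later are moved again (overwriting the older backup copy).
backup_repos() {
    dir=${1:-/etc/yum.repos.d}
    keep=${2:-zte-mirror.repo}
    mkdir -p "$dir/backup"
    for f in "$dir"/*.repo; do
        [ -e "$f" ] || continue                    # glob matched nothing
        [ "$(basename "$f")" = "$keep" ] && continue
        mv "$f" "$dir/backup/"
    done
}
```

For example: `backup_repos /etc/yum.repos.d zte-mirror.repo && yum clean all && yum makecache`.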

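1.6 Install the Chrony or NTP Time Synchronization Service

1.6.1 Install Chrony on the Controller Node

- Install: [root@controller /]# yum install chrony

- Configure: [root@controller /]# vi /etc/chrony.conf

Comment out the existing server lines and add two settings:

server controller iburst

allow 10.47.0.0/16

- Start the service: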

[root@controller /]# systemctl start chronyd

[root@controller /]# systemctl enable chronyd
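One possible reason why Chrony did not synchronize (not verified in this walkthrough): the controller's chrony.conf points `server controller` at the controller itself, so it has no real upstream source, and an unsynchronized chronyd does not serve usable time to clients. chrony's `local stratum 10` directive lets the controller serve its own clock, similar to the NTP 127.127.1.0 local-clock setup used in 1.6.3. The hypothetical helper below writes such a controller-side configuration; the helper name and the choice of stratum 10 are assumptions, and the driftfile, makestep and rtcsync lines mirror the CentOS 7 defaults.

```shell
#!/bin/sh
# write_controller_chrony [FILE]: write a minimal chrony.conf for a controller
# that has no upstream NTP server and serves its local clock to 10.47.0.0/16.
write_controller_chrony() {
    cat > "${1:-/etc/chrony.conf}" <<'EOF'
# No reachable upstream server: serve the local clock at stratum 10.
local stratum 10
# Allow NTP clients from the management network.
allow 10.47.0.0/16
driftfile /var/lib/chrony/drift
makestep 1.0 3
rtcsync
EOF
}
```

After `systemctl restart chronyd` on the controller, `chronyc sources` on the compute node should eventually show controller marked with `*`.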

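1.6.2 Install Chrony on the Compute Node

Everything is the same as above except for chrony.conf:

Comment out the existing server lines and add one setting:

server controller iburst

1.6.3 Install NTP on the Controller Node

After installing Chrony as above, I found that the clocks were not being synchronized. Instead of investigating the cause for now, I set up NTP, which I already know well, and turned chronyd.service off ([root@controller /]# systemctl stop chronyd, then [root@controller /]# systemctl disable chronyd).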

- Install: [root@controller ~]# yum install ntp

- Configure: [root@controller ~]# vi /etc/ntp.conf

Comment out the existing server lines and add the following two lines:

server 127.127.1.0

fudge 127.127.1.0 stratum 10

- Configure: [root@controller ~]# vi /etc/sysconfig/ntpd

Add the setting: SYNC_HWCLOCK=yes

- Start the service:

[root@controller /]# systemctl start ntpd

[root@controller /]# systemctl enable ntpd
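The "comment out the existing server lines, then add new ones" edit of ntp.conf, used on both nodes, can be sketched as one helper. set_ntp_servers is a hypothetical name. It is not idempotent (a second run comments out the server line it added before and appends everything again), so run it once per node.

```shell
#!/bin/sh
# set_ntp_servers FILE LINE...: comment out every existing "server ..." line
# in an ntp.conf-style FILE, then append each given LINE at the end.
set_ntp_servers() {
    file=$1
    shift
    sed -i 's/^server /#server /' "$file"
    for line in "$@"; do
        printf '%s\n' "$line" >> "$file"
    done
}
```

On the controller: `set_ntp_servers /etc/ntp.conf 'server 127.127.1.0' 'fudge 127.127.1.0 stratum 10'`; on the compute node: `set_ntp_servers /etc/ntp.conf 'server controller'`; then `systemctl restart ntpd`.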

1.6.4 Install NTP on the Compute Node

- Install: [root@compute ~]# yum install ntp

- Configure: [root@compute ~]# vi /etc/ntp.conf

Comment out the existing server lines and add the following line:

server controller

- Configure: [root@compute ~]# vi /etc/sysconfig/ntpd

Add the setting: SYNC_HWCLOCK=yes

- Start the service:

[root@compute /]# systemctl start ntpd

[root@compute /]# systemctl enable ntpd

- Check the synchronization status: [root@compute /]# ntpq -p

remote refid st t when poll reach delay offset jitter

===========================================================================

*controller LOCAL(0) 6 u 25 64 77 0.160 1.140 0.741
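In `ntpq -p` output, a leading `*` marks the peer that ntpd has selected as its synchronization source (here, controller); until such a line appears the compute node is not yet synchronized, which can take a few polling intervals after ntpd starts. The hypothetical helper below reads that output and prints the selected peer, failing if there is none. This is worth checking before moving on, since unsynchronized clocks caused the HTTP 500 error described in section 2.8.

```shell
#!/bin/sh
# ntp_sync_peer: read `ntpq -p` output on stdin and print the name of the
# peer marked with '*' (the selected sync source); return 1 if none is marked.
ntp_sync_peer() {
    peer=$(sed -n 's/^\*\([^ ]*\).*/\1/p')
    [ -n "$peer" ] && printf '%s\n' "$peer"
}
```

Usage: `ntpq -p | ntp_sync_peer || echo "not synchronized yet"`.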

1.7 Install the OpenStack Client and SELinux Policies

(Install on both the controller and compute nodes)

[root@controller /]# yum install python-openstackclient

[root@controller /]# yum install openstack-selinux

2. Installing the Controller Node

2.1 Install the Database

- Install: [root@controller /]# yum install mariadb mariadb-server python2-PyMySQL

- Create a new file: [root@controller /]# vi /etc/my.cnf.d/openstack.cnf

with the following content:

[mysqld]

bind-address = 10.47.181.26

default-storage-engine = innodb

innodb_file_per_table = on

max_connections = 4096

collation-server = utf8_general_ci

character-set-server = utf8

- Start the service:

[root@controller /]# systemctl enable mariadb.service

[root@controller /]# systemctl start mariadb.service

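- Run the script [root@controller /]# mysql_secure_installation to set the database root password to dbrootpass, answering Y to all the other prompts. It first asks for the current password; since none is set yet, just press Enter.

- Increase the maximum number of connections:

1) Check the current number of connections (Threads): [root@controller ~]# mysqladmin -uroot -pdbrootpass status

Uptime: 431 Threads: 214 Questions: 24884 Slow queries: 0 Opens: 67 Flush tables: 1 Open tables: 61 Queries per second avg: 57.735

2) Check the current maximum number of connections: [root@controller ~]# mysql -uroot -pdbrootpass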

MariaDB [(none)]> show variables like "max_connections";

+-----------------+-------+

| Variable_name | Value |

+-----------------+-------+

| max_connections | 214 |

+-----------------+-------+

3) Edit: [root@controller ~]# vi /etc/my.cnf

Under [mysqld], add the line: max_connections=1000

4) Edit: [root@controller ~]# vi /usr/lib/systemd/system/mariadb.service

Under [Service], add two lines:

LimitNOFILE=10000

LimitNPROC=10000

5) Restart the database:

[root@controller ~]# systemctl --system daemon-reload

[root@controller ~]# systemctl restart mariadb.service

6) Verify again:

[root@controller ~]# mysqladmin -uroot -pdbrootpass status

Uptime: 1012 Threads: 238 Questions: 55067 Slow queries: 0 Opens: 70 Flush tables: 1 Open tables: 64 Queries per second avg: 54.414

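7) Check max_connections again. It now reports 4096, the value from openstack.cnf rather than the 1000 from step 3; the earlier 214 was most likely MariaDB lowering the limit to fit the default open-file limit, which the LimitNOFILE change lifts: [root@controller ~]# mysql -uroot -pdbrootpass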

MariaDB [(none)]> show variables like "max_connections";

+-----------------+-------+

| Variable_name | Value |

+-----------------+-------+

| max_connections | 4096 |

+-----------------+-------+
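Editing /usr/lib/systemd/system/mariadb.service works, but that file belongs to the mariadb-server package and can be overwritten when the package is upgraded. A common alternative, swapped in here rather than taken from the steps above, is a systemd drop-in under /etc/systemd/system/mariadb.service.d/, which survives upgrades. The helper name and the file name limits.conf are assumptions.

```shell
#!/bin/sh
# write_mariadb_limits [DIR]: create a systemd drop-in carrying the same
# LimitNOFILE/LimitNPROC values as step 4), without touching the packaged unit.
write_mariadb_limits() {
    dir=${1:-/etc/systemd/system/mariadb.service.d}
    mkdir -p "$dir"
    cat > "$dir/limits.conf" <<'EOF'
[Service]
LimitNOFILE=10000
LimitNPROC=10000
EOF
}
```

Then run `systemctl daemon-reload && systemctl restart mariadb.service`, and `systemctl show mariadb -p LimitNOFILE` should report 10000.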

2.2 Install the Message Queue

- Install: [root@controller /]# yum install rabbitmq-server

- Start the service:

[root@controller /]# systemctl enable rabbitmq-server.service

[root@controller /]# systemctl start rabbitmq-server.service

- Add the openstack user with the password rabbitpass:

[root@controller /]# rabbitmqctl add_user openstack rabbitpass

- Grant the openstack user full configure, write, and read permissions:

[root@controller /]# rabbitmqctl set_permissions openstack ".*" ".*" ".*"

Output: Setting permissions for user "openstack" in vhost "/" ...

2.3 Install Memcached

- Install: [root@controller /]# yum install memcached python-memcached

- Edit: [root@controller /]# vi /etc/sysconfig/memcached

Append the controller node to the existing OPTIONS (the added part is ",controller"):

OPTIONS="-l 127.0.0.1,::1,controller"

- Start the service:

[root@controller /]# systemctl enable memcached.service

[root@controller /]# systemctl start memcached.service
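The OPTIONS edit above can be made with sed instead of vi. The hypothetical helper below appends a host to the -l address list and skips the edit if the host is already listed; it assumes the stock line has the form OPTIONS="-l 127.0.0.1,::1", and its default file path and host are the ones used in this guide.

```shell
#!/bin/sh
# add_memcached_listen [FILE] [HOST]: append HOST to the -l address list in
# the OPTIONS line of a memcached sysconfig file, unless it is already there.
add_memcached_listen() {
    file=${1:-/etc/sysconfig/memcached}
    host=${2:-controller}
    # Already listed as one of the comma-separated -l addresses?
    grep -Eq "^OPTIONS=\"-l ([^ \",]+,)*${host}[,\" ]" "$file" && return 0
    # Append ",HOST" right after the existing address list.
    sed -i "s/^\(OPTIONS=\"-l [^ \"]*\)/\1,${host}/" "$file"
}
```

Usage: `add_memcached_listen && systemctl restart memcached.service`.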

2.4 Install Etcd

- Install: [root@controller /]# yum install etcd

- Edit: [root@controller /]# vi /etc/etcd/etcd.conf

In the #[Member] section, change the following settings:

ETCD_LISTEN_PEER_URLS="http://10.47.181.26:2380"

ETCD_LISTEN_CLIENT_URLS="http://10.47.181.26:2379"

ETCD_NAME="controller"

In the #[Clustering] section, change the following settings:

ETCD_INITIAL_ADVERTISE_PEER_URLS="http://10.47.181.26:2380"

ETCD_ADVERTISE_CLIENT_URLS="http://10.47.181.26:2379"

ETCD_INITIAL_CLUSTER="controller=http://10.47.181.26:2380"

ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster-01"

ETCD_INITIAL_CLUSTER_STATE="new"

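Later, I replaced the IP addresses in the configuration above with localhost, and the service still started normally.

- Start the service and enable it at boot: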

[root@controller /]# systemctl enable etcd

[root@controller /]# systemctl start etcd

2.5 Install Keystone

2.5.1 Create the Keystone Database

(The password is keystonedbpass)

- [root@controller /]# mysql -uroot -pdbrootpass

- MariaDB [(none)]> CREATE DATABASE keystone;

- MariaDB [(none)]> GRANT ALL PRIVILEGES ON keystone.* TO 'keystone'@'localhost' IDENTIFIED BY 'keystonedbpass';

- MariaDB [(none)]> GRANT ALL PRIVILEGES ON keystone.* TO 'keystone'@'%' IDENTIFIED BY 'keystonedbpass';

- MariaDB [(none)]> exit
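The same CREATE DATABASE / GRANT pattern repeats for Keystone, Glance, Nova, Placement and Neutron in this guide. The hypothetical helper service_db_sql prints those statements for one database so they can be reviewed and then piped into mysql. Unlike the manual steps, it uses CREATE DATABASE IF NOT EXISTS so that rerunning it is harmless.

```shell
#!/bin/sh
# service_db_sql DB USER PASSWORD: print the CREATE DATABASE statement and the
# two GRANTs (localhost and '%') used in this guide for one service database.
service_db_sql() {
    db=$1 user=$2 pass=$3
    printf 'CREATE DATABASE IF NOT EXISTS %s;\n' "$db"
    for host in localhost '%'; do
        printf "GRANT ALL PRIVILEGES ON %s.* TO '%s'@'%s' IDENTIFIED BY '%s';\n" \
            "$db" "$user" "$host" "$pass"
    done
}
```

For example: `service_db_sql keystone keystone keystonedbpass | mysql -uroot -pdbrootpass`, or for Nova: `for db in nova_api nova nova_cell0; do service_db_sql "$db" nova novadbpass; done | mysql -uroot -pdbrootpass`.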

2.5.2 Install Keystone

- Install: [root@controller /]# yum install openstack-keystone httpd mod_wsgi

- Edit: [root@controller /]# vi /etc/keystone/keystone.conf

Under [database], set:

connection = mysql+pymysql://keystone:keystonedbpass@controller/keystone

Under [token], set:

provider = fernet

- Sync the database: [root@controller /]# su -s /bin/sh -c "keystone-manage db_sync" keystone

- Initialize the Fernet key repositories:

[root@controller /]# keystone-manage fernet_setup --keystone-user keystone --keystone-group keystone

[root@controller /]# keystone-manage credential_setup --keystone-user keystone --keystone-group keystone

- Bootstrap the Identity service (the admin user's password is set to adminpass): [root@controller /]# keystone-manage bootstrap --bootstrap-password adminpass --bootstrap-admin-url http://controller:5000/v3/ --bootstrap-internal-url http://controller:5000/v3/ --bootstrap-public-url http://controller:5000/v3/ --bootstrap-region-id RegionOne

2.5.3 Configure the Apache HTTP Server

- Edit: [root@controller /]# vi /etc/httpd/conf/httpd.conf and set:

ServerName controller

- Create a symbolic link: [root@controller /]# ln -s /usr/share/keystone/wsgi-keystone.conf /etc/httpd/conf.d/

- Start the httpd service:

[root@controller /]# systemctl enable httpd.service

[root@controller /]# systemctl start httpd.service

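(httpd failed to start at first. After running setenforce 0 again, the SELinux step from the beginning of this document, httpd.service started successfully.)

- Prepare an environment variable script, [root@controller /]# vi admin-openrc.sh, with the following content: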

export OS_USERNAME=admin

export OS_PASSWORD=adminpass

export OS_PROJECT_NAME=admin

export OS_USER_DOMAIN_NAME=Default

export OS_PROJECT_DOMAIN_NAME=Default

export OS_AUTH_URL=http://controller:5000/v3

export OS_IDENTITY_API_VERSION=3

export OS_IMAGE_API_VERSION=2

After saving, load it: [root@controller /]# source admin-openrc.sh

2.5.4 Create the service Project

- Create the project: [root@controller /]# openstack project create --domain default --description "Service Project" service

+-------------+----------------------------------+

| Field | Value |

+-------------+----------------------------------+

| description | Service Project |

| domain_id | default |

| enabled | True |

| id | d16834db814a423aa6354644c20b6384 |

| is_domain | False |

| name | service |

| parent_id | default |

| tags | [] |

+-------------+----------------------------------+

- Verify:

[root@controller /]# openstack user list

+----------------------------------+-------+

| ID | Name |

+----------------------------------+-------+

| cd365f993a51434d9443230e1faa1d44 | admin |

+----------------------------------+-------+

[root@controller /]# openstack token issue

+------------+--------------------------------------------------------------+

| Field | Value |

+------------+--------------------------------------------------------------+

| expires | 2018-10-27T02:17:39+0000 |

| id | gAAAAABb07yzbeKvZPi_uZT0UKkqA7sLaDvJ3sZEFebqDk3Tnk...... |

| project_id | b8471b54426d4b0ba497592862054d5a |

| user_id | cd365f993a51434d9443230e1faa1d44 |

+------------+--------------------------------------------------------------+

(The id is very long, so I shortened it here.)

2.6 Install Glance

2.6.1 Create the Glance Database

(The password is glancedbpass)

- [root@controller /]# mysql -uroot -pdbrootpass

- MariaDB [(none)]> CREATE DATABASE glance;

- MariaDB [(none)]> GRANT ALL PRIVILEGES ON glance.* TO 'glance'@'localhost' IDENTIFIED BY 'glancedbpass';

- MariaDB [(none)]> GRANT ALL PRIVILEGES ON glance.* TO 'glance'@'%' IDENTIFIED BY 'glancedbpass';

- MariaDB [(none)]> exit

2.6.2 Create the User, Role, Service, and Endpoints

- Load the environment variable script: [root@controller /]# source admin-openrc.sh

- Create the glance user: [root@controller ~]# openstack user create --domain default --password-prompt glance

User Password: (enter userpass as the user password here)

Repeat User Password: (enter userpass again)

+---------------------+----------------------------------+

| Field | Value |

+---------------------+----------------------------------+

| domain_id | default |

| enabled | True |

| id | fee4fcb2d77b4df19d28dcf3e2163dd6 |

| name | glance |

| options | {} |

| password_expires_at | None |

+---------------------+----------------------------------+

- Give the glance user the admin role in the service project: [root@controller ~]# openstack role add --project service --user glance admin

- Create the glance service: [root@controller ~]# openstack service create --name glance --description "OpenStack Image" image

+-------------+----------------------------------+

| Field | Value |

+-------------+----------------------------------+

| description | OpenStack Image |

| enabled | True |

| id | 9fa19cf860ac4f9c9f8a494df611a2c2 |

| name | glance |

| type | image |

+-------------+----------------------------------+

- Create the public Image service endpoint: [root@controller ~]# openstack endpoint create --region RegionOne image public http://controller:9292

+--------------+----------------------------------+

| Field | Value |

+--------------+----------------------------------+

| enabled | True |

| id | 880e0f6663a34b5ab17928a8a5d5ac17 |

| interface | public |

| region | RegionOne |

| region_id | RegionOne |

| service_id | 9fa19cf860ac4f9c9f8a494df611a2c2 |

| service_name | glance |

| service_type | image |

| url | http://controller:9292 |

+--------------+----------------------------------+

- Create the internal Image service endpoint: [root@controller ~]# openstack endpoint create --region RegionOne image internal http://controller:9292

+--------------+----------------------------------+

| Field | Value |

+--------------+----------------------------------+

| enabled | True |

| id | 1d05c65ce1d9434f940e7d5c18ec6f32 |

| interface | internal |

| region | RegionOne |

| region_id | RegionOne |

| service_id | 9fa19cf860ac4f9c9f8a494df611a2c2 |

| service_name | glance |

| service_type | image |

| url | http://controller:9292 |

+--------------+----------------------------------+

- Create the admin Image service endpoint: [root@controller ~]# openstack endpoint create --region RegionOne image admin http://controller:9292

+--------------+----------------------------------+

| Field | Value |

+--------------+----------------------------------+

| enabled | True |

| id | fca8e745877a4416b9b23f0a70407338 |

| interface | admin |

| region | RegionOne |

| region_id | RegionOne |

| service_id | 9fa19cf860ac4f9c9f8a494df611a2c2 |

| service_name | glance |

| service_type | image |

| url | http://controller:9292 |

+--------------+----------------------------------+
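Each service in this guide gets the same three endpoints (public, internal, admin) with an identical URL. The hypothetical helper endpoint_cmds only prints the three `openstack endpoint create` commands used above, so they can be checked first and then piped to sh once admin-openrc.sh has been sourced; RegionOne is the default region, as in this guide.

```shell
#!/bin/sh
# endpoint_cmds TYPE URL [REGION]: print the three "openstack endpoint create"
# commands (public, internal, admin) for one service type, without running them.
endpoint_cmds() {
    svc_type=$1 url=$2 region=${3:-RegionOne}
    for iface in public internal admin; do
        printf 'openstack endpoint create --region %s %s %s %s\n' \
            "$region" "$svc_type" "$iface" "$url"
    done
}
```

For example `endpoint_cmds image http://controller:9292 | sh`; the same call works for compute (http://controller:8774/v2.1), placement (http://controller:8778) and network (http://controller:9696).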

2.6.3 Install Glance

- Install: [root@controller ~]# yum install openstack-glance

- Edit: [root@controller ~]# vi /etc/glance/glance-api.conf

Under [database], change the following setting:

connection = mysql+pymysql://glance:glancedbpass@controller/glance

Under [keystone_authtoken], change the following settings:

www_authenticate_uri = http://controller:5000

auth_url = http://controller:5000 (be careful: the original file has auth_uri here; it must be changed to auth_url)

memcached_servers = controller:11211

auth_type = password

and add the following settings:

project_domain_name = Default

user_domain_name = Default

project_name = service

username = glance

password = userpass

Under [paste_deploy], uncomment the following setting:

flavor = keystone

Under [glance_store], uncomment the following settings:

stores = file,http

default_store = file

filesystem_store_datadir = /var/lib/glance/images (the directory where image files are stored)

- Edit: [root@controller ~]# vi /etc/glance/glance-registry.conf

Under [database], change the following setting:

connection = mysql+pymysql://glance:glancedbpass@controller/glance

Under [keystone_authtoken], change the following settings:

www_authenticate_uri = http://controller:5000

auth_url = http://controller:5000 (be careful: the original file has auth_uri here; it must be changed to auth_url)

memcached_servers = controller:11211

auth_type = password

and add the following settings:

project_domain_name = Default

user_domain_name = Default

project_name = service

username = glance

password = userpass

Under [paste_deploy], uncomment the following setting:

flavor = keystone

- Sync the database: [root@controller ~]# su -s /bin/sh -c "glance-manage db_sync" glance

......

Database is synced successfully.

- Start the services:

[root@controller ~]# systemctl start openstack-glance-api.service openstack-glance-registry.service

[root@controller ~]# systemctl enable openstack-glance-api.service openstack-glance-registry.service

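- Verify:

1) The controller node has no Internet access yet, so download cirros-0.3.2-x86_64-disk.img from https://download.cirros-cloud.net/ using a browser (Internet Explorer) on a PC that does have Internet access, then upload it to the controller node: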

[root@controller ~]# ll

total 12888

-rw-r--r-- 1 root root 264 Oct 27 09:36 admin-openrc.sh

-rw-------. 1 root root 2063 Oct 26 16:37 anaconda-ks.cfg

-rw-r--r-- 1 root root 13167616 Oct 27 10:30 cirros-0.3.2-x86_64-disk.img

2) Load the environment variables: [root@controller /]# source admin-openrc.sh

3) Create the image: [root@controller ~]# openstack image create "cirros" --file cirros-0.3.2-x86_64-disk.img --disk-format qcow2 --container-format bare --public

+------------------+--------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+

| Field | Value |

+------------------+--------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+

| checksum | 64d7c1cd2b6f60c92c14662941cb7913 |

| container_format | bare |

| created_at | 2018-10-27T02:43:53Z |

| disk_format | qcow2 |

| file | /v2/images/b50f92a7-f49b-4908-9144-568f98dbbb8f/file |

| id | b50f92a7-f49b-4908-9144-568f98dbbb8f |

| min_disk | 0 |

| min_ram | 0 |

| name | cirros |

| owner | b8471b54426d4b0ba497592862054d5a |

| properties | os_hash_algo='sha512', os_hash_value='de74eeff61ad129d3945dead39dbdb02c942702e423628c6fbb35cf18747141d4ebdae914ffebaf6e18dcb174d4066010df8829960c6b95f8777d4f5fb5567f2', os_hidden='False' |

| protected | False |

| schema | /v2/schemas/image |

| size | 13167616 |

| status | active |

| tags | |

| updated_at | 2018-10-27T02:43:54Z |

| virtual_size | None |

| visibility | public |

+------------------+--------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+

4) List the images: [root@controller ~]# openstack image list

+--------------------------------------+--------+--------+

| ID | Name | Status |

+--------------------------------------+--------+--------+

| b50f92a7-f49b-4908-9144-568f98dbbb8f | cirros | active |

+--------------------------------------+--------+--------+
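Because the image was copied from a PC by hand, it can be worth confirming the upload is intact. In the Glance v2 API the checksum field shown by openstack image create above is the MD5 digest of the image data, so it should match md5sum of the local file. The helper md5_matches is hypothetical.

```shell
#!/bin/sh
# md5_matches FILE EXPECTED_MD5: succeed if FILE's MD5 digest equals the
# expected value (for example the "checksum" field reported by Glance).
md5_matches() {
    actual=$(md5sum "$1" | cut -d' ' -f1)
    [ "$actual" = "$2" ]
}
```

Usage: `md5_matches cirros-0.3.2-x86_64-disk.img "$(openstack image show cirros -f value -c checksum)" && echo "upload intact"`.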

2.7 Install Nova

2.7.1 Create the Nova and Placement Databases

(The passwords are novadbpass and placementdbpass)

- [root@controller /]# mysql -uroot -pdbrootpass

- MariaDB [(none)]> CREATE DATABASE nova_api;

- MariaDB [(none)]> CREATE DATABASE nova;

- MariaDB [(none)]> CREATE DATABASE nova_cell0;

- MariaDB [(none)]> CREATE DATABASE placement;

- MariaDB [(none)]> GRANT ALL PRIVILEGES ON nova_api.* TO 'nova'@'localhost' IDENTIFIED BY 'novadbpass';

- MariaDB [(none)]> GRANT ALL PRIVILEGES ON nova_api.* TO 'nova'@'%' IDENTIFIED BY 'novadbpass';

- MariaDB [(none)]> GRANT ALL PRIVILEGES ON nova.* TO 'nova'@'localhost' IDENTIFIED BY 'novadbpass';

- MariaDB [(none)]> GRANT ALL PRIVILEGES ON nova.* TO 'nova'@'%' IDENTIFIED BY 'novadbpass';

- MariaDB [(none)]> GRANT ALL PRIVILEGES ON nova_cell0.* TO 'nova'@'localhost' IDENTIFIED BY 'novadbpass';

- MariaDB [(none)]> GRANT ALL PRIVILEGES ON nova_cell0.* TO 'nova'@'%' IDENTIFIED BY 'novadbpass';

- MariaDB [(none)]> GRANT ALL PRIVILEGES ON placement.* TO 'placement'@'localhost' IDENTIFIED BY 'placementdbpass';

- MariaDB [(none)]> GRANT ALL PRIVILEGES ON placement.* TO 'placement'@'%' IDENTIFIED BY 'placementdbpass';

- MariaDB [(none)]> exit

2.7.2 Create the Users, Roles, Services, and Endpoints

- Create the nova user: [root@controller ~]# openstack user create --domain default --password-prompt nova

User Password: (enter userpass as the user password here)

Repeat User Password: (enter userpass again)

+---------------------+----------------------------------+

| Field | Value |

+---------------------+----------------------------------+

| domain_id | default |

| enabled | True |

| id | 2a0232df17b04e18ba0f4840eabdcb30 |

| name | nova |

| options | {} |

| password_expires_at | None |

+---------------------+----------------------------------+

- Give the nova user the admin role in the service project: [root@controller ~]# openstack role add --project service --user nova admin

- Create the nova service: [root@controller ~]# openstack service create --name nova --description "OpenStack Compute" compute

+-------------+----------------------------------+

| Field | Value |

+-------------+----------------------------------+

| description | OpenStack Compute |

| enabled | True |

| id | 3f06ee745943444e8d8bdafb853ee589 |

| name | nova |

| type | compute |

+-------------+----------------------------------+

- Create the public Compute service endpoint: [root@controller ~]# openstack endpoint create --region RegionOne compute public http://controller:8774/v2.1

+--------------+----------------------------------+

| Field | Value |

+--------------+----------------------------------+

| enabled | True |

| id | 3497ffc263dc478280b262771deda363 |

| interface | public |

| region | RegionOne |

| region_id | RegionOne |

| service_id | 3f06ee745943444e8d8bdafb853ee589 |

| service_name | nova |

| service_type | compute |

| url | http://controller:8774/v2.1 |

+--------------+----------------------------------+

- Create the internal Compute service endpoint: [root@controller ~]# openstack endpoint create --region RegionOne compute internal http://controller:8774/v2.1

+--------------+----------------------------------+

| Field | Value |

+--------------+----------------------------------+

| enabled | True |

| id | bb05c8c80f0144ec8f99321079aae2f6 |

| interface | internal |

| region | RegionOne |

| region_id | RegionOne |

| service_id | 3f06ee745943444e8d8bdafb853ee589 |

| service_name | nova |

| service_type | compute |

| url | http://controller:8774/v2.1 |

+--------------+----------------------------------+

- Create the admin Compute service endpoint: [root@controller ~]# openstack endpoint create --region RegionOne compute admin http://controller:8774/v2.1

+--------------+----------------------------------+

| Field | Value |

+--------------+----------------------------------+

| enabled | True |

| id | 24a99f4bd80044b697badf2eeee521d0 |

| interface | admin |

| region | RegionOne |

| region_id | RegionOne |

| service_id | 3f06ee745943444e8d8bdafb853ee589 |

| service_name | nova |

| service_type | compute |

| url | http://controller:8774/v2.1 |

+--------------+----------------------------------+

- Create the placement user: [root@controller ~]# openstack user create --domain default --password-prompt placement

User Password: (enter userpass as the user password here)

Repeat User Password: (enter userpass again)

+---------------------+----------------------------------+

| Field | Value |

+---------------------+----------------------------------+

| domain_id | default |

| enabled | True |

| id | 44bee82aab8c4edcbb2bdc27df93ef07 |

| name | placement |

| options | {} |

| password_expires_at | None |

+---------------------+----------------------------------+

- Give the placement user the admin role in the service project: [root@controller ~]# openstack role add --project service --user placement admin

- Create the placement service: [root@controller ~]# openstack service create --name placement --description "Placement API" placement

+-------------+----------------------------------+

| Field | Value |

+-------------+----------------------------------+

| description | Placement API |

| enabled | True |

| id | 515d392c6a72479491f5f893a77d2cb2 |

| name | placement |

| type | placement |

+-------------+----------------------------------+

- Create the public Placement endpoint: [root@controller ~]# openstack endpoint create --region RegionOne placement public http://controller:8778

+--------------+----------------------------------+

| Field | Value |

+--------------+----------------------------------+

| enabled | True |

| id | 72c78deee3fc47cc9fee0c9d22c1e0a1 |

| interface | public |

| region | RegionOne |

| region_id | RegionOne |

| service_id | 515d392c6a72479491f5f893a77d2cb2 |

| service_name | placement |

| service_type | placement |

| url | http://controller:8778 |

+--------------+----------------------------------+

- Create the internal Placement endpoint: [root@controller ~]# openstack endpoint create --region RegionOne placement internal http://controller:8778

+--------------+----------------------------------+

| Field | Value |

+--------------+----------------------------------+

| enabled | True |

| id | 3e1c1336907247c9825bbb6c7cf98273 |

| interface | internal |

| region | RegionOne |

| region_id | RegionOne |

| service_id | 515d392c6a72479491f5f893a77d2cb2 |

| service_name | placement |

| service_type | placement |

| url | http://controller:8778 |

+--------------+----------------------------------+

- Create the admin Placement endpoint: [root@controller ~]# openstack endpoint create --region RegionOne placement admin http://controller:8778

+--------------+----------------------------------+

| Field | Value |

+--------------+----------------------------------+

| enabled | True |

| id | b3451088d3774e94a08d9aa1561fb78d |

| interface | admin |

| region | RegionOne |

| region_id | RegionOne |

| service_id | 515d392c6a72479491f5f893a77d2cb2 |

| service_name | placement |

| service_type | placement |

| url | http://controller:8778 |

+--------------+----------------------------------+

2.7.3 Install Nova

- Install: [root@controller ~]# yum install openstack-nova-api openstack-nova-conductor openstack-nova-console openstack-nova-novncproxy openstack-nova-scheduler openstack-nova-placement-api

- Edit: [root@controller ~]# vi /etc/nova/nova.conf

Under [DEFAULT], change the following settings:

enabled_apis=osapi_compute,metadata

transport_url=rabbit://openstack:rabbitpass@controller

my_ip=10.47.181.26

use_neutron=true

firewall_driver=nova.virt.firewall.NoopFirewallDriver

Under [api_database], change the following setting:

connection=mysql+pymysql://nova:novadbpass@controller/nova_api

Under [database], change the following setting:

connection=mysql+pymysql://nova:novadbpass@controller/nova

Under [placement_database], change the following setting:

connection=mysql+pymysql://placement:placementdbpass@controller/placement


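Under [api], uncomment the following setting:

auth_strategy=keystone

Under [keystone_authtoken], change the following settings:

auth_url=http://controller:5000/v3 (be careful: the original file has auth_uri here; change it to auth_url)

memcached_servers=controller:11211

auth_type=password

and add the following settings: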

project_domain_name = default

user_domain_name = default

project_name = service

username = nova

password = userpass

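Under [vnc], change the following settings:

enabled=true

server_listen=$my_ip

server_proxyclient_address=$my_ip

Under [glance], change the following setting:

api_servers=http://controller:9292

Under [oslo_concurrency], uncomment the following setting:

lock_path=/var/lib/nova/tmp

Under [placement], change the following settings: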

region_name=RegionOne

project_domain_name=Default

project_name=service

auth_type=password

user_domain_name=Default

auth_url=http://controller:5000/v3

username=placement

password=userpass

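- Tip: this configuration file contains a large number of comment lines starting with #, which makes it hard to locate specific settings quickly and accurately. The following command shows only the lines that start with a lowercase letter (a to z) or with '[', which makes it easy to check the result:

[root@controller ~]# grep -E '^(\[|[a-z])' /etc/nova/nova.conf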

[DEFAULT]

instances_path=/home/novainstances

my_ip=10.47.181.26

use_neutron=true

firewall_driver=nova.virt.firewall.NoopFirewallDriver

enabled_apis=osapi_compute,metadata

transport_url=rabbit://openstack:rabbitpass@controller

[api]

auth_strategy=keystone

[api_database]

connection=mysql+pymysql://nova:novadbpass@controller/nova_api

[barbican]

[cache]

[cells]

[cinder]

[compute]

[conductor]

[console]

[consoleauth]

[cors]

[database]

connection=mysql+pymysql://nova:novadbpass@controller/nova

[devices]

[ephemeral_storage_encryption]

[filter_scheduler]

[glance]

api_servers=http://controller:9292

[guestfs]

[healthcheck]

[hyperv]

[ironic]

[key_manager]

[keystone]

[keystone_authtoken]

auth_url=http://controller:5000/v3

memcached_servers=controller:11211

auth_type=password

project_domain_name = default

user_domain_name = default

project_name = service

username = nova

password = userpass

[libvirt]

[matchmaker_redis]

[metrics]

[mks]

[neutron]

url=http://controller:9696

service_metadata_proxy=true

metadata_proxy_shared_secret = METADATA_SECRET

auth_type=password

auth_url=http://controller:5000

project_name=service

project_domain_name=default

username=neutron

user_domain_name=default

password=userpass

region_name=RegionOne

[notifications]

[osapi_v21]

[oslo_concurrency]

lock_path=/var/lib/nova/tmp

[oslo_messaging_amqp]

[oslo_messaging_kafka]

[oslo_messaging_notifications]

[oslo_messaging_rabbit]

[oslo_messaging_zmq]

[oslo_middleware]

[oslo_policy]

[pci]

[placement]

auth_type=password

auth_url=http://controller:5000/v3

project_name=service

project_domain_name=Default

username=placement

user_domain_name=Default

password=userpass

region_name=RegionOne

[placement_database]

connection=mysql+pymysql://placement:placementdbpass@controller/placement

[powervm]

[profiler]

[quota]

[rdp]

[remote_debug]

[scheduler]

discover_hosts_in_cells_interval=300

[serial_console]

[service_user]

[spice]

[upgrade_levels]

[vault]

[vendordata_dynamic_auth]

[vmware]

[vnc]

enabled=true

server_listen=0.0.0.0

server_proxyclient_address=$my_ip

novncproxy_base_url=http://10.47.181.26:6080/vnc_auto.html

[workarounds]

[wsgi]

[xenserver]

[xvp]

[zvm]

- Edit: [root@controller ~]# vi /etc/httpd/conf.d/00-nova-placement-api.conf

and add the following content:

<Directory /usr/bin>

<IfVersion >= 2.4>

Require all granted

</IfVersion>

<IfVersion < 2.4>

Order allow,deny

Allow from all

</IfVersion>

</Directory>

- Restart the httpd service: [root@controller ~]# systemctl restart httpd

- Sync the nova_api database: [root@controller ~]# su -s /bin/sh -c "nova-manage api_db sync" nova

- Register the cell0 database: [root@controller ~]# su -s /bin/sh -c "nova-manage cell_v2 map_cell0" nova

- Create the cell1 cell: [root@controller ~]# su -s /bin/sh -c "nova-manage cell_v2 create_cell --name=cell1 --verbose" nova

d697d4f8-5df5-4725-860a-858b79fa989f

- Sync the nova database: [root@controller ~]# su -s /bin/sh -c "nova-manage db sync" nova (two warnings are printed; this is normal)

- Verify that cell0 and cell1 are registered correctly: [root@controller ~]# su -s /bin/sh -c "nova-manage cell_v2 list_cells" nova

+-------+--------------------------------------+------------------------------------+-------------------------------------------------+----------+

| Name | UUID | Transport URL | Database Connection | Disabled |

+-------+--------------------------------------+------------------------------------+-------------------------------------------------+----------+

| cell0 | 00000000-0000-0000-0000-000000000000 | none:/ | mysql+pymysql://nova:****@controller/nova_cell0 | False |

| cell1 | d697d4f8-5df5-4725-860a-858b79fa989f | rabbit://openstack:****@controller | mysql+pymysql://nova:****@controller/nova | False |

+-------+--------------------------------------+------------------------------------+-------------------------------------------------+----------+

(This output differs from the official documentation; I will look into it when I have time.)

- Start the services:

[root@controller ~]# systemctl start openstack-nova-api.service openstack-nova-scheduler.service openstack-nova-conductor.service openstack-nova-novncproxy.service openstack-nova-consoleauth.service

[root@controller ~]# systemctl enable openstack-nova-api.service openstack-nova-scheduler.service openstack-nova-conductor.service openstack-nova-novncproxy.service openstack-nova-consoleauth.service

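(Note: the official documentation omits openstack-nova-conductor here, which causes instance creation to fail; it also omits openstack-nova-consoleauth.service, without which the instance console cannot be opened in the dashboard.)

(Important: at this point, first switch to the compute node and finish installing the Compute service there (section 3.1), then come back here and check whether the compute node appears in the database.)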
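The grep tip used in 2.7.3 to review nova.conf can be generalized to any of the INI-style OpenStack configuration files in this guide. The hypothetical helpers below drop blank and comment lines (including indented ones) and can also print a single section; the helper names are illustrative.

```shell
#!/bin/sh
# active_config FILE: print only the effective lines of an INI-style config,
# dropping blank lines and lines whose first non-blank character is '#'.
active_config() {
    grep -Ev '^[[:space:]]*(#|$)' "$1"
}

# active_section FILE SECTION: print the effective lines inside [SECTION].
active_section() {
    active_config "$1" | awk -v s="[$2]" '
        /^\[/ { inside = ($0 == s); next }   # a header line starts a new section
        inside                               # print lines of the target section
    '
}
```

For example `active_section /etc/nova/nova.conf placement` or `active_config /etc/glance/glance-api.conf`.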

2.8 Check the Compute Node

- Confirm that the compute node has been discovered in the database:

[root@controller ~]# . admin-openrc.sh

[root@controller ~]# openstack compute service list --service nova-compute

An unexpected error prevented the server from fulfilling your request. (HTTP 500) (Request-ID: req-15c38b56-c9bd-4bb1-a648-a5419789458b)

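After this error, I found that the clocks were not synchronized. I reinstalled NTP, which I am familiar with, and once the clocks were in sync, checking again gave: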

+----+--------------+---------+------+---------+-------+----------------------------+

| ID | Binary | Host | Zone | Status | State | Updated At |

+----+--------------+---------+------+---------+-------+----------------------------+

| 10 | nova-compute | compute | nova | enabled | up | 2018-10-29T00:19:46.000000 |

+----+--------------+---------+------+---------+-------+----------------------------+

- Discover compute hosts manually: [root@controller ~]# su -s /bin/sh -c "nova-manage cell_v2 discover_hosts --verbose" nova

Found 2 cell mappings.

Skipping cell0 since it does not contain hosts.

Getting computes from cell 'cell1': d697d4f8-5df5-4725-860a-858b79fa989f

Checking host mapping for compute host 'compute': 3bc5b488-5013-4585-a3f7-c084cc80098e

Creating host mapping for compute host 'compute': 3bc5b488-5013-4585-a3f7-c084cc80098e

Found 1 unmapped computes in cell: d697d4f8-5df5-4725-860a-858b79fa989f

- To discover hosts automatically, configure: [root@controller ~]# vi /etc/nova/nova.conf

Under [scheduler], change the following setting:

discover_hosts_in_cells_interval=300

2.9 Install Neutron

2.9.1 Create the Neutron database

(The database password is neutrondbpass.)

• [root@controller ~]# mysql -uroot -pdbrootpass

• MariaDB [(none)]> CREATE DATABASE neutron;

• MariaDB [(none)]> GRANT ALL PRIVILEGES ON neutron.* TO 'neutron'@'localhost' IDENTIFIED BY 'neutrondbpass';

• MariaDB [(none)]> GRANT ALL PRIVILEGES ON neutron.* TO 'neutron'@'%' IDENTIFIED BY 'neutrondbpass';

• MariaDB [(none)]> exit
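The interactive MariaDB session above can also be run non-interactively. This is a minimal sketch; the helper name is hypothetical, and it adds IF NOT EXISTS so that re-running it does not fail on an existing database. Note that passing the root password with -p on the command line makes it visible in the process list.

```shell
# service_db_sql DB USER PASSWORD
# Print the SQL that creates DB and grants USER full access from
# localhost and from any host ('%'), as in the interactive steps above.
service_db_sql() {
  cat <<EOF
CREATE DATABASE IF NOT EXISTS $1;
GRANT ALL PRIVILEGES ON $1.* TO '$2'@'localhost' IDENTIFIED BY '$3';
GRANT ALL PRIVILEGES ON $1.* TO '$2'@'%' IDENTIFIED BY '$3';
EOF
}
```

Usage on the controller: `service_db_sql neutron neutron neutrondbpass | mysql -uroot -pdbrootpass`.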

2.9.2 Create the user, role, service, and endpoints

• Create the neutron user: [root@controller ~]# openstack user create --domain default --password-prompt neutron

User Password: (enter the user password, userpass)

Repeat User Password: (enter userpass again)

+---------------------+----------------------------------+
| Field               | Value                            |
+---------------------+----------------------------------+
| domain_id           | default                          |
| enabled             | True                             |
| id                  | daa00fa366c34859adfec17275f70311 |
| name                | neutron                          |
| options             | {}                               |
| password_expires_at | None                             |
+---------------------+----------------------------------+

• Add the admin role to the neutron user: [root@controller ~]# openstack role add --project service --user neutron admin

• Create the neutron service entity: [root@controller ~]# openstack service create --name neutron --description "OpenStack Networking" network

+-------------+----------------------------------+
| Field       | Value                            |
+-------------+----------------------------------+
| description | OpenStack Networking             |
| enabled     | True                             |
| id          | 77d6cdb4d2cf4e33a7fd57377493a1e9 |
| name        | neutron                          |
| type        | network                          |
+-------------+----------------------------------+

• Create the public network endpoint: [root@controller ~]# openstack endpoint create --region RegionOne network public http://controller:9696

+--------------+----------------------------------+
| Field        | Value                            |
+--------------+----------------------------------+
| enabled      | True                             |
| id           | f379acbc323948558b938ae413aa0513 |
| interface    | public                           |
| region       | RegionOne                        |
| region_id    | RegionOne                        |
| service_id   | 77d6cdb4d2cf4e33a7fd57377493a1e9 |
| service_name | neutron                          |
| service_type | network                          |
| url          | http://controller:9696           |
+--------------+----------------------------------+

• Create the internal network endpoint: [root@controller ~]# openstack endpoint create --region RegionOne network internal http://controller:9696

+--------------+----------------------------------+
| Field        | Value                            |
+--------------+----------------------------------+
| enabled      | True                             |
| id           | 1656c27c8f4b4295a36e93fb8f217558 |
| interface    | internal                         |
| region       | RegionOne                        |
| region_id    | RegionOne                        |
| service_id   | 77d6cdb4d2cf4e33a7fd57377493a1e9 |
| service_name | neutron                          |
| service_type | network                          |
| url          | http://controller:9696           |
+--------------+----------------------------------+

• Create the admin network endpoint: [root@controller ~]# openstack endpoint create --region RegionOne network admin http://controller:9696

+--------------+----------------------------------+
| Field        | Value                            |
+--------------+----------------------------------+
| enabled      | True                             |
| id           | 24b0401b9c794fdb9a4198d2e2146f85 |
| interface    | admin                            |
| region       | RegionOne                        |
| region_id    | RegionOne                        |
| service_id   | 77d6cdb4d2cf4e33a7fd57377493a1e9 |
| service_name | neutron                          |
| service_type | network                          |
| url          | http://controller:9696           |
+--------------+----------------------------------+
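The three endpoint commands above differ only in the interface name, so they can be scripted as a loop. This is a minimal sketch; the function name is hypothetical, and the defaults are the region and URL used in this guide.

```shell
# create_network_endpoints [URL] [REGION]
# Create the public, internal and admin endpoints for the network service.
create_network_endpoints() {
  local url="${1:-http://controller:9696}" region="${2:-RegionOne}" iface
  for iface in public internal admin; do
    openstack endpoint create --region "$region" network "$iface" "$url" || return 1
  done
}
```

After `. admin-openrc.sh` and `create_network_endpoints`, `openstack endpoint list --service network` should show all three interfaces.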

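2.9.3 Configure the provider network

(This and the self-service network in the next section are alternatives; choose one. This exercise does not use the provider network, so skip directly to the next section.)

• Install: [root@controller ~]# yum install openstack-neutron openstack-neutron-ml2 openstack-neutron-linuxbridge ebtables

• Edit: [root@controller ~]# vi /etc/neutron/neutron.conf

In the [database] section, set the following: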

connection = mysql+pymysql://neutron:neutrondbpass@controller/neutron

In the [DEFAULT] section, set the following:

core_plugin = ml2

service_plugins =

transport_url = rabbit://openstack:rabbitpass@controller

auth_strategy = keystone

notify_nova_on_port_status_changes = true

notify_nova_on_port_data_changes = true

In the [keystone_authtoken] section, set the following:

www_authenticate_uri = http://controller:5000

auth_url = http://controller:5000 (note: change uri to url)

memcached_servers = controller:11211

auth_type = password

and add the following:

project_domain_name = default

user_domain_name = default

project_name = service

username = neutron

password = userpass

In the [nova] section, set the following:

auth_url = http://controller:5000

auth_type = password

project_domain_name = default

user_domain_name = default

region_name = RegionOne

project_name = service

username = nova

password = userpass

In the [oslo_concurrency] section, set the following:

lock_path = /var/lib/neutron/tmp

• Edit: [root@controller ~]# vi /etc/neutron/plugins/ml2/ml2_conf.ini

In the [ml2] section, set the following:

type_drivers = flat,vlan

tenant_network_types =

mechanism_drivers = linuxbridge

extension_drivers = port_security

In the [ml2_type_flat] section, set the following:

flat_networks = provider

In the [securitygroup] section, set the following:

enable_ipset = true

• Edit: [root@controller ~]# vi /etc/neutron/plugins/ml2/linuxbridge_agent.ini

In the [linux_bridge] section, set the following:

physical_interface_mappings = provider:ens44f0 (the first NIC for now; to be revisited later)

In the [vxlan] section, set the following:

enable_vxlan = false

In the [securitygroup] section, set the following:

firewall_driver = neutron.agent.linux.iptables_firewall.IptablesFirewallDriver

enable_security_group = true

• Edit: [root@controller ~]# vi /etc/neutron/dhcp_agent.ini

In the [DEFAULT] section, set the following:

interface_driver = linuxbridge

dhcp_driver = neutron.agent.linux.dhcp.Dnsmasq

enable_isolated_metadata = true

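2.9.4 Configure the self-service network

(This and the provider network in the previous section are alternatives; choose one. This exercise uses the self-service network.)

• Install: [root@controller ~]# yum install openstack-neutron openstack-neutron-ml2 openstack-neutron-linuxbridge ebtables (because the controller runs neutron-openvswitch-agent below, the openstack-neutron-openvswitch package, which the compute node installs in section 3.2.1, is presumably needed here as well)

• Edit: [root@controller ~]# vi /etc/neutron/neutron.conf

In the [database] section, set the following: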

connection = mysql+pymysql://neutron:neutrondbpass@controller/neutron

In the [DEFAULT] section, set the following:

core_plugin = ml2

service_plugins = router

allow_overlapping_ips = true

transport_url = rabbit://openstack:rabbitpass@controller

auth_strategy = keystone

notify_nova_on_port_status_changes = true

notify_nova_on_port_data_changes = true

In the [keystone_authtoken] section, set the following:

www_authenticate_uri = http://controller:5000

auth_url = http://controller:5000 (note: change uri to url)

memcached_servers = controller:11211

auth_type = password

and add the following:

project_domain_name = default

user_domain_name = default

project_name = service

username = neutron

password = userpass

In the [nova] section, set the following:

auth_url = http://controller:5000

auth_type = password

project_domain_name = default

user_domain_name = default

region_name = RegionOne

project_name = service

username = nova

password = userpass

In the [oslo_concurrency] section, set the following:

lock_path = /var/lib/neutron/tmp

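(Note: the configuration below differs considerably from the official documentation. The official guide uses linuxbridge, whereas Open vSwitch is now more widely used; these settings also prepare for the later VLAN, router, and floating-IP configuration for external connectivity.)

• Edit: [root@controller ~]# vi /etc/neutron/plugins/ml2/ml2_conf.ini

In the [ml2] section, set the following: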

type_drivers = local,flat,vlan,gre,vxlan

tenant_network_types = vlan

mechanism_drivers = openvswitch,l2population

extension_drivers = port_security

In the [ml2_type_flat] section, set the following:

flat_networks = *

In the [ml2_type_vlan] section, set the following:

network_vlan_ranges = default:3001:4000

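Add an [ovs] section with the following:

physical_interface_mappings = default:ens44f1

In the setting above, default is the label used in [ml2_type_vlan] (that is, the physical network name); any string can be used, and ens44f1 is the physical NIC mapped to it. (The ML2 plugin does not appear to read this [ovs] option; the mapping that the Open vSwitch agent actually uses is bridge_mappings in openvswitch_agent.ini, configured next.)

In the [securitygroup] section, set the following: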

enable_ipset = true

• Edit: [root@controller ~]# vi /etc/neutron/plugins/ml2/openvswitch_agent.ini

In the [agent] section, set the following:

tunnel_types = vxlan

l2_population = True

prevent_arp_spoofing = True

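In the [ovs] section, set the following:

phynic_mappings = default:ens44f1 (note: this does not appear to be a recognized Open vSwitch agent option; the physical network is mapped to a bridge by bridge_mappings below)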

local_ip = 10.47.181.26

bridge_mappings = default:br-eth

In the [securitygroup] section, set the following:

enable_security_group = false
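The physical network label (default here) is an arbitrary string, but it has to be spelled identically in network_vlan_ranges in ml2_conf.ini and in bridge_mappings in openvswitch_agent.ini; otherwise the agent has no bridge for VLAN networks on that physical network and port binding would likely fail. (The range 3001:4000 also means 4000 - 3001 + 1 = 1000 VLAN IDs are available to tenant networks.) A minimal check under that assumption; the function names are hypothetical, and only the first label in each option is compared.

```shell
# ini_get FILE SECTION KEY: print the value of KEY in [SECTION] (first match).
ini_get() {
  awk -v s="[$2]" -v k="$3" '
    /^\[.*\]$/ { insec = ($0 == s); next }
    insec {
      line = $0
      if (line ~ ("^[ \t]*" k "[ \t]*=")) {
        sub(/^[^=]*=[ \t]*/, "", line)   # drop "KEY ="
        sub(/[ \t]+$/, "", line)         # drop trailing blanks
        print line
        exit
      }
    }
  ' "$1"
}

# physnet_labels_match ML2_CONF OVS_AGENT_CONF
# Compare the label in network_vlan_ranges (label:min:max) with the one
# in bridge_mappings (label:bridge).
physnet_labels_match() {
  local vlan map
  vlan="$(ini_get "$1" ml2_type_vlan network_vlan_ranges)"
  map="$(ini_get "$2" ovs bridge_mappings)"
  vlan="${vlan%%:*}"
  map="${map%%:*}"
  echo "network_vlan_ranges label: '$vlan', bridge_mappings label: '$map'"
  [ -n "$vlan" ] && [ "$vlan" = "$map" ]
}
```

Usage: `physnet_labels_match /etc/neutron/plugins/ml2/ml2_conf.ini /etc/neutron/plugins/ml2/openvswitch_agent.ini`.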

• Edit: [root@controller ~]# vi /etc/neutron/l3_agent.ini

In the [DEFAULT] section, set the following:

interface_driver = neutron.agent.linux.interface.OVSInterfaceDriver

• Edit: [root@controller ~]# vi /etc/neutron/dhcp_agent.ini

In the [DEFAULT] section, set the following:

interface_driver = neutron.agent.linux.interface.OVSInterfaceDriver

dhcp_driver = neutron.agent.linux.dhcp.Dnsmasq

enable_isolated_metadata = true

2.9.5 Further configuration

• Edit: [root@controller ~]# vi /etc/neutron/metadata_agent.ini

In the [DEFAULT] section, set the following:

nova_metadata_host = controller

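metadata_proxy_shared_secret = METADATA_SECRET (replace METADATA_SECRET with a secret of your choice; nova.conf below must use the same value)

• Edit: [root@controller ~]# vi /etc/nova/nova.conf

In the [neutron] section, set the following: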

url=http://controller:9696

auth_url=http://controller:5000

auth_type=password

project_domain_name=default

user_domain_name=default

region_name=RegionOne

project_name=service

username=neutron

password=userpass

service_metadata_proxy=true

metadata_proxy_shared_secret = METADATA_SECRET
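METADATA_SECRET is a placeholder, and the same value must appear as metadata_proxy_shared_secret in metadata_agent.ini ([DEFAULT]) and in the [neutron] section of nova.conf. A random value can be generated with `openssl rand -hex 10`, the approach the official installation guide suggests for passwords. The sketch below only checks that the two files agree; the function names are hypothetical.

```shell
# get_secret FILE SECTION: print metadata_proxy_shared_secret from [SECTION].
get_secret() {
  awk -v s="[$2]" '
    /^\[.*\]$/ { insec = ($0 == s); next }
    insec && /^[ \t]*metadata_proxy_shared_secret[ \t]*=/ {
      sub(/^[^=]*=[ \t]*/, ""); sub(/[ \t]+$/, ""); print; exit
    }
  ' "$1"
}

# secrets_match METADATA_AGENT_INI NOVA_CONF
# Succeed if both files carry the same non-empty shared secret.
secrets_match() {
  local a b
  a="$(get_secret "$1" DEFAULT)"
  b="$(get_secret "$2" neutron)"
  [ -n "$a" ] && [ "$a" = "$b" ]
}
```

Usage: `secrets_match /etc/neutron/metadata_agent.ini /etc/nova/nova.conf && echo "secrets match"`. The check only compares the two values, so a secret still left as the literal METADATA_SECRET would pass, even though it is not secret.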

• Create the symbolic link: [root@controller ~]# ln -s /etc/neutron/plugins/ml2/ml2_conf.ini /etc/neutron/plugin.ini

• Populate the database: [root@controller ~]# su -s /bin/sh -c "neutron-db-manage --config-file /etc/neutron/neutron.conf --config-file /etc/neutron/plugins/ml2/ml2_conf.ini upgrade head" neutron

• Configure Open vSwitch: create a bridge and add the physical NIC to it:

[root@controller ~]# ovs-vsctl add-br br-eth

[root@controller ~]# ovs-vsctl add-port br-eth ens44f1
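Running the two ovs-vsctl commands a second time fails because the bridge and port already exist; the --may-exist option makes them safe to repeat. This is a minimal sketch; the function name and the OVS_VSCTL override (used only so the function can be exercised without Open vSwitch) are hypothetical. ens44f1 is the second NIC, which section 1.3 leaves unconfigured, so moving it into br-eth should not affect the management address on ens44f0.

```shell
# attach_nic BRIDGE NIC
# Create BRIDGE and add NIC to it, tolerating either already existing,
# then confirm that NIC now belongs to BRIDGE.
attach_nic() {
  local ovs="${OVS_VSCTL:-ovs-vsctl}" br="$1" nic="$2"
  "$ovs" --may-exist add-br "$br" || return 1
  "$ovs" --may-exist add-port "$br" "$nic" || return 1
  [ "$("$ovs" port-to-br "$nic")" = "$br" ]
}
```

Usage: `attach_nic br-eth ens44f1`, on the controller here and again on the compute node in section 3.2.3.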

• Start the services (if they fail to start, reboot the node and try again):

[root@controller ~]# systemctl restart openstack-nova-api

[root@controller ~]# systemctl start neutron-server.service neutron-openvswitch-agent.service neutron-dhcp-agent.service neutron-metadata-agent.service

[root@controller ~]# systemctl enable neutron-server.service neutron-openvswitch-agent.service neutron-dhcp-agent.service neutron-metadata-agent.service

• If you chose the self-service network, also start this service:

[root@controller ~]# systemctl start neutron-l3-agent.service

[root@controller ~]# systemctl enable neutron-l3-agent.service

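(Important: at this point, switch to the compute node and install Neutron there; see section 3.2.)

2.10 Install the dashboard

2.10.1 Installation

• Install: [root@controller ~]# yum install openstack-dashboard

• Edit: [root@controller ~]# vi /etc/openstack-dashboard/local_settings

Set the following: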

OPENSTACK_HOST = "controller"
ALLOWED_HOSTS = ['*', 'localhost']
SESSION_ENGINE = 'django.contrib.sessions.backends.cache'  # added
CACHES = {
    'default': {
        # memcached backend; the default LocMemCache would ignore LOCATION
        'BACKEND': 'django.core.cache.backends.memcached.MemcachedCache',
        'LOCATION': 'controller:11211',  # added
    },
}
OPENSTACK_KEYSTONE_URL = "http://%s:5000/v3" % OPENSTACK_HOST  # unchanged
OPENSTACK_KEYSTONE_MULTIDOMAIN_SUPPORT = True
OPENSTACK_API_VERSIONS = {
    "identity": 3,
    "image": 2,
    "volume": 2,
}
OPENSTACK_KEYSTONE_DEFAULT_DOMAIN = 'Default'
OPENSTACK_KEYSTONE_DEFAULT_ROLE = "user"
OPENSTACK_NEUTRON_NETWORK = {
    'enable_router': False,
    'enable_quotas': False,
    'enable_distributed_router': False,
    'enable_ha_router': False,
    'enable_lb': False,  # added
    'enable_firewall': False,  # added
    'enable_vpn': False,  # added
    'enable_fip_topology_check': False,
    ......
}

• Edit: [root@controller ~]# vi /etc/httpd/conf.d/openstack-dashboard.conf

Add this line: WSGIApplicationGroup %{GLOBAL}

• Restart the services: [root@controller ~]# systemctl restart httpd.service memcached.service

• Verify: open http://10.47.181.26/dashboard in a browser and log in with

Domain: Default, user name: admin, password: adminpass
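Besides opening the page in a browser, the dashboard can be probed from the command line. This is a minimal sketch using curl; the function name, and the assumption that a healthy dashboard ends at a login page answering HTTP 200 after redirects, are illustrative rather than documented behavior.

```shell
# dashboard_up [URL]
# Follow redirects and succeed if the final response is HTTP 200.
dashboard_up() {
  local url="${1:-http://10.47.181.26/dashboard}" code
  # curl still prints "000" via -w when the connection fails.
  code="$(curl -s -L -o /dev/null -w '%{http_code}' "$url")" || true
  echo "$url -> HTTP $code"
  [ "$code" = 200 ]
}
```

If it reports anything other than 200, the Apache error log (/var/log/httpd/error_log) is the first place to look.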

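2.10.2 Basic management of images, networks, and instances

This is not described in detail here; see the network configuration in section 4.4.

III. Compute Node Installation

3.1 Install Nova

(Note: this differs from the Nova installation on the controller node!)

• Install: [root@compute /]# yum install openstack-nova-compute

The first installation attempt failed with a dependency error: Requires: qemu-kvm-rhev >= 2.10.0

So I added a new repository to the yum repo file zte-mirror.repo: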

[Virt]

name=CentOS-$releasever - Virt

baseurl=http://mirrors.zte.com.cn/centos/7.5.1804/virt/x86_64/kvm-common/

gpgcheck=0

gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-CentOS-7

Then run yum clean all, yum makecache, and yum update in turn, and install openstack-nova-compute again; this resolved the problem.

• Edit: [root@compute /]# vi /etc/nova/nova.conf

In the [DEFAULT] section, set the following:

enabled_apis=osapi_compute,metadata

transport_url=rabbit://openstack:rabbitpass@controller

my_ip=10.47.181.27

use_neutron=true

firewall_driver=nova.virt.firewall.NoopFirewallDriver

In the [api] section, uncomment the following:

auth_strategy=keystone

In the [keystone_authtoken] section, set the following:

auth_url=http://controller:5000/v3 (be careful: the original file has uri here; change it to url)

memcached_servers=controller:11211

auth_type=password

and add the following:

project_domain_name = default

user_domain_name = default

project_name = service

username = nova

password = userpass

In the [vnc] section, set the following:

enabled=true

server_listen=0.0.0.0

server_proxyclient_address=$my_ip

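novncproxy_base_url=http://10.47.181.26:6080/vnc_auto.html (note: do not use the hostname instead of the address here, because this URL is opened by the browser on your PC, and the PC's DNS presumably cannot resolve the hostname controller.)

In the [glance] section, set the following:

api_servers=http://controller:9292

In the [oslo_concurrency] section, uncomment the following:

lock_path=/var/lib/nova/tmp

In the [placement] section, set the following: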

region_name=RegionOne

project_domain_name=Default

project_name=service

auth_type=password

user_domain_name=Default

auth_url=http://controller:5000/v3

username=placement

password=userpass

• Check whether the CPU supports hardware virtualization: [root@compute /]# egrep -c '(vmx|svm)' /proc/cpuinfo

56

(Note: a result of 0 means hardware virtualization is not supported; in that case set virt_type=qemu in the [libvirt] section of /etc/nova/nova.conf.)
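The check above can be folded into a small helper that prints the virt_type value to use. This is a minimal sketch; the function name is hypothetical, and it only chooses between kvm (a vmx or svm flag is present) and qemu (neither flag), as described in the note above.

```shell
# pick_virt_type [CPUINFO]
# Print "kvm" if the CPU advertises Intel VT-x (vmx) or AMD-V (svm),
# otherwise "qemu" (software emulation).
pick_virt_type() {
  if grep -Eq '(vmx|svm)' "${1:-/proc/cpuinfo}"; then
    echo kvm
  else
    echo qemu
  fi
}
```

On the compute node in this guide (56 matching lines), `pick_virt_type` prints kvm, so virt_type can stay at its default; only when it prints qemu does [libvirt] need changing.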

Start the services:

[root@compute /]# systemctl start libvirtd.service openstack-nova-compute.service

[root@compute /]# systemctl enable libvirtd.service openstack-nova-compute.service

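(Now return to section 2.8 on the controller node and check whether this compute node is discovered.)

3.2 Install Neutron

3.2.1 Basic installation and configuration

• Install: [root@compute ~]# yum install openstack-neutron-openvswitch ebtables ipset

• Edit: [root@compute ~]# vi /etc/neutron/neutron.conf

In the [DEFAULT] section, set the following: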

transport_url = rabbit://openstack:rabbitpass@controller

auth_strategy = keystone

In the [keystone_authtoken] section, set the following:

www_authenticate_uri = http://controller:5000

auth_url = http://controller:5000 (note: change uri to url)

memcached_servers = controller:11211

auth_type = password

and add the following:

project_domain_name = default

user_domain_name = default

project_name = service

username = neutron

password = userpass

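In the [oslo_concurrency] section, set the following:

lock_path = /var/lib/neutron/tmp

3.2.2 Configure the provider network

(Choose either the provider network or the self-service network. This exercise uses self-service, so skip directly to the next section.)

• Edit: [root@compute ~]# vi /etc/neutron/plugins/ml2/linuxbridge_agent.ini

In the [linux_bridge] section, set the following: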

physical_interface_mappings = provider:ens44f0

In the [vxlan] section, set the following:

enable_vxlan = false

In the [securitygroup] section, set the following:

enable_security_group = true

firewall_driver = neutron.agent.linux.iptables_firewall.IptablesFirewallDriver

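3.2.3 Configure the self-service network

(Choose either the provider network or the self-service network; this exercise uses self-service.)

(This configuration differs considerably from the official documentation: the official guide uses linuxbridge, while this exercise uses the more popular Open vSwitch. It also prepares for the later VLAN, router, and floating-IP configuration for external connectivity.)

• Edit: [root@compute ~]# vi /etc/neutron/plugins/ml2/openvswitch_agent.ini

In the [agent] section, set the following: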

tunnel_types = vxlan

l2_population = True

prevent_arp_spoofing = True

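In the [ovs] section, set the following:

phynic_mappings = default:ens44f1 (note: this does not appear to be a recognized Open vSwitch agent option; the physical network is mapped to a bridge by bridge_mappings below)

local_ip = 10.47.181.27 (the compute node's own address; the controller uses 10.47.181.26)

bridge_mappings = default:br-eth

In the [securitygroup] section, set the following: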

enable_security_group = false
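local_ip must be an address of the node whose agent reads the file, so on the compute node it should be the compute node's own address (10.47.181.27 in this guide), not the controller's 10.47.181.26. A copy-paste slip here is easy to miss, so a quick check may help. This is a minimal sketch; the function name is hypothetical, and it relies on `ip -o -4 addr show` from iproute2.

```shell
# local_ip_ok [CONF]
# Succeed if the [ovs] local_ip in CONF is an IPv4 address of this host.
local_ip_ok() {
  local conf="${1:-/etc/neutron/plugins/ml2/openvswitch_agent.ini}" want
  want="$(awk '
    /^\[.*\]$/ { insec = ($0 == "[ovs]"); next }
    insec && /^[ \t]*local_ip[ \t]*=/ {
      sub(/^[^=]*=[ \t]*/, ""); sub(/[ \t]+$/, ""); print; exit
    }
  ' "$conf")"
  if [ -z "$want" ]; then
    echo "no local_ip in [ovs] of $conf" >&2
    return 1
  fi
  # "ip -o -4 addr show" prints one line per address; field 4 is ADDR/PREFIX.
  if ip -o -4 addr show | awk '{ split($4, a, "/"); print a[1] }' | grep -qxF "$want"; then
    echo "local_ip $want is assigned to this host"
  else
    echo "local_ip $want is NOT assigned to this host" >&2
    return 1
  fi
}
```

Run `local_ip_ok` on each node after editing openvswitch_agent.ini and before restarting the agent.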

Then restart the neutron-openvswitch-agent service:

[root@compute ~]# systemctl restart neutron-openvswitch-agent.service

Configure OVS: create a bridge and add the physical NIC to it:

[root@compute ~]# ovs-vsctl add-br br-eth

[root@compute ~]# ovs-vsctl add-port br-eth ens44f1

3.2.4 Further configuration

• Edit: [root@compute ~]# vi /etc/nova/nova.conf

In the [neutron] section, set the following:

url=http://controller:9696

auth_url=http://controller:5000

auth_type=password

project_domain_name=default

user_domain_name=default

region_name=RegionOne

project_name=service

username=neutron

password=userpass

• Start the services:

[root@compute ~]# systemctl restart openstack-nova-compute

[root@compute ~]# systemctl start neutron-openvswitch-agent.service

[root@compute ~]# systemctl enable neutron-openvswitch-agent.service

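• Verify on the controller node: [root@controller ~]# openstack network agent list

This returned an HTTP 500 error. On analysis, the cause appeared to be that the MySQL maximum number of connections had been exceeded, so I went back to section 2.1, raised the database's maximum connection limit, and then verified again. The result is similar to the following: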

[root@controller ~]# openstack network agent list

+--------------------------------------+--------------------+------------+-------------------+-------+-------+---------------------------+
| ID                                   | Agent Type         | Host       | Availability Zone | Alive | State | Binary                    |
+--------------------------------------+--------------------+------------+-------------------+-------+-------+---------------------------+
| 186f4029-341a-4a2f-a662-e0b388f517ab | Linux bridge agent | controller | None              | XXX   | UP    | neutron-linuxbridge-agent |
| 33705d2b-3cb0-4003-b1c1-f1434bee8abc | Open vSwitch agent | controller | None              | :-)   | UP    | neutron-openvswitch-agent |
| 4b73a101-e17c-4de2-85ff-213cff13df4a | Linux bridge agent | compute    | None              | XXX   | UP    | neutron-linuxbridge-agent |
| 5668bf57-6d63-4883-bf9a-fa3596447bf8 | L3 agent           | controller | nova              | :-)   | UP    | neutron-l3-agent          |
| d4524c6d-7ca7-4c7a-adc4-a1663c5be9df | Metadata agent     | controller | None              | :-)   | UP    | neutron-metadata-agent    |
| e690b9d6-3022-4a66-921c-5af7292a4038 | Open vSwitch agent | compute    | None              | :-)   | UP    | neutron-openvswitch-agent |
| f358e884-2158-42e4-a927-edaafa2ff650 | DHCP agent         | controller | nova              | :-)   | UP    | neutron-dhcp-agent        |
+--------------------------------------+--------------------+------------+-------------------+-------+-------+---------------------------+
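In the output above, the two Linux bridge agents are reported dead (XXX). Since this setup runs the Open vSwitch agent, they appear to be left over from an earlier linuxbridge configuration. This is a minimal sketch for listing agents that are not alive, assuming admin-openrc.sh is sourced; the function name is hypothetical. Once you have confirmed that an agent is obsolete, `openstack network agent delete ID` removes it.

```shell
# list_dead_agents: print the ID of every Neutron agent not reported alive.
# Besides the ":-)"/"XXX" form shown above, True/False is also accepted,
# on the assumption that the value output format may render Alive that way.
list_dead_agents() {
  openstack network agent list -f value -c ID -c Alive |
    awk '$2 != ":-)" && $2 != "True" { print $1 }'
}
```

For example: `for id in $(list_dead_agents); do openstack network agent show "$id"; done` to inspect each one before deleting it.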