############### warm up! ##############

# Computing the mean squared error (MSE) loss:

loss = tf.reduce_mean(tf.square(y_ - y))
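To make the arithmetic behind this loss concrete, here is a minimal framework-free sketch. The helper name `mse` and the sample values are made up for illustration; `tf.reduce_mean(tf.square(y_ - y))` performs the same computation over tensors.

```python
def mse(y_true, y_pred):
    """Mean of the squared differences between labels and predictions."""
    squared_errors = [(t - p) ** 2 for t, p in zip(y_true, y_pred)]
    return sum(squared_errors) / len(squared_errors)

# Hypothetical labels and predictions, chosen only for illustration.
labels = [1.0, 0.0, 1.0, 0.0]
predictions = [0.5, 0.5, 1.0, 0.0]

print(mse(labels, predictions))  # (0.25 + 0.25 + 0 + 0) / 4 = 0.125
```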

# Training methods (optimizers) for back propagation:

## Gradient descent

train_step = tf.train.GradientDescentOptimizer(learning_rate).minimize(loss)

## Momentum

train_step = tf.train.MomentumOptimizer(learning_rate, momentum).minimize(loss) # momentum is a required argument, e.g. 0.9

## Adam

train_step = tf.train.AdamOptimizer(learning_rate).minimize(loss)
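As a rough illustration of how the first two optimizers differ, the sketch below applies plain gradient descent and a common formulation of the momentum update to a one-dimensional toy loss. The toy loss, the function names, and the hyperparameter values are assumptions made for this example, not part of the notes; Adam is left out to keep the sketch short.

```python
def grad(w):
    # Gradient of the toy loss f(w) = (w - 3)^2, whose minimum is at w = 3.
    return 2.0 * (w - 3.0)

def gradient_descent(w, learning_rate, steps):
    for _ in range(steps):
        # Step directly against the current gradient.
        w -= learning_rate * grad(w)
    return w

def momentum_descent(w, learning_rate, momentum, steps):
    velocity = 0.0
    for _ in range(steps):
        # Accumulate a decaying sum of past gradients, then step along it.
        velocity = momentum * velocity + grad(w)
        w -= learning_rate * velocity
    return w

w_gd = gradient_descent(0.0, learning_rate=0.1, steps=300)
w_mom = momentum_descent(0.0, learning_rate=0.1, momentum=0.9, steps=300)
print(w_gd, w_mom)  # both end up close to 3.0
```

The only difference between the two loops is the `velocity` term, which is why the momentum optimizer needs one extra hyperparameter.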

############ formal coding! #############

# coding: utf-8

import tensorflow as tf

import numpy as np

import os

os.environ['TF_CPP_MIN_LOG_LEVEL'] = '2' # hide TensorFlow INFO and WARNING log messages

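BATCH_SIZE = 8 # number of samples fed to the network per training step; should not be too large

seed = 23455 # fixed seed so the generated random values are reproducible

#*** np.random.RandomState: pseudo-random number generator; the same seed produces the same random numbers

rng = np.random.RandomState(seed)

#*** generate a 32x2 random matrix: 32 samples with 2 features each, used as the input dataset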

X = rng.rand(32,2)

#*** label the random samples: 1 if x0 + x1 < 1, otherwise 0

Y = [[int(x0 + x1 < 1)] for (x0, x1) in X] # the inner square brackets make each label its own row, so Y has shape (32, 1)
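The labeling rule is easy to check by hand on a few points. The sketch below uses four hand-picked samples (hypothetical values, not the ones drawn from `rng`) and applies the same list comprehension.

```python
# Hypothetical sample points, chosen by hand for illustration.
samples = [(0.2, 0.3), (0.9, 0.4), (0.5, 0.5), (0.1, 0.8)]

# int(True) == 1 and int(False) == 0, so each label is 1 when x0 + x1 < 1.
# The inner brackets make every label its own row, giving a 4x1 column
# that fits a placeholder of shape (None, 1).
labels = [[int(x0 + x1 < 1)] for (x0, x1) in samples]
print(labels)  # [[1], [0], [0], [1]]
```

Note that the comparison is strict, so a point with x0 + x1 exactly equal to 1 is labeled 0.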

#*** show the samples for training

print("X:\n", X)

print("Y:\n", Y)

#*** forward propagation

x = tf.placeholder(tf.float32, shape = (None, 2))

y_ = tf.placeholder(tf.float32, shape = (None, 1))

# initialize the weights randomly

w1 = tf.Variable(tf.random_normal([2,3], stddev = 1, seed = 1))

w2 = tf.Variable(tf.random_normal([3,1], stddev = 1, seed = 1))

# define the computation graph

a = tf.matmul(x, w1)

y = tf.matmul(a, w2)
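To see how the shapes line up in this forward pass, here is a small sketch with plain Python lists. The `matmul` helper and all numeric values are assumptions made for illustration; in the script the weights are drawn randomly and the products are computed by `tf.matmul`.

```python
def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(a_ik * b_kj for a_ik, b_kj in zip(row, col))
             for col in zip(*b)]
            for row in a]

# Hypothetical values, chosen so the arithmetic is easy to follow.
x = [[0.5, 0.25]]                 # 1 sample, 2 features -> shape (1, 2)
w1 = [[1.0, 2.0, 3.0],
      [4.0, 5.0, 6.0]]            # shape (2, 3)
w2 = [[1.0], [0.0], [-1.0]]       # shape (3, 1)

a = matmul(x, w1)                 # hidden layer, shape (1, 3)
y = matmul(a, w2)                 # output, shape (1, 1)
print(a)  # [[1.5, 2.25, 3.0]]
print(y)  # [[-1.5]]
```

Because no activation function is applied between the two products, the network as written is a purely linear map of `x`.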

#*** back propagation

# define the loss function

loss = tf.reduce_mean(tf.square(y - y_))

# select one training optimizer

train_step = tf.train.GradientDescentOptimizer(0.001).minimize(loss)

# or: MomentumOptimizer

# or: AdamOptimizer

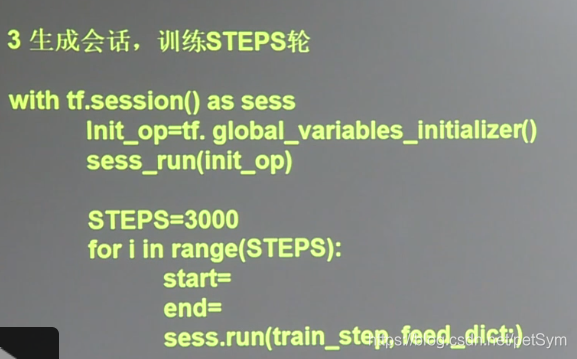

#*** session and train

with tf.Session() as sess:

    init_op = tf.global_variables_initializer()

    sess.run(init_op)

    # print w1 & w2 before training

    print("w1 and w2 before training:\n")

    print("w1:\n", sess.run(w1))

    print("w2:\n", sess.run(w2))

    print("\n")

    # training

    STEPS = 3000

    for i in range(STEPS):

        # 32 samples in total with BATCH_SIZE = 8: the batches are rows 0-8, 8-16, 16-24, 24-32, then the cycle repeats, for 3000 steps in all

        start = (i*BATCH_SIZE) % 32

        end = start + BATCH_SIZE

        sess.run(train_step, feed_dict = {x: X[start:end], y_: Y[start:end]})

        # print total loss every 500 steps

        if i % 500 == 0:

            total_loss = sess.run(loss, feed_dict = {x: X, y_: Y}) # feed in all the data

            print("After %d training steps, loss on all data is: %g" %(i, total_loss))

    # print w1 & w2 after training

    print("\n")

    print("w1:\n", sess.run(w1))

    print("w2:\n", sess.run(w2))
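The mini-batch indexing used in the training loop can be checked in isolation. The sketch below reproduces only the `start`/`end` arithmetic; the name `NUM_SAMPLES` and the helper `batch_bounds` are introduced here for illustration and do not appear in the script.

```python
BATCH_SIZE = 8
NUM_SAMPLES = 32  # matches the 32 rows of X in the script

def batch_bounds(step):
    """Return the (start, end) slice bounds used at a given training step."""
    start = (step * BATCH_SIZE) % NUM_SAMPLES
    return start, start + BATCH_SIZE

bounds = [batch_bounds(i) for i in range(6)]
print(bounds)
# [(0, 8), (8, 16), (16, 24), (24, 32), (0, 8), (8, 16)]
```

The modulo makes the window wrap back to the first rows after every four steps, so each sample is visited once per pass over the data.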

################### Standard recipe for building a neural network #################

# 1. Preparation

## import modules

## define constants

## prepare datasets

# 2. Forward propagation

## define x, define correct answer y_

## initialize w1 w2... (randomly)

## define how a and y are computed (matrix multiplication)

# 3. Back propagation

## define the loss function

## select one back propagation method (optimizer) as train_step

# 4. Iteration

## use " with tf.Session() as sess: " to open a session

## use " init_op = tf.global_variables_initializer() " to define the initializer op

## use " sess.run(init_op) " to run the initializer

## use " for i in range(STEPS) " to iterate
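The four steps above can also be sketched without TensorFlow, which makes explicit what `minimize(loss)` does behind the scenes. This is only an illustration under several assumptions: it uses the standard `random` module instead of NumPy (so the data differ from the script), hand-picked starting weights instead of normally distributed ones, and gradients derived by hand for the two-layer linear model. All helper names are hypothetical.

```python
import random

# 1. Preparation: constants and a toy dataset with the same labeling rule.
BATCH_SIZE = 8
STEPS = 3000
LEARNING_RATE = 0.001
rng = random.Random(23455)
X = [[rng.random(), rng.random()] for _ in range(32)]
Y = [[float(x0 + x1 < 1)] for x0, x1 in X]

def matmul(a, b):
    return [[sum(p * q for p, q in zip(row, col)) for col in zip(*b)] for row in a]

def transpose(m):
    return [list(col) for col in zip(*m)]

# 2. Forward propagation: y = (x @ w1) @ w2.
# Starting weights are hand-picked here (an assumption of this sketch).
w1 = [[1.0, 1.0, 1.0], [1.0, 1.0, 1.0]]   # shape (2, 3)
w2 = [[1.0], [1.0], [1.0]]                # shape (3, 1)

def forward(x, w1, w2):
    a = matmul(x, w1)
    return a, matmul(a, w2)

# 3. Back propagation: MSE loss and its gradients, derived by hand.
def mse(x, t, w1, w2):
    _, y = forward(x, w1, w2)
    return sum((yi[0] - ti[0]) ** 2 for yi, ti in zip(y, t)) / len(t)

def gradients(x, t, w1, w2):
    a, y = forward(x, w1, w2)
    n = len(t)
    dy = [[2.0 * (yi[0] - ti[0]) / n] for yi, ti in zip(y, t)]  # dL/dy, (n, 1)
    dw2 = matmul(transpose(a), dy)                              # (3, 1)
    da = matmul(dy, transpose(w2))                              # (n, 3)
    dw1 = matmul(transpose(x), da)                              # (2, 3)
    return dw1, dw2

# 4. Iteration: cycle through mini-batches and apply plain gradient descent.
initial_loss = mse(X, Y, w1, w2)
for i in range(STEPS):
    start = (i * BATCH_SIZE) % len(X)
    end = start + BATCH_SIZE
    dw1, dw2 = gradients(X[start:end], Y[start:end], w1, w2)
    w1 = [[w - LEARNING_RATE * g for w, g in zip(wr, gr)] for wr, gr in zip(w1, dw1)]
    w2 = [[w - LEARNING_RATE * g for w, g in zip(wr, gr)] for wr, gr in zip(w2, dw2)]
final_loss = mse(X, Y, w1, w2)
print("loss before: %g, loss after: %g" % (initial_loss, final_loss))
```

In the TensorFlow version, step 3 shrinks to a single `minimize(loss)` call because the framework derives the gradients from the computation graph.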

################### Iteration details #################