Overview

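The previous post covered recognizing MNIST with fully connected layers. This post explains how to recognize MNIST using convolution and pooling (POOL).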

The code discussed here comes from the TensorFlow source repository, at tensorflow\examples\tutorials\mnist\mnist_deep.py.

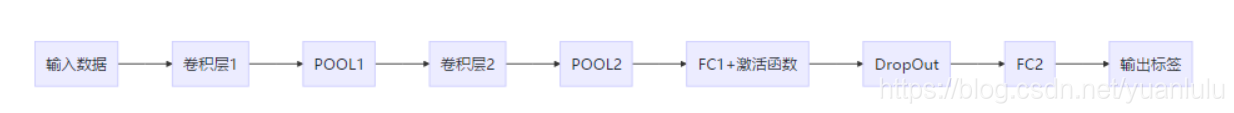

The forward-inference structure of the computation graph built in the source is as follows:

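It has two convolutional layers and two pooling (POOL) layers, followed by a nonlinear fully connected layer and a linear fully connected layer, with a dropout layer between the two.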

Network structure details

Convolution

The two convolutional layers are defined as follows:

```python
def conv2d(x, W):
    """conv2d returns a 2d convolution layer with full stride."""
    return tf.nn.conv2d(x, W, strides=[1, 1, 1, 1], padding='SAME')

# .....

with tf.name_scope('conv1'):
    W_conv1 = weight_variable([5, 5, 1, 32])
    b_conv1 = bias_variable([32])
    h_conv1 = tf.nn.relu(conv2d(x_image, W_conv1) + b_conv1)

# ......

with tf.name_scope('conv2'):
    W_conv2 = weight_variable([5, 5, 32, 64])
    b_conv2 = bias_variable([64])
    h_conv2 = tf.nn.relu(conv2d(h_pool1, W_conv2) + b_conv2)
```

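Its form is very similar to a fully connected layer; tf.matmul is simply replaced by tf.nn.conv2d. The signature of tf.nn.conv2d (TensorFlow 1.x) is:

`tf.nn.conv2d(input, filter, strides, padding, use_cudnn_on_gpu=True, data_format="NHWC", dilations=[1, 1, 1, 1], name=None)`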

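- input: the input tensor, which must be 4-D ([batch, height, width, channels] in the default NHWC format). Each MNIST sample is a flat 1-D vector, so it has to be reshaped into a 2-D 28x28 single-channel image first.

- filter: the convolution kernel, which must be a tensor; it corresponds to W in the code above. Its four dimensions are [filter_height, filter_width, in_channels, out_channels]. in_channels must match the channel count of the input tensor (for the first layer, the number of image channels). out_channels is the number of output channels and can be chosen freely.

- strides: the convolution stride along each dimension of the input. For "NHWC" the order is [batch, height, width, channels]; for "NCHW" it is [batch, channels, height, width], the latter being the layout Caffe commonly uses. Convolution normally skips neither samples nor channels, so with NHWC strides[0] and strides[3] are fixed at 1, and the middle two set the vertical and horizontal strides.

- padding: how the border is padded; must be either "SAME" or "VALID". With stride 1, "SAME" zero-pads the input so the output keeps the input's height and width (in general the output size is ceil(input / stride)). "VALID" adds no padding, so the output is whatever size results: ceil((input - filter + 1) / stride).

- use_cudnn_on_gpu: a boolean, True by default; whether to use cuDNN when running on a GPU.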
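The output sizes implied by the two padding modes can be checked with a few lines of plain Python. This is a sketch that assumes dilation 1 and follows the size formulas given in the TensorFlow documentation; `conv_out_size` is a hypothetical helper, not a TensorFlow function. Tracing a 28x28 image through the network also confirms why FC1 has 7 * 7 * 64 inputs.

```python
import math

def conv_out_size(in_size, k, stride, padding):
    """Output size along one spatial dimension (height or width)."""
    if padding == "SAME":
        # Zero-pad as needed; the output size depends only on the stride.
        return math.ceil(in_size / stride)
    if padding == "VALID":
        # No padding: the window must fit entirely inside the input.
        return math.ceil((in_size - k + 1) / stride)
    raise ValueError("padding must be 'SAME' or 'VALID'")

# Trace a 28x28 MNIST image through the layers used in this post.
size = 28
size = conv_out_size(size, 5, 1, "SAME")  # conv1: 28 -> 28
size = conv_out_size(size, 2, 2, "SAME")  # pool1: 28 -> 14
size = conv_out_size(size, 5, 1, "SAME")  # conv2: 14 -> 14
size = conv_out_size(size, 2, 2, "SAME")  # pool2: 14 -> 7
print(size, size * size * 64)  # 7 3136: the input size of FC1
```

With "VALID" instead, a 5x5 filter would shrink a 28x28 input to 24x24.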

The convolutional layers use ReLU as the activation function, a very common and effective choice.
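To see what tf.nn.conv2d computes with the settings used here (stride 1, SAME padding, NHWC layout, filter shaped [filter_height, filter_width, in_channels, out_channels]), here is a naive NumPy re-implementation. It is only an illustrative sketch: `conv2d_same_stride1` is a hypothetical name, it handles stride 1 only, and it is far slower than the real op. Like TensorFlow's conv2d, it computes a cross-correlation (the kernel is not flipped).

```python
import numpy as np

def conv2d_same_stride1(x, w):
    """Naive NHWC 2-D convolution (cross-correlation), stride 1, SAME padding.

    x: [batch, height, width, in_channels]
    w: [filter_height, filter_width, in_channels, out_channels]
    """
    n, h, wd, c_in = x.shape
    fh, fw, w_in, c_out = w.shape
    assert c_in == w_in, "filter in_channels must match the input channels"
    # SAME with stride 1 pads k - 1 in total; an odd extra pixel goes bottom/right.
    top, left = (fh - 1) // 2, (fw - 1) // 2
    xp = np.pad(x, ((0, 0), (top, fh - 1 - top), (left, fw - 1 - left), (0, 0)))
    out = np.zeros((n, h, wd, c_out), dtype=np.result_type(x, w))
    for i in range(h):
        for j in range(wd):
            patch = xp[:, i:i + fh, j:j + fw, :]  # [batch, fh, fw, in_channels]
            # Sum over the window and input channels for every output channel.
            out[:, i, j, :] = np.tensordot(patch, w, axes=([1, 2, 3], [0, 1, 2]))
    return out

# Shapes matching conv1 in this post: 28x28x1 images, 32 filters of size 5x5.
rng = np.random.default_rng(0)
images = rng.random((2, 28, 28, 1))
kernels = rng.random((5, 5, 1, 32))
print(conv2d_same_stride1(images, kernels).shape)  # (2, 28, 28, 32)
```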

POOL

Using the pooling layer:

```python
def max_pool_2x2(x):
    """max_pool_2x2 downsamples a feature map by 2X."""
    return tf.nn.max_pool(x, ksize=[1, 2, 2, 1],
                          strides=[1, 2, 2, 1], padding='SAME')

# .......

# Pooling layer - downsamples by 2X.
with tf.name_scope('pool1'):
    h_pool1 = max_pool_2x2(h_conv1)
```

The common kinds of pooling are average pooling and max pooling; max pooling is used here. Its signature (TensorFlow 1.x) is:

`tf.nn.max_pool(value, ksize, strides, padding, data_format="NHWC", name=None)`

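- value: the input tensor. In the code above this is h_conv1 (the second pooling layer takes h_conv2).

- ksize: a 1-D list of 4 integers giving the size of the pooling window. Each element has the same meaning as in strides. Pooling is normally not done across the batch or channels, so it usually has the form [1, height, width, 1].

- padding: same meaning as for convolution above.

- strides: the sliding stride, with the same meaning as for convolution. In the example code the pooling window is 2x2 and the stride is also 2x2, so pooling halves the height and width of the tensor, i.e. it downsamples. Convolution can also downsample (with a stride greater than 1), but it is common to keep the size unchanged in the convolutional layers and to downsample in the pooling layers.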
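The 2x2, stride-2 max pooling performed by max_pool_2x2 can likewise be sketched in NumPy. The sketch assumes a floating-point NHWC array, and `max_pool_2x2_np` is a hypothetical name chosen to avoid clashing with the helper above. For an odd size such as 7, SAME padding adds one row/column at the bottom/right, and padded cells must never win the max, which is why the sketch pads with -inf.

```python
import numpy as np

def max_pool_2x2_np(x):
    """2x2 max pooling, stride 2, SAME padding, on a float NHWC array."""
    n, h, w, c = x.shape
    out_h, out_w = -(-h // 2), -(-w // 2)  # ceil(h / 2), ceil(w / 2)
    # Pad bottom/right up to an even size; -inf never wins the max.
    xp = np.pad(x, ((0, 0), (0, 2 * out_h - h), (0, 2 * out_w - w), (0, 0)),
                constant_values=-np.inf)
    # Split each spatial axis into (out, 2) blocks and take the max of each block.
    return xp.reshape(n, out_h, 2, out_w, 2, c).max(axis=(2, 4))

x = np.arange(16, dtype=float).reshape(1, 4, 4, 1)
print(max_pool_2x2_np(x)[0, :, :, 0])  # block maxima: 5, 7, 13, 15
```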

Counting the parameters

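- The kernel of conv layer 1 has shape [5, 5, 1, 32], so it has 5x5x1x32 = 800 weights plus 32 biases, i.e. 832 trainable parameters.

- The two pooling layers have no trainable parameters; their settings (window size, stride) are fixed.

- The kernel of conv layer 2 has shape [5, 5, 32, 64], so it has 5x5x32x64 = 51200 weights plus 64 biases, i.e. 51264 trainable parameters.

- FC1 has a weight of shape [7 * 7 * 64, 1024] and a bias of shape 1024, so its trainable parameters number 7x7x64x1024+1024 = 3212288, more than 3.2 million, which is remarkable.

- The last layer, FC2, has a weight of shape [1024, 10] and a bias of shape 10, so its trainable parameters number 1024x10+10 = 10250.

- Like the pooling layers, dropout has no trainable parameters.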

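Compared with FC1's 3.2 million+ parameters, the other layers are negligible, so the fully connected layer accounts for almost all of the model's parameters and storage. At 8 bytes per value these parameters take about 25 MB; the code actually stores them as float32 (4 bytes per value), which is about 12.8 MB.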

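Not all of the blame lies with the fully connected layer, though: the real culprit is that the preceding convolutional layer outputs so many feature maps (64), which makes FC1's input 7 * 7 * 64 = 3136 values wide.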
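The counts above and the storage estimate can be reproduced with a short script. It is a sketch: the helper names are hypothetical, and it additionally counts multiply-accumulate operations (MACs) per image, assuming the stride-1 SAME convolutions and layer sizes used in this post. The MAC column shows that parameter count and computation are different things: conv2 has far fewer parameters than FC1 but does roughly three times as many multiply-adds.

```python
def conv_layer(k, c_in, c_out, out_hw):
    """(trainable params, MACs per image) for a k x k conv with SAME padding."""
    params = k * k * c_in * c_out + c_out            # weights + biases
    macs = out_hw * out_hw * c_out * (k * k * c_in)  # one k*k*c_in dot product per output
    return params, macs

def fc_layer(n_in, n_out):
    """(trainable params, MACs per image) for a fully connected layer."""
    return n_in * n_out + n_out, n_in * n_out

layers = {
    "conv1": conv_layer(5, 1, 32, 28),
    "conv2": conv_layer(5, 32, 64, 14),
    "fc1": fc_layer(7 * 7 * 64, 1024),
    "fc2": fc_layer(1024, 10),
}
for name, (params, macs) in layers.items():
    print(f"{name}: {params:,} params, {macs:,} MACs")

fc1_params = layers["fc1"][0]
print(f"FC1 as float32: {fc1_params * 4 / 1e6:.1f} MB")  # 4 bytes per value
print(f"FC1 as float64: {fc1_params * 8 / 1e6:.1f} MB")  # 8 bytes per value
```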

Code walkthrough

Loss function

```python
# Import data
mnist = input_data.read_data_sets(FLAGS.data_dir)

# ......

with tf.name_scope('loss'):
    cross_entropy = tf.losses.sparse_softmax_cross_entropy(
        labels=y_, logits=y_conv)
cross_entropy = tf.reduce_mean(cross_entropy)
```

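Because the data is loaded without the one_hot argument, the labels come back as integer class indices rather than one-hot vectors. In that case the softmax cross-entropy must be computed with the sparse version of the function, sparse_softmax_cross_entropy. Note that tf.losses.sparse_softmax_cross_entropy already returns a scalar averaged over the batch by default, so the subsequent tf.reduce_mean leaves the value unchanged.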
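For intuition, the sparse and one-hot (dense) forms of softmax cross-entropy give the same value; the sparse form simply indexes the true class instead of multiplying by a one-hot vector. A NumPy sketch with hypothetical function names (this is not TensorFlow's implementation):

```python
import numpy as np

def log_softmax(logits):
    # Subtract the row max before exponentiating for numerical stability.
    shifted = logits - logits.max(axis=1, keepdims=True)
    return shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))

def sparse_xent(labels, logits):
    """Mean cross-entropy with integer class labels (what the post's data gives)."""
    return -log_softmax(logits)[np.arange(len(labels)), labels].mean()

def dense_xent(one_hot, logits):
    """Mean cross-entropy with one-hot labels (what one_hot=True would give)."""
    return -(one_hot * log_softmax(logits)).sum(axis=1).mean()

logits = np.array([[2.0, 0.5, -1.0], [0.1, 0.2, 3.0]])
labels = np.array([0, 2])
print(sparse_xent(labels, logits), dense_xent(np.eye(3)[labels], logits))  # equal
```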

Weights and biases

```python
def weight_variable(shape):
    """weight_variable generates a weight variable of a given shape."""
    initial = tf.truncated_normal(shape, stddev=0.1)
    return tf.Variable(initial)


def bias_variable(shape):
    """bias_variable generates a bias variable of a given shape."""
    initial = tf.constant(0.1, shape=shape)
    return tf.Variable(initial)
```

The weights are initialized with the truncated normal distribution function truncated_normal, and the biases with the constant 0.1.
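The TensorFlow documentation describes truncated_normal as sampling from a normal distribution and re-drawing any value more than two standard deviations from the mean. Below is a NumPy sketch of that documented behavior; `truncated_normal_np` is a hypothetical name, and the rejection loop mirrors the description, not necessarily TensorFlow's internal algorithm.

```python
import numpy as np

def truncated_normal_np(shape, mean=0.0, stddev=0.1, rng=None):
    """Normal samples, re-drawing any value more than 2 stddev from the mean."""
    rng = np.random.default_rng() if rng is None else rng
    values = rng.normal(mean, stddev, size=shape)
    bad = np.abs(values - mean) > 2 * stddev
    while bad.any():
        values[bad] = rng.normal(mean, stddev, size=int(bad.sum()))
        bad = np.abs(values - mean) > 2 * stddev
    return values

# Same shapes and settings as weight_variable / bias_variable for conv1.
w = truncated_normal_np([5, 5, 1, 32], stddev=0.1, rng=np.random.default_rng(0))
b = np.full([32], 0.1)
print(w.min(), w.max())  # both within [-0.2, 0.2]
```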

Two reshapes

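Each MNIST sample is a flat 1-D vector (784 values), so before entering the convolution it must be reshaped into a 2-D image; with the batch and channel dimensions added, the tensor becomes 4-D, [-1, 28, 28, 1].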

```python
with tf.name_scope('reshape'):
    x_image = tf.reshape(x, [-1, 28, 28, 1])
```

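The tensor coming out of the second convolutional block (after pool2) has to be reshaped back to 1-D per sample, because a fully connected layer follows.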

```python
with tf.name_scope('fc1'):
    W_fc1 = weight_variable([7 * 7 * 64, 1024])
    b_fc1 = bias_variable([1024])

    h_pool2_flat = tf.reshape(h_pool2, [-1, 7 * 7 * 64])
    h_fc1 = tf.nn.relu(tf.matmul(h_pool2_flat, W_fc1) + b_fc1)
```
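Both reshapes use row-major order, so flattening and unflattening are exact inverses and each pixel keeps its position. A NumPy sketch mirroring the shapes (the arrays are stand-ins, not real MNIST data or real pool2 output):

```python
import numpy as np

batch = np.arange(50 * 784, dtype=np.float32).reshape(50, 784)  # 50 flat "images"
x_image = batch.reshape(-1, 28, 28, 1)  # NHWC: (50, 28, 28, 1)
# Pixel (row 1, col 0) of image 0 is element 28 of its flat vector.
assert x_image[0, 1, 0, 0] == batch[0, 28]

h_pool2 = np.zeros((50, 7, 7, 64), dtype=np.float32)  # stand-in for pool2 output
h_pool2_flat = h_pool2.reshape(-1, 7 * 7 * 64)        # (50, 3136), ready for FC1
print(x_image.shape, h_pool2_flat.shape)
```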

Test results

| Dropout keep probability | Training steps | Accuracy |
|---|---|---|
| 0.5 | 2000 | 0.974 |
| 0.5 | 10000 | 0.9905 |
| 0.7 | 2000 | 0.9775 |
| 0.7 | 5000 | 0.9883 |
| 0.7 | 10000 | 0.99 |
| 0.8 | 2000 | 0.9797 |

These are the results for the original network.
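Note that keep_prob in the table is the probability of keeping a unit, not of dropping it. In TensorFlow 1.x, tf.nn.dropout keeps each element with probability keep_prob and scales the kept elements by 1 / keep_prob so the expected value is unchanged, which is why the evaluation code can simply feed keep_prob = 1.0. A NumPy sketch of this "inverted dropout" (`dropout_np` is a hypothetical name):

```python
import numpy as np

def dropout_np(x, keep_prob, rng):
    """Keep each element with probability keep_prob; scale kept ones by 1/keep_prob."""
    if keep_prob == 1.0:
        return x  # evaluation: nothing is dropped
    mask = rng.random(x.shape) < keep_prob
    return np.where(mask, x / keep_prob, 0.0)

rng = np.random.default_rng(0)
h = np.ones(100_000)
out = dropout_np(h, 0.7, rng)
print((out > 0).mean(), out.mean())  # roughly 0.7 and roughly 1.0
```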

I also tried reducing the number of filters in conv layer 2 to 16, 8, 4, and 1; the resulting accuracies were 0.9909, 0.989, 0.9868, and 0.973, respectively.

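In other words, cutting FC1's parameters from over 3.2 million to about 50,000 does not change the accuracy by anything like an order of magnitude. For the MNIST dataset, this network is clearly redundant. By tuning the hyperparameters of each layer, it should be possible to find a combination that gives the best and fastest result.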
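The parameter savings from shrinking conv2 can be checked by recomputing the counts as a function of conv2's filter count, assuming every other layer stays as in the original network (`total_params` is a hypothetical helper):

```python
def total_params(k2):
    """Trainable parameters when conv2 has k2 filters; returns (total, fc1)."""
    conv1 = 5 * 5 * 1 * 32 + 32
    conv2 = 5 * 5 * 32 * k2 + k2
    fc1 = 7 * 7 * k2 * 1024 + 1024  # FC1's input is the flattened 7x7xk2 map
    fc2 = 1024 * 10 + 10
    return conv1 + conv2 + fc1 + fc2, fc1

for k2 in (64, 16, 8, 4, 1):
    total, fc1 = total_params(k2)
    print(f"conv2 filters={k2:2d}: FC1 {fc1:,} params, total {total:,}")
```

With a single conv2 filter, FC1 drops to 51,200 parameters, which is the "about 50,000" figure above.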

Effect of each hyperparameter

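The reference "Design of a Simple Convolutional Neural Network for MNIST" (《用于MNIST的简单卷积神经网络的设计》) summarizes the effect of each hyperparameter. Its main points are: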

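- The more convolution filters, the faster the convergence and the higher the final accuracy.

- Among activation functions, relu6 and relu both work well in the convolutional layers, while softmax is the worst. For the fully connected layer, elu is the best. It appears that a fully connected activation without a negative part does not work very well; elu is more balanced.

- Different learning rates make the loss fall at different speeds and reach different accuracies. Both too large and too small are bad; a larger learning rate makes the loss fall faster.

- The variance of the weight initialization has an effect, but not a large one.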
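The activation functions compared above are simple element-wise functions. NumPy sketches follow; elu uses alpha = 1, which is the form tf.nn.elu computes. The function names mirror the TensorFlow ops but these are standalone re-implementations.

```python
import numpy as np

def relu(x):
    return np.maximum(x, 0.0)

def relu6(x):
    # Like relu, but also capped at 6.
    return np.minimum(np.maximum(x, 0.0), 6.0)

def elu(x, alpha=1.0):
    # Negative inputs map smoothly to alpha * (exp(x) - 1) instead of 0.
    return np.where(x > 0, x, alpha * (np.exp(np.minimum(x, 0.0)) - 1.0))

x = np.array([-2.0, -0.5, 0.0, 3.0, 8.0])
print(relu(x), relu6(x), elu(x), sep="\n")
```

Unlike relu and relu6, elu keeps a (bounded) negative output, which is the "negative part" the list above refers to.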

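These conclusions were drawn from that author's own code, which differs slightly from the code in this post, but they are still useful as a reference.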

Code

```python
"""A deep MNIST classifier using convolutional layers.

See extensive documentation at
https://www.tensorflow.org/get_started/mnist/pros
"""
# Disable linter warnings to maintain consistency with tutorial.
# pylint: disable=invalid-name
# pylint: disable=g-bad-import-order

# Written for TensorFlow 1.x: tf.placeholder, tf.Session and
# tensorflow.examples.tutorials are not available unmodified in TensorFlow 2.x.

from __future__ import absolute_import
from __future__ import division
from __future__ import print_function

import argparse
import sys
import tempfile

from tensorflow.examples.tutorials.mnist import input_data

import tensorflow as tf

FLAGS = None


def deepnn(x):
    """deepnn builds the graph for a deep net for classifying digits.

    Args:
        x: an input tensor with the dimensions (N_examples, 784), where 784 is the
        number of pixels in a standard MNIST image.

    Returns:
        A tuple (y, keep_prob). y is a tensor of shape (N_examples, 10), with values
        equal to the logits of classifying the digit into one of 10 classes (the
        digits 0-9). keep_prob is a scalar placeholder for the probability of
        dropout.
    """
    # Reshape to use within a convolutional neural net.
    # Last dimension is for "features" - there is only one here, since images are
    # grayscale -- it would be 3 for an RGB image, 4 for RGBA, etc.
    with tf.name_scope('reshape'):
        x_image = tf.reshape(x, [-1, 28, 28, 1])

    # First convolutional layer - maps one grayscale image to 32 feature maps.
    with tf.name_scope('conv1'):
        W_conv1 = weight_variable([5, 5, 1, 32])
        b_conv1 = bias_variable([32])
        h_conv1 = tf.nn.relu(conv2d(x_image, W_conv1) + b_conv1)

    # Pooling layer - downsamples by 2X.
    with tf.name_scope('pool1'):
        h_pool1 = max_pool_2x2(h_conv1)

    # Second convolutional layer -- maps 32 feature maps to 64.
    with tf.name_scope('conv2'):
        W_conv2 = weight_variable([5, 5, 32, 64])
        b_conv2 = bias_variable([64])
        h_conv2 = tf.nn.relu(conv2d(h_pool1, W_conv2) + b_conv2)

    # Second pooling layer.
    with tf.name_scope('pool2'):
        h_pool2 = max_pool_2x2(h_conv2)

    # Fully connected layer 1 -- after 2 round of downsampling, our 28x28 image
    # is down to 7x7x64 feature maps -- maps this to 1024 features.
    with tf.name_scope('fc1'):
        W_fc1 = weight_variable([7 * 7 * 64, 1024])
        b_fc1 = bias_variable([1024])

        h_pool2_flat = tf.reshape(h_pool2, [-1, 7 * 7 * 64])
        h_fc1 = tf.nn.relu(tf.matmul(h_pool2_flat, W_fc1) + b_fc1)

    # Dropout - controls the complexity of the model, prevents co-adaptation of
    # features.
    with tf.name_scope('dropout'):
        keep_prob = tf.placeholder(tf.float32)
        h_fc1_drop = tf.nn.dropout(h_fc1, keep_prob)

    # Map the 1024 features to 10 classes, one for each digit
    with tf.name_scope('fc2'):
        W_fc2 = weight_variable([1024, 10])
        b_fc2 = bias_variable([10])

        y_conv = tf.matmul(h_fc1_drop, W_fc2) + b_fc2
    return y_conv, keep_prob


def conv2d(x, W):
    """conv2d returns a 2d convolution layer with full stride."""
    return tf.nn.conv2d(x, W, strides=[1, 1, 1, 1], padding='SAME')


def max_pool_2x2(x):
    """max_pool_2x2 downsamples a feature map by 2X."""
    return tf.nn.max_pool(x, ksize=[1, 2, 2, 1],
                          strides=[1, 2, 2, 1], padding='SAME')


def weight_variable(shape):
    """weight_variable generates a weight variable of a given shape."""
    initial = tf.truncated_normal(shape, stddev=0.1)
    return tf.Variable(initial)


def bias_variable(shape):
    """bias_variable generates a bias variable of a given shape."""
    initial = tf.constant(0.1, shape=shape)
    return tf.Variable(initial)


def main(_):
    # Import data
    mnist = input_data.read_data_sets(FLAGS.data_dir)

    # Create the model
    x = tf.placeholder(tf.float32, [None, 784])

    # Define loss and optimizer
    y_ = tf.placeholder(tf.int64, [None])

    # Build the graph for the deep net
    y_conv, keep_prob = deepnn(x)

    with tf.name_scope('loss'):
        cross_entropy = tf.losses.sparse_softmax_cross_entropy(
            labels=y_, logits=y_conv)
    cross_entropy = tf.reduce_mean(cross_entropy)

    with tf.name_scope('adam_optimizer'):
        train_step = tf.train.AdamOptimizer(1e-4).minimize(cross_entropy)

    with tf.name_scope('accuracy'):
        correct_prediction = tf.equal(tf.argmax(y_conv, 1), y_)
        correct_prediction = tf.cast(correct_prediction, tf.float32)
    accuracy = tf.reduce_mean(correct_prediction)

    graph_location = tempfile.mkdtemp()
    print('Saving graph to: %s' % graph_location)
    train_writer = tf.summary.FileWriter(graph_location)
    train_writer.add_graph(tf.get_default_graph())

    with tf.Session() as sess:
        sess.run(tf.global_variables_initializer())
        for i in range(2000):
            batch = mnist.train.next_batch(50)
            if i % 100 == 0:
                train_accuracy = accuracy.eval(feed_dict={
                    x: batch[0], y_: batch[1], keep_prob: 1.0})
                print('step %d, training accuracy %g' % (i, train_accuracy))
            train_step.run(feed_dict={x: batch[0], y_: batch[1], keep_prob: 0.7})

        print('test accuracy %g' % accuracy.eval(feed_dict={
            x: mnist.test.images, y_: mnist.test.labels, keep_prob: 1.0}))


if __name__ == '__main__':
    parser = argparse.ArgumentParser()
    parser.add_argument('--data_dir', type=str,
                        default='./data',
                        help='Directory for storing input data')
    FLAGS, unparsed = parser.parse_known_args()
    tf.app.run(main=main, argv=[sys.argv[0]] + unparsed)
```

References

Official tutorial: Deep MNIST (convolutional neural networks)